As artificial intelligence rapidly integrates into development workflows, a concerning trend is emerging: basic security principles are being sacrificed in the rush to implement AI capabilities. A recent critical vulnerability discovered in Langflow—a low-code tool for AI workflows—demonstrates how even fundamental security practices like preventing arbitrary code execution can be overlooked in AI-focused development.

The Langflow Vulnerability: A Case Study in AI Security Failures

Langflow, designed to help developers build AI agents and workflows, recently experienced a serious security incident that highlights the dangers of neglecting core security principles. The vulnerability, tracked as CVE-2025-3248 and reported by security researchers at Horizon3.ai, involved an endpoint that allowed attackers to execute arbitrary code directly on the server: a textbook example of a remote code execution (RCE) vulnerability.

The specific issue resided in the `/api/v1/validate/code` endpoint, which was designed to validate user-submitted code. However, in vulnerable versions, this endpoint failed to properly sandbox or sanitize input, allowing attackers to send malicious code that would execute directly on the server with the privileges of the Langflow process.

How the Vulnerability Worked: Python Decorators as Attack Vectors

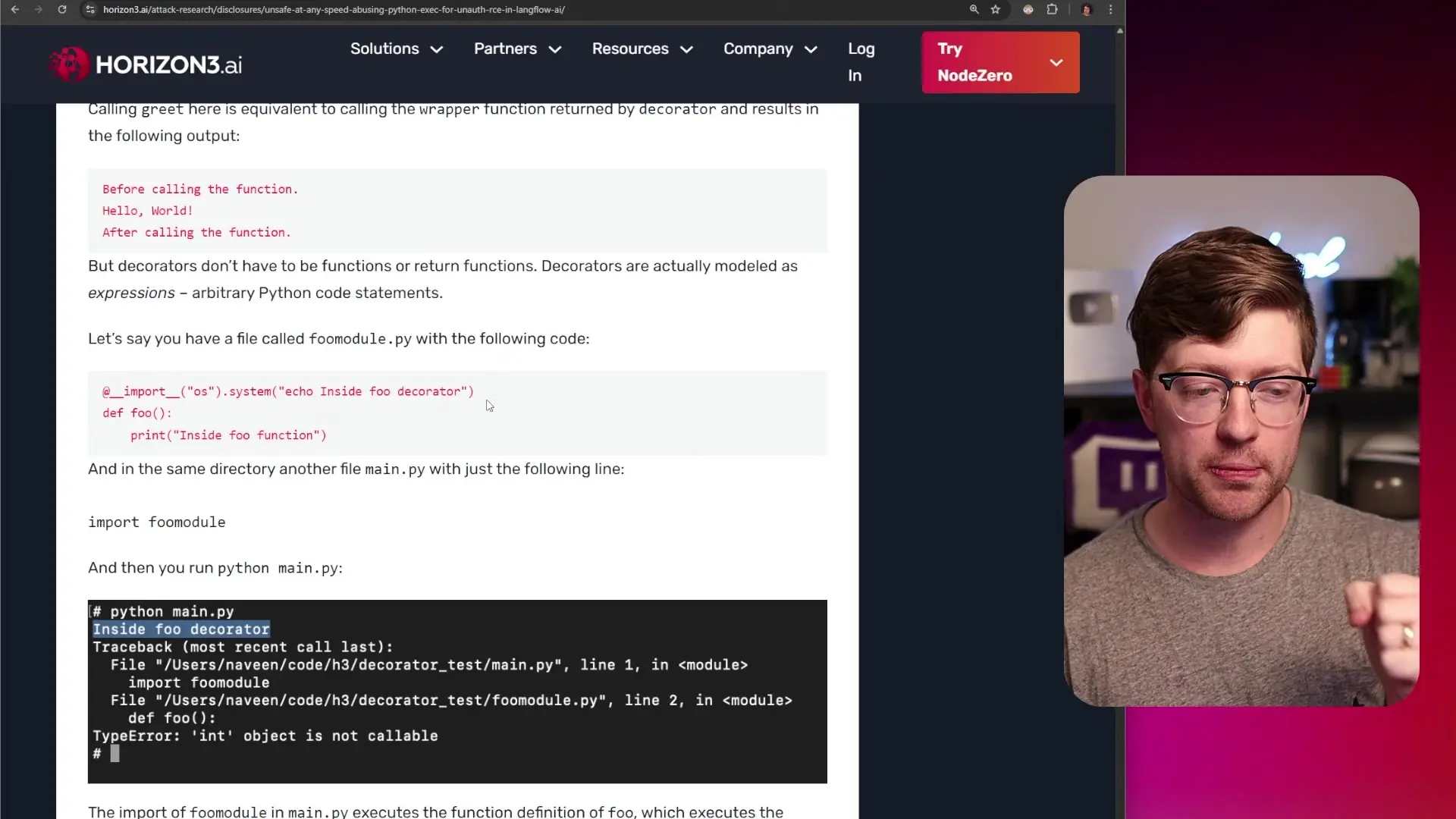

The technical details of this vulnerability reveal how seemingly innocent code validation features can become dangerous attack vectors. The vulnerability stemmed from Langflow's approach to validating Python code submitted by users.

The validation process attempted to ensure submitted code adhered to the required API by parsing the abstract syntax tree (AST) of the code, checking import statements, and executing function definitions. However, the developers overlooked a critical aspect of Python's execution model: when evaluating function definitions, any decorators attached to those functions are executed immediately—even if the function itself is never called.

    # Simplified example of how the vulnerability could be exploited

    def malicious_decorator(func):
        # This code executes during function definition evaluation
        import os
        os.system('malicious command here')
        return func

    @malicious_decorator
    def innocent_looking_function():
        pass

    # Even without calling innocent_looking_function(),
    # the malicious_decorator code has already executed!

This oversight allowed attackers to submit code with malicious decorators that ran during the validation process, which the researchers used to obtain a reverse shell on the server. Although the process did not run as the root user, the researchers found that it ran with the root group ID, which significantly increased the potential damage.
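To make the mechanism concrete, here is a minimal, harmless sketch of a validator that executes function definitions after parsing them. It is not Langflow's code: `naive_validate`, the `executed` list, and the payload are hypothetical names invented for illustration. The payload only appends strings to a list, and it also shows that default argument expressions are evaluated at definition time, just like decorators.

```python
import ast

# Records side effects so the demonstration stays harmless.
executed = []


def naive_validate(source: str) -> None:
    """Hypothetical validator that 'checks' functions by executing their definitions."""
    tree = ast.parse(source)
    # `executed` is exposed to the submitted code only so that the payload
    # can leave a visible, benign trace of having run.
    namespace = {"executed": executed}
    for node in tree.body:
        if isinstance(node, ast.FunctionDef):
            module = ast.Module(body=[node], type_ignores=[])
            # Executing a `def` statement runs its decorators and default
            # argument expressions, even though the function is never called.
            exec(compile(module, "<submitted>", "exec"), namespace)


payload = '''
def tracer(func):
    executed.append("decorator ran")
    return func

@tracer
def innocent_looking_function(arg=executed.append("default ran")):
    pass
'''

naive_validate(payload)
print(executed)
```

After `naive_validate` returns, `executed` contains both entries even though `innocent_looking_function` was never called. A real attacker would replace the `append` calls with arbitrary commands.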

Why This Matters: The Broader Implications for AI Security

This incident is not an isolated security failure; it reflects a broader trend in AI development. Research on code-generating AI has found that generated code can contain security vulnerabilities that go undetected without proper review processes. The Langflow case illustrates several critical lessons for AI security:

- Basic security principles like input sanitization and sandboxing remain essential regardless of how advanced the AI components are

- The complexity of AI systems can obscure traditional security vulnerabilities

- Rushing to implement AI features without thorough security review creates significant risks

- Code that interacts with user-submitted content requires extra scrutiny, even in AI contexts
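One way to apply that extra scrutiny is to inspect submitted code without ever executing it. The following is a minimal sketch using Python's standard `ast` module; `inspect_without_executing` and the shape of its report are assumptions made for illustration, not part of Langflow or any other tool.

```python
import ast


def inspect_without_executing(source: str) -> dict:
    """Parse submitted code and describe it; nothing is compiled or executed."""
    tree = ast.parse(source)  # raises SyntaxError on invalid code
    report = {"functions": [], "imports": [], "definition_time_code": []}
    for node in ast.walk(tree):
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef)):
            report["functions"].append(node.name)
            # Decorators and default values would run as soon as the
            # definition is executed, so flag them for review.
            if node.decorator_list:
                report["definition_time_code"].append(f"{node.name}: decorator")
            has_kw_defaults = any(d is not None for d in node.args.kw_defaults)
            if node.args.defaults or has_kw_defaults:
                report["definition_time_code"].append(f"{node.name}: default argument")
        elif isinstance(node, ast.Import):
            report["imports"].extend(alias.name for alias in node.names)
        elif isinstance(node, ast.ImportFrom):
            report["imports"].append(node.module or "")
    return report


report = inspect_without_executing("import os\n@dec\ndef f(x=1):\n    pass\n")
print(report)
```

Static inspection like this only reports what the code contains; it is not a security boundary by itself. The `ast` documentation also warns that a sufficiently large or complex input can crash the interpreter during parsing, so the parser should not be treated as safe against hostile input either.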

The U.S. Cybersecurity and Infrastructure Security Agency (CISA) added this vulnerability to its Known Exploited Vulnerabilities (KEV) catalog, indicating that attackers were actively exploiting it in the wild. This elevation from theoretical risk to active threat underscores the real-world consequences of such security oversights.

Best Practices for Secure AI Development

To prevent similar vulnerabilities in AI-driven applications, developers should implement these essential security practices:

- Never execute untrusted code directly on your servers without proper sandboxing

- Use dedicated execution environments with limited privileges for code validation and execution

- Implement thorough input validation and sanitization for all user-submitted content

- Conduct regular security audits specifically focused on code execution pathways

- Employ the principle of least privilege for all system components

- Maintain a security-first mindset even when implementing cutting-edge AI features
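As a rough sketch of the first two practices, validation can be moved into a separate, short-lived interpreter process that only parses the submitted code. The helper name `validate_in_child`, the `CHECKER` script, and the five-second timeout are assumptions chosen for illustration. A child process alone is not a sandbox: this sketch assumes the deployment adds real isolation on top, such as an unprivileged user or a container.

```python
import json
import subprocess
import sys

# Runs inside the child process: parse only, then report function names as JSON.
CHECKER = """
import ast, json, sys
tree = ast.parse(sys.stdin.read())
names = [n.name for n in ast.walk(tree) if isinstance(n, ast.FunctionDef)]
print(json.dumps({"functions": names}))
"""


def validate_in_child(source: str, timeout: float = 5.0) -> dict:
    """Hypothetical helper: validate submitted code in a separate interpreter."""
    try:
        result = subprocess.run(
            # -I runs Python in isolated mode: PYTHON* environment variables
            # and the user site-packages directory are ignored.
            [sys.executable, "-I", "-c", CHECKER],
            input=source,
            capture_output=True,
            text=True,
            timeout=timeout,
        )
    except subprocess.TimeoutExpired:
        return {"ok": False, "error": "timeout"}
    if result.returncode != 0:
        # Do not echo the child's traceback back to the submitter.
        return {"ok": False, "error": "rejected"}
    return {"ok": True, **json.loads(result.stdout)}


print(validate_in_child("@dec\ndef f():\n    pass\n"))
print(validate_in_child("def broken(:"))
```

Because the child only parses, the decorator in the first call is never evaluated, and a parser crash or hang affects the child rather than the server process.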

The Role of Knowledge in AI Security

A critical factor in maintaining security in AI development is having knowledgeable professionals who understand both the AI components and fundamental security principles. Just as one wouldn't want an untrained person performing surgery guided only by AI instructions, we shouldn't rely on AI to generate secure code without proper human oversight and validation.

Developers working with AI systems need strong fundamentals in computer science and security principles to effectively identify potential vulnerabilities that AI tools might introduce or overlook. This human expertise becomes even more crucial as AI systems grow more complex and powerful.

Conclusion: Balancing Innovation with Security

The Langflow vulnerability serves as a sobering reminder that in the excitement to implement AI capabilities, we cannot afford to neglect basic security practices. As AI security vulnerabilities continue to emerge, the development community must find a balance between rapid innovation and thorough security implementation.

Fortunately, Langflow addressed this vulnerability in version 1.3.0, which requires authentication on the affected endpoint, so users running earlier versions should upgrade. However, the incident highlights how AI code security must remain a priority even as we push the boundaries of what's possible with artificial intelligence.

By maintaining strong security fundamentals while embracing AI innovation, developers can create systems that are both cutting-edge and resilient against attacks. In the rapidly evolving landscape of AI development, this security-conscious approach isn't just best practice—it's essential.
