The cybersecurity community is facing a new and concerning challenge: AI-generated security reports that claim to identify HTTP/3 vulnerabilities but are completely fabricated. This emerging trend threatens to overwhelm security researchers with false reports and potentially allows real vulnerabilities to slip through the cracks. A recent incident involving a purported HTTP/3 stream dependency cycle exploit highlights the severity of this problem and its implications for the entire security ecosystem.

The Anatomy of a Fake HTTP/3 Vulnerability Report

Recently on HackerOne, a popular bug bounty platform, a researcher using the handle "Evil Gen X" submitted what appeared to be a legitimate security vulnerability report. The submission claimed to have discovered a "novel exploit leveraging stream dependency cycles in HTTP/3 resulting in memory corruption and potential denial of service" in curl, the widely used command-line tool and library (libcurl) for transferring data over HTTP and other protocols.
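The report's headline claim rests on the idea of a cycle in a stream dependency graph. For reference, HTTP/2's original priority scheme (RFC 7540) let each stream name a parent stream and forbade a stream from depending on itself. HTTP/3 (RFC 9114) dropped that dependency tree entirely in favor of the simpler Extensible Priorities scheme (RFC 9218). The sketch below is a hypothetical illustration, not code from curl, ngtcp2, or nghttp3, and the name `would_create_cycle` is invented for this example. It models HTTP/2-style dependencies as a child-to-parent map and checks whether naively applying a reprioritization would close a loop, which is the situation a correct implementation has to handle.

```python
from typing import Dict, Set


def would_create_cycle(parents: Dict[int, int], stream_id: int, new_parent: int) -> bool:
    """Return True if making `stream_id` depend on `new_parent` would form a cycle.

    `parents` is a hypothetical child -> parent map in the style of HTTP/2's
    (RFC 7540) dependency tree; stream 0 is the root, and any stream missing
    from the map is treated as depending on the root.
    """
    if stream_id == new_parent:
        # RFC 7540 forbids a stream from depending on itself.
        return True
    # Walk upward from the proposed parent toward the root. If the walk
    # reaches stream_id, the new edge would close a loop.
    current = new_parent
    seen: Set[int] = set()  # guards against an already-corrupt map looping forever
    while current != 0 and current not in seen:
        if current == stream_id:
            return True
        seen.add(current)
        current = parents.get(current, 0)
    return False
```

For example, with `parents = {3: 0, 5: 3, 7: 5}` (stream 7 depends on 5, which depends on 3), making stream 3 depend on stream 7 would create a cycle, while making stream 7 depend directly on stream 3 would not.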

At first glance, the report looked detailed and technically sound. It included steps to reproduce the issue using aioquic (a Python implementation of QUIC and HTTP/3), described the environment setup, and even provided crash output showing that register R15 had been overwritten. On 32-bit ARM, R15 is the program counter (the return address is held in R14, the link register), so an attacker who controls it can redirect execution, which is why the report hinted at potential code execution. For many security professionals, details like these would typically indicate a serious and legitimate vulnerability.

The Moment of Truth: Exposing AI-Generated Security Slop

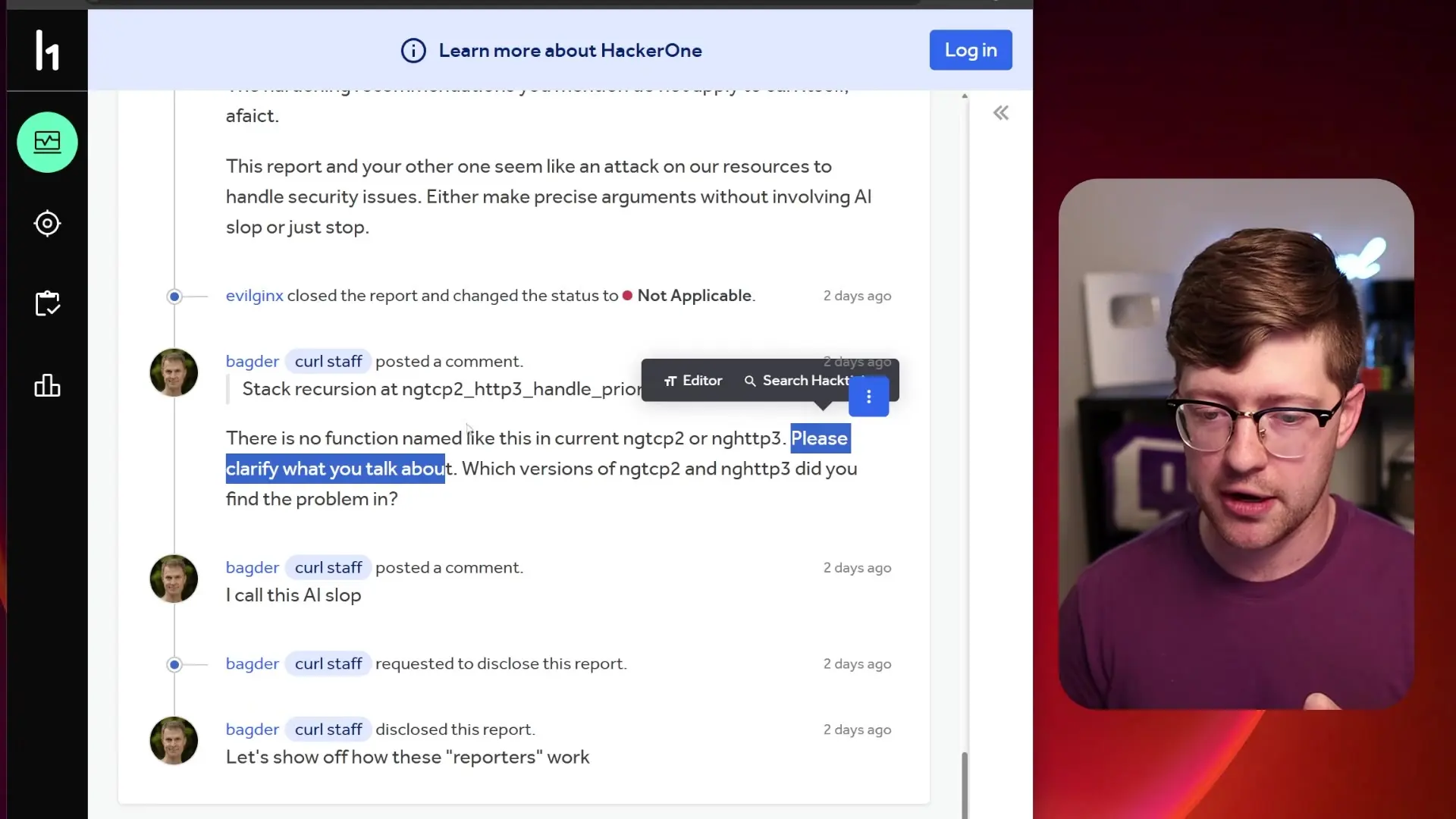

The report began to unravel when reviewers tried to apply the provided patch against aioquic and found inconsistencies. As the exchange in the comments progressed, the submitter's responses became increasingly robotic and generic, raising further suspicion about the report's authenticity.

Daniel Stenberg, the creator and lead maintainer of curl, eventually stepped in and identified the critical flaw in the report: the function that supposedly contained the vulnerability, `ngtcp2_http3_handle_priority_frame`, simply does not exist in ngtcp2 or nghttp3, the QUIC and HTTP/3 libraries that curl's HTTP/3 support builds on. The entire vulnerability report was fabricated, most likely generated by an AI that hallucinated a non-existent function and built a detailed but completely false security issue around it.
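The check that exposed this report is cheap to automate: before investing time in a claimed bug, confirm that the functions it names actually appear in the source. On the command line, `grep -rw` or `git grep -w` does this directly. The Python sketch below is a hypothetical helper (the name `find_identifier` and its parameters are invented for illustration) that scans C sources under a directory for a whole-word match.

```python
import re
from pathlib import Path
from typing import List, Tuple


def find_identifier(root: str, name: str, suffixes: Tuple[str, ...] = (".c", ".h")) -> List[str]:
    """Return paths of source files under `root` that mention `name` as a whole word.

    Hypothetical helper: an empty result means no file with one of the given
    suffixes contains the identifier, so any code reference built on it is
    unverified.
    """
    # \b boundaries stop "foo" from matching "foo_bar" or "my_foo".
    pattern = re.compile(r"\b" + re.escape(name) + r"\b")
    hits = []
    for path in sorted(Path(root).rglob("*")):
        if path.is_file() and path.suffix in suffixes:
            text = path.read_text(encoding="utf-8", errors="replace")
            if pattern.search(text):
                hits.append(str(path))
    return hits
```

Pointed at a checkout of the relevant library with the function name quoted in a report, it returns an empty list when no source file mentions that name. A hit does not prove the report is valid; a miss is simply a strong signal that the report deserves skepticism.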

Stenberg bluntly labeled the submission "AI slop" and closed the ticket, highlighting a growing problem in the security community.

The Security Implications of AI-Generated Vulnerability Reports

This incident represents more than just a nuisance; it amounts to a potential denial-of-service attack against the security community itself. Security resources, meaning the people responsible for triaging and fixing bugs, do not grow with the number of incoming reports, yet every submission, real or fabricated, costs someone time to evaluate. When AI can generate convincing but false security reports at scale, it creates two dangerous scenarios:

- Resource exhaustion: Security teams spend valuable time investigating non-existent HTTP/3 vulnerabilities instead of addressing real security issues.

- Critical oversight: With resources stretched thin by fake reports, legitimate HTTP/3 security vulnerabilities and other real issues might be overlooked or dismissed as more AI-generated noise.

The economic incentives of bug bounty programs (with rewards up to $9,200 for critical vulnerabilities in curl) further complicate the situation. Researchers may be tempted to use AI to generate and submit large volumes of reports, hoping that some will be accepted and rewarded, even if many are rejected.

New Measures to Combat AI-Generated Security Reports

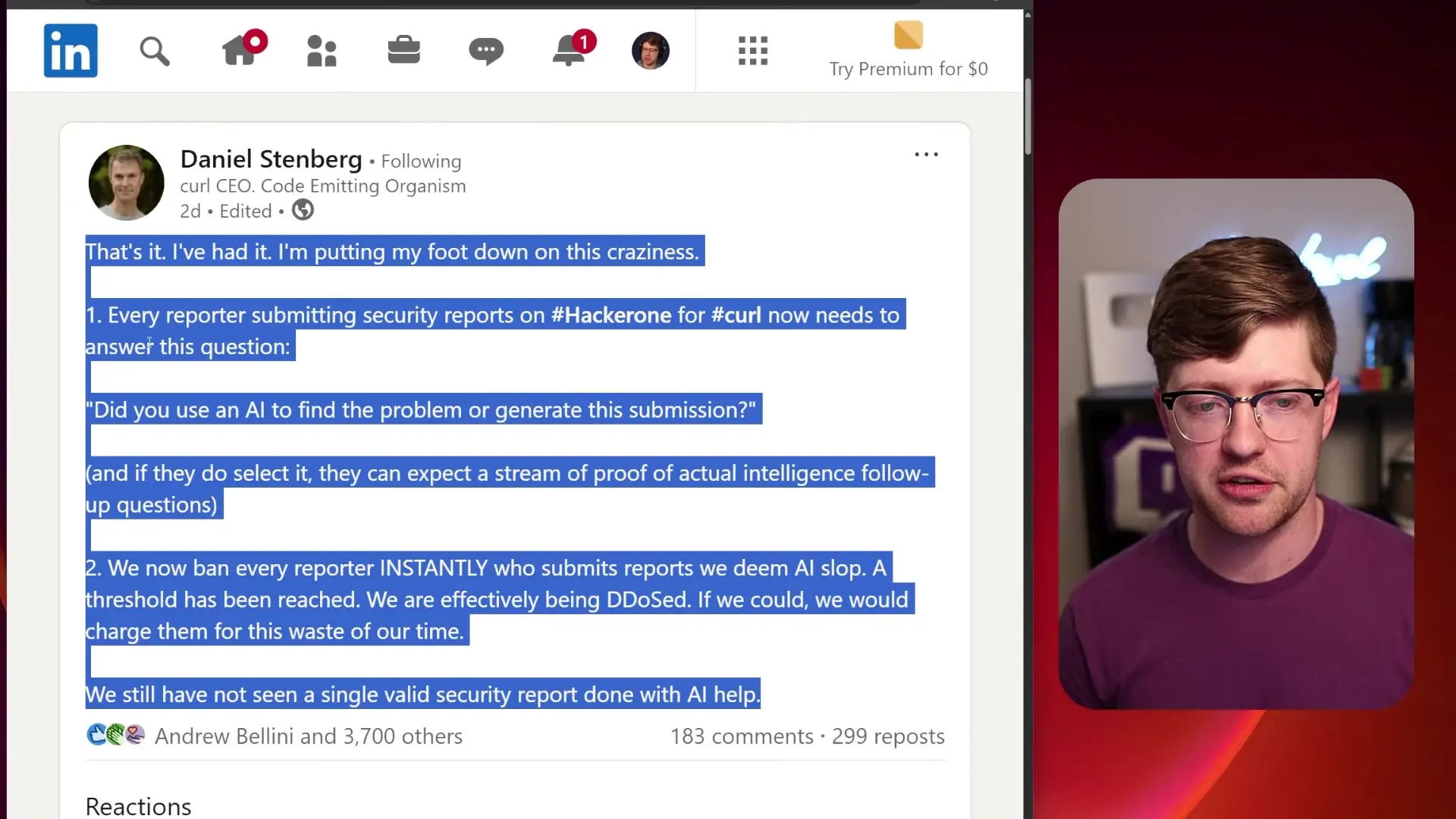

In response to this incident, Daniel Stenberg announced new rules for submitting security reports to curl through HackerOne. These measures aim to filter out AI-generated reports and maintain the integrity of the vulnerability reporting process.

- Researchers must now disclose whether they used AI to find the problem or generate the submission

- Those using AI can expect follow-up questions to verify their understanding of the issue

- Submitters of reports deemed to be "AI slop" will be immediately banned from the program
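The three rules above amount to a small decision procedure for each incoming report. The sketch below models them as a hypothetical intake step; the `Report` fields and `Action` names are invented for illustration and do not correspond to HackerOne's actual report schema or any curl tooling. The announcement does not say what happens to a report that omits the AI disclosure, so this sketch assumes it is sent back to the submitter with a request to answer. Whether a report is "AI slop" remains a human reviewer's judgment; the code only records that decision.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Action(Enum):
    REQUEST_DISCLOSURE = "ask the submitter whether AI was used"  # assumed handling
    FOLLOW_UP = "ask follow-up questions to verify understanding"
    BAN = "close as AI slop and ban the submitter"
    TRIAGE = "proceed with normal triage"


@dataclass
class Report:
    # Hypothetical fields; not HackerOne's real report schema.
    title: str
    ai_disclosed: Optional[bool]  # None means the submitter did not answer
    judged_slop: bool = False     # set by a human reviewer, never automatically


def next_action(report: Report) -> Action:
    """Map a report to the next step under the three rules described above."""
    if report.judged_slop:
        return Action.BAN
    if report.ai_disclosed is None:
        return Action.REQUEST_DISCLOSURE
    if report.ai_disclosed:
        return Action.FOLLOW_UP
    return Action.TRIAGE
```

The ban check comes first so that a reviewer's verdict overrides everything else, while disclosed AI use only triggers extra scrutiny rather than automatic rejection, matching the rules as described.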

Stenberg noted that, to date, the curl project has not received a single valid security report created with AI assistance, suggesting that current AI tools are not yet capable of meaningful security research despite their convincing output.

Potential Malicious Applications of AI-Generated Security Reports

Beyond the resource drain caused by well-intentioned but misguided researchers, there's a more sinister possibility: these fake reports could be part of a coordinated attack strategy. Bad actors might use AI to flood security channels with convincing but false HTTP/3 vulnerability reports as a smokescreen, diverting attention from real exploits they plan to use elsewhere.

By testing the waters with well-formed but fake AI submissions, attackers could gauge how effectively the security community detects fabricated reports. If those probes go unnoticed, they could launch larger campaigns that overwhelm security teams, creating ideal conditions for a real attack to slip through.

The Future of AI in Security Research

Despite the current problems with AI-generated security reports, AI will likely play a legitimate role in security research eventually. AI systems excel at processing large volumes of data and could potentially identify patterns in code that humans might miss. However, we're not there yet - current AI tools seem more adept at generating convincing-sounding technical jargon than at finding actual HTTP/3 vulnerabilities or other security issues.

For now, the security community must remain vigilant against AI-generated false reports while continuing to develop better tools and processes for identifying and addressing real security vulnerabilities in HTTP/3 and other protocols.

Conclusion: Navigating the New Reality of AI in Cybersecurity

The incident with the fake HTTP/3 vulnerability report serves as a warning about the challenges AI brings to cybersecurity. While AI has tremendous potential to enhance security practices in the future, its current implementation in vulnerability research is creating more problems than solutions.

Security professionals must adapt to this new reality by developing better methods to distinguish between legitimate security concerns and AI-generated fabrications. The integrity of vulnerability reporting systems depends on maintaining this distinction, especially as AI capabilities continue to advance.

For researchers and security professionals, the message is clear: human expertise and critical thinking remain essential in cybersecurity, particularly when evaluating potential HTTP/3 vulnerabilities and other complex security issues. No matter how convincing AI-generated reports may seem, there's no substitute for genuine technical understanding and rigorous verification.

