As AI becomes increasingly central to development workflows, the challenge of writing effective prompts has emerged as a critical skill. Microsoft's answer to this challenge is Pummel - an open-source markup language specifically designed to help developers write reliable and maintainable AI prompts. This powerful tool transforms the way we interact with Large Language Models by adding structure, reusability, and advanced features to what would otherwise be plain text prompts.

What Is Pummel and Why Do You Need It?

Pummel is an HTML-like markup language developed by prompt engineering researchers at Microsoft. While you might initially wonder why you'd need a specialized language just to write prompts, Pummel's capabilities quickly demonstrate its value - particularly for complex AI interactions or when working with prompts at scale.

The language allows you to compile prompts into various formats (markdown, JSON, HTML, YAML, or XML) and offers powerful features like external file integration, web content extraction, and templating. These capabilities make it especially valuable for prompt engineering professionals working with Azure OpenAI services or other LLM platforms.
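To make the idea of "compiling" one source into several formats concrete, here is a minimal sketch in plain Python. It does not use Pummel and is not how Pummel is implemented. It parses a small tagged document with the standard library and renders it two ways. The helper names (`parse_sections`, `to_markdown`, `to_json`) and the heading-per-tag layout are assumptions made for illustration.

```python
import json
import xml.etree.ElementTree as ET

# A small tagged prompt, using the same tags as the example later in this article.
SOURCE = """
<pummel>
  <ro>You are a helpful assistant</ro>
  <task>Write a short poem about artificial intelligence</task>
  <hint>Make it optimistic about the future</hint>
</pummel>
"""


def parse_sections(source: str) -> list[tuple[str, str]]:
    """Return (tag, text) pairs for each direct child of the root element."""
    root = ET.fromstring(source.strip())
    return [(child.tag, (child.text or "").strip()) for child in root]


def to_markdown(sections: list[tuple[str, str]]) -> str:
    """Render each section as a heading followed by its text (illustrative layout)."""
    return "\n\n".join(f"# {tag}\n\n{text}" for tag, text in sections)


def to_json(sections: list[tuple[str, str]]) -> str:
    """Render the same sections as a JSON object keyed by tag name."""
    return json.dumps(dict(sections), indent=2)


sections = parse_sections(SOURCE)
print(to_markdown(sections))
print(to_json(sections))
```

The point of the sketch is that the prompt is authored once as structured markup, and the output format becomes a rendering decision made afterwards.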

Getting Started with Pummel

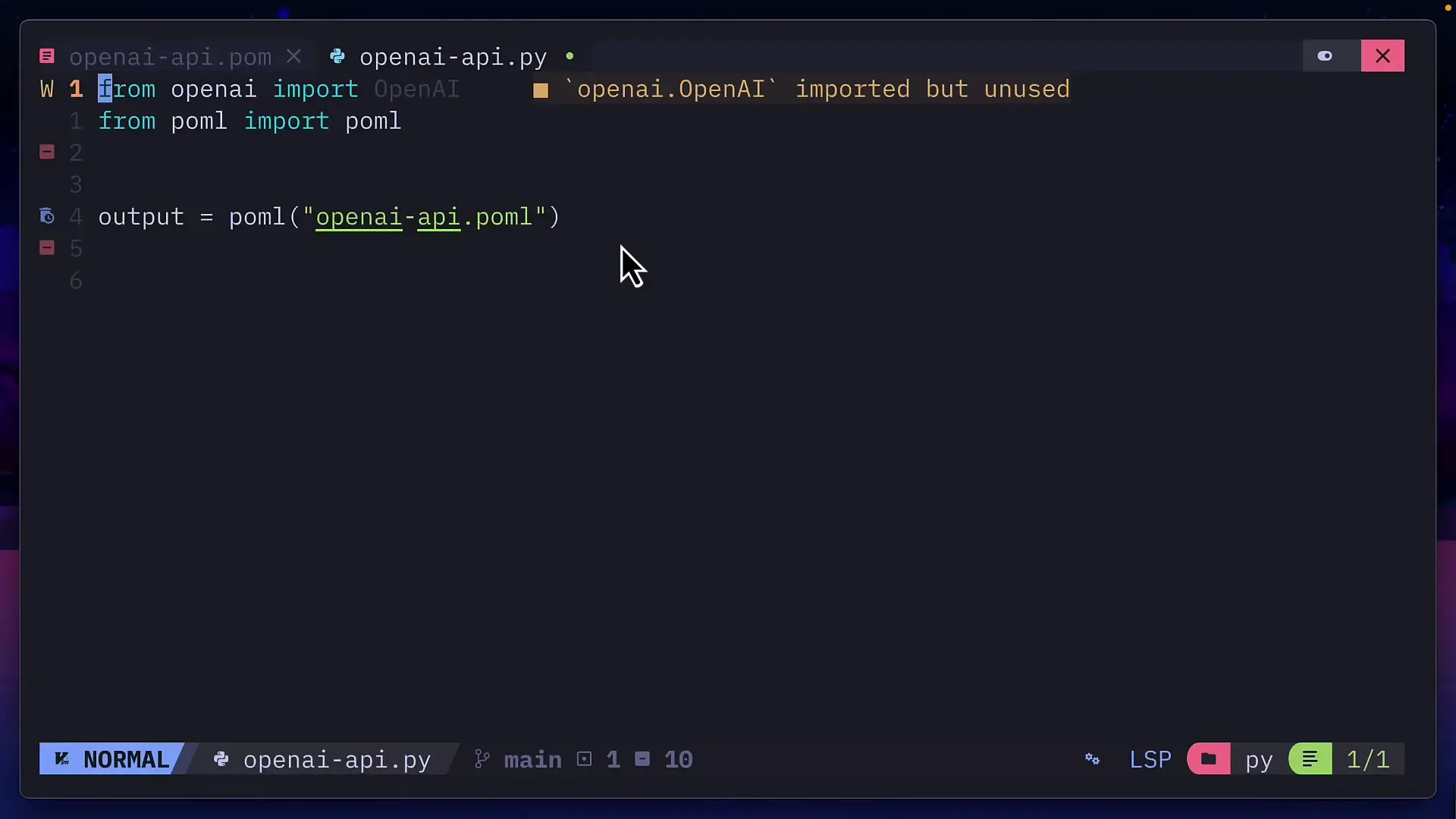

While Pummel does have a TypeScript SDK, it's not yet fully stable. For now, the Python SDK provides the most reliable experience. Here's a simple example of how to use it:

```python
from pummel import Pummel

# Basic usage with a string
prompt = Pummel("<pummel><task>Write a poem about AI</task></pummel>")
output = prompt.to_markdown()
```

You can also load Pummel files directly:

```python
from pummel import Pummel

prompt = Pummel.from_file("my_prompt.pml")
output = prompt.to_markdown()
```

Understanding Pummel Components

Pummel organizes content into "components" - specialized tags that help structure your prompts. These components fall into several categories:

- Basic components, for basic formatting

- Intentional components, including the role component

- Data components, for specialized data handling

A simple Pummel file might look like this:

```xml
<pummel>
  <ro>You are a helpful assistant</ro>
  <task>Write a short poem about artificial intelligence</task>
  <hint>Make it optimistic about the future</hint>
</pummel>
```

Integrating External Data

One of Pummel's most powerful features is its ability to seamlessly incorporate external data into prompts. This capability is particularly valuable for prompt engineering in Microsoft's Azure AI ecosystem, where working with structured data is common.

For example, you can include data from Excel files, Word documents, or even specific sections of websites:

```xml
<pummel>
  <task>Analyze this data and provide insights</task>
  <document src="data.xlsx" limit="5" convert="csv" />
</pummel>
```

This will automatically extract the first 5 records from the Excel file, convert them to CSV format, and include them in your prompt, regardless of whether the final output is in markdown, JSON, or another format.
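The effect of `limit` and `convert="csv"` can be pictured with a short standard-library sketch. This is not Pummel's code: it assumes the spreadsheet rows have already been loaded as a list of dictionaries, and the helper name `records_to_csv` and the sample rows are hypothetical.

```python
import csv
import io


def records_to_csv(records: list[dict], limit: int) -> str:
    """Render the first `limit` records as CSV text with a header row."""
    selected = records[:limit]
    if not selected:
        return ""
    buffer = io.StringIO()
    writer = csv.DictWriter(
        buffer,
        fieldnames=list(selected[0].keys()),
        lineterminator="\n",
    )
    writer.writeheader()
    writer.writerows(selected)
    return buffer.getvalue()


# Stand-in for rows read from a spreadsheet (made-up sample data).
rows = [{"region": f"R{i}", "sales": i * 100} for i in range(1, 9)]

snippet = records_to_csv(rows, limit=5)
prompt_text = f"Analyze this data and provide insights\n\n{snippet}"
print(prompt_text)
```

Truncating before conversion keeps the prompt small: only the records the model actually needs are serialized into the text.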

Pummel allows seamless integration with the OpenAI SDK and supports multiple output formats for your prompts.

Advanced Templating with Pummel

Pummel includes a powerful templating engine that enables dynamic prompt generation. You can create variables, use conditional logic, and even loop through data:

```xml
<pummel>
  <let name="language">Python</let>
  <task>Write a {{language}} function that calculates factorial</task>
  <if condition="language == 'Python'">
    <hint>Use a recursive approach</hint>
  </if>
</pummel>
```

You can also pass data from your Python code into Pummel templates using the context parameter:

```python
from pummel import Pummel

context = {
    "user_name": "Alice",
    "people": [
        {"name": "Bob", "role": "Developer"},
        {"name": "Charlie", "role": "Designer"},
    ],
}

prompt = Pummel.from_file("template.pml", context=context)
output = prompt.to_markdown()
```

Practical Use Case: Generating an Agent.md File

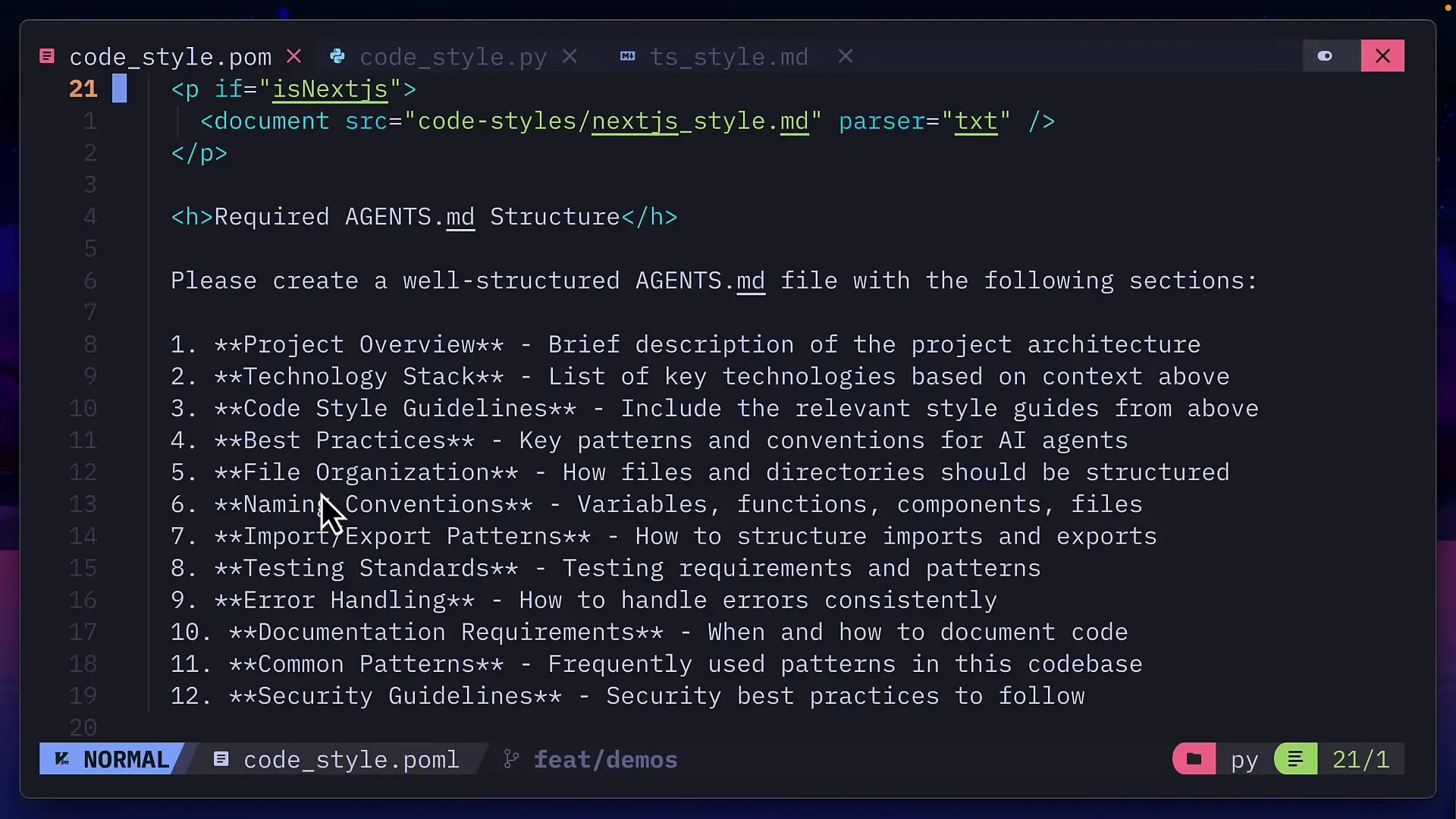

To demonstrate Pummel's practical value for AI prompt engineering, let's look at a real-world example: automatically generating an agent.md file for a development project based on coding style preferences.

Pummel can help create sophisticated prompts that generate customized documentation like agent.md files.

The process involves:

- Creating a Pummel file that defines the structure of the prompt

- Using conditional logic to include different code style documents based on parameters

- Passing the compiled prompt to an LLM (in this case, GPT-5 nano)

- Saving the generated content as an agent.md file

```python
from pummel import Pummel
import openai
import argparse
import os

# Parse command line arguments
parser = argparse.ArgumentParser()
parser.add_argument("--react", action="store_true")
parser.add_argument("--typescript", action="store_true")
args = parser.parse_args()

# Create context for Pummel template
context = {
    "use_react": args.react,
    "use_typescript": args.typescript
}

# Load and compile the Pummel template
prompt = Pummel.from_file("agent_template.pml", context=context)
formatted_prompt = prompt.to_openai_chat()

# Send to OpenAI
response = openai.chat.completions.create(
    model="gpt-5-nano",
    messages=formatted_prompt
)

# Save the response as agent.md
with open("agent.md", "w") as f:
    f.write(response.choices[0].message.content)

print("Created agent.md file")
```

Additional Pummel Features

Pummel offers several other advanced capabilities that make it a comprehensive solution for prompt engineering in Microsoft's AI ecosystem:

- Stylesheets for specifying data formats

- Tool specification for LLM function calling

- Expression evaluation using Zod

- Trace capture for debugging prompts before sending to LLMs
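For the function-calling item in the list above, it helps to know what a tool description looks like on the provider side. The sketch below shows the JSON shape that OpenAI's Chat Completions API accepts in its `tools` parameter. The `get_weather` tool is hypothetical, and how Pummel itself declares tools in markup is not covered here.

```python
import json

# Hypothetical tool, named here only for illustration.
weather_tool = {
    "type": "function",
    "function": {
        "name": "get_weather",
        "description": "Look up the current weather for a city.",
        # The parameters are described with a JSON Schema object.
        "parameters": {
            "type": "object",
            "properties": {
                "city": {"type": "string", "description": "City name"},
            },
            "required": ["city"],
        },
    },
}

print(json.dumps(weather_tool, indent=2))
```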

Integrating with OpenAI and Other LLM Providers

One of Pummel's strengths is its ability to output prompts in formats compatible with various LLM providers. For OpenAI integration, you can use the to_openai_chat() method:

```python
from pummel import Pummel
import openai

prompt = Pummel.from_file("my_prompt.pml")
formatted_prompt = prompt.to_openai_chat()

response = openai.chat.completions.create(
    model="gpt-4",
    messages=formatted_prompt
)

print(response.choices[0].message.content)
```

This approach works seamlessly with Microsoft's Azure OpenAI services as well, making Pummel an excellent choice for prompt engineering in Microsoft-centric AI development environments.
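For reference, the `messages` argument of a chat completion call is a list of dictionaries with `role` and `content` keys. The exact output of `to_openai_chat()` is not shown in this article, so the following is only a sketch of one plausible mapping, written with the standard library: the role tag becomes a system message and everything else becomes a user message. The function name `to_chat_messages` and the mapping rule are assumptions.

```python
import xml.etree.ElementTree as ET

SOURCE = """
<pummel>
  <ro>You are a helpful assistant</ro>
  <task>Write a short poem about artificial intelligence</task>
  <hint>Make it optimistic about the future</hint>
</pummel>
"""


def to_chat_messages(source: str) -> list[dict]:
    """Map a tagged prompt to chat messages (assumed rule: <ro> -> system, rest -> user)."""
    root = ET.fromstring(source.strip())
    system_parts: list[str] = []
    user_parts: list[str] = []
    for child in root:
        text = (child.text or "").strip()
        if child.tag == "ro":
            system_parts.append(text)
        else:
            user_parts.append(text)

    messages: list[dict] = []
    if system_parts:
        messages.append({"role": "system", "content": "\n".join(system_parts)})
    if user_parts:
        messages.append({"role": "user", "content": "\n".join(user_parts)})
    return messages


for message in to_chat_messages(SOURCE):
    print(message)
```

Keeping the role text in a separate system message is a common convention, since it lets the instructions for the assistant stay fixed while the user content changes.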

Conclusion

Pummel represents a significant advancement in prompt engineering, particularly for complex AI applications. By providing structure, reusability, and advanced data integration capabilities, it transforms prompt creation from an ad-hoc process into a systematic engineering discipline.

For developers working with Microsoft's AI services like Azure OpenAI, Pummel offers a natural extension to existing workflows, enabling more sophisticated and maintainable prompt design. While a dedicated markup language may look like overhead at first glance, its benefits become apparent with intricate prompts or with prompts managed at scale.

As AI continues to evolve as a core development tool, structured approaches to prompt engineering like Pummel will likely become increasingly important, especially in enterprise environments where consistency and maintainability are paramount.
