MCP (Model Context Protocol) has emerged as an important concept in AI development. Despite its buzzword status, the protocol addresses a fundamental aspect of how we build applications with Large Language Models (LLMs). To understand MCP, we first need to clarify how LLMs actually work and what challenges developers face when extending their capabilities.

The Fundamentals: LLMs as Token Generators

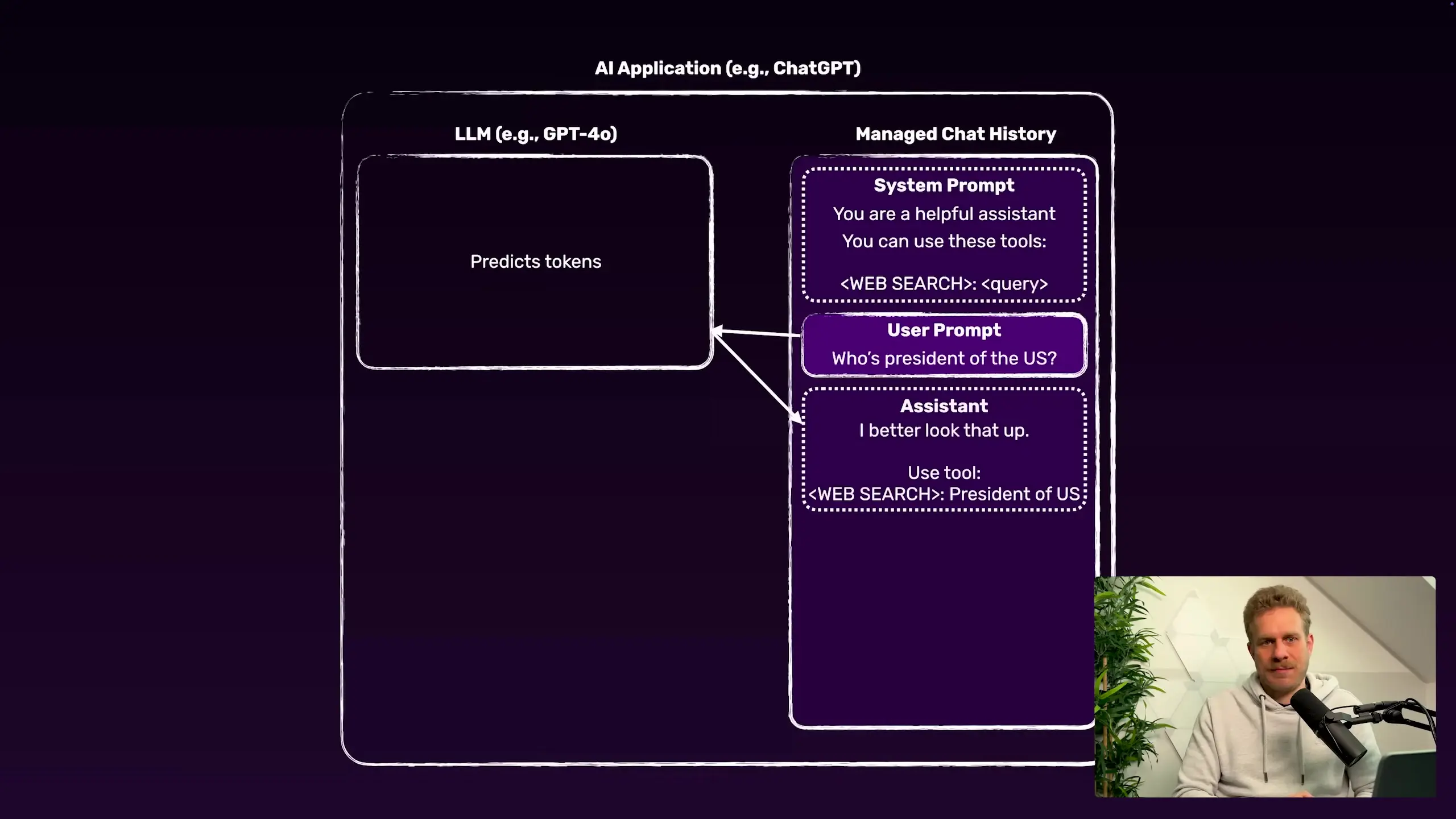

At their core, all Large Language Models are token generators. Given the input they receive, they output tokens (words or parts of words), one at a time. This crucial point is sometimes overlooked: LLMs are text generators, not autonomous agents with an inherent ability to perform tasks beyond generating text.
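To make "token generator" concrete, here is a toy sketch of the generation loop. A hypothetical lookup table stands in for the neural network, and unlike a real LLM it looks only at the last token instead of the whole context; the point it illustrates is that each generated token is appended to the input and fed back in until a stop token appears.

```python
# Toy "language model": a hypothetical lookup table mapping the previous
# token to the next one. A real LLM scores every token in its vocabulary
# using the entire context, but the generation loop has the same shape.
TOY_MODEL = {
    "The": "weather",
    "weather": "is",
    "is": "sunny",
    "sunny": "<end>",
}


def generate(prompt_tokens: list[str], max_new_tokens: int = 10) -> list[str]:
    tokens = list(prompt_tokens)
    for _ in range(max_new_tokens):
        next_token = TOY_MODEL.get(tokens[-1], "<end>")  # "predict" the next token
        if next_token == "<end>":                        # stop token ends generation
            break
        tokens.append(next_token)                        # output becomes part of the input
    return tokens


print(" ".join(generate(["The"])))  # The weather is sunny
```

Nothing in this loop can search the web or run code; it only ever produces more tokens.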

When you interact with systems like ChatGPT that seem to perform web searches, run code, or analyze data, what's actually happening is more complex than the LLM simply 'doing' these things. The LLM itself is still just generating text, but it's operating within an application framework that enables these additional capabilities.

How Tool Use Actually Works in LLM Applications

When applications like ChatGPT perform tasks beyond text generation, here's what's happening behind the scenes:

- The application injects a system prompt that defines the available tools and the exact format the LLM should use to invoke them

- When a user query suggests the need for a tool (like a web search), the LLM generates text that follows the predefined format for tool invocation

- The application shell intercepts this tool-invocation text instead of showing it to the user

- Custom code written by developers (not the LLM) executes the actual tool functionality

- Results from the tool execution are fed back into the conversation context

- The LLM generates a new response based on the original query plus the tool results
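The steps above can be sketched as a single loop. This is a minimal illustration, not how ChatGPT is actually implemented: `fake_llm` is a hypothetical stand-in for a real model, `web_search` is a stub, and the JSON tool-call format and `"tool"` message role are made up for this example.

```python
import json


# Hypothetical tool implementation; a real app would call a search API here.
def web_search(query: str) -> str:
    return f"(stub) search results for {query!r}"


TOOLS = {"web_search": web_search}

# The system prompt tells the model which tools exist and how to call them.
SYSTEM_PROMPT = (
    "You can use the tool web_search(query). To call it, reply with only "
    'a JSON object: {"tool": "web_search", "arguments": {"query": "..."}}'
)


def fake_llm(messages: list[dict]) -> str:
    """Stand-in for a real model: asks for a search, then answers from the result."""
    last = messages[-1]
    if last["role"] == "user":
        return json.dumps({"tool": "web_search", "arguments": {"query": last["content"]}})
    return "Based on the search: " + last["content"]


def parse_tool_call(text: str):
    """Return the call dict if the text follows the tool-call format, else None."""
    try:
        data = json.loads(text)
    except json.JSONDecodeError:
        return None
    return data if isinstance(data, dict) and "tool" in data else None


def run_turn(user_query: str, max_steps: int = 5) -> str:
    messages = [{"role": "system", "content": SYSTEM_PROMPT},
                {"role": "user", "content": user_query}]
    for _ in range(max_steps):
        reply = fake_llm(messages)                 # the model only ever emits text
        call = parse_tool_call(reply)              # the shell intercepts tool-call text
        if call is None:
            return reply                           # plain text is the final answer
        result = TOOLS[call["tool"]](**call["arguments"])  # app code runs the tool
        messages.append({"role": "assistant", "content": reply})
        messages.append({"role": "tool", "content": result})  # feed the result back
    raise RuntimeError("too many consecutive tool calls")


print(run_turn("What is MCP?"))
```

The user only sees the string `run_turn` returns; the intermediate JSON and the stub search result stay inside the application.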

This process happens invisibly to the end user, who only sees the final response. This creates the illusion that the LLM itself is directly using external tools, when in reality, it's the application framework enabling this functionality.

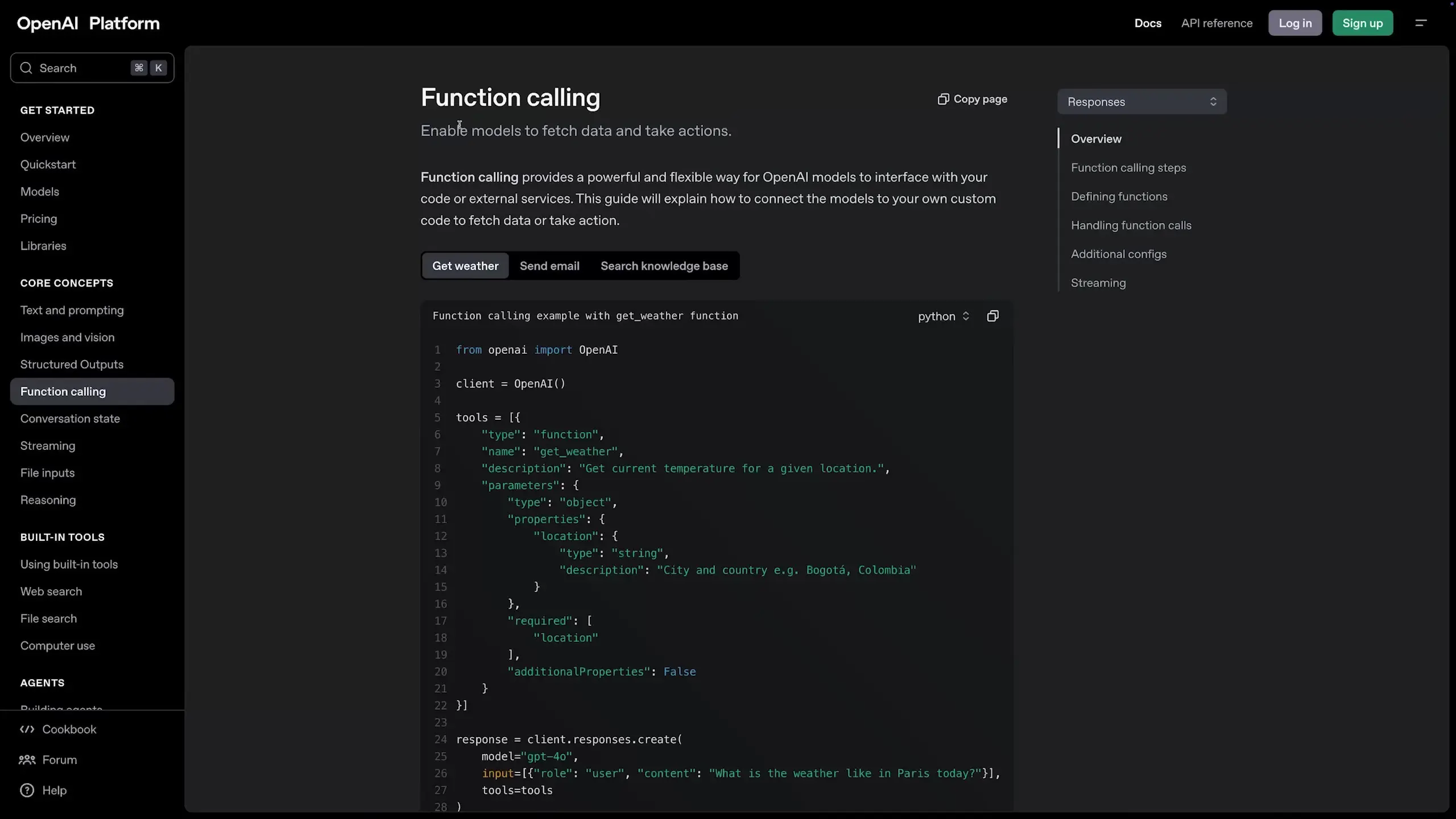

Function Calling: A Standardized Approach to Tool Use

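Major LLM providers such as OpenAI have standardized this process through features like 'function calling.' Instead of manually crafting system prompts that describe tools, developers pass function definitions (a name, a description, and a JSON Schema for the parameters) through a structured API, and the model can respond with a structured call to one of those functions instead of free-form text.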
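Function calling changes the shape of the model's output, not who runs the code: the model returns a function name plus JSON-encoded arguments, and the application still parses and executes the call. A minimal sketch of that dispatch step, assuming an OpenAI-style call object (where `arguments` arrives as a JSON string) and a hypothetical, stubbed `get_weather` implementation:

```python
import json


# Hypothetical local implementation backing a "get_weather" function definition.
def get_weather(location: str) -> dict:
    return {"location": location, "forecast": "(stub) sunny"}


HANDLERS = {"get_weather": get_weather}


def dispatch(function_call: dict) -> str:
    """Execute a model-generated function call; return a JSON string to send back."""
    name = function_call["name"]
    handler = HANDLERS.get(name)
    if handler is None:
        return json.dumps({"error": f"unknown function: {name}"})
    try:
        args = json.loads(function_call["arguments"])  # arguments arrive as a JSON string
    except json.JSONDecodeError:
        return json.dumps({"error": "arguments were not valid JSON"})
    return json.dumps(handler(**args))


# A call shaped like what a model might return for "What's the weather in SF?"
call = {"name": "get_weather", "arguments": '{"location": "San Francisco, CA"}'}
print(dispatch(call))
```

The error branches matter because the model generates the arguments as text, so a malformed or unknown call is always possible; returning the error as a result lets the model see it and retry.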

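```javascript
// Example of defining a function for an LLM to use.
// Parameters are described with JSON Schema. This matches the shape of OpenAI's
// older `functions` parameter; the newer `tools` parameter wraps each definition
// as { type: "function", function: { ... } }.
const functions = [
  {
    name: "get_weather",
    description: "Get the current weather for a location",
    parameters: {
      type: "object",
      properties: {
        location: {
          type: "string",
          description: "City and state, e.g., San Francisco, CA",
        },
      },
      required: ["location"],
    },
  },
];

// When the LLM wants to use this function, it generates a structured call
// (the function name plus JSON-encoded arguments), which your application
// code intercepts and executes.
```

Enter MCP: Model Context Protocol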

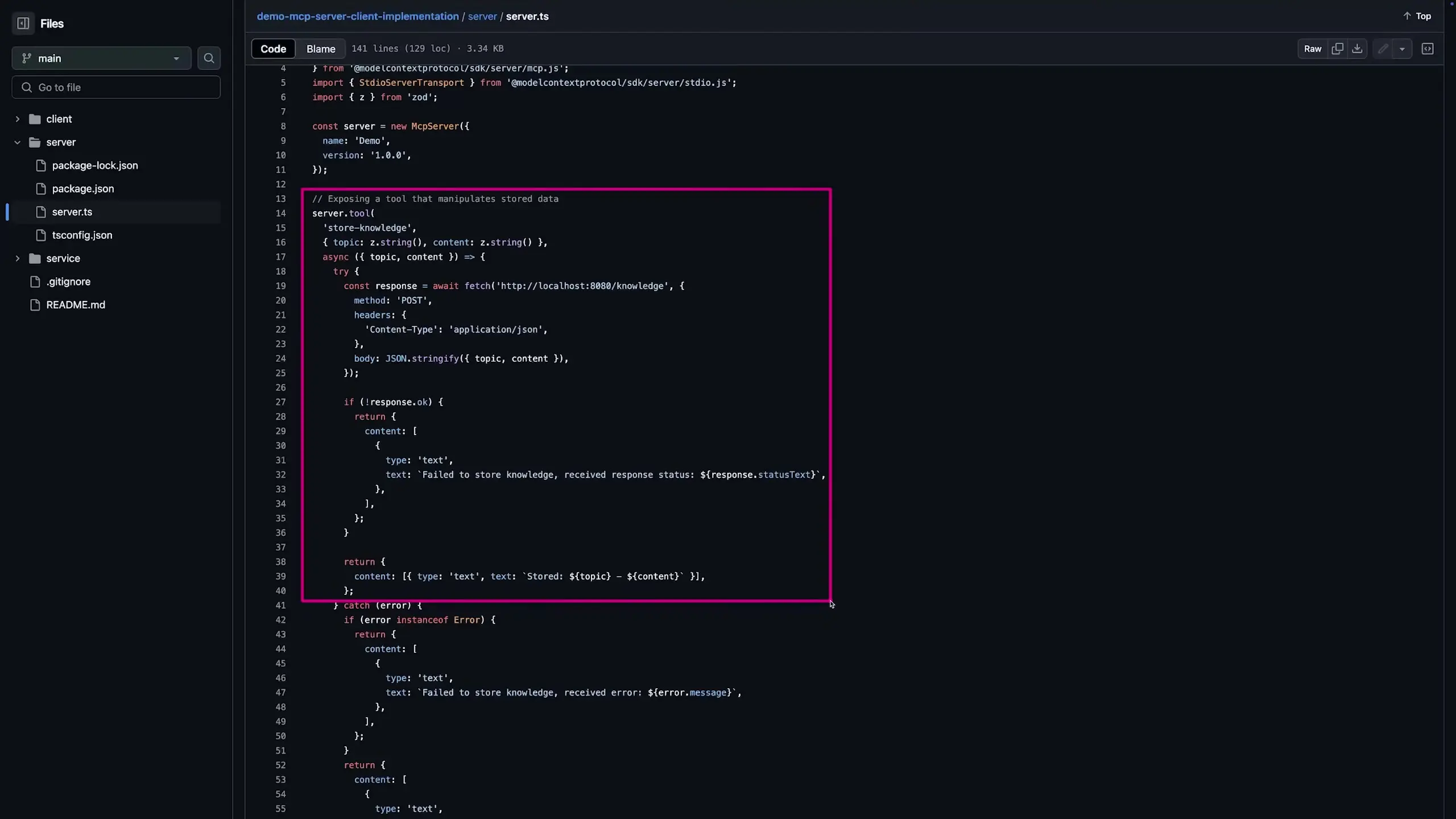

MCP (Model Context Protocol), an open protocol introduced by Anthropic, takes this concept further by standardizing how LLM applications connect to tools and external systems. It addresses several key challenges in building AI applications:

- Consistency in how tools are defined and invoked across different LLMs

- Separation of concerns between the model (LLM), context management, and tool execution

- Standardized protocols for passing information between components

- Improved reliability in tool usage patterns
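Concretely, the MCP specification defines these exchanges as JSON-RPC 2.0 messages between a client (running inside the host application) and a server that exposes tools. The sketch below is a minimal in-process handler for two of the specification's methods, `tools/list` (discovery) and `tools/call` (invocation). It is not the official MCP SDK: it skips the initialization handshake, capability negotiation, and transport (such as stdio or HTTP), and the `TOOLS` registry and stub weather handler are hypothetical. Check the specification for the full message schemas.

```python
import json

# Hypothetical registry: tool name -> (description, JSON Schema for inputs, handler)
TOOLS = {
    "get_weather": (
        "Get current weather for a location",
        {"type": "object", "properties": {"location": {"type": "string"}},
         "required": ["location"]},
        lambda args: f"(stub) weather for {args['location']}",
    ),
}


def _error(req_id, code, message):
    return {"jsonrpc": "2.0", "id": req_id, "error": {"code": code, "message": message}}


def handle_request(raw: str) -> str:
    """Answer a JSON-RPC 2.0 request for the tools/list and tools/call methods."""
    req = json.loads(raw)
    req_id, method, params = req.get("id"), req.get("method"), req.get("params", {})
    if method == "tools/list":
        tools = [{"name": n, "description": d, "inputSchema": s}
                 for n, (d, s, _) in TOOLS.items()]
        resp = {"jsonrpc": "2.0", "id": req_id, "result": {"tools": tools}}
    elif method == "tools/call":
        entry = TOOLS.get(params.get("name"))
        if entry is None:
            # -32602 is JSON-RPC's standard "Invalid params" code
            resp = _error(req_id, -32602, f"Unknown tool: {params.get('name')}")
        else:
            text = entry[2](params.get("arguments", {}))
            resp = {"jsonrpc": "2.0", "id": req_id,
                    "result": {"content": [{"type": "text", "text": text}],
                               "isError": False}}
    else:
        # -32601 is JSON-RPC's standard "Method not found" code
        resp = _error(req_id, -32601, f"Method not found: {method}")
    return json.dumps(resp)


# Usage: a client first discovers the server's tools, then calls one.
print(handle_request('{"jsonrpc": "2.0", "id": 1, "method": "tools/list"}'))
print(handle_request(json.dumps({
    "jsonrpc": "2.0", "id": 2, "method": "tools/call",
    "params": {"name": "get_weather", "arguments": {"location": "Paris"}},
})))
```

The `name`, `description`, and `inputSchema` a server returns from `tools/list` are what a host application would translate into its model's function-calling format, which is how one tool server can serve different LLMs.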

Building Your Own MCP-Enabled Application

Implementing MCP in your own AI applications involves several components:

- A model component that interfaces with your chosen LLM

- A context manager that maintains conversation state and history

- A protocol layer that standardizes communication between components

- Tool definitions using standardized formats

- Tool execution code that runs when the LLM invokes a tool

- Response handling to incorporate tool results back into the conversation

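```python
# Simplified example of an MCP-style tool definition
from typing import Any, Callable


class MCPTool:
    """Pairs a tool's metadata with the application code that executes it."""

    def __init__(self, name: str, description: str, parameters: dict,
                 handler: Callable[[dict], Any]):
        self.name = name
        self.description = description
        self.parameters = parameters  # JSON Schema for the tool's arguments
        self.handler = handler        # developer-written code that does the work

    def execute(self, params: dict) -> Any:
        return self.handler(params)


def get_current_weather(location: str) -> str:
    # Hypothetical stand-in for a real weather API client
    return f"(stub) current weather for {location}"


# Example tool definition
weather_tool = MCPTool(
    name="get_weather",
    description="Get current weather for a location",
    parameters={
        "type": "object",
        "properties": {
            "location": {"type": "string"}
        },
        "required": ["location"],
    },
    handler=lambda params: get_current_weather(params["location"]),
)

# Usage: the application calls execute() when the LLM invokes "get_weather"
print(weather_tool.execute({"location": "Paris"}))
```

Benefits of the MCP Approach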

Adopting MCP principles in your AI application development offers several advantages:

- Modularity: Easier to swap out different LLMs while maintaining the same tool integrations

- Standardization: Consistent patterns for defining and invoking tools

- Reliability: More predictable tool usage and error handling

- Extensibility: Simpler process for adding new tools to your AI application

- Maintainability: Clearer separation of concerns in your codebase
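The modularity benefit comes from defining each tool once and translating it for whichever model backend is in use. A sketch, assuming the request shapes of OpenAI's Chat Completions `tools` parameter and Anthropic's Messages API `tools` parameter (verify against the current provider docs); `ToolSpec`, `to_openai`, and `to_anthropic` are hypothetical names:

```python
from dataclasses import dataclass


@dataclass
class ToolSpec:
    """Provider-neutral tool definition: one source of truth for every backend."""
    name: str
    description: str
    input_schema: dict  # JSON Schema for the tool's arguments


def to_openai(tool: ToolSpec) -> dict:
    # Entry for OpenAI Chat Completions' `tools` list
    return {"type": "function",
            "function": {"name": tool.name, "description": tool.description,
                         "parameters": tool.input_schema}}


def to_anthropic(tool: ToolSpec) -> dict:
    # Entry for Anthropic Messages API's `tools` list
    return {"name": tool.name, "description": tool.description,
            "input_schema": tool.input_schema}


weather = ToolSpec(
    name="get_weather",
    description="Get current weather for a location",
    input_schema={"type": "object", "properties": {"location": {"type": "string"}},
                  "required": ["location"]},
)
print(to_openai(weather))
print(to_anthropic(weather))
```

Swapping model providers then means changing which converter runs, not rewriting every tool definition.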

Conclusion: Beyond the Buzzword

While MCP might be surrounded by hype, the underlying concept addresses real challenges in building sophisticated AI applications. By understanding that LLMs are fundamentally text generators and need structured frameworks to interact with external tools, developers can create more powerful, reliable AI applications.

The MCP approach provides a clear pattern for extending LLM capabilities beyond text generation, enabling a new generation of AI tools that can take meaningful actions in the world while maintaining the core strengths of large language models.

