With the recent launch of Claude 4 Opus and Claude 4 Sonnet, developers face a harder choice about which AI model best serves their coding needs. Both Claude models show strong capabilities in coding and structured reasoning, but how do they compare to Google's Gemini 2.5 Pro? This comparison looks at the strengths and weaknesses of each model across several coding scenarios.

Benchmark Performance: Claude 4 vs Gemini 2.5 Pro

On the SWE-bench Verified benchmark, Claude 4 models lead in structured reasoning and coding categories, outperforming many competitors including Gemini 2.5 Pro. Claude also does well on other coding benchmarks, from terminal coding to agentic tool use, positioning it as a top performer for complex development tasks.

The choice gets harder when you consider the Gemini 2.5 Pro preview, which is designed specifically for coding. Gemini offers lower pricing, a context window of up to 1 million tokens, and multimodal understanding, but its coding output quality doesn't quite match what the Claude 4 models deliver.

Coding and Debugging Capabilities

Claude 4 models dominate in structured code generation, building applications from scratch, and agent-like workflows. They excel at producing logically organized, maintainable code with a clear separation of concerns, which suits applications that need thoughtful architecture.

Gemini 2.5 Pro shines in debugging thanks to its longer context window, but it lacks the same agility and quality in code generation that Claude 4 models provide. However, for rapid prototyping and basic application development, Gemini offers a cost-effective solution that delivers acceptable results.
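To make the context-window point concrete, here is a rough sketch of how you might check whether a set of source files fits in a given token budget before sending it for debugging. The 4-characters-per-token ratio is only a rule of thumb and an assumption here (real tokenizers vary by model and language), and the function names are hypothetical.

```javascript
// Rough check of whether source files fit in a model's context window.
// ASSUMPTION: ~4 characters per token is a rule of thumb, not a real tokenizer.
const CHARS_PER_TOKEN = 4;

function estimateTokens(text) {
  return Math.ceil(text.length / CHARS_PER_TOKEN);
}

// files: array of { path, content }; limits are expressed in tokens.
function fitsInContext(files, contextLimit, reservedForOutput = 0) {
  const totalTokens = files.reduce(
    (sum, file) => sum + estimateTokens(file.content),
    0
  );
  const budget = contextLimit - reservedForOutput;
  return { totalTokens, budget, fits: totalTokens <= budget };
}

// Hypothetical usage with the 1M-token figure quoted for Gemini 2.5 Pro.
const report = fitsInContext(
  [{ path: 'app.js', content: 'x'.repeat(400000) }],
  1000000,
  50000
);
console.log(report);
```

An estimate like this is only useful for deciding whether to trim or split the input. For exact counts, use the token-counting facility of the model provider you are working with.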

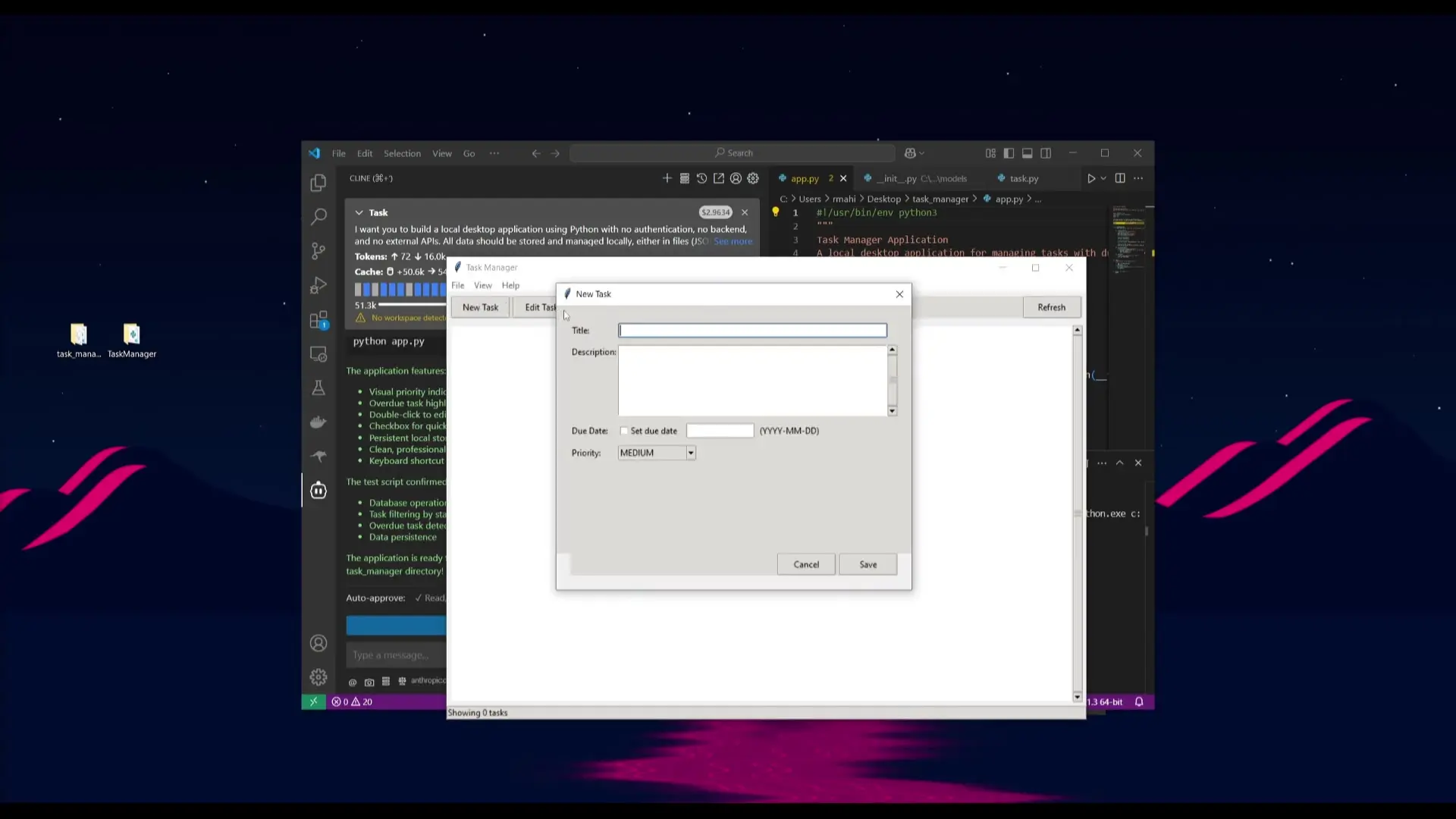

Practical Test: Building a Task Manager Application

In a direct comparison test, both models were tasked with creating a local GUI-based task manager with no backend or API requirements, focusing on clean, modular code. Both successfully generated functional Python applications with key features like task addition, editing, and deletion.

The Claude 4 model produced more comprehensive output, including additional documentation like a README file, and slightly better functionality. However, it cost far more: approximately $3 for the Claude generation versus about $0.15 for the Gemini 2.5 Pro output.

- Claude 4: Higher quality output with better documentation (~$3)

- Gemini 2.5 Pro: Good quality output at a fraction of the cost (~$0.15)

- Both: Functional applications meeting the core requirements
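The generated applications were written in Python and are not reproduced here. As an illustration of what a clean, modular core for such a task manager can look like, the sketch below keeps the task logic (add, edit, delete) separate from any GUI code. It is written in JavaScript to match the other example in this article, and every name in it is hypothetical.

```javascript
// Minimal in-memory task store: no GUI, backend, or persistence.
// All names (createTaskStore, addTask, ...) are hypothetical.
function createTaskStore() {
  const tasks = new Map();
  let nextId = 1;

  return {
    addTask(title) {
      const task = { id: nextId++, title, done: false };
      tasks.set(task.id, task);
      return { ...task };
    },
    editTask(id, changes) {
      const task = tasks.get(id);
      if (!task) return null;
      // Apply changes but never let callers overwrite the id.
      Object.assign(task, changes, { id });
      return { ...task };
    },
    deleteTask(id) {
      // Returns true if a task was removed, false if the id was unknown.
      return tasks.delete(id);
    },
    listTasks() {
      // Return copies so callers cannot mutate internal state.
      return [...tasks.values()].map((task) => ({ ...task }));
    },
  };
}

const store = createTaskStore();
const first = store.addTask('Write README');
const second = store.addTask('Ship release');
store.editTask(first.id, { done: true });
store.deleteTask(second.id);
console.log(store.listTasks());
```

Keeping the store free of UI calls means a GUI layer only has to call these four methods and redraw from `listTasks()`.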

UI/UX Design Capabilities

Both models demonstrate strong capabilities in UI/UX design tasks. When tasked with creating a SaaS landing page, Gemini 2.5 Pro generated an exceptional base structure with pricing plans and animations. Claude 4 Sonnet also performed well, creating a landing page with animations not present in the Gemini output.

However, Claude 4 Opus significantly outperformed both in UI/UX design quality. The landing page generated by Opus was visually superior to both Gemini 2.5 Pro and Claude 4 Sonnet outputs, demonstrating its advantage for complex UI/UX design tasks.

Game Development Test: Creating a Tetris Game

To test the models' ability to handle 3D logic, real-time game mechanics, and efficient in-browser JavaScript coding, both were tasked with creating a Tetris game. Both models performed admirably, producing functional games with 3D blocks and appropriate mechanics.

In this case, the Gemini 2.5 Pro output appeared slightly more stable and visually appealing than the Claude Opus generation, though both successfully completed the task with high-quality results.
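Two of the mechanics any Tetris implementation needs are collision checks and line clearing. The sketch below shows one way to write them, assuming a 2D board of rows where 0 means an empty cell. It ignores 3D rendering entirely, and it is not taken from either model's output.

```javascript
// ASSUMPTION: board is an array of rows; 0 = empty, non-zero = filled.
// Returns true if placing `shape` with its top-left at (row, col) would
// leave the board or overlap a filled cell.
function collides(board, shape, row, col) {
  for (let r = 0; r < shape.length; r++) {
    for (let c = 0; c < shape[r].length; c++) {
      if (!shape[r][c]) continue; // empty part of the shape
      const br = row + r;
      const bc = col + c;
      if (br < 0 || br >= board.length) return true;
      if (bc < 0 || bc >= board[0].length) return true;
      if (board[br][bc]) return true;
    }
  }
  return false;
}

// Removes full rows and pads the top with empty rows.
// Returns a new board plus the number of rows cleared.
function clearLines(board) {
  const width = board[0].length;
  const remaining = board.filter((row) => row.some((cell) => cell === 0));
  const cleared = board.length - remaining.length;
  const emptyRows = Array.from({ length: cleared }, () => Array(width).fill(0));
  return { board: [...emptyRows, ...remaining], cleared };
}

// Usage: a 20x10 board whose bottom row is already full.
const demoBoard = Array.from({ length: 20 }, () => Array(10).fill(0));
demoBoard[19] = Array(10).fill(1);
const tPiece = [[0, 1, 0], [1, 1, 1]];

console.log(collides(demoBoard, tPiece, 17, 0)); // false: rows 17-18 are empty
console.log(collides(demoBoard, tPiece, 18, 0)); // true: overlaps the full row 19
console.log(clearLines(demoBoard).cleared);      // 1
```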

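```javascript
// Sample Tetris game logic (conceptual example)
function createTetrisGame() {
  // Initialize a 20-row by 10-column game board (0 = empty cell)
  const board = Array.from({ length: 20 }, () => Array(10).fill(0));

  // Create tetromino shapes
  const shapes = [
    [[1, 1, 1, 1]],         // I piece
    [[1, 1], [1, 1]],       // O piece
    [[0, 1, 0], [1, 1, 1]], // T piece
    // Additional shapes would be defined here
  ];

  // Placeholder: move the falling piece, handle input, clear full rows
  function update() {}

  // Placeholder: draw the board and the active piece
  function render() {}

  // Game loop: update state, draw, then schedule the next frame (browser only)
  function gameLoop() {
    update();
    render();
    requestAnimationFrame(gameLoop);
  }

  gameLoop();
}
```

When to Choose Claude 4 vs. Gemini 2.5 Pro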

Both Claude 4 and Gemini 2.5 Pro are exceptional AI coding assistants, but they excel in different scenarios. Your choice should depend on your specific requirements, budget constraints, and the complexity of your development tasks.

Choose Claude 4 Models For:

- Advanced code generation requiring structured reasoning

- Building complex applications from scratch

- Agent-like workflows and intelligent assistants

- Enterprise applications requiring high precision and reliability

- Complex UI/UX design (especially with Claude 4 Opus)

Choose Gemini 2.5 Pro For:

- Debugging or refactoring large code bases (leveraging its 1M token context)

- Working with video and audio inputs (multimodal capabilities)

- Budget-conscious development and rapid prototyping

- General creativity tasks requiring flexibility

- Projects where cost efficiency is prioritized over absolute code quality

Cost-Benefit Analysis

The cost difference between these models is significant. In our testing, Claude 4 generations typically cost 10-20 times more than equivalent Gemini 2.5 Pro outputs. For professional development teams working on critical projects, Claude 4's superior quality may justify the higher cost. For individual developers, students, or projects with tight budgets, Gemini 2.5 Pro offers an excellent balance of capability and affordability.
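As a quick worked example, the figures from the task manager test (about $3.00 versus about $0.15) give a ratio at the top of that range. The sketch below does the arithmetic in cents to avoid floating-point rounding. The 100-generation workload is a hypothetical number chosen for illustration, not a measured one.

```javascript
// Costs from the task manager test, expressed in cents.
const CLAUDE_COST_CENTS = 300; // ~$3.00
const GEMINI_COST_CENTS = 15;  // ~$0.15

function costRatio(costA, costB) {
  if (costB <= 0) throw new RangeError('costB must be positive');
  return costA / costB;
}

// Projected spend in cents for a number of similar generations.
function projectedSpendCents(costPerGenerationCents, generations) {
  return costPerGenerationCents * generations;
}

function formatDollars(cents) {
  return '$' + (cents / 100).toFixed(2);
}

const ratio = costRatio(CLAUDE_COST_CENTS, GEMINI_COST_CENTS);
console.log(`Claude 4 cost ${ratio}x the Gemini 2.5 Pro output`); // 20x

// Hypothetical workload: 100 generations of similar size.
console.log(formatDollars(projectedSpendCents(CLAUDE_COST_CENTS, 100))); // $300.00
console.log(formatDollars(projectedSpendCents(GEMINI_COST_CENTS, 100))); // $15.00
```

Actual costs depend on prompt and output length, so treat these as order-of-magnitude figures.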

Conclusion: The Right Tool for Your Coding Tasks

The choice between Claude 4 and Gemini 2.5 Pro ultimately depends on your specific use case, budget, and quality requirements. Claude 4 models (particularly Opus) represent the pinnacle of AI coding assistance for complex, structured tasks where quality is paramount. Gemini 2.5 Pro offers a more affordable alternative that performs admirably for many common development tasks.

As AI coding assistants continue to evolve, developers benefit from having multiple options to choose from based on their specific needs. Both Claude 4 and Gemini 2.5 Pro represent significant advancements in AI-assisted development, making sophisticated coding tasks more accessible to developers at all levels.

