Code coverage is a critical metric in software development that measures how much of your codebase is executed by your automated tests. High coverage helps maintain code quality, but writing tests by hand to cover every line is tedious and time-consuming. This is where AI-powered code coverage tools can make a significant difference in your testing workflow.

Understanding Code Coverage in Software Testing

Before diving into how AI can help with code coverage, it's important to understand what code coverage analysis aims to achieve in software testing. Code coverage is a measurement used to describe the degree to which the source code of a program is executed when a particular test suite runs.

Coverage tools typically report several metrics:

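- Line coverage: The percentage of executable lines that have been executed

- Branch coverage: The percentage of branch outcomes at decision points (for example, both the true and false paths of an if statement) that have been taken

- Function coverage: The percentage of functions that have been called

- Statement coverage: The percentage of statements that have been executed; this is close to line coverage, but a single line can contain several statements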
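The gap between line and branch coverage is easiest to see on a tiny example. The sketch below uses Python's built-in sys.settrace hook to record which lines of a hypothetical apply_discount function run, roughly the way coverage tools work internally. It is deliberately narrow: it only understands a function with a single if, whereas real tools such as coverage.py handle arbitrary code.

```python
import dis
import sys


def apply_discount(price, is_member):
    # Hypothetical function with one decision point (two branch outcomes)
    if is_member:
        price = price * 0.9
    return price


def executable_lines(func):
    """Body lines that carry bytecode: the denominator for line coverage."""
    code = func.__code__
    return {
        line
        for _, line in dis.findlinestarts(code)
        if line is not None and line > code.co_firstlineno
    }


def trace_lines(func, *args):
    """Call func(*args) and return, in order, the body lines it executed."""
    code = func.__code__
    executed = []

    def tracer(frame, event, arg):
        if frame.f_code is code and event == "line" and frame.f_lineno > code.co_firstlineno:
            executed.append(frame.f_lineno)
        return tracer

    sys.settrace(tracer)
    try:
        func(*args)
    finally:
        sys.settrace(None)
    return executed


def measure(calls):
    """Return (line coverage, branch coverage) of apply_discount over the given calls."""
    if_line, then_line, return_line = sorted(executable_lines(apply_discount))
    hit_lines, outcomes = set(), set()
    for args in calls:
        trace = trace_lines(apply_discount, *args)
        hit_lines.update(trace)
        # The line that runs right after the if shows which outcome was taken
        outcomes.add(trace[trace.index(if_line) + 1])
    line_cov = len(hit_lines) / len({if_line, then_line, return_line})
    branch_cov = len(outcomes) / 2  # two outcomes: then_line or return_line
    return line_cov, branch_cov


print(measure([(100, True)]))                # (1.0, 0.5)
print(measure([(100, True), (100, False)]))  # (1.0, 1.0)
```

A single test with is_member=True runs every line, so line coverage is 100%, yet the path where the condition is false never executes and branch coverage stays at 50%. With pytest-cov, branch coverage is reported when you pass --cov-branch.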

While high code coverage doesn't guarantee bug-free code, it does show that your tests exercise most of the codebase, which increases confidence and reduces the likelihood of undiscovered bugs.

The Challenge of Maintaining High Code Coverage

Many development teams aim for a specific code coverage percentage as part of their quality standards. However, writing and maintaining tests to achieve high coverage can be challenging for several reasons:

- Writing tests for edge cases can be time-consuming

- Some code paths may be difficult to trigger in test environments

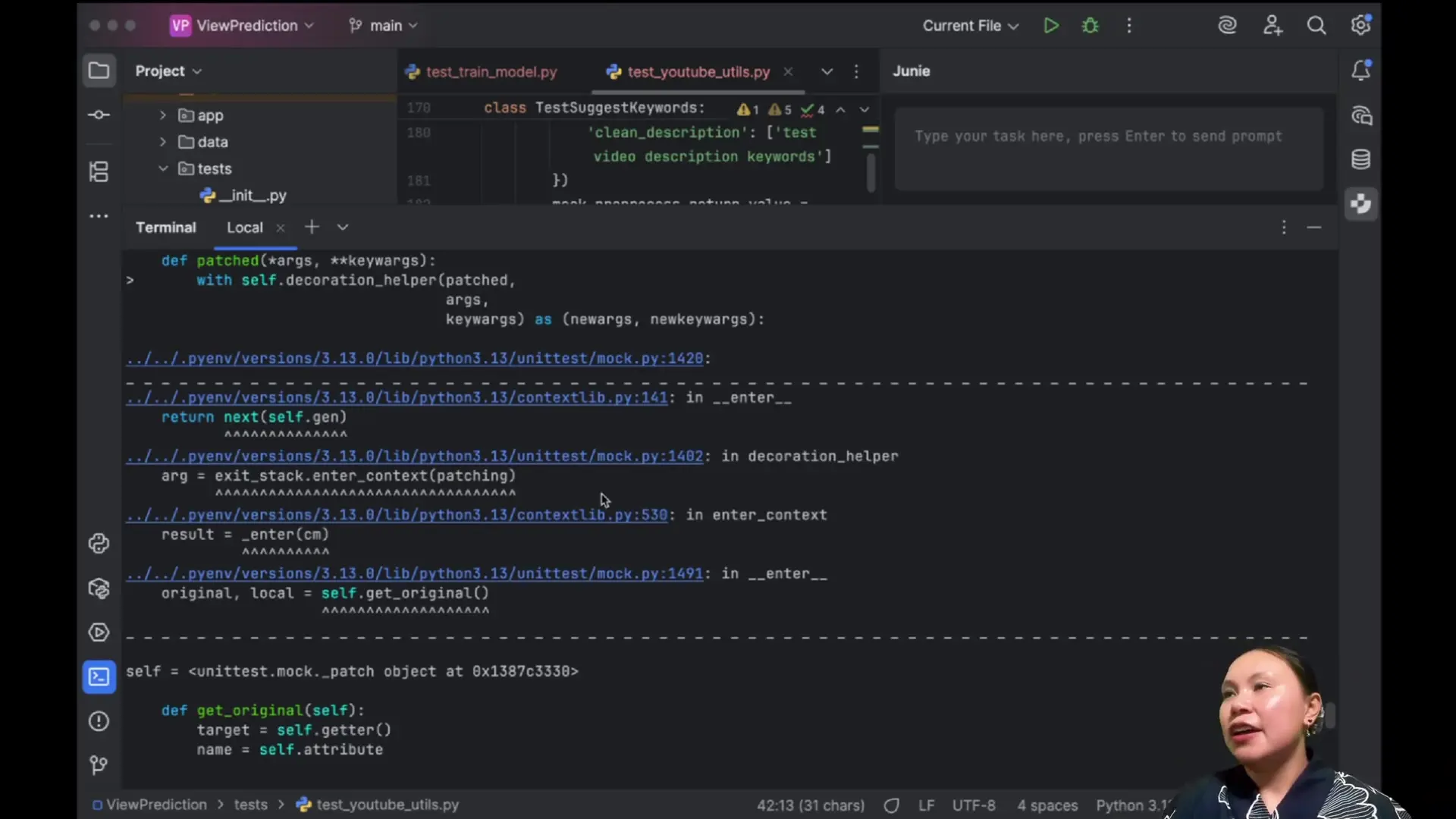

- Mocking dependencies can be complex and error-prone

- Identifying which parts of the code lack coverage requires manual analysis

- Maintaining tests as code evolves adds ongoing overhead

This is where AI-powered tools can significantly improve the testing process by automating many of these tasks.

How AI Tools Can Improve Code Coverage in Unit Testing

AI-powered testing tools can transform your approach to code coverage by automating test creation and identifying gaps in your test suite. Here's how these tools can help:

- Automatically analyzing your project structure to understand what needs testing

- Identifying modules and functions that lack coverage

- Generating comprehensive test cases including edge cases

- Creating proper mock objects for dependencies

- Running tests and verifying they pass

- Iteratively fixing issues and improving tests until coverage goals are met
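The generate, run, and repair cycle listed above can be sketched as a small control loop. Everything here is hypothetical: run_suite, generate_tests, and fix_tests are placeholders for whatever your AI tool and test runner actually expose, and the 90% target and five-round limit are arbitrary choices.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class RunResult:
    coverage: float                                    # total coverage, 0-100
    failing: List[str] = field(default_factory=list)  # names of failing tests


def improve_coverage(
    run_suite: Callable[[], RunResult],
    generate_tests: Callable[[], None],
    fix_tests: Callable[[List[str]], None],
    target: float = 90.0,
    max_rounds: int = 5,
) -> RunResult:
    """Alternate between repairing, generating, and re-running until the target is met."""
    result = run_suite()
    for _ in range(max_rounds):
        if result.failing:
            fix_tests(result.failing)  # a red suite is repaired before anything else
        elif result.coverage >= target:
            break                      # green suite at or above target: done
        else:
            generate_tests()           # green but under target: add tests for gaps
        result = run_suite()
    return result


# Toy stand-ins for an AI tool and a test runner (hypothetical behavior)
state = {"coverage": 63.0, "failing": ["test_handle_api_error"]}


def run_suite():
    return RunResult(state["coverage"], list(state["failing"]))


def generate_tests():
    state["coverage"] = min(100.0, state["coverage"] + 20.0)


def fix_tests(names):
    state["failing"].clear()


print(improve_coverage(run_suite, generate_tests, fix_tests))
```

Repairing failures takes priority over adding tests, so coverage is only trusted from a passing suite, and max_rounds keeps a tool that cannot reach the target from looping forever.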

These capabilities allow developers to focus on more complex aspects of development while ensuring that code coverage requirements are met efficiently.

Practical Example: Using AI to Improve Code Coverage in a Python Project

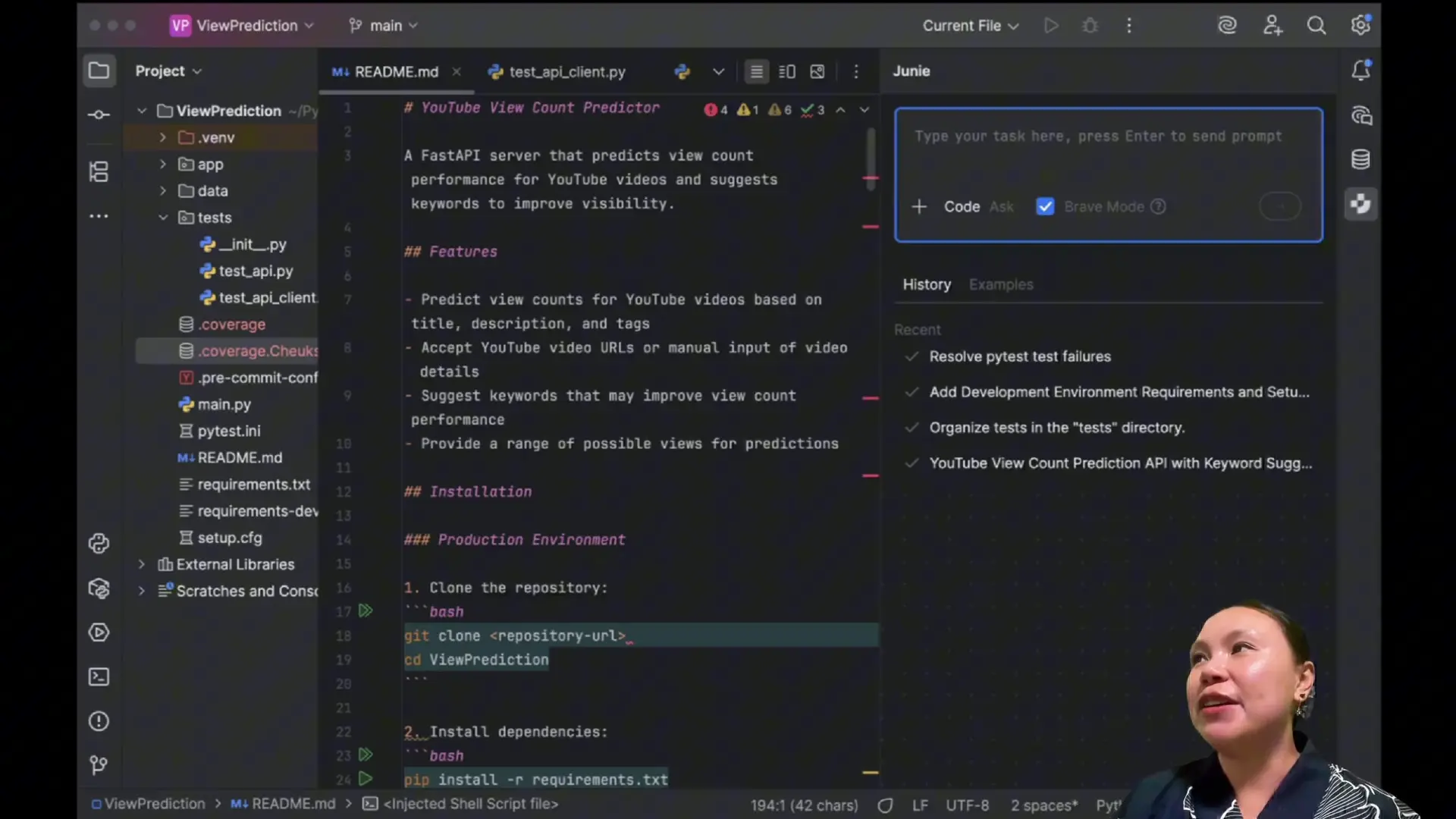

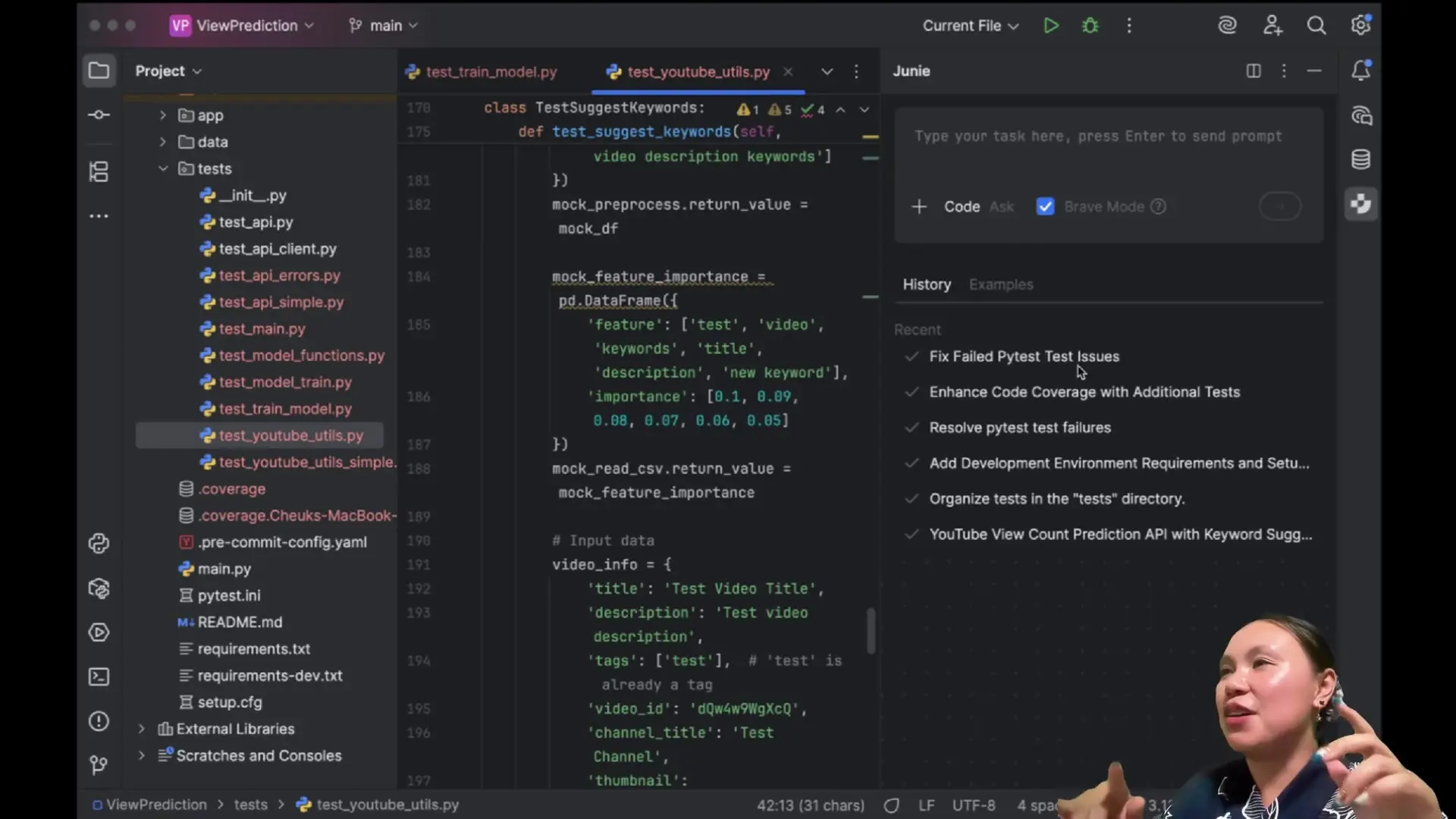

Let's look at a practical example of how an AI tool can help improve code coverage in a Python project that uses FastAPI. In this scenario, we have a machine learning model that predicts potential view counts for YouTube videos based on their titles and descriptions.

The initial coverage report shows only 63% total coverage, indicating that significant portions of the codebase remain untested:

```
$ pytest --cov=app
...
Name            Stmts   Miss  Cover
-----------------------------------
app/__init__.py     0      0   100%
app/main.py        45     16    64%
app/model.py       32     12    63%
app/utils.py       18      7    61%
-----------------------------------
TOTAL              95     35    63%
```

By using an AI-powered code coverage tool, we can automatically generate additional tests to increase coverage. Here's the process:

- The AI analyzes the project structure and existing tests

- It identifies untested modules and functions

- It creates new test files with appropriate test cases

- It runs the tests to verify they pass

- It iteratively fixes any failing tests
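The identification step can be approximated with nothing more than the coverage report. The sketch below is a hypothetical helper, not part of any particular AI tool: it parses the initial report shown above and ranks files by missed statements, so the largest gaps get tests first. It assumes the four-column layout shown (name, statements, missed, cover); with --cov-branch the report gains extra columns, and for anything beyond a quick script, the machine-readable report written by coverage json is a sturdier input.

```python
REPORT = """\
Name            Stmts   Miss  Cover
-----------------------------------
app/__init__.py     0      0   100%
app/main.py        45     16    64%
app/model.py       32     12    63%
app/utils.py       18      7    61%
-----------------------------------
TOTAL              95     35    63%
"""


def coverage_gaps(report):
    """Return (file, missed, cover%) rows, biggest gaps first; fully covered files are skipped."""
    rows = []
    for line in report.splitlines():
        parts = line.split()
        # File rows have exactly four fields and a numeric statement count
        if len(parts) != 4 or not parts[1].isdigit() or parts[0] == "TOTAL":
            continue
        name, missed = parts[0], int(parts[2])
        if missed == 0:
            continue  # nothing left to cover
        rows.append((name, missed, int(parts[3].rstrip("%"))))
    return sorted(rows, key=lambda row: row[1], reverse=True)


for name, missed, cover in coverage_gaps(REPORT):
    print(f"{name}: {missed} statements missed ({cover}% covered)")
```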

For our example project, the AI might generate tests like this for previously untested error handling code:

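```python
from unittest.mock import MagicMock, patch

# Hypothetical module path: where handle_api_request lives isn't shown
from app.utils import handle_api_request


def test_handle_api_error():
    """Test that API errors are properly handled."""
    # Mock a failed response whose body is not valid JSON
    mock_response = MagicMock()
    mock_response.status_code = 500
    mock_response.json.side_effect = ValueError("Invalid JSON")

    # Assumes handle_api_request calls requests.get(...) via the requests module;
    # a name bound with "from requests import get" would have to be patched where it is used
    with patch('requests.get', return_value=mock_response):
        result = handle_api_request('https://example.com/api')

    # Verify error handling works correctly
    assert result['success'] is False
    assert 'error' in result
```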
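The test above relies on a handle_api_request function whose code isn't shown. To make the pair runnable end to end, here is one hypothetical implementation consistent with the test: it reports failures as a dict instead of raising. The optional get parameter is our own addition for dependency injection; when it's omitted, the function falls back to requests.get and looks it up at call time, so the patch in the test still applies.

```python
from unittest.mock import MagicMock


def handle_api_request(url, get=None, timeout=5.0):
    """Hypothetical implementation: returns a result dict instead of raising."""
    if get is None:
        # Imported at call time, so patch('requests.get') in a test takes effect
        import requests
        get = requests.get
    try:
        response = get(url, timeout=timeout)
    except Exception as exc:  # connection errors, timeouts, etc.
        return {"success": False, "error": str(exc)}
    if response.status_code >= 400:
        return {"success": False, "error": f"HTTP {response.status_code}"}
    try:
        payload = response.json()
    except ValueError as exc:
        return {"success": False, "error": f"Invalid JSON: {exc}"}
    return {"success": True, "data": payload}


# A fake getter exercises a branch without network access or patching
bad_json = MagicMock(status_code=200)
bad_json.json.side_effect = ValueError("Expecting value")
print(handle_api_request("https://example.com/api", get=lambda url, timeout: bad_json))
```

Each early return is a separate branch, so full branch coverage here needs at least four tests: network error, HTTP error, invalid JSON, and success. Note that with this implementation the test's 500 status takes the HTTP-error branch before the JSON is ever parsed, which is exactly the kind of detail a reviewer should catch in a generated test.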

After the AI has generated and verified all the new tests, the coverage report shows a significant improvement:

```
$ pytest --cov=app
...
Name            Stmts   Miss  Cover
-----------------------------------
app/__init__.py     0      0   100%
app/main.py        45      2    96%
app/model.py       32      1    97%
app/utils.py       18      0   100%
-----------------------------------
TOTAL              95      3    97%
```

Best Practices for Using AI in Code Coverage Testing

While AI tools can dramatically improve your code coverage, they work best when used according to these best practices:

- Review AI-generated tests to ensure they test meaningful behavior, not just increase coverage numbers

- Use AI-generated tests as a starting point and enhance them with domain-specific knowledge

- Integrate AI tools into your CI/CD pipeline to maintain coverage as code evolves

- Set realistic coverage goals—100% coverage isn't always necessary or practical

- Focus on critical paths and components that have higher risk
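pytest-cov can enforce a single project-wide floor with its --cov-fail-under option. To reflect the advice above about realistic goals and high-risk code, the sketch below applies a different floor per path instead. The thresholds, and the choice of app/model.py as the critical module, are illustrative assumptions; the per-file percentages are taken from the two reports above, and in a real pipeline you would read them from a machine-readable report such as the one coverage json writes.

```python
from fnmatch import fnmatchcase

# Hypothetical policy: stricter floor for high-risk code, a modest default elsewhere
THRESHOLDS = [
    ("app/model.py", 95.0),  # prediction logic: treated as critical
    ("app/*", 80.0),         # everything else under app/
]


def required_floor(path, thresholds=THRESHOLDS):
    """Return the floor of the first matching pattern; patterns are checked in order."""
    for pattern, floor in thresholds:
        if fnmatchcase(path, pattern):
            return floor
    return 0.0


def failing_files(per_file_coverage, thresholds=THRESHOLDS):
    """List (path, actual, required) for every file below its floor."""
    return [
        (path, pct, required_floor(path, thresholds))
        for path, pct in sorted(per_file_coverage.items())
        if pct < required_floor(path, thresholds)
    ]


before = {"app/__init__.py": 100.0, "app/main.py": 64.0, "app/model.py": 63.0, "app/utils.py": 61.0}
after = {"app/__init__.py": 100.0, "app/main.py": 96.0, "app/model.py": 97.0, "app/utils.py": 100.0}
print(failing_files(before))
print(failing_files(after))  # []
```

A CI step would exit with a non-zero status whenever the returned list is non-empty.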

The Difference Between Code Coverage and Effective Testing

It's important to understand that high code coverage doesn't guarantee effective testing. Coverage measures quantity (how much code your tests execute), while effective testing is about quality (whether those tests check the right behavior). Here are some key distinctions:

- Code coverage tells you which code was executed during tests, not whether the tests are meaningful

- 100% code coverage doesn't guarantee effective testing—tests could pass without properly validating behavior

- Unit tests should verify that code behaves correctly, not just that it executes

- Coverage tools measure execution, not correctness

AI tools can help increase coverage, but human judgment is still essential for ensuring tests validate the right behaviors and edge cases.

Conclusion: Balancing Automation and Human Expertise

AI-powered code coverage tools represent a significant advancement in software testing automation. They can dramatically reduce the time and effort required to achieve high code coverage, allowing developers to focus on more complex aspects of development.

However, these tools work best when combined with human expertise. Developers should review AI-generated tests, enhance them with domain-specific knowledge, and ensure they're testing meaningful behaviors—not just increasing coverage numbers.

By leveraging the strengths of both AI automation and human judgment, development teams can achieve higher code quality, better test coverage, and more efficient testing processes. This balanced approach represents the future of software testing—where AI handles the repetitive aspects of test creation while humans focus on ensuring tests validate the right behaviors and edge cases.
