OpenAI's built-in tools give developers a way to extend what a language model can do without writing and hosting the integration code themselves. They provide out-of-the-box functionality, such as searching the web or running code, that lets a model perform actions it could not perform on its own.

What Are Built-in Tools?

The name has two parts. 'Tools' refers to capabilities added to a language model so that it can interact with data and take actions beyond its inherent abilities. 'Built-in' means these tools are ready to use: the developer does not have to implement them.

Unlike traditional function calling where developers need to handle execution on their side, built-in tools are executed directly on OpenAI's infrastructure. This means developers can simply tell the model which tools to use, and when the model decides to use a tool, it executes automatically and incorporates the results into the conversation context.

Built-in Tools vs. Function Calling: Key Differences

For developers familiar with function calling in AI development, understanding the difference between this approach and built-in tools is crucial:

- Function calling: the developer defines the functions, the model suggests which one to call and with what parameters, the developer executes it on their own infrastructure, and the developer returns the result to the model

- Built-in tools: the developer only lists which tools the model may use, and execution happens on OpenAI's infrastructure without developer intervention

- With built-in tools, once the model decides to use a tool, the tool runs automatically and the model generates its response from the result

- This automation removes the intermediate execution step that function calling requires
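The contrast in the list above can be sketched in a few lines. This is an illustration only, not a full API integration: `getWeather`, `localFunctions`, and the shape of `suggestedCall` (a function name plus a JSON string of arguments) are assumptions made for the example, and the model's suggestion is hard-coded so the snippet runs without a network call.

```javascript
// Function calling: the developer declares the function, runs it locally,
// and sends the result back to the model.

// Hypothetical local implementation; a real one would call a weather service.
function getWeather({ city }) {
  return { city, forecast: "sunny" };
}

const localFunctions = { get_weather: getWeather };

// Executes a function call suggested by the model. The { name, arguments }
// shape (arguments as a JSON string) is assumed here for illustration.
function executeFunctionCall(call) {
  const fn = localFunctions[call.name];
  if (!fn) {
    throw new Error(`Unknown function: ${call.name}`);
  }
  // The serialized result is what the developer would return to the model.
  return JSON.stringify(fn(JSON.parse(call.arguments)));
}

// Stand-in for what a model might return when it decides to call the function.
const suggestedCall = {
  name: "get_weather",
  arguments: '{"city":"San Francisco"}',
};
const functionResult = executeFunctionCall(suggestedCall);
console.log(functionResult);

// Built-in tool: the request only names the tool; there is no local step.
const builtInTools = [{ type: "web_search" }];
console.log(builtInTools);
```

Everything in the first half of the sketch is code the developer has to write, deploy, and maintain. With a built-in tool, that half disappears and only the one-line tool declaration remains.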

This difference makes built-in tools particularly valuable in DevOps environments where reducing infrastructure complexity and deployment overhead is a priority.

The 6 Built-in Tools Available for Developers

OpenAI currently offers six powerful built-in tools that developers can leverage in their applications:

1. Web Search

One of the most significant limitations of language models is their knowledge cutoff date. At the time of writing, the cutoff cited for current OpenAI models is May 2024, though the exact date varies by model. Web Search overcomes this limitation by letting models search the internet for up-to-date information, so they can account for current events and recent developments without developers having to build web scraping or API integration systems.

2. File Search

File Search enables models to search through a developer's knowledge base or database of files. This implements Retrieval Augmented Generation (RAG) functionality, allowing models to access and use internal knowledge that isn't in their training data. This is particularly valuable for organizations with proprietary information that needs to be accessible to their AI applications.
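As a rough sketch of what enabling File Search might look like, the helper below builds a request body that points the model at a store of uploaded files. The field names (`file_search`, `vector_store_ids`) reflect the Responses API as understood at the time of writing and should be checked against the current documentation; the helper name, the model name, and the ID `vs_example123` are placeholders invented for this example.

```javascript
// Builds the body of a request that lets the model search uploaded files.
// Field names are assumptions based on the Responses API at the time of
// writing; verify them against the current API reference.
function buildFileSearchRequest(question, vectorStoreIds) {
  if (vectorStoreIds.length === 0) {
    throw new Error("File Search needs at least one vector store ID");
  }
  return {
    model: "gpt-4.1", // assumed model name
    input: question,
    tools: [{ type: "file_search", vector_store_ids: vectorStoreIds }],
  };
}

const fileSearchRequest = buildFileSearchRequest(
  "What is our refund policy?",
  ["vs_example123"] // hypothetical ID of a store holding internal documents
);
console.log(JSON.stringify(fileSearchRequest, null, 2));
```

The resulting object is the kind of payload that would be passed to the API client. Retrieval and ranking then happen on OpenAI's side rather than in the application.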

3. MCP Tool

The Model Context Protocol (MCP) tool is exceptionally powerful as it's not just one tool but a gateway to hundreds of tools. It allows models to access any remote MCP server, enabling developers to define how language models can interact with their own functions and APIs through a standardized protocol.
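A minimal sketch of an MCP tool entry follows. The field names (`server_label`, `server_url`, `require_approval`, `allowed_tools`) are assumptions based on the Responses API at the time of writing, and the helper name, server URL, and tool names are hypothetical placeholders rather than a real service.

```javascript
// Describes a remote MCP server the model is allowed to call.
// Field names are assumed from the Responses API at the time of writing.
function buildMcpTool(label, url, allowedTools = []) {
  const tool = {
    type: "mcp",
    server_label: label,
    server_url: url,
    // Ask for confirmation before each call; a cautious choice for this sketch.
    require_approval: "always",
  };
  // Optionally restrict the model to a subset of the server's tools.
  if (allowedTools.length > 0) {
    tool.allowed_tools = allowedTools;
  }
  return tool;
}

const mcpTool = buildMcpTool(
  "internal_tickets", // hypothetical label
  "https://mcp.example.com/mcp", // hypothetical server URL
  ["search_tickets", "create_ticket"] // hypothetical tool names
);
console.log(JSON.stringify(mcpTool, null, 2));
```

Restricting `allowed_tools` is a design choice worth considering: a single MCP server can expose many tools, and limiting the list keeps the model's options and the request context small.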

4. Code Interpreter

The Code Interpreter tool enables models to write and execute code to solve problems. This is particularly useful for data analysis tasks. The code is executed on OpenAI's infrastructure, and the results, whether text or visualizations like charts, are returned to the user. This eliminates the need for developers to build their own code execution environments.
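A request that enables Code Interpreter might look like the sketch below. The `container: { type: "auto" }` setting, which asks the platform to provision the sandbox, is an assumption based on the Responses API at the time of writing; the model name and the prompt are placeholders.

```javascript
// Request body that lets the model write and run code in a hosted sandbox.
// The tool fields are assumed from the Responses API at the time of writing.
const codeInterpreterRequest = {
  model: "gpt-4.1", // assumed model name
  input: "Compute the mean and median of 3, 8, 15, 16, 23, 42.",
  tools: [{ type: "code_interpreter", container: { type: "auto" } }],
};
console.log(JSON.stringify(codeInterpreterRequest, null, 2));
```

Nothing in the request describes how the code is run; the developer states the problem and the sandbox is handled on OpenAI's side.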

5. Computer Use

Computer Use allows models to interact with computer interfaces visually, as if they were human users. Models can suggest actions like clicking buttons or scrolling down pages. Unlike other built-in tools, Computer Use requires developers to execute these actions in their own computer environment, making it a hybrid approach.
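Because the developer executes the suggested actions, a Computer Use integration needs some form of dispatcher on the application side. The sketch below shows one possible shape. The action fields (`type`, `x`, `y`, `scroll_x`, `scroll_y`) are assumed for illustration, and `createFakeEnvironment` is a hypothetical stand-in that only records what it is asked to do; a real environment would drive a browser or virtual machine.

```javascript
// Hypothetical environment that records actions instead of performing them.
function createFakeEnvironment() {
  const log = [];
  return {
    log,
    click: (x, y) => log.push(`click at (${x}, ${y})`),
    scroll: (dx, dy) => log.push(`scroll by (${dx}, ${dy})`),
  };
}

// Carries out one action suggested by the model. The action shapes are
// assumptions for this sketch, not a definitive schema.
function executeAction(env, action) {
  switch (action.type) {
    case "click":
      env.click(action.x, action.y);
      break;
    case "scroll":
      env.scroll(action.scroll_x, action.scroll_y);
      break;
    default:
      // Refuse anything the environment does not explicitly support.
      throw new Error(`Unsupported action: ${action.type}`);
  }
}

const env = createFakeEnvironment();
// Stand-ins for actions a model might suggest.
const suggestedActions = [
  { type: "click", x: 120, y: 48 },
  { type: "scroll", scroll_x: 0, scroll_y: 400 },
];
for (const action of suggestedActions) {
  executeAction(env, action);
}
console.log(env.log);
```

In a real loop, the application would typically report the new state of the screen back to the model after each action so that it can decide on the next one. Rejecting unknown action types by default keeps the model from triggering behavior the developer has not reviewed.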

6. Image Generation

While not covered extensively in this overview, Image Generation is another built-in tool that allows models to create images based on text descriptions, adding visual content capabilities to AI applications.

Implementing Built-in Tools in DevOps Workflows

For DevOps professionals, built-in tools offer several advantages in development workflows:

- Reduced infrastructure requirements as execution happens on OpenAI's servers

- Simplified integration process with no need for complex middleware

- Faster development cycles with ready-to-use capabilities

- Ability to quickly prototype and test AI features before committing to custom implementations

- Standardized approach to common AI enhancement needs like data retrieval and web search

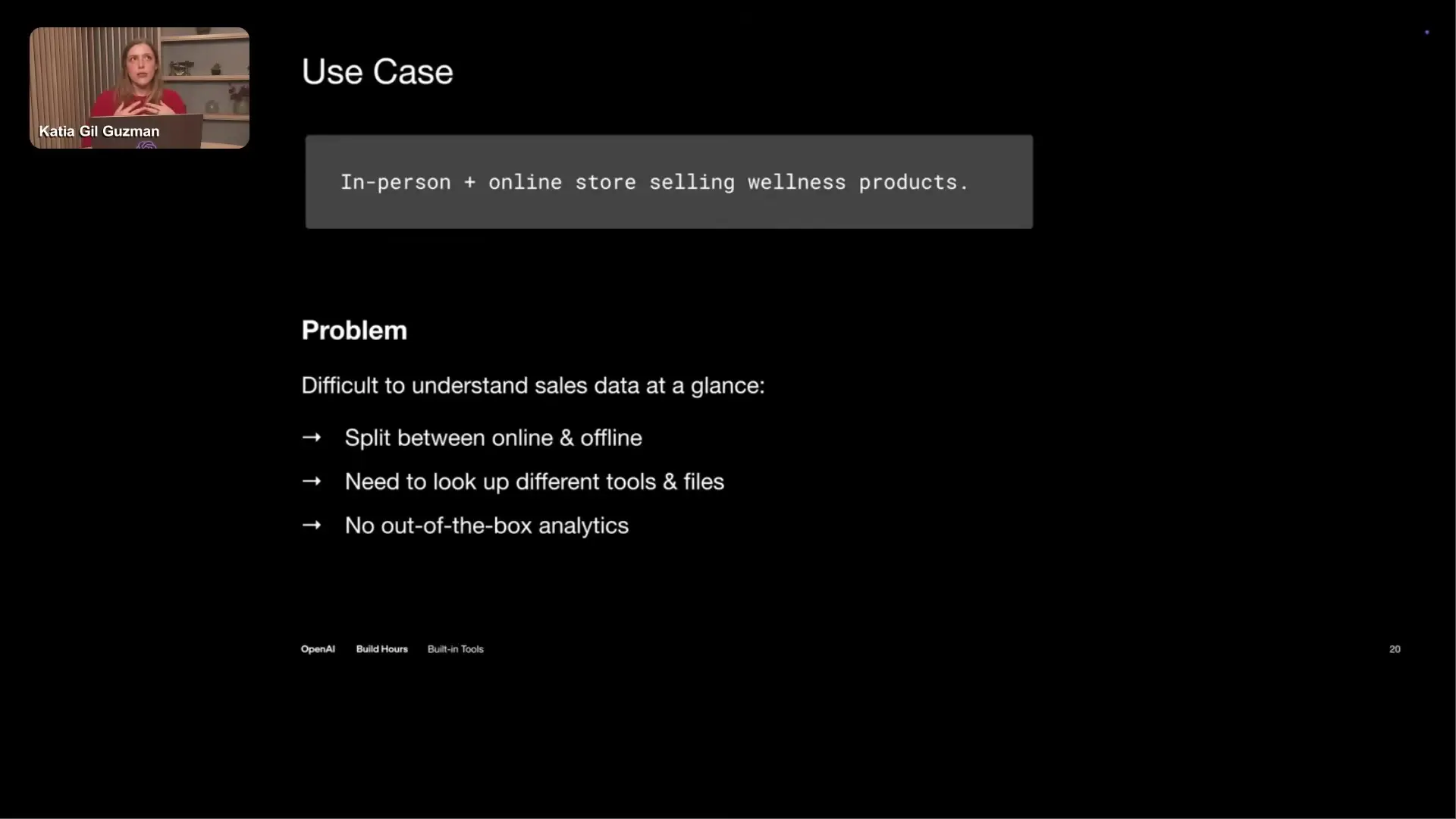

Practical Applications in Development

The built-in tools can be applied in various development scenarios:

- Creating data exploration dashboards using Code Interpreter for visualization

- Building knowledge bases with File Search for internal documentation

- Developing real-time information systems with Web Search

- Automating complex workflows through MCP Tool integration with existing services

- Prototyping AI assistants that can navigate user interfaces with Computer Use

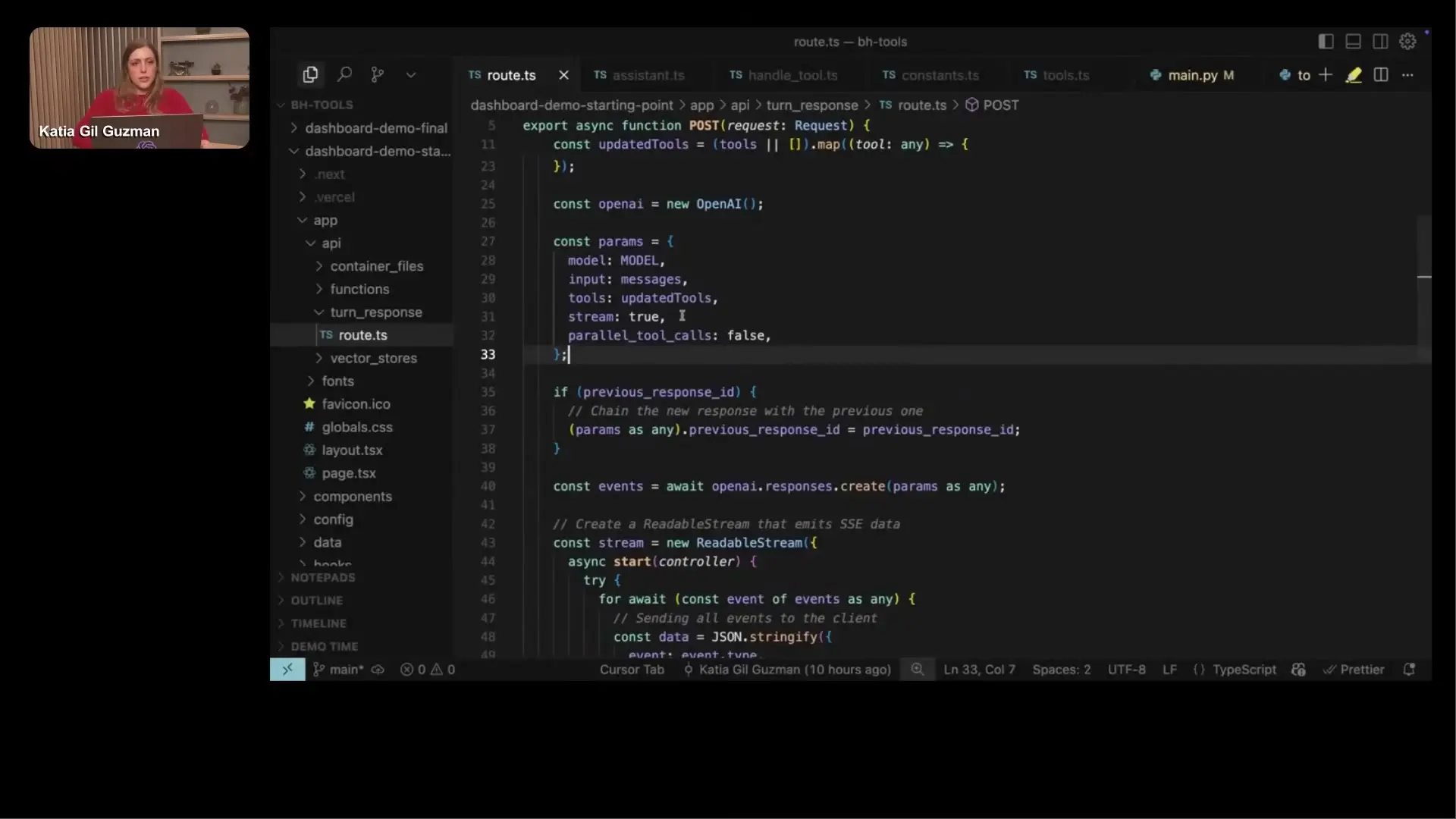

Getting Started with Built-in Tools

Developers looking to experiment with built-in tools can start with OpenAI's playground, which provides an environment for testing and iterating on these capabilities without writing code. The playground allows developers to select which tools to enable and test various prompts to see how the model uses the tools in different scenarios.

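// Example of enabling a built-in tool in an API call.
// This sketch uses the Responses API, where built-in tools are listed in the
// tools array. The model name is an assumption; older API versions named the
// tool "web_search_preview".
import OpenAI from "openai";

const openai = new OpenAI(); // reads OPENAI_API_KEY from the environment

const response = await openai.responses.create({
  model: "gpt-4.1",
  input: "What's the weather in San Francisco today?",
  tools: [{ type: "web_search" }]
});

console.log(response.output_text);

This simple implementation enables web search capabilities for a weather query, demonstrating how easily these tools can be integrated into applications.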

Conclusion

Built-in tools let developers enhance AI applications without writing complex integration code. Because the tools run on OpenAI's infrastructure, developers can quickly add capabilities like web search, file search, and code interpretation, and spend more time on their core business logic than on the underlying AI infrastructure.

As the ecosystem of built-in tools continues to expand, developers will have even more options for creating sophisticated AI applications with minimal overhead, making advanced AI capabilities more accessible to development teams of all sizes.
