Docker has revolutionized how developers build, ship, and run applications. If you're looking to enhance your engineering skills or need to use Docker at work, understanding its core concepts is essential. This guide walks through the concepts and commands you need to get started with Docker quickly and effectively.

What is Docker and Why Should You Use It?

In simple terms, Docker is a containerization platform (a lightweight form of virtualization) that makes developing and deploying applications significantly easier than traditional methods. Docker accomplishes this by packaging an application into what's called a container, which includes everything the application needs to run: the code itself, libraries, dependencies, runtime, and environment configuration.

This packaging approach solves several critical problems in software development and deployment:

- Eliminates the "it works on my machine" problem by ensuring consistent environments

- Standardizes the installation and configuration process across different operating systems

- Reduces setup time for new developers joining a project

- Simplifies the deployment process by packaging application and environment together

- Enables running multiple versions of the same service without conflicts

Docker vs. Virtual Machines: Understanding the Difference

While both Docker and virtual machines (VMs) are virtualization technologies, they work quite differently. To understand this difference, we need to look at how operating systems are structured.

An operating system consists of two main layers: the OS kernel (which communicates with hardware) and the OS applications layer. When you install a traditional VM, it includes both layers - a complete operating system with its own kernel. This makes VMs resource-intensive and slower to start.

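Docker containers, on the other hand, share the host system's kernel and only package the applications layer. (On macOS and Windows, Docker Desktop supplies the Linux kernel through a lightweight virtual machine that all containers share.) This makes Docker containers: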

- Much more lightweight (typically megabytes rather than gigabytes)

- Faster to start (typically seconds rather than minutes)

- More efficient with system resources

- Easier to distribute due to smaller size

The Traditional Development Workflow Problem

Before containers, setting up a development environment was often tedious and error-prone. Developers would need to install all required services (databases, caching systems, message brokers, etc.) directly on their operating systems.

This approach created several challenges:

- Installation processes differed across operating systems (Windows, macOS, Linux)

- Multiple manual steps increased the chance of errors

- Configuring services correctly was time-consuming

- Version conflicts between dependencies were common

- Onboarding new team members took significant time

How Docker Solves Development Environment Problems

With Docker, you don't install services directly on your operating system. Instead, each service is packaged in an isolated container with its specific version and configuration. As a developer, you simply run a single Docker command to fetch and start any service you need.

The benefits of this approach are substantial:

- The same Docker commands work across all operating systems

- Starting services requires just one command instead of multiple installation steps

- Services run in isolation, preventing version conflicts

- Environment setup becomes standardized for all team members

- You can run different versions of the same service simultaneously
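
To make the last point concrete, here is a minimal Python sketch that builds (but does not execute) the `docker run` commands for two versions of the same service. The helper `docker_run_args`, the container naming scheme, and the choice of `redis:6.2` and `redis:7.2` are assumptions made for illustration; `-d`, `--name`, and `-p` are standard `docker run` flags.

```python
def docker_run_args(image: str, tag: str, host_port: int, container_port: int) -> list[str]:
    """Build (but do not execute) a `docker run` command for one pinned version."""
    name = f"{image}-{tag}".replace(".", "-")  # hypothetical naming scheme
    return [
        "docker", "run",
        "-d",                                   # run in the background
        "--name", name,                         # a unique name per container
        "-p", f"{host_port}:{container_port}",  # a distinct host port per version
        f"{image}:{tag}",
    ]


# Two versions of the same service, side by side (illustrative values).
old = docker_run_args("redis", "6.2", 6379, 6379)
new = docker_run_args("redis", "7.2", 6380, 6379)

print(" ".join(old))
print(" ".join(new))
```

Both containers listen on the same port internally. Only the host ports differ, so the two versions do not collide.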

Streamlining Deployment with Docker

Docker also significantly improves the deployment process. Traditionally, development teams would produce application artifacts along with instructions for how to install and configure them. Operations teams would then follow these instructions to set up the application on servers.

This approach often led to miscommunication, configuration errors, and deployment failures. With Docker, developers package their application and its environment into a container image. Operations teams simply need to run that container, eliminating most configuration steps and reducing communication overhead.

Core Docker Concepts

To work effectively with Docker, you need to understand several key concepts:

Docker Images

A Docker image is a read-only template containing an application along with its dependencies and configuration. Think of it as a snapshot or blueprint of an application and its environment. Images are built from instructions in a Dockerfile and can be stored in registries.

Docker Containers

A container is a runnable instance of an image. When you start a container, Docker creates a writable layer on top of the immutable image, allowing the application to run in its isolated environment. Multiple containers can run from the same image, each operating independently.
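
The relationship between an immutable image and per-container writable layers can be modeled in a few lines of Python. This is only an analogy, assuming a made-up file listing and a hypothetical `start_container` helper. It does not reflect how Docker's storage drivers are actually implemented.

```python
from collections import ChainMap
from types import MappingProxyType

# The "image": a read-only mapping of file paths to contents (made-up data).
image = MappingProxyType({
    "/app/server.js": "v1 code",
    "/etc/app.conf": "port=3000",
})


def start_container(image_layer):
    """Return a new 'container': an empty writable layer stacked on the shared image."""
    return ChainMap({}, image_layer)


a = start_container(image)
b = start_container(image)

# Writes land in container a's own top layer, never in the image.
a["/etc/app.conf"] = "port=4000"
a["/tmp/cache"] = "scratch data"

print(a["/etc/app.conf"])      # container a sees its own change
print(b["/etc/app.conf"])      # container b still sees the image's version
print(image["/etc/app.conf"])  # the image itself is unchanged
```

Reads fall through to the image when a container has not modified a file, which is why many containers can share one image cheaply.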

Dockerfile

A Dockerfile is a text file containing instructions for building a Docker image. It specifies the base image, adds application code, installs dependencies, sets configuration options, and defines how the application should run.

FROM node:14

WORKDIR /app

COPY package*.json ./

RUN npm install

COPY . .

EXPOSE 3000

CMD ["npm", "start"]

Docker Registry

A Docker registry is a service for storing and distributing Docker images. Docker Hub is the default public registry, maintained by Docker, and contains thousands of pre-built images. Companies often maintain private registries for their proprietary images.
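
An image name such as `node:14` is shorthand for a full reference with a registry, a repository, and a tag. The Python sketch below approximates how the defaults are filled in: Docker Hub (`docker.io`) as the registry, the `library/` namespace for official images, and `latest` as the tag. The function `parse_image_ref` and the host `registry.example.com` are hypothetical, and the sketch deliberately ignores digest references (`@sha256:...`).

```python
from typing import NamedTuple

DEFAULT_REGISTRY = "docker.io"
DEFAULT_TAG = "latest"


class ImageRef(NamedTuple):
    registry: str
    repository: str
    tag: str


def parse_image_ref(ref: str) -> ImageRef:
    """Split an image reference into registry, repository, and tag (simplified)."""
    first, slash, rest = ref.partition("/")
    # The first path component is a registry host only if it looks like one.
    if slash and ("." in first or ":" in first or first == "localhost"):
        registry, remainder = first, rest
    else:
        registry, remainder = DEFAULT_REGISTRY, ref

    # A tag is whatever follows the last colon in the remaining name.
    name, colon, tag = remainder.rpartition(":")
    if not colon:
        name, tag = remainder, DEFAULT_TAG

    # Official Docker Hub images live under the "library/" namespace.
    if registry == DEFAULT_REGISTRY and "/" not in name:
        name = "library/" + name
    return ImageRef(registry, name, tag)


print(parse_image_ref("node"))
print(parse_image_ref("node:14.17.0"))
print(parse_image_ref("registry.example.com:5000/team/my-app:1.0.0"))
```

The first call shows why an untagged pull is ambiguous over time: it resolves to whatever the `latest` tag currently points at.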

Essential Docker Commands

Here are some fundamental Docker commands you'll use regularly:

- docker pull [image]: Download an image from a registry

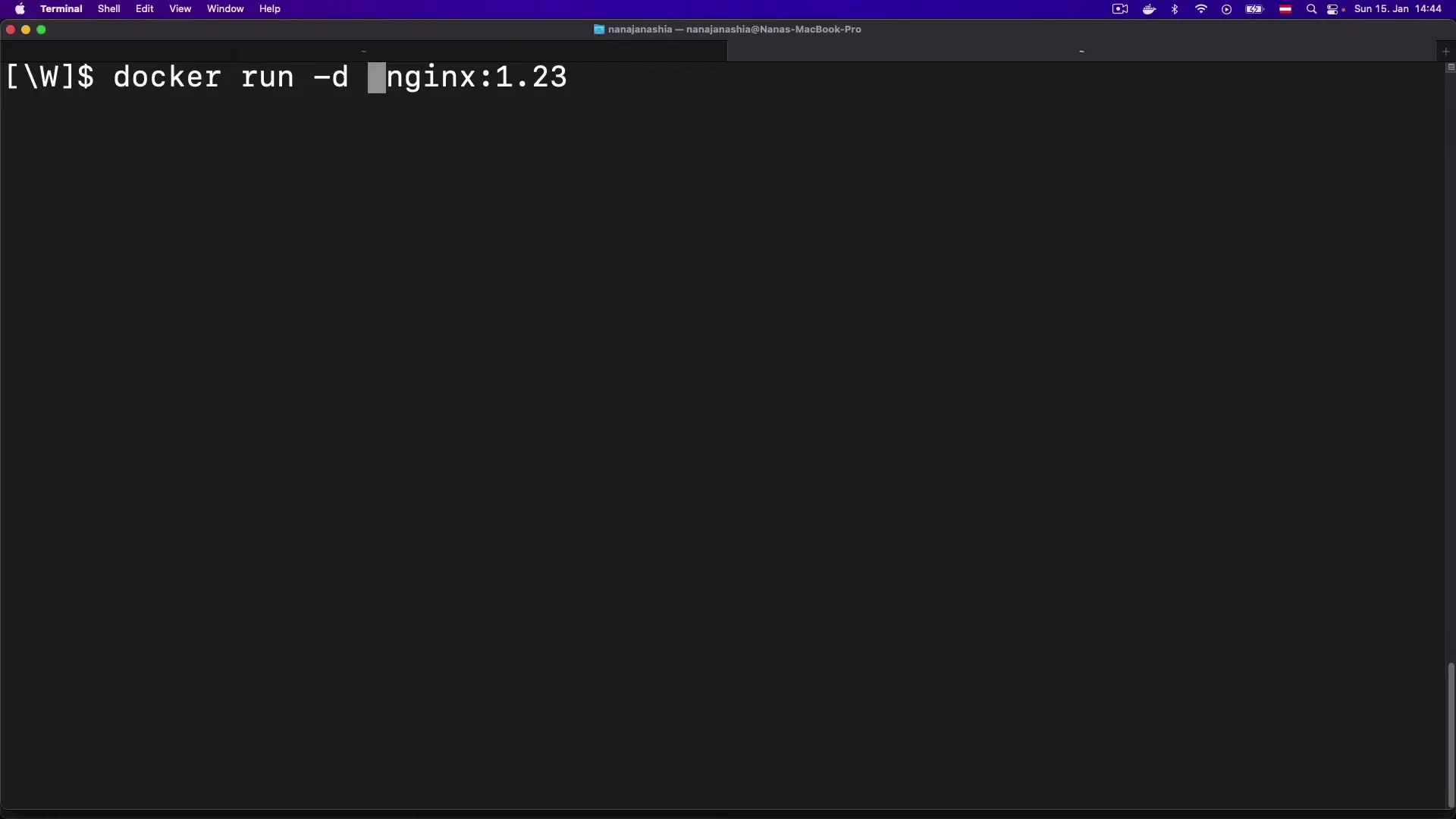

- docker run [image]: Create and start a container from an image

- docker ps: List running containers

- docker ps -a: List all containers (including stopped ones)

- docker images: List available images

- docker build -t [name:tag] .: Build an image from a Dockerfile in the current directory

- docker stop [container]: Stop a running container

- docker rm [container]: Remove a container

- docker rmi [image]: Remove an image
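
The commands above are usually run in a predictable order. The following Python sketch strings them into one lifecycle using a small hypothetical wrapper, `docker`, that defaults to a dry run and only returns the command line. The image `nginx:1.25` and the container name `web-demo` are illustrative choices, and `-d` and `--name` are standard `docker run` flags not covered in the list.

```python
import shlex
import subprocess


def docker(*args: str, dry_run: bool = True) -> str:
    """Build a docker CLI invocation; execute it only when dry_run is False."""
    cmd = ["docker", *args]
    if not dry_run:
        # Requires a local Docker installation; raises if the command fails.
        subprocess.run(cmd, check=True)
    return shlex.join(cmd)


IMAGE = "nginx:1.25"    # illustrative image and tag
CONTAINER = "web-demo"  # hypothetical container name

lifecycle = [
    docker("pull", IMAGE),                            # download the image
    docker("run", "-d", "--name", CONTAINER, IMAGE),  # create and start a container
    docker("ps"),                                     # confirm it is running
    docker("stop", CONTAINER),                        # stop it
    docker("rm", CONTAINER),                          # remove the stopped container
    docker("rmi", IMAGE),                             # remove the image
]

for line in lifecycle:
    print(line)
```

Note the ordering at the end: a container has to be removed before the image it was created from can be removed without forcing.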

Working with Port Binding

When running containers that provide services (like web servers or databases), you'll need to map container ports to host ports to access these services. This is done using the -p flag:

docker run -p 8080:3000 my-node-app

This command maps port 3000 inside the container to port 8080 on your host machine, allowing you to access the application at http://localhost:8080.
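
The order of the two numbers is easy to mix up: the host port comes first, the container port second. As a small sketch, the hypothetical Python helper `parse_port_mapping` below splits the simple `HOST:CONTAINER` form and derives the URL you would open. It does not handle the longer forms that `-p` also accepts, such as an IP prefix or a `/udp` suffix.

```python
from typing import NamedTuple


class PortMapping(NamedTuple):
    host_port: int
    container_port: int


def parse_port_mapping(spec: str) -> PortMapping:
    """Parse the simple HOST:CONTAINER form used with `docker run -p`."""
    host, sep, container = spec.partition(":")
    if not sep:
        raise ValueError(f"expected HOST:CONTAINER, got {spec!r}")
    # int() raises ValueError for anything beyond the simple two-number form.
    return PortMapping(int(host), int(container))


mapping = parse_port_mapping("8080:3000")
url = f"http://localhost:{mapping.host_port}"

print(mapping)
print(url)
```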

Image Versioning with Tags

Docker images use tags to identify different versions. When you don't specify a tag, Docker defaults to the tag named 'latest', which is only a conventional label and does not necessarily point to the newest release. For production use, it's best practice to use specific version tags to ensure consistency:

docker pull node:14.17.0

docker build -t my-app:1.0.0 .

Docker in the Software Development Lifecycle

Docker plays a crucial role throughout the software development lifecycle:

- Development: Provides consistent environments for all developers

- Testing: Ensures tests run in the same environment as production

- Continuous Integration: Enables reliable builds in CI pipelines

- Deployment: Simplifies deploying to various environments

- Production: Allows for efficient resource utilization and scaling

Next Steps in Your Docker Journey

Once you've mastered the basics of Docker, consider exploring these more advanced topics:

- Docker Compose for multi-container applications

- Docker networking concepts

- Docker volumes for persistent data

- Docker Swarm or Kubernetes for container orchestration

- CI/CD pipelines with Docker

- Security best practices for containers

Conclusion

Docker has transformed how we develop, ship, and run applications by solving critical problems in the software development and deployment process. By standardizing environments and simplifying configuration, Docker allows developers to focus more on writing code and less on environment setup. Whether you're just starting your development career or looking to enhance your DevOps skills, Docker is an essential technology worth mastering.

