Docker has been making significant strides in the AI space recently, introducing powerful features that aim to transform how developers work with machine learning models. With the release of Docker Desktop 4.40, the company unveiled its Model Runner feature, expanding Docker's footprint in the AI ecosystem. But the question on everyone's mind: can Docker's new AI capabilities genuinely replace specialized tools like Ollama for local LLM deployment and management?

Understanding Docker's Model Runner

Docker's Model Runner, introduced in Docker Desktop 4.40, represents a significant addition to Docker's toolkit. It lets developers pull AI models from Docker Hub and run them locally, so they can be prompted directly or integrated into applications. At launch, Model Runner was available on Apple Silicon Macs, with Windows support (on NVIDIA GPUs) following in a later Docker Desktop release.

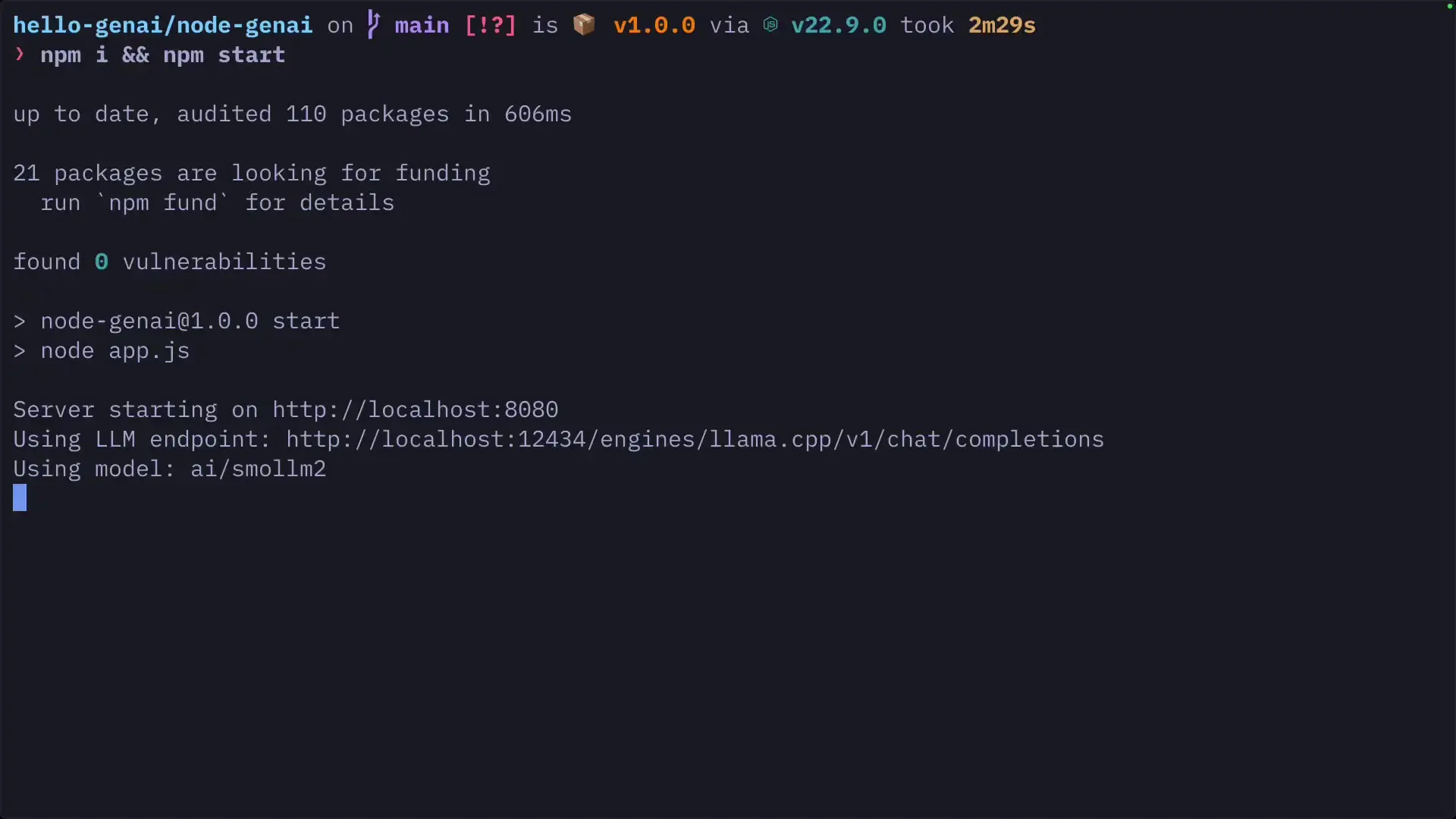

What makes Model Runner particularly interesting is how it exposes models through an OpenAI-compatible API endpoint. This design choice makes it remarkably straightforward to integrate with existing applications that already support OpenAI's API format.

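```shell
# Example of exposing a model through localhost with Docker Model Runner
docker model pull ai/smollm2                    # download a model from Docker Hub's ai/ namespace
docker desktop enable model-runner --tcp 12434  # expose Model Runner on localhost:12434
```

Once host-side TCP access is enabled, the model becomes accessible through a localhost endpoint (e.g. `http://localhost:12434/engines/llama.cpp/v1/chat/completions`) using a familiar OpenAI-style API format. This compatibility layer significantly reduces the learning curve for developers already working with AI model APIs.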
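To make the OpenAI-compatible claim concrete, here is a minimal standard-library Python sketch that builds a chat-completions request for Model Runner's host endpoint. It assumes host-side TCP access is enabled on port 12434, that `ai/smollm2` has been pulled, and that the `/engines/llama.cpp/v1` path from Docker's documentation applies to your version; the helper names are hypothetical.

```python
import json
import urllib.request

# Assumed host-side endpoint; adjust if your Docker Desktop version differs.
BASE_URL = "http://localhost:12434/engines/llama.cpp/v1"


def build_chat_request(prompt: str, model: str = "ai/smollm2") -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completions request."""
    body = {
        "model": model,
        "messages": [
            {"role": "system", "content": "You are a helpful assistant."},
            {"role": "user", "content": prompt},
        ],
    }
    return urllib.request.Request(
        url=f"{BASE_URL}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send_chat(req: urllib.request.Request, timeout: float = 60.0) -> str:
    """Send the request and return the assistant's reply (needs a running Model Runner)."""
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        reply = json.load(resp)
    return reply["choices"][0]["message"]["content"]
```

Because the payload is plain OpenAI format, existing OpenAI client code usually only needs its base URL changed to target Model Runner.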

Docker Model Runner vs. Ollama: A Detailed Comparison

While Docker's Model Runner offers compelling features, it's essential to evaluate how it stacks up against Ollama, a popular tool specifically designed for running LLMs locally. Let's break down the key differences:

Model Selection and Availability

One significant limitation of Docker's Model Runner is its model catalog. Docker Hub's `ai/` namespace currently offers far fewer models than Ollama's extensive library. For developers who need access to a wide range of specialized or cutting-edge models, this could be a decisive factor in favor of Ollama.

User Interface and Experience

Ollama, together with the mature front ends built around it (such as the community project Open WebUI), offers a more polished, user-friendly experience designed specifically for interacting with LLMs. Docker's interface, while functional, doesn't match that purpose-built design. For users who prioritize a streamlined UI for model interaction, Ollama maintains a clear advantage.

Integration with Development Workflows

Where Docker Model Runner shines is in its natural integration with existing Docker-based development workflows. For teams already using Docker extensively, having AI models running in the same containerized environment as their applications offers significant advantages in terms of consistency and deployment simplicity.

- Docker Model Runner: Better integration with containerized applications

- Ollama: Superior dedicated UI for model interaction

- Docker Model Runner: OpenAI-compatible API endpoints

- Ollama: Larger model selection

- Docker Model Runner: Unified development and AI environment
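Part of the integration story is that containers can reach Model Runner without publishing a port: Docker's documentation describes the internal hostname `model-runner.docker.internal` for calls made from inside containers, while host processes use the TCP port. The sketch below picks a base URL accordingly. The `MODEL_RUNNER_URL` variable and the `/.dockerenv` check are assumptions for illustration, not part of Docker's API.

```python
import os
from typing import Mapping, Optional

# Endpoints as described in Docker's Model Runner docs (verify for your version).
HOST_URL = "http://localhost:12434/engines/llama.cpp/v1"
CONTAINER_URL = "http://model-runner.docker.internal/engines/llama.cpp/v1"


def model_runner_base_url(
    env: Optional[Mapping[str, str]] = None,
    in_container: Optional[bool] = None,
) -> str:
    """Return the OpenAI-compatible base URL for Docker Model Runner.

    An explicit MODEL_RUNNER_URL (hypothetical variable name) wins; otherwise
    use the internal hostname inside containers and localhost on the host.
    """
    env = os.environ if env is None else env
    override = env.get("MODEL_RUNNER_URL")
    if override:
        return override.rstrip("/")
    if in_container is None:
        # Common heuristic: Docker creates /.dockerenv in container filesystems.
        in_container = os.path.exists("/.dockerenv")
    return CONTAINER_URL if in_container else HOST_URL
```

The override also makes side-by-side testing easy: pointing it at Ollama's OpenAI-compatible endpoint (`http://localhost:11434/v1`) lets the same client code talk to either tool.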

Gordon AI Agent: Docker's Secret Weapon

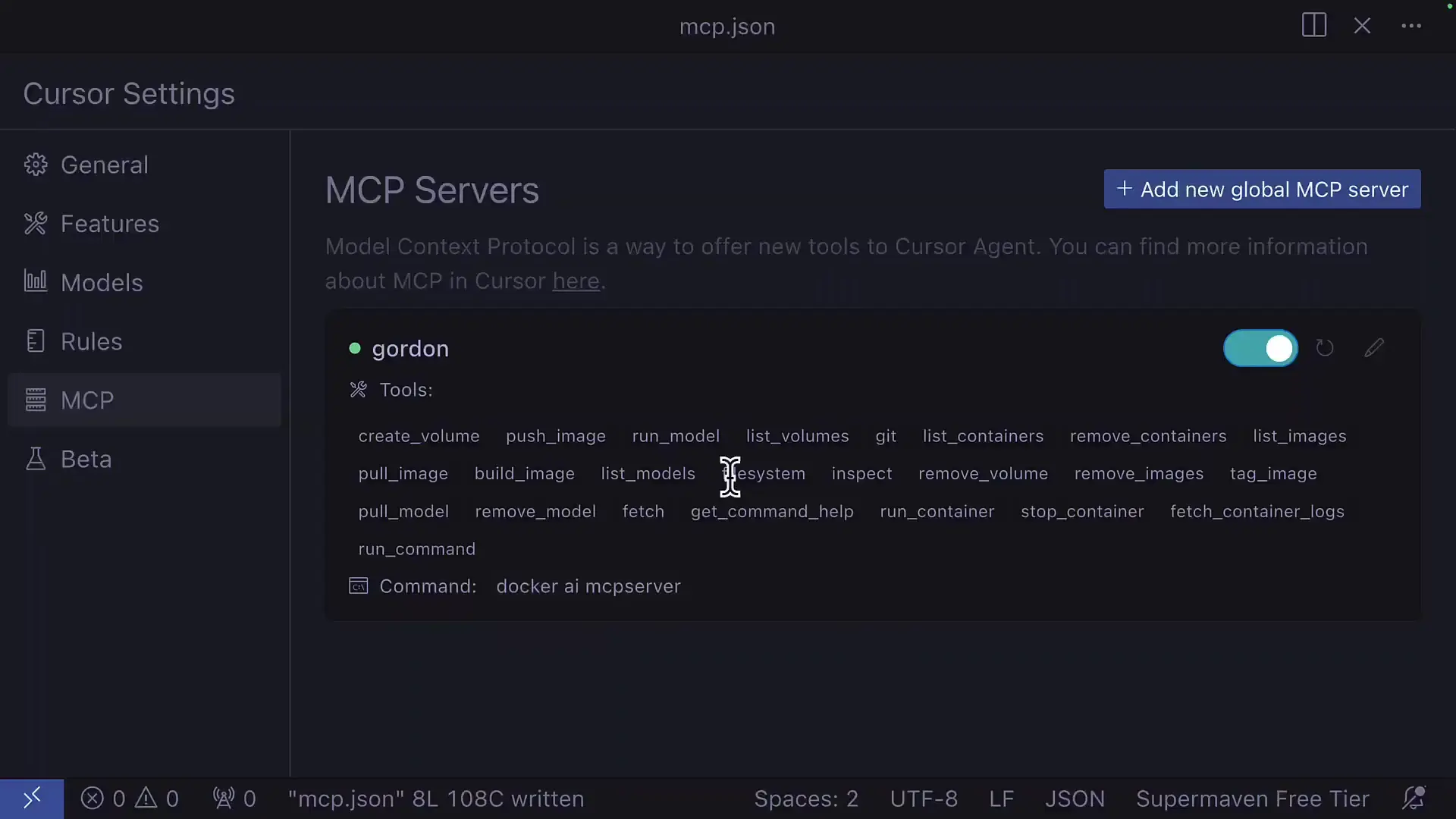

While Model Runner might not yet outshine Ollama in all aspects, Docker's Gordon AI agent represents a genuine innovation that could tip the scales in Docker's favor for many developers. Gordon is an AI assistant specialized in containerization tasks, capable of automatically containerizing applications, evaluating Dockerfiles, and performing various Docker and Kubernetes operations.

Gordon's capabilities extend beyond basic containerization through its toolbox, which allows it to perform operations like fetching URLs and accessing the file system. This extensibility makes it a powerful assistant for DevOps and containerization tasks.

MCP Support: The Game-Changing Feature

What truly distinguishes Gordon is its Model Context Protocol (MCP) support. Through Docker's MCP catalog on Docker Hub, Gordon can use more than a hundred containerized MCP servers, from Notion to Redis to Azure, extending its capabilities well beyond basic containerization.
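Under the hood, MCP is a JSON-RPC 2.0 protocol: a client such as Gordon discovers a server's tools with `tools/list` and invokes one with `tools/call`. The sketch below only builds and serializes those messages for the stdio transport; a real session starts with an `initialize` handshake (omitted here), and the `fetch` tool name and its `url` argument are illustrative assumptions, since each MCP server defines its own tools.

```python
import itertools
import json
from typing import Any, Dict, Optional

# JSON-RPC request ids must be unique within a session; a counter is enough here.
_ids = itertools.count(1)


def jsonrpc_request(method: str, params: Optional[Dict[str, Any]] = None) -> Dict[str, Any]:
    """Build a JSON-RPC 2.0 request object, the message format MCP uses."""
    msg: Dict[str, Any] = {"jsonrpc": "2.0", "id": next(_ids), "method": method}
    if params is not None:
        msg["params"] = params
    return msg


def list_tools() -> Dict[str, Any]:
    """Ask an MCP server which tools it offers."""
    return jsonrpc_request("tools/list")


def call_tool(name: str, arguments: Dict[str, Any]) -> Dict[str, Any]:
    """Invoke one tool by name with JSON arguments."""
    return jsonrpc_request("tools/call", {"name": name, "arguments": arguments})


def encode_stdio(msg: Dict[str, Any]) -> bytes:
    """Serialize for the stdio transport: one JSON object per line, no embedded newlines."""
    return (json.dumps(msg) + "\n").encode("utf-8")
```

Because every server speaks this same message shape, adding a new capability to Gordon is a matter of running another MCP server container rather than writing new integration code.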

The ability to configure MCP servers with a Docker Compose file creates powerful collaboration possibilities. Teams can commit the Compose file Gordon reads (`gordon-mcp.yml`) to their repositories, so everyone working on a project has exactly the same MCP servers available. Because those servers run as ordinary containers, the same definitions can also back other AI assistants such as Cursor, Windsurf, or Copilot.

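```yaml
# Example gordon-mcp.yml: a Docker Compose file Gordon reads from the working directory;
# each service is an MCP server (images from Docker Hub's mcp/ namespace)
services:
  time:
    image: mcp/time          # time and timezone tools
  fs:
    image: mcp/filesystem    # file-system tools
    command:
      - /rootfs              # directory the server is allowed to access
    volumes:
      - ./project:/rootfs    # expose the project directory to the server
```

Docker Model Runner Performance and Efficiency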

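When evaluating Docker Model Runner against Ollama, performance considerations play a crucial role. Comprehensive benchmarks comparing the two are still emerging. Model Runner uses llama.cpp as its inference engine, the same engine family Ollama has historically built on, and on macOS it runs that engine natively on the host rather than inside a container so it can use the Apple Silicon GPU. Raw inference speed may therefore be similar, while Docker's containerization expertise could still give it an edge in resource management and deployment efficiency.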

Docker has demonstrated impressive capabilities in optimizing container sizes, in some cases reducing image sizes from over 1GB to just 10MB. This optimization expertise could translate to more efficient AI model serving, which matters most for resource-constrained environments or edge deployments, although for LLMs the model weights themselves, often several gigabytes, still dominate the footprint.
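Until independent benchmarks mature, one simple way to compare the two tools on your own hardware is to send the same prompt to each OpenAI-compatible endpoint and compute generated tokens per second from the response's `usage` field. The helpers below do that arithmetic; they assume the server populates `usage.completion_tokens`, and because wall-clock timing includes prompt processing, the result is only a rough end-to-end figure.

```python
import time
from typing import Callable, Dict, Tuple


def tokens_per_second(response: Dict, elapsed_s: float) -> float:
    """Completion tokens generated per wall-clock second."""
    if elapsed_s <= 0:
        raise ValueError("elapsed time must be positive")
    return response["usage"]["completion_tokens"] / elapsed_s


def timed(call: Callable[[], Dict]) -> Tuple[Dict, float]:
    """Run a request function and return (response, elapsed seconds)."""
    start = time.perf_counter()
    response = call()
    return response, time.perf_counter() - start
```

For a fair comparison, point `call` at Model Runner (`http://localhost:12434/engines/llama.cpp/v1`) and at Ollama (`http://localhost:11434/v1`) with the same model family, quantization, and prompt, and repeat several runs, since the first request also pays the model-loading cost.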

Use Cases: When to Choose Docker Model Runner vs. Ollama

Ideal Scenarios for Docker Model Runner

- Teams already heavily invested in Docker infrastructure

- Projects requiring tight integration between applications and AI models

- Developers seeking unified management of both application containers and AI models

- Organizations that value Docker's enterprise-grade security and management features

- Teams that would benefit from Gordon AI's containerization assistance

Scenarios Where Ollama Still Leads

- Users requiring access to the widest possible range of LLMs

- Scenarios where a specialized, user-friendly interface for model interaction is paramount

- Lighter-weight deployments focused exclusively on LLM serving

- Users who don't need the broader Docker ecosystem integration

- Environments where Ollama's specific performance characteristics are preferred

Setting Up Docker Model Runner: A Quick Tutorial

Getting started with Docker Model Runner is straightforward, especially for those already familiar with Docker. Here's a basic tutorial to help you deploy your first AI model using Docker Model Runner:

- Ensure you have Docker Desktop 4.40 or later installed

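- Open Docker Desktop and make sure Docker Model Runner is enabled in Settings (it shipped in 4.40 as a beta feature)

- Select a model from Docker Hub's `ai/` namespace (for example, `ai/smollm2`)

- Pull the model using the Docker CLI (`docker model pull ai/smollm2`) or the Desktop interface

- Enable host-side TCP access so the model is reachable on localhost (e.g. `docker desktop enable model-runner --tcp 12434`); for a quick interactive test, `docker model run ai/smollm2` opens a chat session in the terminal

- Connect to the model via localhost:12434 (e.g. `http://localhost:12434/engines/llama.cpp/v1/chat/completions`) using the OpenAI-compatible API format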

- Integrate with your application using standard API calls

This simplicity of deployment is one of Docker Model Runner's strengths, particularly for developers already comfortable with Docker commands and concepts.
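Before an application sends its first chat request, it can confirm that the pulled model is actually being served by querying the OpenAI-style `GET /models` endpoint. This standard-library sketch assumes the host endpoint on port 12434 and that model ids are listed as Docker-style references; treating an untagged name as `:latest` mirrors Docker's image convention and is an assumption here. Only `fetch_models` needs a live server.

```python
import json
import urllib.request
from typing import Dict, List

BASE_URL = "http://localhost:12434/engines/llama.cpp/v1"  # assumed host endpoint


def model_ids(models_response: Dict) -> List[str]:
    """Extract model ids from an OpenAI-style GET /models response."""
    return [m["id"] for m in models_response.get("data", [])]


def _normalize(ref: str) -> str:
    # Treat an untagged reference as ":latest" (tag is after the last "/").
    return ref if ":" in ref.rsplit("/", 1)[-1] else f"{ref}:latest"


def has_model(models_response: Dict, name: str) -> bool:
    """True if `name` appears in the listing, ignoring an implicit :latest tag."""
    listed = {_normalize(mid) for mid in model_ids(models_response)}
    return _normalize(name) in listed


def fetch_models(base_url: str = BASE_URL) -> Dict:
    """Query the live endpoint (requires Model Runner with TCP access enabled)."""
    with urllib.request.urlopen(f"{base_url}/models", timeout=10) as resp:
        return json.load(resp)
```

Checking `has_model(fetch_models(), "ai/smollm2")` at startup gives a clearer error than a failed completion when the model has not been pulled.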

The Future of Docker's AI Ecosystem in 2025

Looking ahead to 2025, Docker's AI strategy appears poised for significant expansion. The combination of Model Runner for local AI deployment and Gordon AI agent for containerization assistance represents just the beginning of Docker's AI ambitions.

We can anticipate several developments in Docker's AI ecosystem:

- Expanded model catalog to rival specialized platforms like Ollama

- Enhanced integration between Gordon AI and Model Runner

- More sophisticated MCP servers and tooling for AI-assisted DevOps

- Improved performance optimizations specifically for AI workloads

- Greater enterprise features for managing AI models at scale

Conclusion: Has Docker Model Runner Destroyed Ollama?

In its current state, Docker Model Runner hasn't "destroyed" Ollama, but it has created a compelling alternative for specific use cases. The answer to whether Docker can replace Ollama depends largely on your specific needs and existing workflow.

For developers deeply embedded in the Docker ecosystem who want to run AI models alongside their containerized applications, Docker Model Runner offers a natural, integrated solution. The addition of Gordon AI with its impressive MCP support creates a powerful combination for DevOps-focused teams.

However, Ollama still maintains advantages in model selection and specialized UI. Rather than viewing these tools as direct competitors, it may be more productive to see them as complementary solutions serving different segments of the AI deployment spectrum.

As Docker continues to invest in its AI capabilities, the gap between these solutions will likely narrow. For now, the best choice depends on your specific requirements, existing infrastructure, and whether you value Docker's unified container management approach or Ollama's specialized focus on local LLM deployment.

