Docker containers are like perfectly packed suitcases for your code. Everything your application needs for its journey is in there - nothing more, nothing less. But the magic of Docker isn't just in the technology; it's in how it transforms deployment into something as simple as sharing a recipe. This is why Docker has become a standard technology in modern software development and deployment.

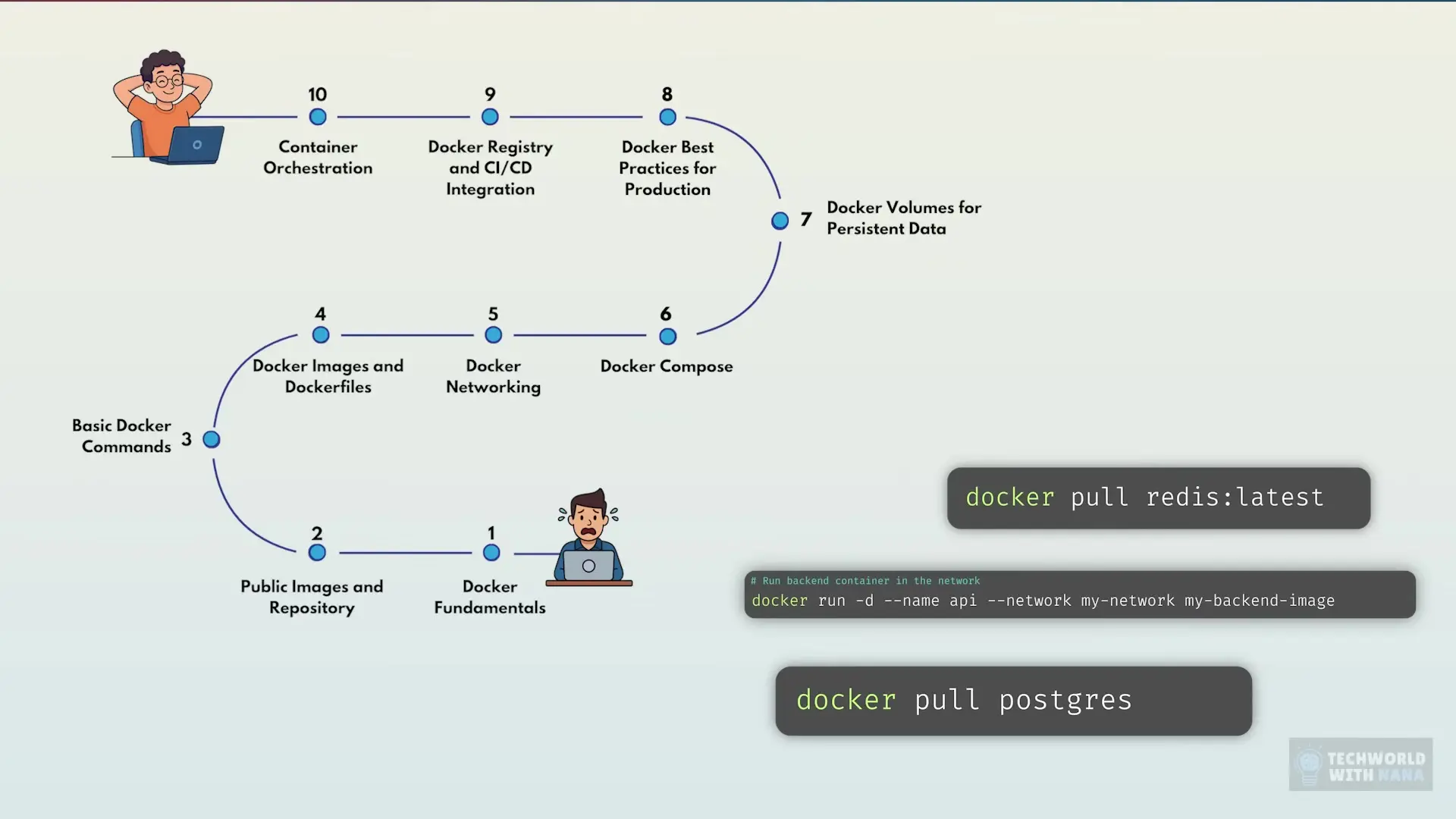

For beginners, however, Docker can seem overwhelming. It's hard to know where to start, what to focus on, and how to progress in a structured way. This comprehensive Docker roadmap will guide you from absolute beginner to confidently using Docker in your projects, breaking down exactly what you need to learn and in which order.

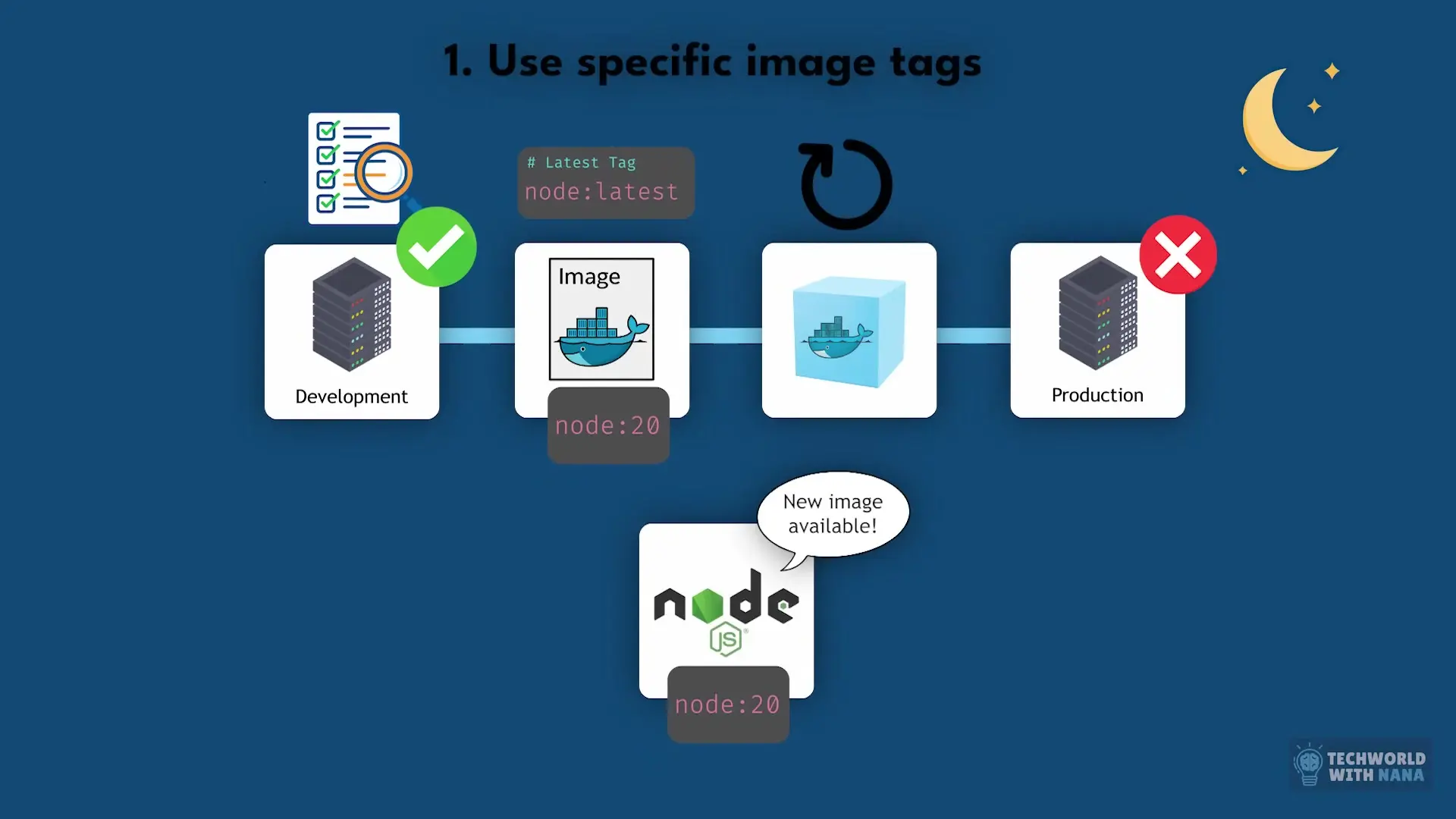

Step 1: Understand What Docker Solves

Before diving into Docker commands and creating images, you need to understand the fundamental problem Docker solves: the infamous "it works on my machine" syndrome.

Imagine you're working on a Node.js application. On your local development machine, you have Node version 14 installed, but the production server runs version 16. Your application works perfectly locally but breaks when deployed to production because of this version mismatch.

Docker solves this by packaging your application together with its runtime, dependencies, and configuration into a single image. Every container started from that image runs the same way wherever Docker is installed, which keeps development, testing, and production environments consistent.
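One way to see this for yourself, sketched under the assumption that Docker is installed and can pull the official `node` image from Docker Hub: the helper below (the function name is illustrative, not part of Docker) prints the Node.js version bundled with a given image tag, regardless of which Node version is installed on the host.

```shell
# Illustrative helper (not a Docker command): print the Node.js version
# that ships inside the official node image for the given tag.
node_version_for_tag() {
  # --rm removes the throwaway container as soon as the command exits
  docker run --rm "node:$1" node --version
}
```

Calling `node_version_for_tag 14` and then `node_version_for_tag 16` on the same machine should report two different major versions, which is the kind of isolation that keeps a laptop and a production server in agreement.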

Step 2: Leverage Pre-built Docker Images

One of Docker's most powerful aspects is that you don't need to create images for common services yourself. Almost every major software service already has an official Docker image available.

- Databases: MySQL, PostgreSQL, MongoDB, Redis, Elasticsearch

- Message brokers: RabbitMQ, Kafka

- Web servers: Nginx, Apache

These images are maintained by the software vendors themselves or by the Docker community and are stored in registries, with Docker Hub being the most popular public one. Think of Docker Hub as GitHub, but for container images instead of code.

This means anyone can pull these images and use them without needing to know how to configure the services from scratch. For example, instead of installing PostgreSQL on your machine with all the OS-specific configuration headaches, you can simply pull the PostgreSQL image and have a working database running in seconds with a single Docker command.
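As a rough sketch of that single command, assuming the official `postgres:13` image and that port 5432 is free on your machine; the function name, container name, and password are placeholders chosen for illustration:

```shell
# Illustrative helper: start a disposable PostgreSQL container.
#   $1 = container name, $2 = superuser password (both chosen by you)
start_postgres() {
  # -d runs in the background; -e sets the password the image requires;
  # -p publishes the database port on the host
  docker run -d \
    --name "$1" \
    -e POSTGRES_PASSWORD="$2" \
    -p 5432:5432 \
    postgres:13
}
```

After `start_postgres dev-db mysecretpassword`, a client on the host should be able to connect to `localhost:5432` as the default `postgres` user.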

Step 3: Master Basic Docker Commands

Once you understand why Docker exists and why it is so widely adopted, you can start practicing right away with the basic Docker commands, using existing images from public repositories.

- Pull an image: `docker pull [image-name]:[tag]`

- Run a container: `docker run -p [host-port]:[container-port] [image-name]`

- List running containers: `docker ps`

- Stop a container: `docker stop [container-id]`

- Remove a container: `docker rm [container-id]`

- List downloaded images: `docker images`

Start with simple public images like Nginx or Redis. They're lightweight and easy to validate. Try pulling them, running them, and interacting with them to get hands-on practice with these fundamental commands in a real context.

```shell
# Pull the latest Nginx image
docker pull nginx:latest

# Run Nginx and map port 8080 on your machine to port 80 in the container
docker run -d -p 8080:80 nginx:latest

# Check that the container is running
docker ps

# Stop the container
docker stop [container-id]

# Remove the container
docker rm [container-id]
```

Step 4: Create Your Own Docker Images with Dockerfiles

After mastering the basics of using existing Docker images, the next step is learning how to build your own images. This is where Docker becomes truly powerful for your specific applications.

A Dockerfile is a blueprint for your Docker image, like a recipe that tells Docker how to build it. Here's an example of a basic Dockerfile for a Node.js application:

```dockerfile
# Base image
FROM node:14

# Set working directory
WORKDIR /app

# Copy package.json and package-lock.json
COPY package*.json ./

# Install dependencies
RUN npm install

# Copy application code
COPY . .

# Expose port
EXPOSE 3000

# Start the application
CMD ["npm", "start"]
```

This Dockerfile contains the essential directives that make up most Dockerfiles:

- FROM: Specifies the base image to build upon

- WORKDIR: Sets the working directory inside the container

- COPY: Copies files from your host into the image

- RUN: Executes commands during the build and saves the result in the image

- EXPOSE: Documents which ports the container will listen on; it does not publish them, which is what `-p` on `docker run` is for

- CMD: Specifies the command to run when the container starts

To build and run your custom image, use these commands:

```shell
# Build the image
docker build -t my-node-app .

# Run the container
docker run -p 3000:3000 my-node-app
```

The best way to learn is by doing. Take a simple application you already have (or create a basic one) and try to containerize it. Through this process, you'll naturally learn what each command does and why it's necessary in context.
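If you don't have an application at hand, the sketch below writes a minimal one that fits the Dockerfile from this step. The helper name, the file contents, and the greeting text are all made up for illustration; the only assumptions carried over from the article are the `npm start` command and port 3000.

```shell
# Illustrative helper: write a minimal Node.js app into the directory $1.
scaffold_demo_app() {
  mkdir -p "$1" || return 1

  # package.json defines the "start" script that CMD ["npm", "start"] runs
  cat > "$1/package.json" <<'EOF'
{
  "name": "my-node-app",
  "version": "1.0.0",
  "private": true,
  "scripts": { "start": "node server.js" }
}
EOF

  # server.js answers every request on port 3000, matching EXPOSE 3000
  cat > "$1/server.js" <<'EOF'
const http = require('http');

http
  .createServer((req, res) => res.end('Hello from a container\n'))
  .listen(3000, () => console.log('Listening on port 3000'));
EOF
}
```

Run `scaffold_demo_app my-node-app`, save the Dockerfile from this step into that directory, and then run the build and run commands from inside it.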

Step 5: Understand Docker Networking

While Dockerfiles are excellent for defining single containers, real-world applications typically require multiple containers working together. For example, a web application might need separate containers for the frontend, backend API, database, and caching service.

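Docker networking allows containers to communicate with each other using just the container names as hostnames. This name-based lookup works on user-defined networks like the one created below, not on the default bridge network. It is particularly important in a microservices architecture.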

```shell
# Create a network
docker network create my-app-network

# Run containers in the network
docker run -d --name frontend --network my-app-network frontend-image
docker run -d --name api --network my-app-network api-image
```

In this example, the frontend container can communicate with the backend container using "api" as the hostname because they're in the same Docker network.
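A quick way to check that name resolution works, sketched with a throwaway container from the official `alpine` image (an assumption here, chosen because it ships a `ping` command); the helper name is illustrative:

```shell
# Illustrative helper: ping hostname $2 once from a temporary container
# attached to network $1. Succeeds only if the name resolves and answers.
can_reach() {
  docker run --rm --network "$1" alpine ping -c 1 "$2"
}
```

With the containers above running, `can_reach my-app-network api` should succeed, while the same check against a name that is not on the network should fail.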

Step 6: Simplify Multi-Container Applications with Docker Compose

As you work with multiple containers, typing out Docker commands repeatedly becomes cumbersome and error-prone. Docker Compose solves this by allowing you to define multi-container applications in a single YAML file.

Here's an example docker-compose.yml file for a web application with a frontend, backend, and database:

```yaml
version: '3'

services:
  frontend:
    build: ./frontend
    ports:
      - "3000:3000"
    depends_on:
      - api

  api:
    build: ./backend
    ports:
      - "5000:5000"
    environment:
      - DB_HOST=database
      - DB_PORT=5432
    depends_on:
      - database

  database:
    image: postgres:13
    volumes:
      - postgres-data:/var/lib/postgresql/data
    environment:
      - POSTGRES_PASSWORD=mysecretpassword
      - POSTGRES_USER=myuser
      - POSTGRES_DB=mydb

volumes:
  postgres-data:
```

With this configuration, you can start all three containers with a single command:

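```shell
docker-compose up
```

Docker Compose automatically creates a network for the containers, starts them in the order given by depends_on, and manages the named volume for persistent data. Keep in mind that depends_on, as written here, only waits for a container to start, not for the service inside it to be ready to accept connections. Even so, this dramatically simplifies the development workflow for multi-container applications.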
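Beyond `up`, a few other Compose subcommands cover most of the day-to-day workflow. The wrappers below are only a sketch with made-up function names; the `COMPOSE` variable is an assumption added so the same helpers work with either the standalone `docker-compose` binary or the newer `docker compose` plugin.

```shell
# Compose command to use. "docker-compose" is the standalone binary used in
# this article; newer installs provide it as the "docker compose" plugin.
COMPOSE="${COMPOSE:-docker-compose}"

# Start the whole stack in the background.
stack_up() { $COMPOSE up -d; }

# Follow the logs of one service, e.g. "stack_logs api".
stack_logs() { $COMPOSE logs -f "$1"; }

# Stop and remove the containers and the network; named volumes are kept.
stack_down() { $COMPOSE down; }
```

Run these from the directory that contains docker-compose.yml. To use the plugin form, set `COMPOSE="docker compose"` before calling them.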

Step 7: Explore Advanced Docker Concepts

Once you're comfortable with the fundamentals, you can explore more advanced Docker concepts to further enhance your containerization skills:

- Docker volumes for persistent data storage

- Multi-stage builds to create smaller, more efficient images

- Docker health checks to monitor container health

- Docker security best practices

- Container orchestration with Docker Swarm or Kubernetes
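Two of the items above, volumes and health checks, can be tried with plain `docker run` flags. The following is a minimal sketch, assuming the official `postgres:13` image and its bundled `pg_isready` tool; the function names, volume name, and timing values are illustrative choices, not recommendations.

```shell
# Illustrative helper: run PostgreSQL with a named volume and a health check.
#   $1 = container name, $2 = superuser password
run_db_with_healthcheck() {
  # -v keeps the data in the named volume "postgres-data" across restarts;
  # --health-cmd is executed inside the container at every interval
  docker run -d \
    --name "$1" \
    -e POSTGRES_PASSWORD="$2" \
    -v postgres-data:/var/lib/postgresql/data \
    --health-cmd "pg_isready -U postgres" \
    --health-interval 10s \
    --health-retries 5 \
    postgres:13
}

# Print the current health status: starting, healthy, or unhealthy.
db_health() {
  docker inspect --format '{{.State.Health.Status}}' "$1"
}
```

After `run_db_with_healthcheck dev-db mysecretpassword`, calling `db_health dev-db` a few seconds later should report `healthy` once the database accepts connections.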

Conclusion: Your Docker Journey

Docker isn't just for DevOps engineers and cloud experts. It's for anyone who's ever said, "I wish I could just send my entire computer along with my code." By following this roadmap, you'll progress from Docker beginner to confidently incorporating containerization into your development workflow.

Remember that the best way to learn Docker is through practical application. Start with simple projects and gradually increase complexity as you become more comfortable. Before long, you'll wonder how you ever developed without the consistency and portability that Docker provides.

The Docker ecosystem continues to evolve, with new features and improvements released regularly. Staying current with these developments will help you get the most out of Docker in your projects.
