While social media is flooded with complex AI agent workflows promising to automate your entire life, many developers are finding more practical, everyday uses for Large Language Models (LLMs). Let's explore the realistic ways LLMs can enhance your development workflow without the unrealistic expectations of fully autonomous AI agents.

The Reality of AI Agents: Capabilities vs. Hype

Despite the buzz around AI agents that can supposedly put entire aspects of your life on autopilot, the current reality is more nuanced. Tools like n8n allow for impressive workflow automation with AI components, but a single super-agent that reliably handles complex tasks on its own remains more aspirational than practical for most developers.

That doesn't mean LLMs aren't incredibly useful - they absolutely are. But their value comes from specific, focused applications rather than attempting to build the all-encompassing AI assistant that social media posts might suggest.

7 Practical Ways Developers Use LLMs Daily

Here are the most valuable ways developers are incorporating LLMs into their daily workflow with relatively low effort and high return on investment:

1. Code Assistance and Completion

Tools like GitHub Copilot and Cursor have become essential for many developers. These AI-powered assistants offer suggestions and completions that can significantly accelerate coding tasks. Particularly valuable is the 'next edit suggestion' feature that predicts likely changes after you modify one part of your code, making refactoring much faster.

However, be mindful that these tools can be overly aggressive with their suggestions. Many developers find the sweet spot is using them for individual features or components rather than generating entire applications.

2. Technical Discussions and Problem-Solving

Many developers, especially those working solo, use ChatGPT or Google Gemini (particularly Gemini 2.5) as a sounding board for technical decisions. Having an AI to discuss and challenge your approach to solving problems provides valuable alternative perspectives.

This doesn't mean blindly implementing whatever the AI suggests, but rather using it to validate your thinking or consider different approaches to the same problem - similar to having a colleague to bounce ideas off of.

3. Deep Research for Technical Content

The deep research modes in ChatGPT and Google Gemini are powerful tools for developers who create content or need to research technical topics. Simply describe what you need information on, and the AI will compile a comprehensive report with cited sources.

This is particularly useful when preparing for technical articles, presentations, or making informed architectural decisions. While the AI-generated reports can sometimes be lengthy, they provide valuable insights and save significant research time.

4. Running Local LLMs for Private Data

For tasks involving sensitive information, many developers are running open LLMs locally using tools like LM Studio or Ollama. This approach offers several advantages:

- Data privacy - no need to send potentially sensitive information to OpenAI or Google

- Cost savings - no API fees for frequent usage

- Task fit - well suited to utility work such as text summarization, information extraction, and translation

- Capable open models - models like Google's Gemma handle these utility tasks surprisingly well

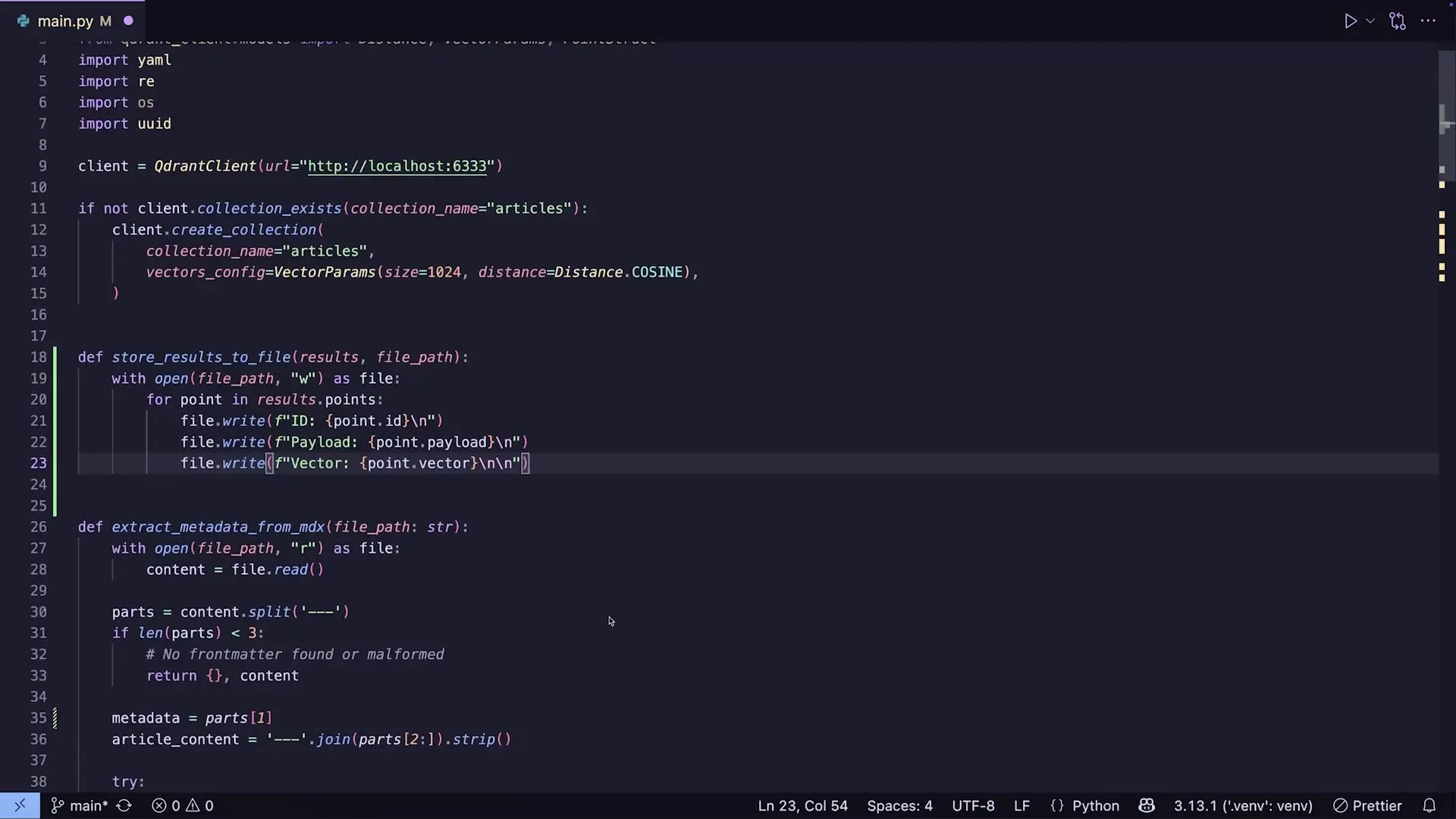

The code in the image above shows how developers can implement vector database operations with QdrantClient, storing and retrieving embeddings efficiently for local LLM workflows.
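For the utility tasks listed in this section, a local model can be called over plain HTTP. The following is a minimal sketch, assuming Ollama is running on its default local port and that a model has already been pulled; the model tag `gemma3`, the prompt wording, and the helper names `build_summary_request` and `summarize` are illustrative assumptions, not part of the original workflow.

```python
import json
import urllib.request

# Assumption: Ollama is serving on its default local address and a model
# tagged "gemma3" has been pulled. Substitute whichever model you run.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_summary_request(text: str, model: str = "gemma3") -> urllib.request.Request:
    """Build the HTTP request that asks a local model to summarize `text`."""
    payload = {
        "model": model,
        "prompt": f"Summarize the following text in three sentences:\n\n{text}",
        "stream": False,  # ask for a single JSON object instead of a token stream
    }
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def summarize(text: str, model: str = "gemma3") -> str:
    """Send the request to the local server and return the generated text."""
    with urllib.request.urlopen(build_summary_request(text, model)) as response:
        return json.loads(response.read())["response"]
```

Calling `summarize(meeting_notes)` only talks to `localhost`, so the text being summarized is never sent to a hosted API.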

5. Content Enhancement and Generation

Developers who also create content (articles, documentation, etc.) often use LLMs to enhance their writing. A common workflow involves:

- Creating initial content or draft (e.g., from video captions)

- Feeding this content into an LLM along with examples of your preferred style

- Having the LLM generate an enhanced version

- Reviewing and editing the output to ensure accuracy and personal voice

This approach preserves your unique perspective while leveraging the LLM's ability to improve structure, clarity, and readability.
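The second step of that workflow, combining a draft with style examples, can be sketched as a small prompt builder. This is one possible shape under stated assumptions: the function name `build_enhancement_prompt` and the instruction wording are hypothetical, and the resulting string would be sent to whichever model you prefer.

```python
def build_enhancement_prompt(draft: str, style_examples: list[str]) -> str:
    """Combine style samples and a rough draft into one rewrite prompt."""
    # Number each sample so the model can tell the examples apart from the draft.
    samples = "\n\n".join(
        f"Style example {i}:\n{example.strip()}"
        for i, example in enumerate(style_examples, start=1)
    )
    return (
        "Rewrite the draft below so it matches the tone and structure of the "
        "style examples. Keep every factual claim unchanged and do not add "
        "new ones.\n\n"
        f"{samples}\n\n"
        f"Draft:\n{draft.strip()}"
    )
```

Asking the model to leave factual claims untouched does not remove the need for the final review step; it only narrows what you have to check.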

6. Building Custom RAG Systems

Retrieval-Augmented Generation (RAG) systems allow developers to enhance LLM capabilities by connecting them to specific knowledge bases. Many developers build custom RAG systems for:

- Creating documentation assistants that can answer questions about internal codebases

- Building knowledge management systems that can retrieve and synthesize information from company resources

- Developing specialized tools that combine the reasoning abilities of LLMs with accurate, up-to-date information
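All three use cases share the same core loop: embed the question, retrieve the closest chunks, and put them into the prompt. Here is a minimal sketch of the retrieval and prompt-assembly steps in plain Python. It assumes the embeddings already exist (the two-dimensional vectors in the usage note are toy values), and the function names are hypothetical.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors; 0.0 if either has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query_vector, documents, top_k=2):
    """Return the texts of the `top_k` documents most similar to the query.

    `documents` is a list of (text, embedding) pairs.
    """
    ranked = sorted(
        documents,
        key=lambda doc: cosine_similarity(query_vector, doc[1]),
        reverse=True,
    )
    return [text for text, _ in ranked[:top_k]]


def build_rag_prompt(question: str, context_chunks: list[str]) -> str:
    """Place the retrieved chunks ahead of the question."""
    context = "\n".join(f"- {chunk}" for chunk in context_chunks)
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}"
    )
```

With `docs = [("deploy guide", [1.0, 0.0]), ("auth guide", [0.0, 1.0]), ("deploy faq", [0.9, 0.1])]`, calling `retrieve([1.0, 0.0], docs)` picks the two deployment documents. A vector database takes over the ranking step once the collection grows beyond what a linear scan can handle.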

In practice, a vector database such as Qdrant can store the embeddings for the knowledge base:

```python
from qdrant_client import QdrantClient
from qdrant_client.http import models

# Initialize Qdrant client for vector database
client = QdrantClient("localhost", port=6333)

# Create collection with COSINE distance metric for embeddings
client.create_collection(
    collection_name="documentation",
    vectors_config=models.VectorParams(
        size=1536,  # OpenAI embedding dimension
        distance=models.Distance.COSINE,
    ),
)


# Store embeddings together with their metadata payloads
def store_embeddings(texts, embeddings, metadata):
    client.upsert(
        collection_name="documentation",
        points=models.Batch(
            # IDs restart at 0 on every call, so a second batch overwrites the first
            ids=list(range(len(texts))),
            vectors=embeddings,
            payloads=metadata,
        ),
    )
```

7. Utility Tasks and Text Processing

LLMs excel at various utility tasks that developers frequently need, including:

- Summarizing lengthy documentation or meeting notes

- Extracting structured data from unstructured text

- Translating content between languages

- Converting between different formats (JSON to CSV, markdown to HTML, etc.)

- Explaining complex code snippets or error messages

These tasks don't require the latest cutting-edge models - even locally run open-source LLMs can handle them effectively, making them accessible to all developers.
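For the structured-extraction task in particular, the fragile part is usually the model's reply rather than the prompt. Below is a minimal sketch of a parser that pulls a JSON object out of a reply and checks it before use. The field names `name` and `email` and the helper `parse_extraction` are hypothetical, chosen only for illustration.

```python
import json


def parse_extraction(reply: str, required_keys=("name", "email")) -> dict:
    """Extract and validate a JSON object from a model reply.

    Raises ValueError if no object is found, the JSON is malformed,
    or a required key is missing.
    """
    # Models often wrap JSON in extra prose, so keep only the outermost braces.
    start, end = reply.find("{"), reply.rfind("}")
    if start == -1 or end <= start:
        raise ValueError("no JSON object found in reply")
    data = json.loads(reply[start : end + 1])  # JSONDecodeError is a ValueError
    missing = [key for key in required_keys if key not in data]
    if missing:
        raise ValueError(f"missing keys: {missing}")
    return data
```

Failing loudly on a malformed reply makes it easy to retry the request or fall back to manual handling, which matters more with smaller local models.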

Finding the Right LLM Workflow for Your Needs

The most effective way to incorporate LLMs into your development workflow is to start with specific, well-defined use cases rather than trying to build the ultimate AI agent. Consider these principles when developing your LLM workflow:

- Focus on augmenting rather than replacing your skills

- Start with the tasks that consume significant time but don't require complex judgment

- Balance privacy considerations with convenience when choosing between local and cloud-based models

- Always verify the output, especially for code generation or factual information

- Experiment with different models to find the best fit for specific tasks
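Verification of generated code can start with cheap automated checks before any human review. The following is a minimal sketch, assuming the model was asked to produce a Python function with a known name; `check_generated_code` is a hypothetical helper, and it only confirms that the source parses and defines that function, not that the logic is correct.

```python
import ast


def check_generated_code(source: str, expected_function: str) -> list[str]:
    """Return a list of problems found in LLM-generated Python source."""
    try:
        tree = ast.parse(source)  # parses without executing anything
    except SyntaxError as err:
        return [f"syntax error on line {err.lineno}: {err.msg}"]
    defined = {
        node.name for node in ast.walk(tree) if isinstance(node, ast.FunctionDef)
    }
    if expected_function not in defined:
        return [f"function {expected_function!r} is not defined"]
    return []
```

An empty list means the code passed these two checks; running your own tests against it is still the step that catches wrong behavior.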

Conclusion: Practical Over Perfect

While the idea of a single AI agent that automates your entire workflow is appealing, the current reality of LLMs is more about practical, focused applications that enhance specific aspects of development work. By understanding the practical ways LLMs can be incorporated into your daily workflow, you can leverage these powerful tools without getting caught up in unrealistic expectations.

The most successful developers are those who view LLMs as collaborative tools that augment their capabilities rather than magical solutions that replace human judgment. As LLM technology continues to evolve, the key to effective usage will remain finding the right balance between automation and human oversight.
