Vercel is making a bold move to position itself as the dominant cloud platform in the AI-native era. At their recent Vercel Ship keynote, the company unveiled a comprehensive suite of AI-focused tools and infrastructure improvements designed to streamline development workflows, enhance security, and optimize costs. Let's explore the most significant announcements and what they mean for developers and organizations building on Vercel.

AI Gateway: Simplifying Multi-Provider LLM Access

One of the most exciting announcements is the beta release of Vercel AI Gateway. This proxy service functions similarly to OpenRouter, allowing developers to route AI requests to various providers, including Anthropic, OpenAI, and Google, through a single interface. The gateway eliminates the need to manage multiple billing platforms and API keys, making it significantly easier to switch between AI providers based on performance needs or to implement fallback options for increased reliability.
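The fallback idea can be sketched in a few lines of TypeScript. This is a minimal illustration, not the gateway's own mechanism: `generateWithFallback` and `CallModel` are hypothetical names, and `callModel` stands in for whatever function actually sends the request (for example, a thin wrapper around your SDK of choice).

```typescript
// Hypothetical helper: try each model ID in order until one succeeds.
// `callModel` is an assumption standing in for the real request function.
type CallModel = (modelId: string, prompt: string) => Promise<string>;

async function generateWithFallback(
  modelIds: string[],
  prompt: string,
  callModel: CallModel,
): Promise<{ modelId: string; text: string }> {
  const errors: string[] = [];
  for (const modelId of modelIds) {
    try {
      const text = await callModel(modelId, prompt);
      return { modelId, text };
    } catch (err) {
      // Record the failure and move on to the next model in the list.
      errors.push(`${modelId}: ${err instanceof Error ? err.message : String(err)}`);
    }
  }
  throw new Error(`All models failed: ${errors.join('; ')}`);
}
```

Because every provider sits behind one interface, the list of model IDs is the only thing that changes when you reorder or swap providers.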

Vercel offers $5 of credits on the free tier, with subsequent pricing dependent on the models you use. You can also bring your own API keys for individual providers, though requests made this way carry a modest 3% markup.

AI SDK v5: TypeScript-First AI Development

Complementing the AI Gateway is the beta release of AI SDK v5, which together may offer the most streamlined way to implement AI in TypeScript applications. The SDK allows developers to specify models and providers with simple string parameters, dramatically reducing the complexity of integrating multiple AI services into applications.

// Example of specifying a model with the AI SDK v5.
// A plain "provider/model" string is resolved through the AI Gateway.

import { generateText } from 'ai';

const { text } = await generateText({
  model: 'openai/gpt-4o',
  prompt: 'Explain quantum computing in simple terms',
});

console.log(text);

Vercel Sandbox: Safe Execution for AI-Generated Code

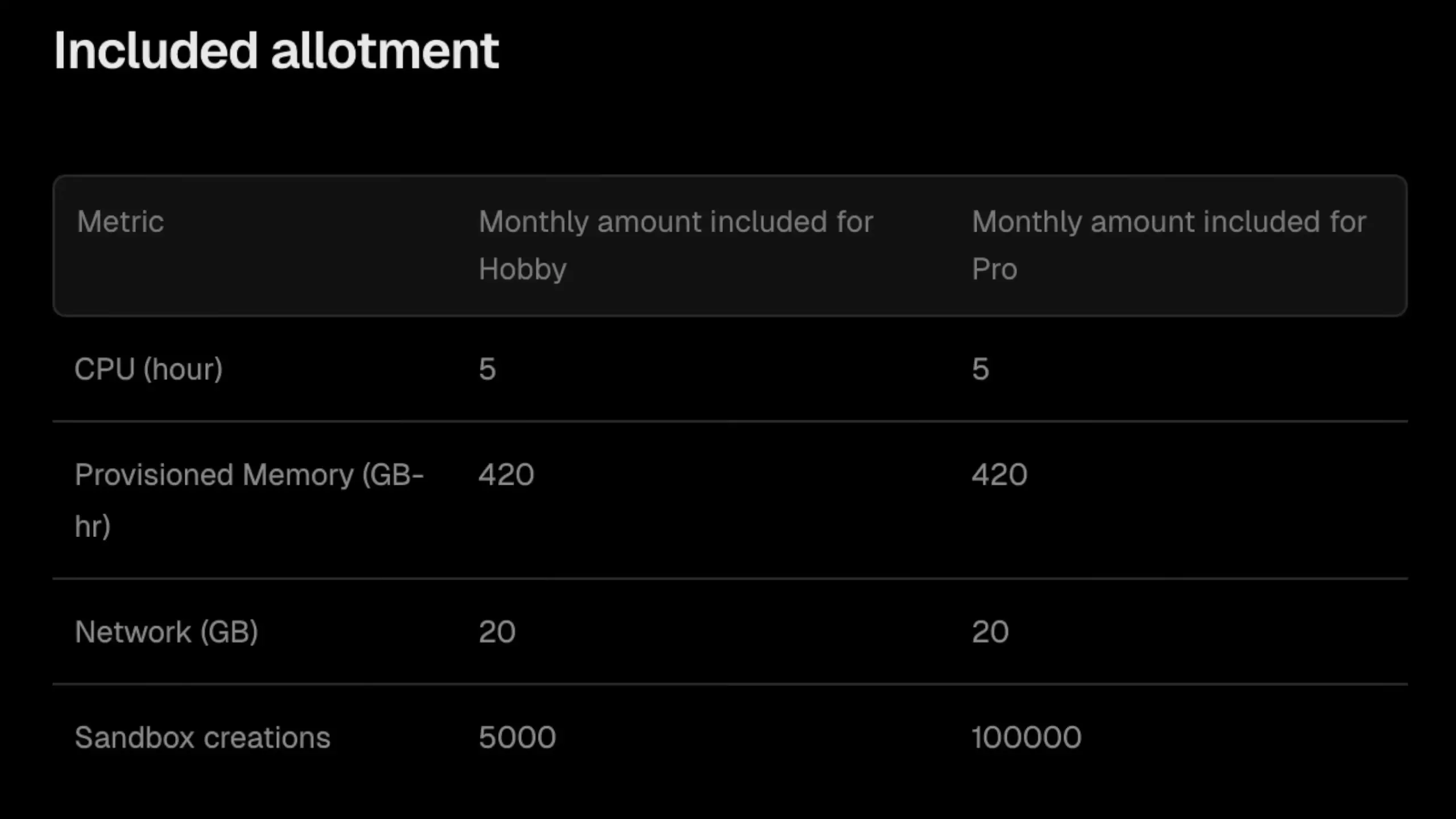

For applications that generate and execute code (a common AI use case), Vercel has introduced Sandbox. This feature allows untrusted code to run in isolated, ephemeral environments, addressing a significant security concern in AI development. The sandbox supports execution times of up to 45 minutes with resources of up to 8 vCPUs and 2,048 MB of memory per vCPU.
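Before requesting a sandbox, it can help to check the request against the runtime and vCPU limits quoted above. The sketch below is not part of any Vercel SDK: `SandboxRequest` and `validateSandboxRequest` are hypothetical names, and the constants simply mirror the figures in this article.

```typescript
// Limits taken from the figures quoted in this article; adjust if they change.
const MAX_RUNTIME_MINUTES = 45;
const MAX_VCPUS = 8;

// Hypothetical request shape, for illustration only.
interface SandboxRequest {
  vcpus: number;
  runtimeMinutes: number;
}

// Returns a list of problems; an empty list means the request fits the limits.
function validateSandboxRequest(req: SandboxRequest): string[] {
  const problems: string[] = [];
  if (!Number.isInteger(req.vcpus) || req.vcpus < 1 || req.vcpus > MAX_VCPUS) {
    problems.push(`vcpus must be an integer between 1 and ${MAX_VCPUS}`);
  }
  if (req.runtimeMinutes <= 0 || req.runtimeMinutes > MAX_RUNTIME_MINUTES) {
    problems.push(`runtimeMinutes must be greater than 0 and at most ${MAX_RUNTIME_MINUTES}`);
  }
  return problems;
}
```

Rejecting an oversized request locally gives a clearer error than waiting for the platform to refuse it.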

The base system runs on Amazon Linux 2023 and comes pre-installed with Node.js 22 and Python 3.13, making it ready for most common development scenarios. Pricing follows the same model as Vercel's Fluid compute service, with some included allotments based on your Vercel plan tier.

Active CPU Billing: Significant Cost Optimization

In a move that will benefit many users' budgets, Vercel has implemented active CPU billing across their platform. This means you're only charged for CPU time when it's actively working, eliminating costs during idle periods. This change can substantially reduce expenses for workloads with intermittent processing needs, such as LLM inference, long-running AI agents, or applications with natural idle periods.

This pricing model brings Vercel more in line with competitors like Cloudflare Workers. After free allotments are used, pricing is set at 12.8 cents per hour of active CPU time and 60 cents per million sandbox creations. That is roughly 1.8 times Cloudflare's 7.2 cents per hour and twice its 30 cents per million invocations, although the platforms aren't directly comparable due to differences in memory allocation, runtime environments, and developer experience.
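To see why this matters for I/O-heavy AI workloads, here is a rough back-of-the-envelope calculation using the 12.8 cents per hour figure above. The workload numbers are invented for illustration, the "wall-clock" line is a hypothetical comparison (the same rate applied to total request duration), and the sketch ignores memory, invocation, and free-tier terms.

```typescript
const ACTIVE_CPU_USD_PER_HOUR = 0.128; // rate quoted in this article
const MS_PER_HOUR = 3_600_000;

// Cost of CPU time at an hourly rate, given a duration in milliseconds.
function cpuCostUsd(durationMs: number, usdPerHour: number = ACTIVE_CPU_USD_PER_HOUR): number {
  return (durationMs / MS_PER_HOUR) * usdPerHour;
}

// Hypothetical workload: 1,000,000 requests, each open for 10 s of wall-clock
// time (mostly waiting on an LLM response) but using only 200 ms of CPU.
const requests = 1_000_000;
const wallClockMs = 10_000;
const activeCpuMs = 200;

const billedOnWallClock = cpuCostUsd(requests * wallClockMs);
const billedOnActiveCpu = cpuCostUsd(requests * activeCpuMs);

console.log(`wall-clock: $${billedOnWallClock.toFixed(2)}`); // wall-clock: $355.56
console.log(`active CPU: $${billedOnActiveCpu.toFixed(2)}`); // active CPU: $7.11
```

The ratio between the two figures is simply the ratio of wall-clock time to active CPU time (50x here), so the savings grow with the share of each request spent waiting.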

BotID: Invisible CAPTCHA Protection

Security gets a boost with the general availability of Vercel BotID, an invisible CAPTCHA solution that eliminates the need for visible challenges or manual bot management. Developed in partnership with Kasada, BotID silently collects thousands of signals to differentiate between human users and bots.

The system continuously evolves its detection methods, mutating on every page load to prevent reverse engineering. It also feeds attack data into a global machine learning network that strengthens protection for all customers. Integration is straightforward with a type-safe SDK that includes client-side detection via the BotID client component and server-side verification through the checkBotId function.
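The server-side half of that flow usually reduces to "verify first, then act". The sketch below shows the pattern with the verification call injected as a parameter, so it runs without the SDK installed. `BotVerdict`, `VerifyRequest`, and `guardedAction` are hypothetical names, and the assumption that the verdict exposes an `isBot` flag should be checked against the SDK's documentation.

```typescript
// Hypothetical shapes: the real SDK's result type may carry more fields.
interface BotVerdict {
  isBot: boolean;
}
type VerifyRequest = () => Promise<BotVerdict>;

interface HandlerResult {
  status: number;
  body: string;
}

// Guard a sensitive action: verify first, and only run the action for humans.
async function guardedAction(
  verify: VerifyRequest,
  action: () => Promise<string>,
): Promise<HandlerResult> {
  const verdict = await verify();
  if (verdict.isBot) {
    return { status: 403, body: 'Access denied' };
  }
  return { status: 200, body: await action() };
}
```

In a real route handler, `verify` would be the SDK's server-side check and `action` would be the protected work, such as a checkout or sign-up.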

Rolling Releases: Safer Deployment Workflows

For teams focused on deployment reliability, Vercel's Rolling Releases feature is now generally available. This enables safe, incremental rollouts of new deployments with built-in monitoring and rollout controls without requiring custom routing configurations. Developers can start with a defined stage and either automatically progress or manually promote to full release, providing fine-grained control over the deployment process.
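One common way to implement an incremental rollout is deterministic bucketing: hash a stable visitor ID into a bucket from 0 to 99 and serve the new deployment only when the bucket falls below the current stage percentage. The sketch below illustrates that general technique and is not a description of how Vercel routes traffic internally; all function names are hypothetical.

```typescript
// FNV-1a 32-bit hash over UTF-16 code units: small and deterministic,
// which is all that bucketing needs.
function fnv1a(input: string): number {
  let hash = 0x811c9dc5;
  for (let i = 0; i < input.length; i++) {
    hash ^= input.charCodeAt(i);
    hash = Math.imul(hash, 0x01000193) >>> 0;
  }
  return hash;
}

// Map a visitor ID to a stable bucket in the range [0, 100).
function bucketFor(visitorId: string): number {
  return fnv1a(visitorId) % 100;
}

// Serve the new deployment to visitors whose bucket is below the stage percentage.
function pickDeployment(visitorId: string, stagePercent: number): 'canary' | 'current' {
  return bucketFor(visitorId) < stagePercent ? 'canary' : 'current';
}
```

Because the bucket depends only on the visitor ID, a visitor who sees the new deployment at a 10% stage keeps seeing it as the stage widens to 50% and then 100%.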

Limited Beta Features: Microfrontends, Queues, and AI Agent

Vercel also announced several features currently in limited beta that point to the platform's future direction:

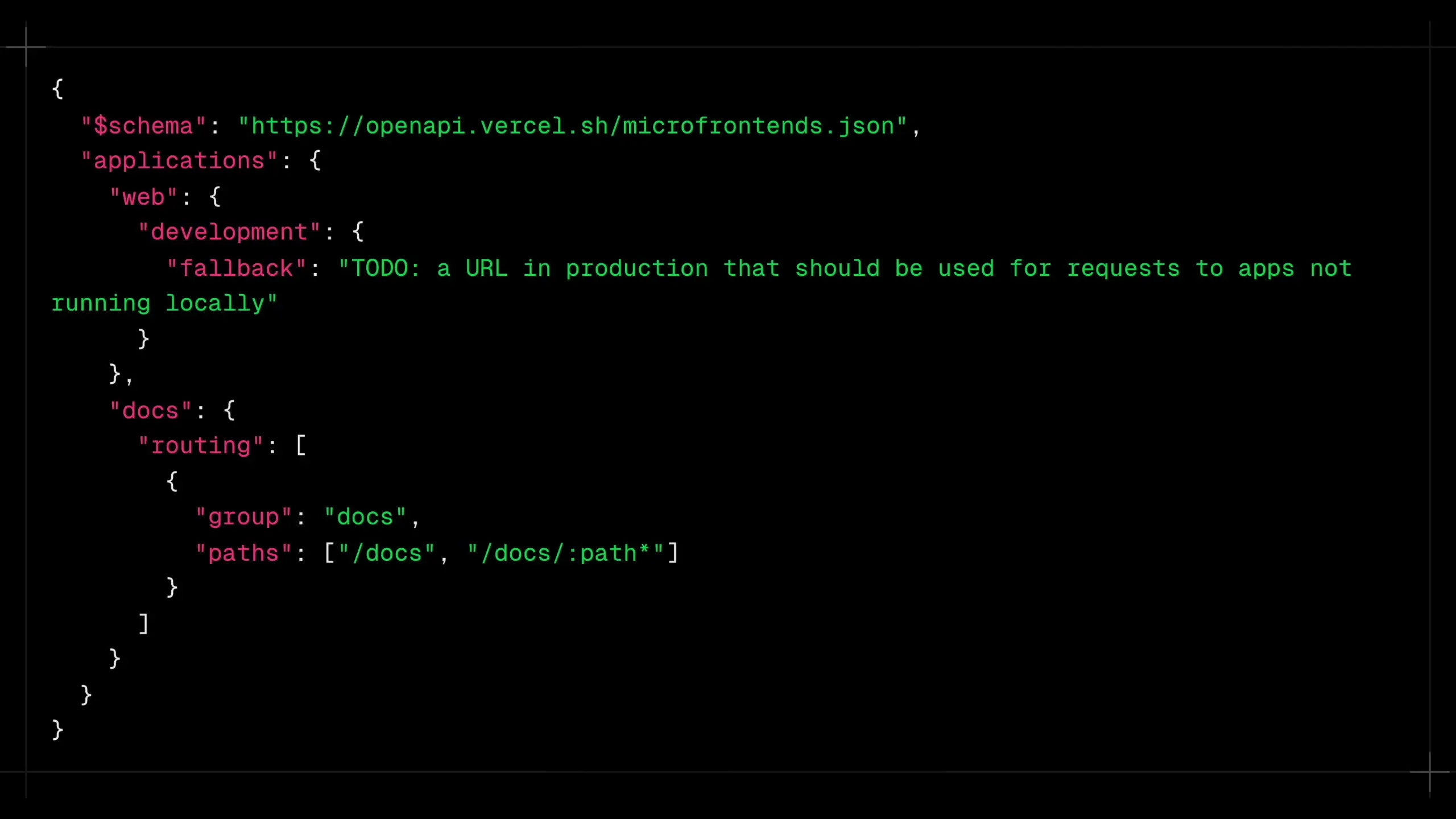

- Vercel Microfrontends: Deploy and manage multiple frontend applications that appear as a single cohesive application to users. Configuration is handled through a microfrontends.json file in the root application that controls routing.

- Vercel Queues: A message queue service built specifically for Vercel applications that allows offloading work to background processes. This improves user experience by preventing slow operations from blocking requests and enhances reliability through automated retries and failure handling.

- Vercel Agent: An AI assistant integrated into the Vercel dashboard that analyzes application performance and security data, identifies anomalies, suggests likely causes, and recommends specific actions across the platform.
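To make the Queues item above more concrete, the sketch below shows the retry-and-failure-handling pattern a queue consumer typically applies to each message. It is an in-memory illustration only, not the Vercel Queues API: `Job`, `runWithRetries`, and `drain` are hypothetical names, and handlers are synchronous to keep the example small.

```typescript
interface Job<T> {
  id: string;
  payload: T;
}

interface JobResult {
  id: string;
  ok: boolean;
  attempts: number;
}

// Run one job, retrying when the handler throws, up to maxAttempts times.
function runWithRetries<T>(
  job: Job<T>,
  handler: (payload: T) => void,
  maxAttempts: number = 3,
): JobResult {
  for (let attempt = 1; attempt <= maxAttempts; attempt++) {
    try {
      handler(job.payload);
      return { id: job.id, ok: true, attempts: attempt };
    } catch {
      // Swallow the error and retry; a real queue would also back off here.
    }
  }
  return { id: job.id, ok: false, attempts: maxAttempts };
}

// Process jobs in order, collecting per-job results instead of throwing.
function drain<T>(
  queue: Job<T>[],
  handler: (payload: T) => void,
  maxAttempts: number = 3,
): JobResult[] {
  return queue.map((job) => runWithRetries(job, handler, maxAttempts));
}
```

Returning a result per job, rather than throwing on the first failure, keeps one bad message from blocking the rest of the queue.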

Implementing Vercel's New Features in Your Projects

To take advantage of these new capabilities, developers should consider the following implementation strategies:

- For AI applications, evaluate the AI Gateway and SDK v5 to simplify provider management and potentially reduce costs through consolidated billing.

- If your application generates and executes code, implement Vercel Sandbox to enhance security while maintaining performance.

- Review your current workloads to identify opportunities for cost savings with the new active CPU billing model, particularly for applications with intermittent processing needs.

- Consider BotID for applications facing bot traffic or requiring security without disrupting user experience.

- For teams with complex deployment requirements, implement Rolling Releases to improve reliability and control.

The Future of Cloud Infrastructure for AI

Vercel's announcements represent a significant strategic shift toward becoming the platform of choice for AI-native development. By addressing key challenges in AI implementation—from model access and code execution safety to cost optimization and deployment reliability—Vercel is positioning itself competitively against platforms like Cloudflare in the rapidly evolving cloud infrastructure landscape.

These new features demonstrate Vercel's commitment to providing developers with the tools needed to build sophisticated AI applications without getting bogged down in infrastructure complexity or security concerns. For organizations building AI-enhanced applications, Vercel's evolving platform offers an increasingly compelling combination of developer experience, performance, and specialized AI tooling.

As the competition between cloud providers intensifies in the AI space, developers stand to benefit from continued innovation and improved capabilities. Whether Vercel emerges as the dominant platform will depend on how effectively these new features address real-world development challenges and how quickly competitors respond with similar offerings.
