Large Language Models aren't performing magic—they're simply predicting what comes next in a sequence of text. However, once you understand the mechanics behind these predictions, you can effectively guide these powerful tools to accomplish exactly what you need. From generating cleaner code to debugging faster, mastering prompt engineering techniques can dramatically improve your development workflow.

Understanding How LLMs Actually Work

At their core, Large Language Models have one primary function: predicting the next token (a word or a fragment of a word) in a sequence. When you provide a prompt like "The server crashed because," the model completes it based on patterns it learned from massive amounts of training data. It might suggest "it ran out of memory" because that's a common pattern in logs, forums, and documentation.

This prediction mechanism powers everything from code generation to email summarization. But it's crucial to understand that LLMs aren't thinking—they're continuing text in a way that appears intelligent based on statistical patterns. This distinction is why precision in your prompts directly impacts the quality of the output you receive.

The Evolution of Language Models

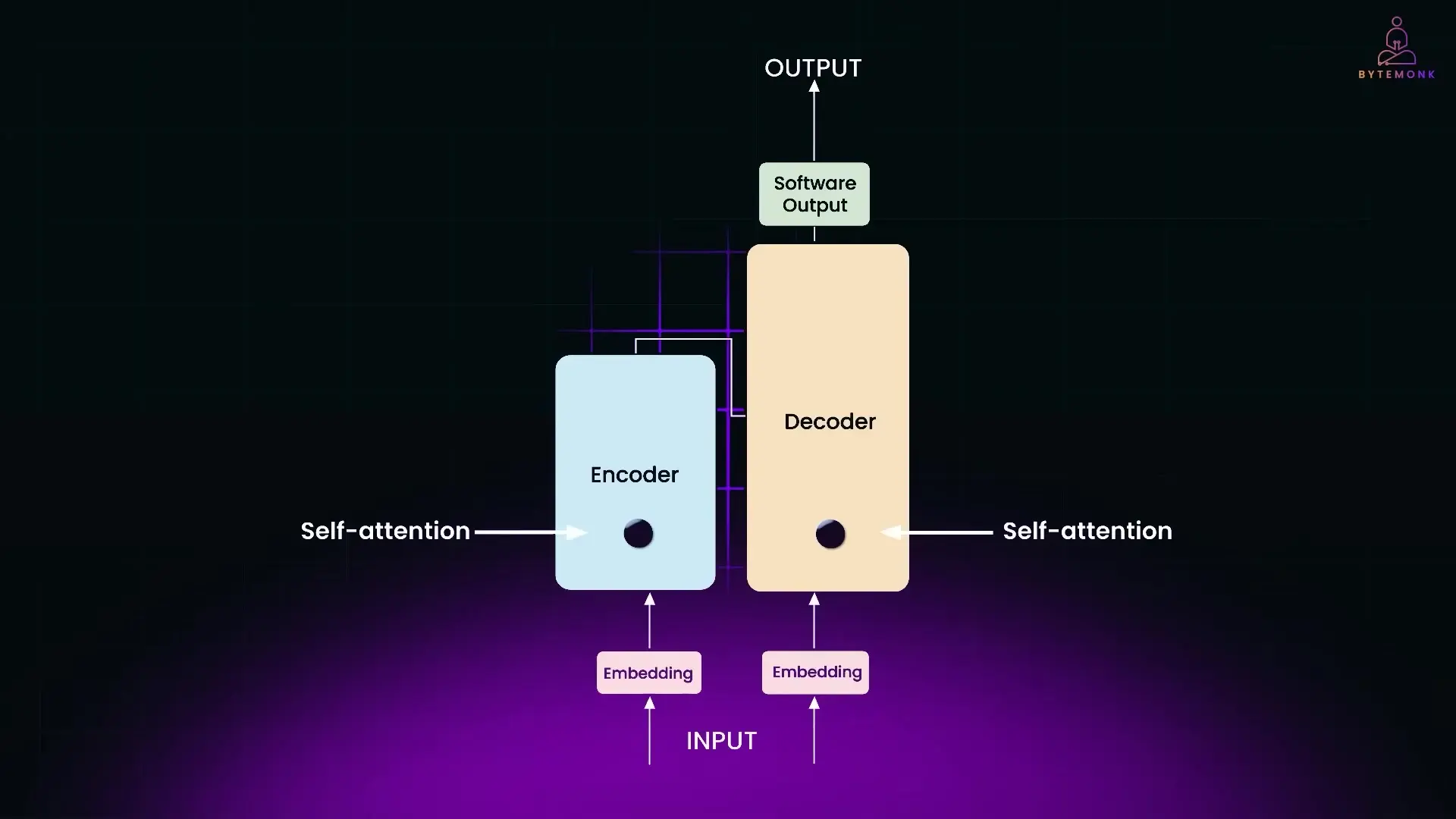

To fully appreciate modern LLMs, it helps to understand their evolution. Earlier neural approaches, such as the sequence-to-sequence (seq2seq) architectures introduced in 2014, used Recurrent Neural Networks (RNNs) to process text one token at a time, updating an internal hidden state after each step. These models handled sequences reasonably well but suffered from an information bottleneck: the encoder had to compress the meaning of the entire input into a single fixed-length vector.
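The bottleneck is easy to see in code. The sketch below is a toy recurrent encoder built on heavy simplifying assumptions. The `embed` features and the scalar weights `w_h` and `w_x` are invented for illustration, whereas a real RNN learns embedding vectors and weight matrices. What the sketch does show faithfully is the structure: tokens are read strictly one at a time, and whatever the input length, everything is squeezed into a vector of `HIDDEN_SIZE` elements.

```python
import math

HIDDEN_SIZE = 4  # fixed size of the "memory" vector, regardless of input length


def embed(token: str) -> list[float]:
    """Hypothetical toy embedding: a few deterministic features of the token's characters."""
    codes = [ord(c) for c in token]
    return [
        len(token) / 10.0,
        (sum(codes) % 97) / 97.0,
        (codes[0] % 13) / 13.0,
        (codes[-1] % 7) / 7.0,
    ]


def rnn_step(hidden: list[float], x: list[float], w_h: float = 0.5, w_x: float = 0.8) -> list[float]:
    """One recurrent update, h_t = tanh(w_h * h_{t-1} + w_x * x_t), with scalar weights for simplicity."""
    return [math.tanh(w_h * h + w_x * xi) for h, xi in zip(hidden, x)]


def encode(text: str) -> list[float]:
    """Read tokens left to right and return only the final hidden state."""
    hidden = [0.0] * HIDDEN_SIZE
    for token in text.split():
        hidden = rnn_step(hidden, embed(token))
    return hidden


short_state = encode("disk full")
long_state = encode("the nightly deployment failed after the database migration timed out on the replica")
print(len(short_state), len(long_state))  # → 4 4
```

A two-word input and a fourteen-word input end up as vectors of the same size, which is exactly the compression problem that attention was later designed to avoid.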

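The breakthrough came in 2017 with the paper "Attention Is All You Need," which introduced the Transformer architecture. Instead of stepping through tokens one at a time, a Transformer uses self-attention to relate every token in the input directly to every other token. Because these comparisons can be computed in parallel, Transformers are faster to train. And because information no longer has to pass through a long chain of sequential steps, they are better at capturing long-range context. This architecture forms the foundation of modern LLMs like GPT-4, Claude, Llama, and Gemini.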
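The core operation that paper introduced is scaled dot-product attention, softmax(QK^T / sqrt(d_k))V. The pure-Python sketch below implements just that formula for small lists of vectors. It omits everything else a real Transformer layer has, including learned projections that produce Q, K, and V, multiple heads, positional information, and causal masking. The loop over queries is written sequentially for readability. No query depends on another query's result, however, which is why the computation parallelizes well on real hardware.

```python
import math


def softmax(scores: list[float]) -> list[float]:
    """Numerically stable softmax: subtract the max before exponentiating."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(
    queries: list[list[float]],
    keys: list[list[float]],
    values: list[list[float]],
) -> list[list[float]]:
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d_k)) V.

    Every query is scored against every key, so each output row can draw on
    any position in the sequence, near or far.
    """
    d_k = len(keys[0])
    outputs = []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d_k).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)
        # Output is the attention-weighted average of the value vectors.
        outputs.append([sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))])
    return outputs


keys = [[1.0, 0.0], [1.0, 0.0]]
values = [[2.0, 0.0], [4.0, 2.0]]
out = attention([[1.0, 0.0]], keys, values)
print([round(x, 3) for x in out[0]])  # → [3.0, 1.0]
```

With identical keys, both positions receive equal weight, so the output is simply the average of their value vectors.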

The Core Mechanics of Prompt Engineering

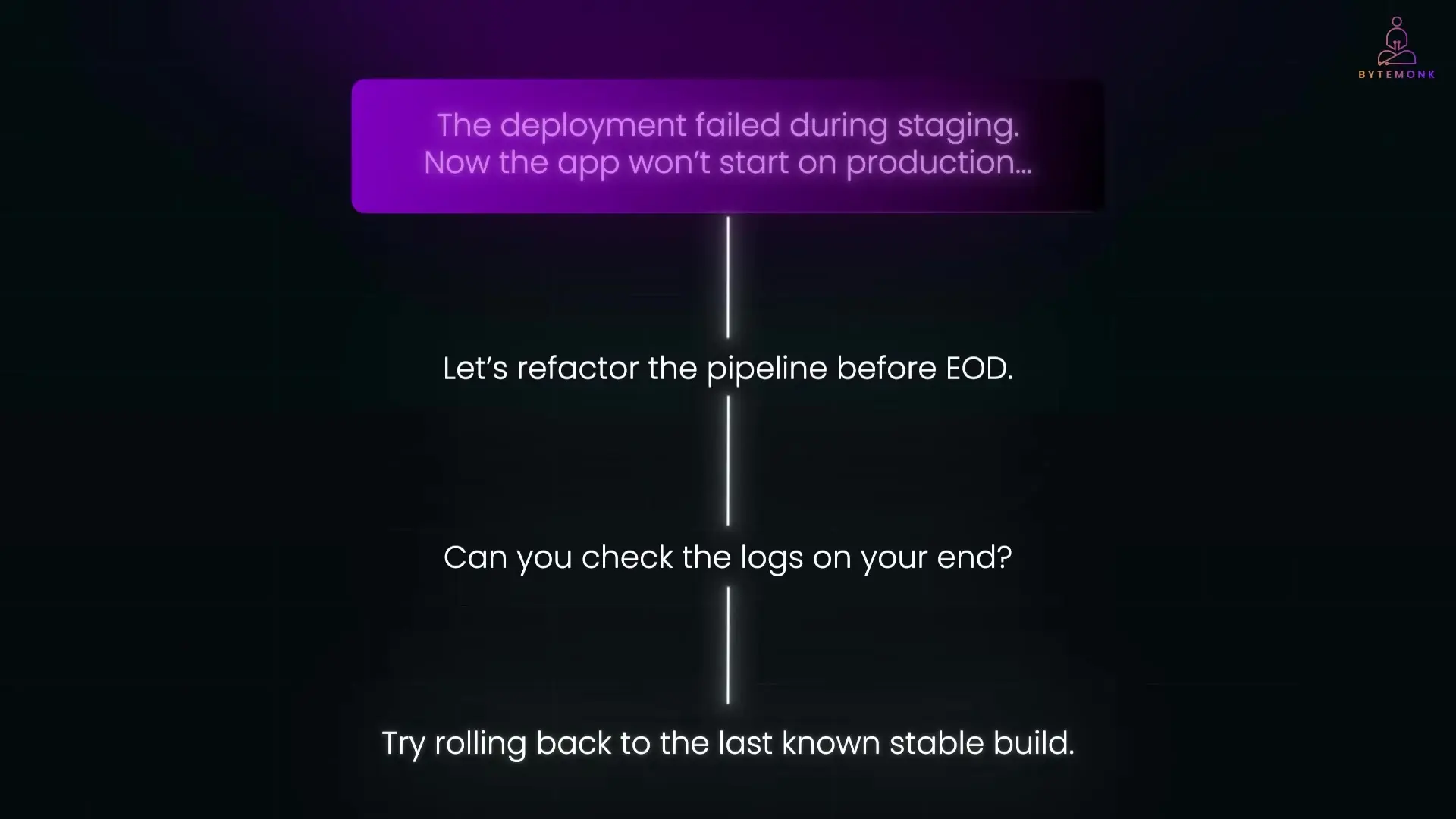

Prompt engineering is the practice of crafting inputs that guide an LLM to produce desired outputs. At its simplest level, an LLM interaction consists of two components: the prompt (your input) and the completion (the model's output). For example, with the prompt "The API request failed because," the completion might be "the authentication token was missing."

The model's response is heavily influenced by patterns in its training data. If that data included many engineering discussions and issue threads, the model is more likely to suggest technical remedies such as "try rolling back to the last known stable build" when presented with a deployment problem.

Advanced Prompt Engineering Techniques for Developers

- Context Enrichment: Add relevant background information to your prompts to improve accuracy and relevance. For debugging tasks, include error logs, code snippets, and environment details.

- Role Assignment: Instruct the model to adopt a specific perspective (e.g., "As a senior backend developer with 10 years of experience...") to guide its response style and technical depth.

- Step-by-Step Decomposition: Break complex problems into smaller steps by explicitly asking the model to work through a solution methodically.

- Few-Shot Learning: Provide examples of the input-output pattern you want the model to follow. This often improves results on specialized tasks, particularly when the output must follow a specific format or convention.

- Chain of Thought: Ask the model to write out its reasoning before giving a final answer, which often leads to more accurate and logical results on multi-step problems.

- Structured Output Formatting: Specify exactly how you want the response formatted (e.g., as JSON, markdown, or with specific sections).

- Iterative Refinement: Use multiple rounds of prompting to progressively improve outputs, with each round addressing specific aspects of the solution.
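Several of these techniques can be combined in a single prompt. The sketch below assembles role assignment, context enrichment, few-shot examples, a step-by-step instruction, and an output format into one string with labeled sections. The `PromptSpec` and `Example` names, the section headings, and the sample log-classification task and its context are all hypothetical. Iterative refinement is not shown because it happens across multiple model calls rather than inside one prompt.

```python
from dataclasses import dataclass, field


@dataclass
class Example:
    """One few-shot demonstration: an input and the output we want for it."""
    text: str
    expected: str


@dataclass
class PromptSpec:
    role: str                                              # role assignment
    task: str
    context: list[str] = field(default_factory=list)       # context enrichment
    examples: list[Example] = field(default_factory=list)  # few-shot learning
    step_by_step: bool = False                             # decomposition / chain of thought
    output_format: str = ""                                # structured output formatting


def build_prompt(spec: PromptSpec) -> str:
    """Assemble the chosen techniques into one prompt with clearly labeled sections."""
    sections = [f"You are {spec.role}."]
    if spec.context:
        sections.append("## Context\n" + "\n".join(f"- {item}" for item in spec.context))
    if spec.examples:
        shots = "\n\n".join(f"Input: {ex.text}\nOutput: {ex.expected}" for ex in spec.examples)
        sections.append("## Examples\n" + shots)
    sections.append("## Task\n" + spec.task)
    if spec.step_by_step:
        sections.append(
            "Break the problem into steps and explain your reasoning for each step "
            "before giving the final answer."
        )
    if spec.output_format:
        sections.append("## Output format\n" + spec.output_format)
    return "\n\n".join(sections)


spec = PromptSpec(
    role="a senior backend developer with 10 years of experience",
    task="Classify the log line below by severity.\nInput: Disk usage at 91% on /var",
    context=["Service: Python API behind nginx", "Severity levels: INFO, WARN, ERROR"],
    examples=[
        Example("Connection refused by upstream", "ERROR"),
        Example("Cache warmed in 120 ms", "INFO"),
    ],
    step_by_step=True,
    output_format='End with a JSON object: {"severity": "INFO" | "WARN" | "ERROR"}',
)
print(build_prompt(spec))
```

Optional sections are simply skipped when empty, so the same builder serves a one-line request as well as a fully specified one.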

Building LLM-Powered Applications

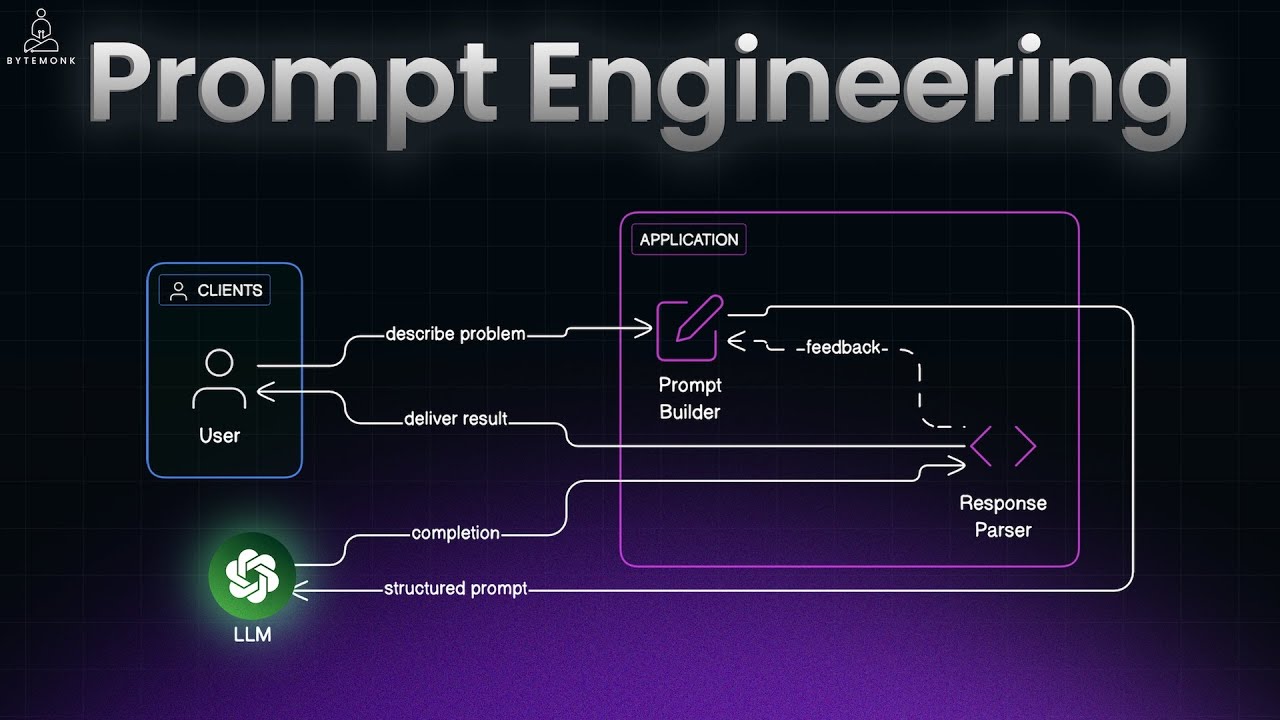

In real-world applications, prompt engineering extends beyond crafting good text—it involves designing systems that effectively manage the input-output loop. A typical LLM-based system follows this pattern:

- The user describes a problem or request

- Your application builds a structured input (the prompt)

- The LLM processes this input and generates a completion

- Your application processes the response and returns it to the user
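These four steps map onto a handful of small functions. In this minimal sketch, `fake_llm` is a hypothetical stand-in that returns a canned completion so the code runs without a model. In a real application, it would wrap whichever model API you use. The JSON response shape is also an assumption, chosen to make step 4 easy to parse.

```python
import json
from typing import Callable

# Hypothetical signature for a model call: prompt text in, completion text out.
LLM = Callable[[str], str]


def build_request_prompt(user_request: str) -> str:
    """Step 2: turn the user's free-form request into a structured prompt."""
    return (
        "You are a debugging assistant.\n"
        f"Problem report: {user_request}\n"
        'Respond only with JSON of the form {"cause": "...", "fix": "..."}.'
    )


def parse_completion(completion: str) -> dict[str, str]:
    """Step 4a: extract the fields the application needs from the raw completion."""
    data = json.loads(completion)
    return {"cause": str(data["cause"]), "fix": str(data["fix"])}


def handle_request(user_request: str, llm: LLM) -> str:
    """Steps 1-4: user request in, formatted answer out."""
    prompt = build_request_prompt(user_request)  # step 2: build the prompt
    completion = llm(prompt)                     # step 3: the model generates a completion
    result = parse_completion(completion)        # step 4: process the response...
    return f"Likely cause: {result['cause']}\nSuggested fix: {result['fix']}"  # ...and return it


def fake_llm(prompt: str) -> str:
    """Stand-in for a real model API so the sketch runs offline; returns a canned completion."""
    return json.dumps({
        "cause": "the authentication token was missing",
        "fix": "attach the token to the request's Authorization header",
    })


print(handle_request("The API request failed with HTTP 401", fake_llm))
```

Passing the model in as a plain function keeps the loop testable: the same `handle_request` works with a stub in tests and a real client in production.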

As applications become more sophisticated, this loop can incorporate additional components like memory (tracking conversation state), tool use (calling APIs or accessing external data), and error handling (validating responses and catching malformed or hallucinated output).
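Two of these components, memory and error handling, can be sketched together. The hypothetical `Conversation` class below keeps the full message history and sends it to the model on every call. It then validates each reply and re-prompts with feedback when validation fails. The `role`/`content` message shape, the required `answer` field, and the retry limit are assumptions for illustration, and `scripted_llm` is a test double rather than a real model. Note that this catches only malformed output. Detecting a hallucinated but well-formed answer requires checks against trusted data, which is not attempted here.

```python
import json
from typing import Callable

Message = dict[str, str]
# Hypothetical model interface: the full message history in, one reply out.
ChatLLM = Callable[[list[Message]], str]


class Conversation:
    """Keeps conversation state (memory) and re-prompts when a reply fails validation."""

    def __init__(self, llm: ChatLLM, max_retries: int = 2) -> None:
        self.llm = llm
        self.history: list[Message] = []
        self.max_retries = max_retries

    def ask(self, user_text: str) -> dict:
        self.history.append({"role": "user", "content": user_text})
        for _ in range(self.max_retries + 1):
            reply = self.llm(self.history)  # the model sees the whole history
            self.history.append({"role": "assistant", "content": reply})
            try:
                data = json.loads(reply)
            except json.JSONDecodeError as exc:
                problem = f"invalid JSON ({exc.msg})"
            else:
                if isinstance(data, dict) and "answer" in data:
                    return data
                problem = 'no "answer" field'
            # Feed the problem back so the next attempt can correct it.
            self.history.append({
                "role": "user",
                "content": f'Your last reply had {problem}. Reply with a JSON object containing an "answer" field.',
            })
        raise ValueError(f"no valid reply after {self.max_retries + 1} attempts")


def scripted_llm(replies: list[str]) -> ChatLLM:
    """Test double that returns pre-written replies in order, ignoring the history."""
    remaining = iter(replies)
    return lambda history: next(remaining)


chat = Conversation(scripted_llm([
    "Sure! You should increase the pool size.",         # malformed: not JSON
    '{"answer": "increase the connection pool size"}',  # valid
]))
print(chat.ask("How do I fix connection pool exhaustion?"))
print(len(chat.history))  # → 4
```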

Practical Applications for Software Development

For developers, well-crafted prompts can help with many everyday tasks:

- Code Generation: Create boilerplate code, starter functions, or entire modules with appropriate structure and documentation

- Test Case Development: Generate test cases, including edge cases and failure modes that are easy to overlook

- Debugging Assistance: Analyze error logs and suggest potential fixes or troubleshooting steps

- Documentation: Draft clear, well-structured documentation from code or requirements

- Code Reviews: Get feedback on code quality, potential bugs, or performance improvements
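Debugging assistance, for example, benefits directly from the context enrichment described earlier: the error log, the relevant code, and environment details. The sketch below packs all three into a prompt and keeps only the last lines of a long log. The function name, section headings, sample data, and the 20-line default are assumptions to adjust for your own logs and model.

```python
def build_debug_prompt(
    error_log: str,
    code_snippet: str,
    environment: dict[str, str],
    max_log_lines: int = 20,
) -> str:
    """Build a debugging prompt from the log, the relevant code, and environment details."""
    # Keep only the tail of the log: the failure is usually near the end,
    # and trimming keeps the prompt short.
    tail = error_log.strip().splitlines()[-max_log_lines:]
    log_text = "\n".join(tail)
    env_text = "\n".join(f"- {key}: {value}" for key, value in sorted(environment.items()))
    return "\n\n".join([
        "You are a senior backend developer helping to debug a failing service.",
        f"## Environment\n{env_text}",
        f"## Error log (last {len(tail)} lines)\n{log_text}",
        f"## Relevant code\n{code_snippet.strip()}",
        "## Task\nList the most likely root causes, most probable first, "
        "and suggest one concrete troubleshooting step for each.",
    ])


log = "\n".join([f"INFO heartbeat {i}" for i in range(40)] + ["ERROR MemoryError: cannot allocate 512 MiB"])
prompt = build_debug_prompt(
    log,
    "buf = bytearray(512 * 1024 * 1024)",
    {"python": "3.12", "container_memory": "256Mi"},
    max_log_lines=5,
)
print(prompt)
```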

Best Practices for Effective Prompt Engineering

To get the most out of LLMs in your development workflow, follow these key principles:

- Be specific and detailed in your requests

- Provide context about your codebase, technologies, and constraints

- Structure prompts logically with clear sections for background, requirements, and expected output

- Use consistent formatting to make patterns easier for the model to recognize

- Iterate on your prompts based on results, refining them to get better outputs

- Maintain a library of effective prompts for common tasks in your workflow
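Consistent formatting and a prompt library fit together naturally. Below is a minimal sketch using Python's standard `string.Template`. Each entry follows the same background / requirements / code / expected output layout, and rendering fails loudly if a field is missing. The library contents and the `render_prompt` helper are hypothetical examples, not a prescribed format.

```python
from string import Template

# A small, hypothetical library of reusable prompts. Every entry uses the same
# sections (background, requirements, code, expected output) so the structure
# stays consistent across tasks.
PROMPT_LIBRARY: dict[str, Template] = {
    "unit_tests": Template(
        "## Background\nLanguage: $language. Test framework: $framework.\n\n"
        "## Requirements\nWrite unit tests for the code below, covering edge cases and failure modes.\n\n"
        "## Code\n$code\n\n"
        "## Expected output\nOnly the test file, with no extra commentary."
    ),
    "docstring": Template(
        "## Background\nLanguage: $language.\n\n"
        "## Requirements\nWrite a concise docstring describing parameters, return value, and errors.\n\n"
        "## Code\n$code\n\n"
        "## Expected output\nOnly the docstring."
    ),
}


def render_prompt(name: str, **fields: str) -> str:
    """Fill in a library prompt; fails loudly on an unknown name or a missing field."""
    if name not in PROMPT_LIBRARY:
        raise KeyError(f"unknown prompt {name!r}; available: {sorted(PROMPT_LIBRARY)}")
    # Template.substitute raises KeyError if any $placeholder has no value.
    return PROMPT_LIBRARY[name].substitute(**fields)


print(render_prompt("unit_tests", language="Python", framework="pytest", code="def add(a, b):\n    return a + b"))
```

Keeping prompts in code, or in version-controlled files, also lets you see how a prompt changed as you iterated on it.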

Remember that prompt engineering is both an art and a science. While there are fundamental techniques that work consistently, finding the optimal approach often requires experimentation and adaptation to your specific use case.

Conclusion: The Future of Developer-LLM Collaboration

As LLMs continue to evolve, prompt engineering will become an increasingly valuable skill for developers. The models themselves will improve, but the fundamental principle remains: LLMs are prediction engines that respond to the patterns you present. By mastering prompt engineering techniques, you can transform these powerful tools from interesting novelties into essential components of your development toolkit.

Developers who invest time in understanding how LLMs work and how to effectively prompt them will gain a significant advantage in productivity, problem-solving, and building innovative AI-powered applications. The future belongs to those who can speak the language of AI and guide it toward solving real-world problems.

