The internet has been buzzing with discussions about OpenAI's GPT-4 model and its uncanny ability to identify locations from photos. Its capabilities are impressive enough that many users speculated it might be reading EXIF data or user location information. Photos can carry that kind of metadata, but GPT-4's core location identification skills are genuinely remarkable even without it. But just how good is it? Can artificial intelligence outperform human experts at GeoGuessr, the popular geography guessing game?

The Ultimate Test: AI vs. GeoGuessr World Cup Player

To put this question to the test, we need a worthy human competitor. Enter ZigZag, a GeoGuessr World Cup player known for his analytical approach and detailed reasoning behind each guess. This head-to-head competition follows strict rules: no moving, panning, or zooming. Contestants must make their guesses based solely on the initial static image. The goal is to determine whether human intuition and experience can still outperform AI in specialized geographic recognition tasks.

For fairness, GPT-4 was instructed not to use web search capabilities, ensuring it relied solely on its training data—just as human players can't use Google during gameplay. This creates a level playing field where both contestants must draw on their internal knowledge banks.

Round 1: Australian Outback Showdown

The first location presented a rural road scene with distinctive features that both contestants needed to analyze. ZigZag quickly identified several key elements: double white center lines suggesting Oceania or Europe, black-on-yellow chevrons pointing to either the US or Australia, and crucially, red reflectors on guardrails that strongly indicated South Australia. The broken outside white line further reinforced his South Australia guess.

GPT-4, meanwhile, took just under two minutes to process the image and deliver its verdict: South Australia, specifically the Adelaide Hills. Its reasoning was impressively similar to ZigZag's, noting the vegetation, twin solid white center lines, chevron signs, guardrails, and overall landscape characteristics. What's particularly interesting is GPT-4's internal processing—it automatically crops portions of the image to reanalyze specific features in greater detail.
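How GPT-4 performs this cropping internally is not described here. Purely as an illustration of the crop-and-zoom idea, the sketch below works on a tiny grid of pixel values in plain Python. The function names `crop`, `upscale`, and `zoom_on_region` are hypothetical, and nearest-neighbour upscaling is an assumption chosen for simplicity, not a claim about what the model does.

```python
from typing import List, Tuple

Image = List[List[int]]          # rows of pixel values (illustrative only)
Box = Tuple[int, int, int, int]  # (left, top, right, bottom), right/bottom exclusive


def crop(image: Image, box: Box) -> Image:
    """Return the sub-image inside the given box."""
    left, top, right, bottom = box
    return [row[left:right] for row in image[top:bottom]]


def upscale(image: Image, factor: int) -> Image:
    """Enlarge an image by repeating each pixel `factor` times in both directions."""
    enlarged: Image = []
    for row in image:
        wide_row = [pixel for pixel in row for _ in range(factor)]
        # Repeat the widened row `factor` times, copying so rows stay independent.
        enlarged.extend(list(wide_row) for _ in range(factor))
    return enlarged


def zoom_on_region(image: Image, box: Box, factor: int = 2) -> Image:
    """Crop a region of interest and enlarge it for closer inspection."""
    return upscale(crop(image, box), factor)


# A 4x4 "photo"; imagine the central 2x2 block contains a road sign.
photo = [
    [0, 1, 2, 3],
    [4, 5, 6, 7],
    [8, 9, 10, 11],
    [12, 13, 14, 15],
]
sign_region = zoom_on_region(photo, (1, 1, 3, 3), factor=2)
for row in sign_region:
    print(row)
```

The point of the sketch is only that a second look at a small region, such as a chevron sign or a guardrail reflector, can surface details that are hard to make out at full-frame scale.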

When the final scores were revealed, GPT-4 scored 4,889 points to ZigZag's 4,713. Round one goes to artificial intelligence.

Round 2: American Rural Roadways

The second location featured a rural American road. ZigZag's analysis focused on the trees and double yellow lines in the middle, which he identified as distinctly American—even noting that the spacing between the lines looked characteristically American. Based on his experience and the overall landscape, he placed his guess north of Alabama.

GPT-4 spent nearly four minutes on this analysis before guessing southeast Oklahoma, well to the west of ZigZag's placement. The AI identified the double solid yellow line (matching ZigZag's observation), but also noted the absence of a paved shoulder, which it considered typical of minor state highways and US-numbered routes. It further analyzed the mixed pine and broadleaf vegetation, terrain characteristics, and even spotted a barbed wire fence that it associated with private cattle land in the region.

The results: GPT-4 scored 4,319 points versus ZigZag's 3,718. Another round to AI.

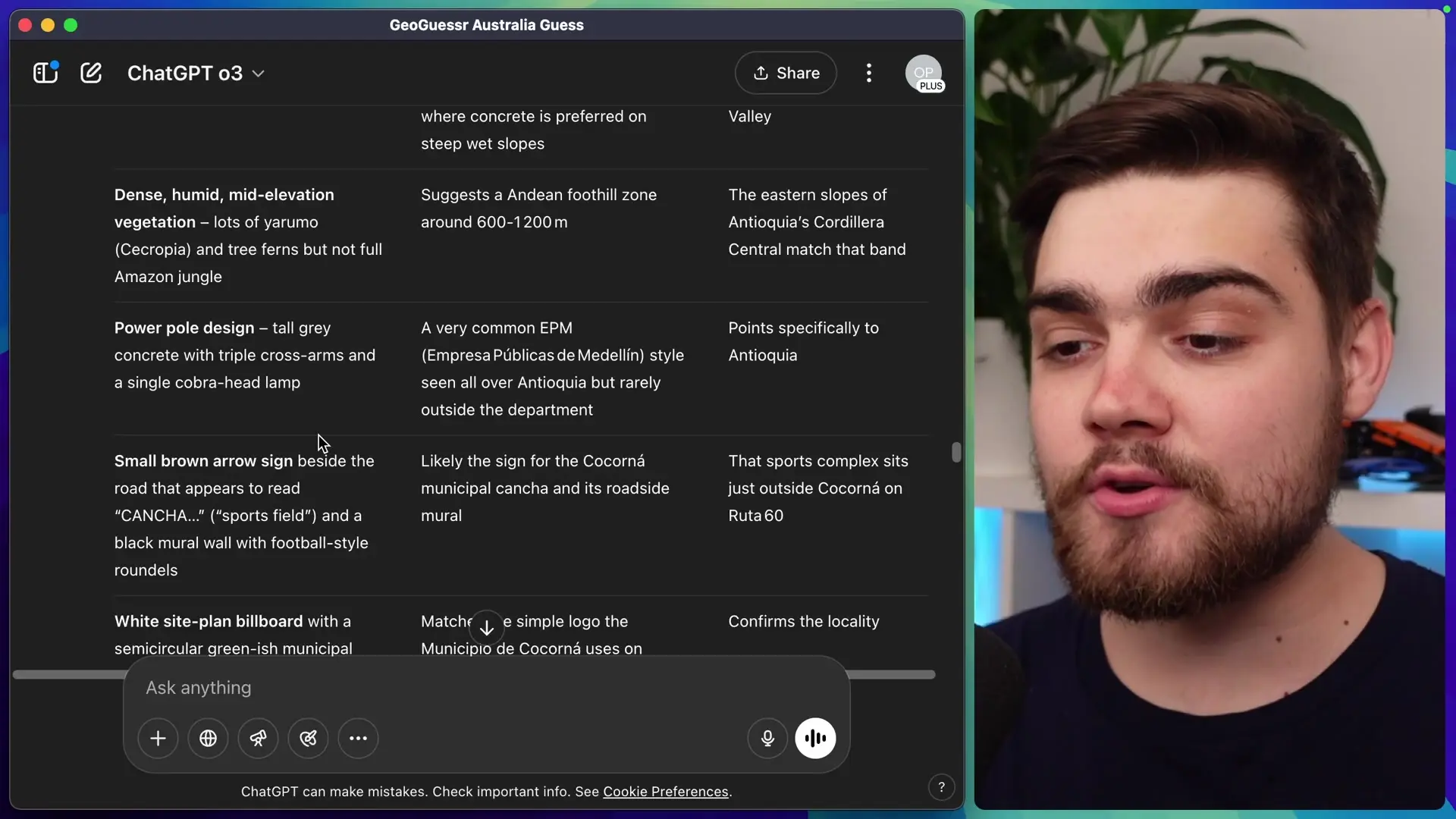

Round 3: Colombian Countryside Challenge

The third location included a sign with text, providing an additional clue. ZigZag quickly identified yellow number plates visible in the image as characteristic of Ecuador or Colombia. The Spanish text on a sign narrowed it further, but his clincher was spotting a black cross on the back of a road sign—a feature he knew was specific to Colombia.

GPT-4 took nearly seven minutes with this complex scene but also placed its guess in Colombia. Its reasoning included identifying right-hand traffic with yellow rear license plates (a Colombian characteristic), concrete roads typical of departmental routes, humid mid-elevation terrain, and distinctive power pole designs. Impressively, it read text on a sign indicating pig rearing, which it noted was typical of certain Colombian regions.

This round went to ZigZag with 3,624 points versus GPT-4's 3,523—the first human victory.

Round 4: Norwegian Landscape

The fourth location offered minimal obvious clues, yet ZigZag confidently identified long outer dashes on the road as distinctively Norwegian, particularly noting their length. Combined with his experience of the general landscape, he placed his guess in northern Norway.

GPT-4 spent over eight minutes analyzing this challenging scene before also concluding it was Norway. Its reasoning drew on glare it attributed to the midnight sun, the compass direction of the view (southwest), tree species, water features, modest hills, and the distinctive road markings that ZigZag had also identified.

The AI won this round with 4,680 points to ZigZag's 4,533.

Round 5: Tunisian Desert Finale

The final round featured a Google Street View car reflection—a key clue for experienced players. ZigZag immediately noted that the antenna style indicated Tunisia, with metal poles confirming his suspicion. The lack of greenery led him to place his guess in southern Tunisia.

GPT-4 responded with remarkable speed on this round, taking just 44 seconds to also identify Tunisia. Its analysis included right-hand traffic with white dashed center lines, narrow asphalt roads, vegetation patterns, and a telecom mast style common in Tunisia.

In a photo finish, ZigZag won by just four points: 4,791 to GPT-4's 4,787.

Final Results: Is GeoGuessr Pro Worth It Against AI?

When the dust settled, GPT-4's total score was 22,198 points versus ZigZag's 21,379, giving the AI a clear overall victory by 819 points. This raises fascinating questions about the value of human expertise in the age of advanced AI systems.

- GPT-4 won 3 out of 5 rounds against a World Cup-level GeoGuessr player

- The AI demonstrated ability to analyze minute details like road markings, vegetation patterns, and infrastructure styles

- GPT-4 could even read and interpret text on signs to inform its geographic reasoning

- The human player still won 2 rounds, showing expertise remains valuable

- The AI's performance suggests location identification is no longer limited to specialized human experts
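For readers who want to check the arithmetic, the per-round scores reported above can be tallied in a few lines. This is a minimal sketch that uses only the round scores as listed in this article; the names `ROUND_SCORES` and `tally` are illustrative.

```python
# Per-round scores as reported in this article: (round, GPT-4, ZigZag).
ROUND_SCORES = [
    ("Round 1: Australia", 4889, 4713),
    ("Round 2: United States", 4319, 3718),
    ("Round 3: Colombia", 3523, 3624),
    ("Round 4: Norway", 4680, 4533),
    ("Round 5: Tunisia", 4787, 4791),
]


def tally(rounds):
    """Return total points and rounds won for each contestant."""
    gpt_total = sum(gpt for _, gpt, _ in rounds)
    human_total = sum(human for _, _, human in rounds)
    gpt_wins = sum(1 for _, gpt, human in rounds if gpt > human)
    human_wins = sum(1 for _, gpt, human in rounds if human > gpt)
    return {
        "gpt_total": gpt_total,
        "human_total": human_total,
        "gpt_wins": gpt_wins,
        "human_wins": human_wins,
        "margin": gpt_total - human_total,
    }


result = tally(ROUND_SCORES)
for name, gpt, human in ROUND_SCORES:
    # A positive difference means GPT-4 took the round.
    print(f"{name}: GPT-4 {gpt} vs ZigZag {human} ({gpt - human:+d})")
print(result)
```

The per-round differences also show how the two human wins were narrow (101 and 4 points), while the AI's largest lead came from the United States round.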

The Implications of AI's GeoGuessr Prowess

While this competition was entertaining, it reveals something profound about modern AI capabilities. Previously, only a select few people worldwide could look at an image and identify its location within minutes. Now, this capability is available to anyone with access to GPT-4.

The technology raises interesting questions about data sources. Does GPT-4's training data include Street View imagery? Google would likely reserve such data for its own AI models like Gemini, but GPT-4's performance suggests it has internalized enormous amounts of geographic information during its training.

For casual players wondering if GeoGuessr Pro is worth it, this demonstration shows that while AI can match or exceed human performance, the game remains enjoyable as a test of personal knowledge and deduction skills. The pro subscription still offers value for those who enjoy the challenge, even if AI can now achieve similar results.

Conclusion: Human Expertise in an AI World

This AI vs. GeoGuessr Pro test demonstrates that advanced AI systems can now match and sometimes exceed specialized human expertise in tasks requiring visual analysis and geographic knowledge. However, the close competition also shows that top human players remain formidable, drawing on intuition and experience in ways that differ from AI's analytical approach.

As AI tools become more accessible, skills once limited to experts are now available to everyone. Whether this democratization of expertise is exciting or concerning depends on your perspective, but one thing is clear: the line between human and artificial intelligence continues to blur in fascinating ways.

