Voice agents are at an inflection point, with both voice models and integration tools improving rapidly. The latest generation of AI voice agents offers more flexibility, accessibility, and personalization than earlier voice systems, and can change how users interact with applications. In this guide, we'll explore OpenAI's latest voice agent technologies and show you how to implement them in your own projects.

Why Voice Agents Are Transforming User Interactions

Voice agents are becoming increasingly important in the AI landscape for three key reasons. First, they are far more flexible than older, more deterministic voice systems: modern voice agents can handle a wider range of intents and cope better with ambiguous requests. Second, they are more accessible than text-based interfaces, letting users interact with applications while commuting, exercising, or doing other activities. Finally, they enable deeper personalization because they can pick up vocal cues such as tone and cadence that text alone cannot convey.

As user awareness and expectations grow, voice interactivity is rapidly becoming an expected feature in popular applications. Companies like Intercom and Perplexity are already leveraging these capabilities to create more engaging user experiences.

Two Approaches to Building Voice Agents

There are two primary approaches to building voice applications today, each with its own advantages and use cases.

1. The Chained Approach

The traditional method involves chaining together three separate components:

- A speech-to-text model that transcribes user speech

- A text-only language model (like GPT-4.1) that processes the transcript and generates a response

- A text-to-speech model that converts the response into audio

Developers appreciate this approach because it allows them to select different models for each part of the pipeline and reuse existing text-based agent infrastructure. However, this method has significant limitations, as much of the emotional nuance and context gets lost during the transcription process.
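The chained pipeline maps naturally onto three swappable stages. This is a minimal sketch, not any vendor's API: the `SpeechToText`, `TextAgent`, and `TextToSpeech` interfaces and the fake implementations are hypothetical stand-ins that you would replace with calls to real transcription, language, and speech models.

```typescript
// Hypothetical stage interfaces: any model or vendor can be plugged in.
interface SpeechToText {
  transcribe(audio: Uint8Array): Promise<string>;
}
interface TextAgent {
  respond(transcript: string): Promise<string>;
}
interface TextToSpeech {
  synthesize(text: string): Promise<Uint8Array>;
}

// One conversational turn: audio in -> transcript -> reply text -> audio out.
async function chainedTurn(
  audio: Uint8Array,
  stt: SpeechToText,
  agent: TextAgent,
  tts: TextToSpeech,
): Promise<{ transcript: string; reply: string; audio: Uint8Array }> {
  const transcript = await stt.transcribe(audio);
  // Only the transcript reaches the agent; tone and cadence are dropped here.
  const reply = await agent.respond(transcript);
  const replyAudio = await tts.synthesize(reply);
  return { transcript, reply, audio: replyAudio };
}

// Hypothetical fakes so the sketch runs without any network calls:
// "audio" is just character codes.
const fakeStt: SpeechToText = {
  transcribe: async (audio) => String.fromCharCode(...Array.from(audio)),
};
const echoAgent: TextAgent = {
  respond: async (t) => `You said: ${t}`,
};
const fakeTts: TextToSpeech = {
  synthesize: async (text) => new Uint8Array(Array.from(text, (c) => c.charCodeAt(0))),
};
```

Using a different model for any stage only requires another object that satisfies the same interface, which is the flexibility described above; the comment inside `chainedTurn` marks where emotional nuance is lost.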

2. Speech-to-Speech Models

The more advanced approach uses speech-to-speech models that understand audio natively, reason over what has been said, and produce audio output directly. These models power features like advanced voice mode in ChatGPT and OpenAI's Realtime API.

Speech-to-speech models offer two major advantages. They are fast, because a single model replaces three sequential hops between separate transcription, language, and speech models. They are also more emotionally intelligent, because they skip transcription, which inherently discards nuances of speech like tone and emotion.
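A toy model makes the transcription loss easy to see. This is a deliberate simplification: real audio models receive raw audio, not labeled `tone` or `wordsPerMinute` fields, and the `SpokenTurn` type and both input functions are hypothetical names used only for illustration.

```typescript
// Hypothetical summary of what a user turn carries before any processing.
interface SpokenTurn {
  words: string; // what was said
  tone: "calm" | "frustrated" | "excited"; // how it was said (toy label)
  wordsPerMinute: number; // cadence (toy measurement)
}

// Chained pipeline: the reasoning model only ever sees the transcript.
function chainedModelInput(turn: SpokenTurn): string {
  return turn.words;
}

// Speech-to-speech: the model consumes the audio itself, so cues such as
// tone and cadence stay available (modelled here as the whole turn).
function speechToSpeechModelInput(turn: SpokenTurn): SpokenTurn {
  return turn;
}

// Two turns with identical words but very different delivery.
const calm: SpokenTurn = { words: "Fine, cancel my order.", tone: "calm", wordsPerMinute: 120 };
const upset: SpokenTurn = { words: "Fine, cancel my order.", tone: "frustrated", wordsPerMinute: 190 };
```

Here `chainedModelInput(calm)` and `chainedModelInput(upset)` are the same string, so a text-only model cannot tell the two callers apart, while the speech-to-speech inputs still differ in tone and cadence.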

Recent OpenAI Voice Agent Innovations

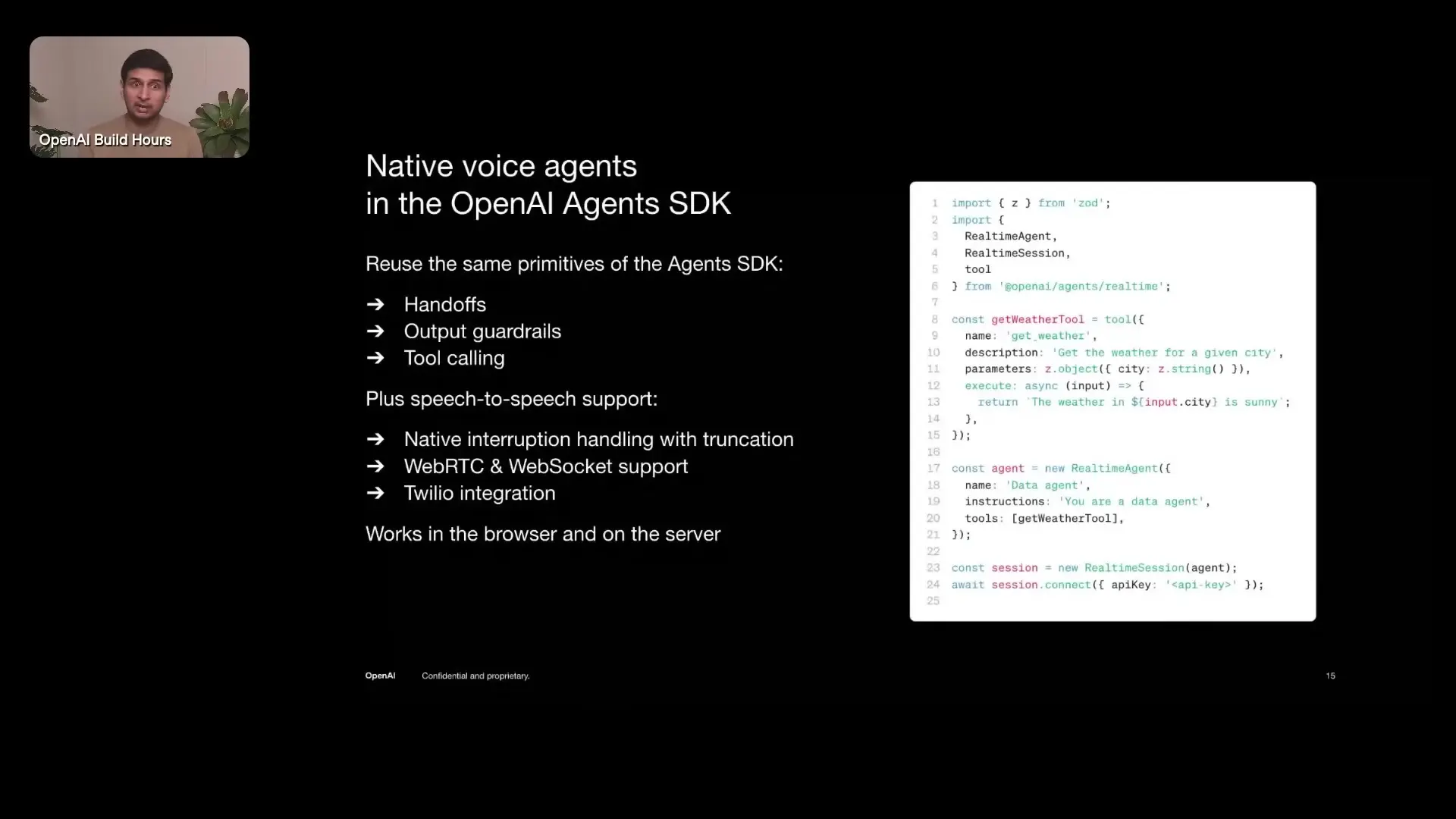

OpenAI has released several key updates that make voice agent development more accessible and powerful:

- TypeScript version of the Agents SDK with first-class support for the Realtime API

- Support for realtime models in the Traces tab of the platform dashboard, for easier debugging

- An improved Realtime API model snapshot with more accurate instruction following and tool calling

- A new speed parameter for finer control over the AI's speaking pace

- Enhanced handoff capabilities for routing between specialized agents
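The speed parameter is most useful when the pace follows the content. Below is a minimal sketch of choosing a value per content type and user preference. The bounds (0.25 to 1.5, default 1.0) are our assumption based on the Realtime API reference at the time of writing, and `ContentKind`, `paceByContent`, and `chooseSpeed` are hypothetical names, not SDK features.

```typescript
// Assumed bounds for the realtime speed setting (1.0 = normal pace).
// Check the current API reference before relying on these numbers.
const MIN_SPEED = 0.25;
const MAX_SPEED = 1.5;
const DEFAULT_SPEED = 1.0;

// Hypothetical content categories an app might distinguish.
type ContentKind = "small_talk" | "instructions" | "confirmation_code";

// Hypothetical multipliers: slow down for details users must remember,
// speed up slightly for routine chatter.
const paceByContent: Record<ContentKind, number> = {
  small_talk: 1.1,
  instructions: 0.9,
  confirmation_code: 0.75,
};

// Combine the content pace with the user's saved preference, then clamp
// the result into the assumed valid range.
function chooseSpeed(kind: ContentKind, userPreference = DEFAULT_SPEED): number {
  const raw = paceByContent[kind] * userPreference;
  return Math.min(MAX_SPEED, Math.max(MIN_SPEED, raw));
}
```

How the returned value reaches the session depends on the SDK or API version you use, so that step is left out here; the clamp keeps a fast-talking user preference from producing an out-of-range value.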

Understanding the Agents SDK

The TypeScript Agents SDK supports all the same primitives as the Python version, including a crucial feature called handoffs. Handoffs allow one agent to delegate control to another in a conversation flow, enabling chaining or routing across specialized agents in a multi-agent network.

This is particularly useful for building systems with domain-specific or language-specific behaviors, such as routing between support and sales agents in a customer service application or between different language agents in a translation service.
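To see what a handoff does mechanically, the sketch below models a tiny support/sales network in plain TypeScript. It is an illustration, not the SDK's implementation: `AgentSpec`, `handoffTools`, and `applyToolCall` are hypothetical, and the `transfer_to_<agent>` tool names only loosely follow the naming convention the Agents SDKs use by default.

```typescript
// Hypothetical minimal model of a multi-agent network with handoffs.
interface AgentSpec {
  name: string;
  instructions: string;
  handoffs: AgentSpec[];
}

// Derive a tool name for a handoff target (illustrative naming only).
function handoffToolName(target: AgentSpec): string {
  return `transfer_to_${target.name.toLowerCase().replace(/\s+/g, "_")}`;
}

// The active agent advertises one tool per handoff it is allowed to make.
function handoffTools(agent: AgentSpec): string[] {
  return agent.handoffs.map(handoffToolName);
}

// When the model calls a handoff tool, control moves to the target agent.
// Any other tool name leaves the current agent in charge.
function applyToolCall(active: AgentSpec, toolName: string): AgentSpec {
  const target = active.handoffs.find((a) => handoffToolName(a) === toolName);
  return target ?? active;
}

const support: AgentSpec = { name: "Support", instructions: "Resolve technical issues.", handoffs: [] };
const sales: AgentSpec = { name: "Sales", instructions: "Answer pricing questions.", handoffs: [] };
const triage: AgentSpec = {
  name: "Triage",
  instructions: "Route the caller to support or sales.",
  handoffs: [support, sales],
};
```

Treating a handoff as just another tool call means the model decides when to route using the same mechanism it already uses for tools, and the set of allowed transfers is fixed by each agent's `handoffs` list.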

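```typescript
import { RealtimeAgent, RealtimeSession } from "@openai/agents/realtime";

// Initialize a speech-to-speech agent with the RealtimeAgent constructor.
// searchTool and scheduleTool stand in for tools defined elsewhere in the app.
const agent = new RealtimeAgent({
  name: "Remodeling Assistant",
  instructions: "You are a helpful assistant specializing in home remodeling advice.",
  tools: [searchTool, scheduleTool],
  voice: "alloy",
});

// The realtime model is configured on the session, not on the agent
const session = new RealtimeSession(agent);

// Start the conversation (in the browser, pass a short-lived client key
// minted by your server rather than your main API key)
await session.connect({ apiKey: "<ephemeral-client-key>" });
```

With the SDK, developers can turn any agent into a real-time agent with minimal code changes. The SDK automatically handles transport details, using WebRTC in browsers and WebSockets in other environments.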
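The transport choice can be illustrated with a small environment check. This is a minimal sketch of the idea, not the SDK's own detection logic: `pickTransport` is a hypothetical helper, and it assumes that the presence of `window` and `RTCPeerConnection` is a good enough signal for a browser with WebRTC support.

```typescript
type Transport = "webrtc" | "websocket";

// Hypothetical helper: prefer WebRTC when the runtime exposes the browser
// WebRTC API, otherwise fall back to WebSockets. The globals are injectable
// so the check can be exercised outside a browser.
function pickTransport(
  globals: Record<string, unknown> = globalThis as unknown as Record<string, unknown>,
): Transport {
  const hasWindow = typeof globals["window"] !== "undefined";
  const hasWebRTC = typeof globals["RTCPeerConnection"] === "function";
  return hasWindow && hasWebRTC ? "webrtc" : "websocket";
}
```

Passing the globals in as a parameter keeps the function testable: a server-side runtime such as Node.js normally has neither global and gets `"websocket"`.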

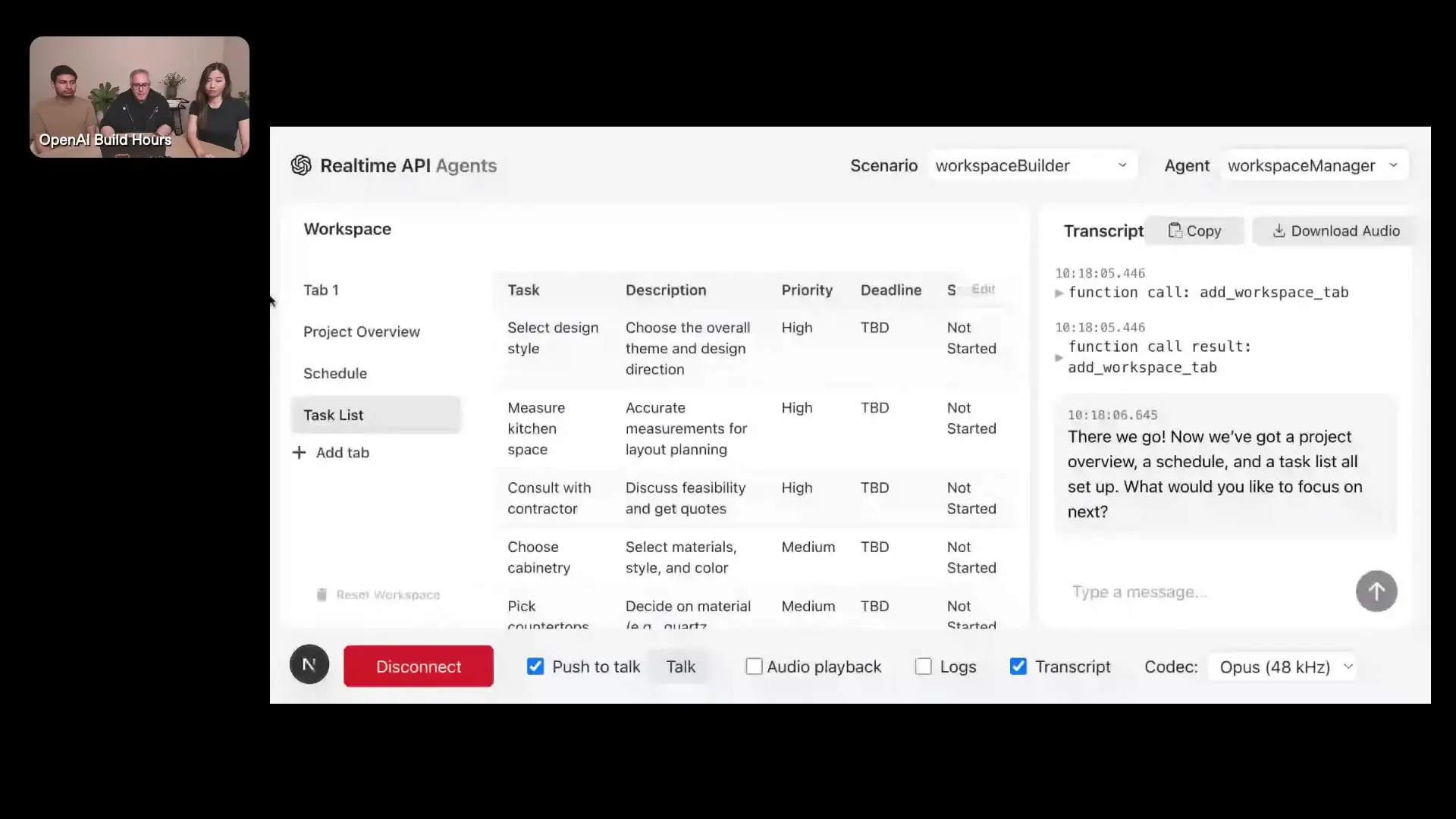

Implementing Handoffs Between Specialized Agents

Handoffs are a powerful primitive that allow for creating networks of specialized agents. For example, you might have a greeter agent that handles initial customer interactions before handing off to specialized agents for specific domains like technical support or sales.

```typescript
import { RealtimeAgent } from "@openai/agents/realtime";

// The specialist agent the greeter can delegate to
const mathTutorAgent = new RealtimeAgent({
  name: "Math Tutor",
  handoffDescription: "Specialist agent for math questions",
  instructions: "You are a patient math tutor. Explain each step clearly.",
});

// Define a greeter agent that can hand off to the specialist
const greeterAgent = new RealtimeAgent({
  name: "Greeter",
  instructions: "You are a friendly greeter. If the user asks about math, hand off to the math tutor.",
  handoffs: [mathTutorAgent],
});

// The SDK implements each handoff as a tool call under the hood
```

Best Practices for Voice Agent Development

When developing voice agents, consider these best practices to ensure a natural and effective user experience:

- Design for conversation flow rather than command-response interactions

- Use the speed parameter to adjust speaking pace based on content type and user preferences

- Leverage handoffs to create specialized agents for different domains

- Implement proper error handling and fallback mechanisms for misunderstood inputs

- Use the traces dashboard for debugging real-time conversations
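Fallback handling for misunderstood inputs can be as simple as a bounded reprompt policy. This is a minimal sketch under our own assumptions: the `Interpretation` shape, the 0.6 confidence threshold, and the limit of two reprompts are hypothetical defaults, and "escalate" stands for whatever your app does next, such as a handoff to a human or another agent.

```typescript
// Hypothetical result of interpreting one user utterance.
interface Interpretation {
  intent: string | null; // null when no intent could be matched
  confidence: number; // 0..1, from whatever classifier you use
}

type NextAction =
  | { kind: "proceed"; intent: string }
  | { kind: "reprompt"; message: string }
  | { kind: "escalate" };

// Proceed on confident matches, ask the user to rephrase a limited number
// of times, then escalate instead of looping forever.
function decideNext(
  result: Interpretation,
  failedAttempts: number,
  minConfidence = 0.6,
  maxReprompts = 2,
): NextAction {
  if (result.intent !== null && result.confidence >= minConfidence) {
    return { kind: "proceed", intent: result.intent };
  }
  if (failedAttempts < maxReprompts) {
    return { kind: "reprompt", message: "Sorry, I didn't catch that. Could you say it another way?" };
  }
  return { kind: "escalate" };
}
```

Capping reprompts matters more in voice than in text, because repeated "I didn't catch that" replies quickly break the conversational flow the first best practice aims for.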

Future of Voice Agent Development

The field of voice agent development is evolving rapidly. As models continue to improve, we can expect even more natural-sounding voices, better emotional intelligence, and more sophisticated reasoning capabilities. The gap between AI voice agents and human representatives will continue to narrow, creating opportunities for more seamless and satisfying user experiences across a wide range of applications.

Voice agents represent a fundamental shift in how we interact with technology, offering a more natural, accessible, and emotionally aware interface than traditional text-based interactions. By leveraging OpenAI's latest tools and best practices, developers can create voice experiences that truly delight users and solve real-world problems in novel ways.

Conclusion

Voice agents are rapidly becoming an essential component of modern applications. With OpenAI's latest speech-to-speech models, TypeScript SDK, and Realtime API, developers now have powerful tools to create emotionally intelligent voice interfaces that can transform user experiences. By understanding the different approaches to voice agent development and implementing best practices, you can build voice agents that feel natural, responsive, and truly helpful to your users.

