Text-to-video generation has become one of the most exciting developments in artificial intelligence, with tools like Sora and Veo 3 demonstrating impressive capabilities. While these commercial systems rely on massive computational resources, you can build your own AI video generator using ComfyUI, an open-source tool that makes advanced AI workflows accessible to anyone with the right setup.

What is ComfyUI?

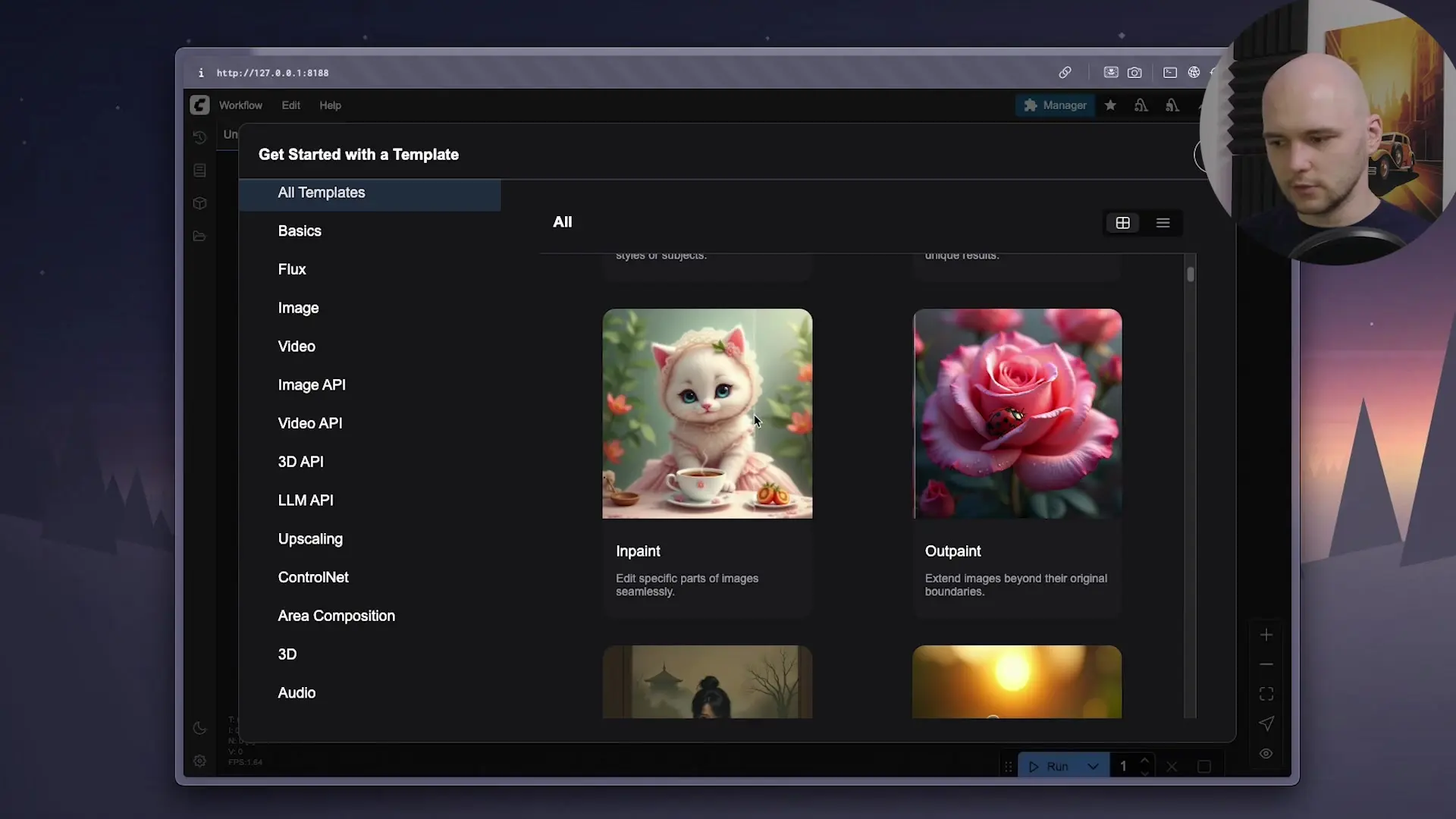

ComfyUI is a powerful node-based interface for generative AI that allows you to create custom workflows by connecting different components. It provides a visual way to design complex AI generation pipelines without writing code. The node-based system makes it possible to experiment with different models, parameters, and processing steps to achieve the exact results you want.

With ComfyUI, you can create workflows for image generation, video synthesis, audio processing, and even 3D content creation. The flexibility of this tool makes it perfect for building your own text-to-video generator.

Setting Up Your GPU Environment

Before diving into ComfyUI, it's important to understand that generative AI tasks require significant GPU resources. Most consumer laptops (especially those without NVIDIA graphics cards) can't handle the computational demands efficiently. For this reason, we'll set up a remote GPU server using DigitalOcean's GPU Droplets.

Creating a GPU Droplet on DigitalOcean

- Sign up for a DigitalOcean account if you don't have one

- Navigate to the Droplets section and select GPU Droplets

- Choose the NVIDIA RTX 4000 Ada option (or higher if you need more performance)

- Name your droplet (e.g., "ComfyUI-Server")

- Complete the setup and create your droplet

Note that GPU droplets incur costs even when powered off. For occasional use, it's recommended to save a snapshot of your configured droplet, destroy the instance when not in use, and recreate it from the snapshot when needed.
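To see why the snapshot-and-destroy approach pays off, a rough cost comparison helps. The sketch below uses placeholder prices: the hourly rate, snapshot size, and per-GB snapshot rate are assumptions for illustration, not actual DigitalOcean pricing, so substitute the figures from your own billing page.

```python
HOURS_PER_MONTH = 730  # average month: 8760 hours / 12


def droplet_cost(hourly_rate_usd: float, hours_billed: float) -> float:
    """Cost for the hours a droplet exists (running or powered off)."""
    return hourly_rate_usd * hours_billed


def snapshot_cost(size_gb: float, rate_per_gb_month_usd: float) -> float:
    """Storage cost of keeping one snapshot for a month."""
    return size_gb * rate_per_gb_month_usd


# Placeholder prices: assumptions for illustration only.
HOURLY_RATE_USD = 1.00
SNAPSHOT_SIZE_GB = 100
SNAPSHOT_RATE_USD = 0.05

# Option 1: the droplet exists all month, even if it is mostly idle.
always_on = droplet_cost(HOURLY_RATE_USD, HOURS_PER_MONTH)

# Option 2: the droplet exists for 20 hours; a snapshot is stored in between.
on_demand = droplet_cost(HOURLY_RATE_USD, 20) + snapshot_cost(
    SNAPSHOT_SIZE_GB, SNAPSHOT_RATE_USD
)

print(f"Droplet kept all month: ${always_on:.2f}")
print(f"20 hours of use plus snapshot: ${on_demand:.2f}")
```

With these placeholder numbers the on-demand option is far cheaper, but the gap narrows as your usage hours grow.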

Connecting to Your GPU Server

Once your GPU droplet is running, connect to it via SSH using your terminal:

`ssh root@your_droplet_ip`

Installing ComfyUI

With access to your server, you can now install ComfyUI and its dependencies:

- Install pip3: `apt-get update && apt-get install -y python3-pip`

- Install the ComfyUI CLI: `pip3 install comfy-cli`

- Install ComfyUI: `comfy install`

- Launch ComfyUI: `comfy launch`

Once launched, ComfyUI will be available on localhost port 8188 on your remote server. To access it from your local machine, you'll need to create an SSH tunnel.

Creating an SSH Tunnel

Open a new terminal window on your local machine and run:

`ssh -L 8188:localhost:8188 root@your_droplet_ip`

This command forwards port 8188 on your local machine to port 8188 on the remote server, allowing you to access the ComfyUI interface by opening http://localhost:8188 in your browser.
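If you end up forwarding more than one port, it can help to generate the tunnel command rather than retype it. The following is a minimal sketch: `tunnel_command` is a hypothetical helper, and the `-N` flag (which tells `ssh` not to run a remote command) is an addition that the command above does not use.

```python
import shlex


def tunnel_command(host: str, ports: list, user: str = "root") -> list:
    """Build an ssh argument list that forwards each local port to the
    same port on the remote host."""
    args = ["ssh", "-N"]  # -N: forward ports only, no remote shell
    for port in ports:
        args += ["-L", f"{port}:localhost:{port}"]
    args.append(f"{user}@{host}")
    return args


# Print the command so it can be pasted into a local terminal.
print(shlex.join(tunnel_command("your_droplet_ip", [8188])))
# prints: ssh -N -L 8188:localhost:8188 root@your_droplet_ip
```

Passing several ports, for example `[8188, 3000]`, produces a single command with one `-L` option per port.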

Setting Up Your Text-to-Video Workflow

When you first open ComfyUI, you'll see various sample workflows. For our text-to-video generator, we'll focus on the WAN 2.2 5-billion-parameter (5B) video generation workflow.

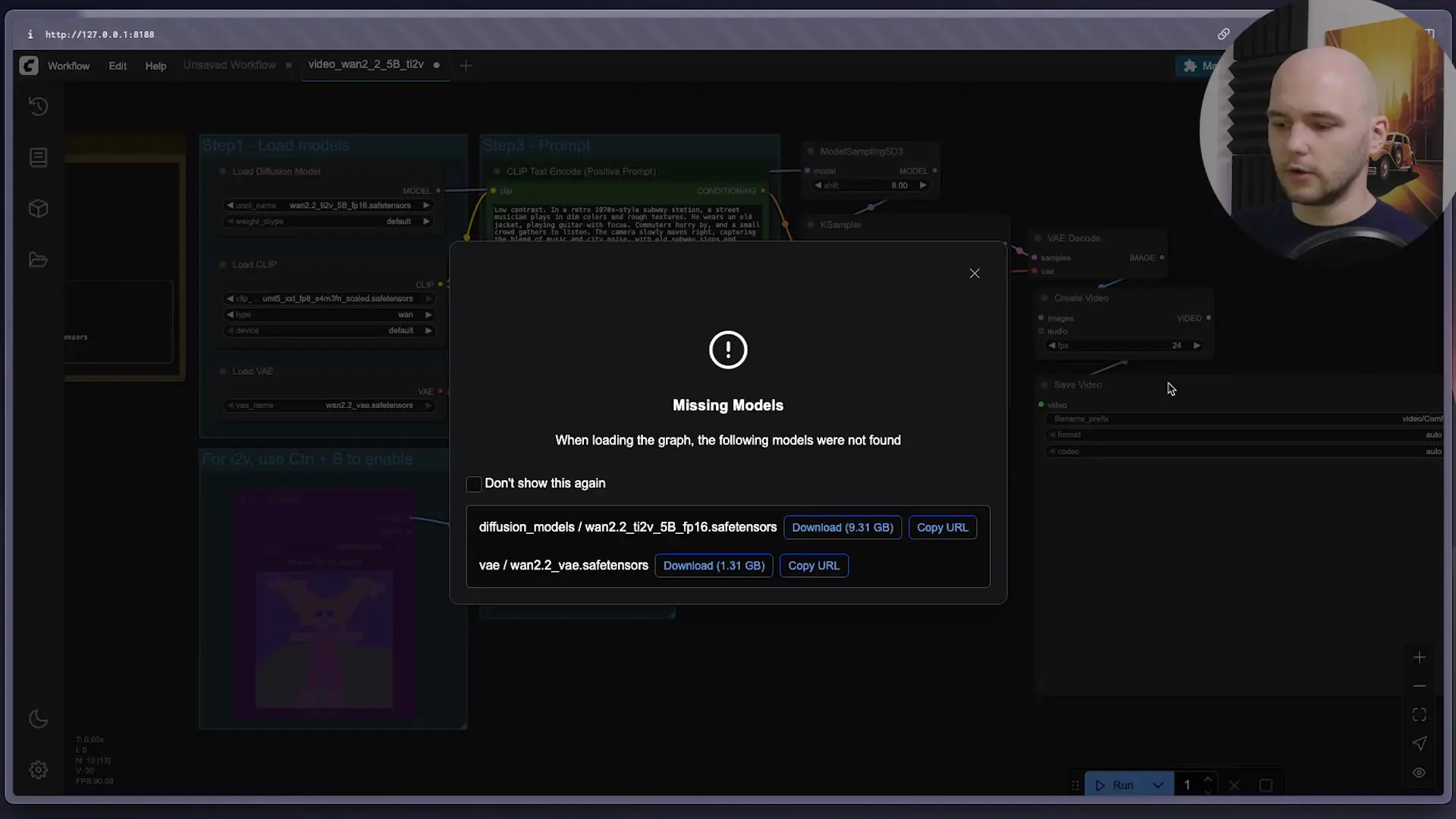

Downloading Required Models

Before using the workflow, you'll need to download the required AI models. ComfyUI will show warnings about missing models that need to be downloaded.

To download a model, copy its URL from the warning message, then use the ComfyUI CLI on your server:

`comfy model download --url [model_url]`

When prompted, specify the correct folder path for the model (e.g., "diffusion_models" or "VAE") as indicated in the warning message.
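To keep track of where each file should end up, the mapping from a model URL and folder name to a destination path can be sketched as follows. The URL is a placeholder, `model_destination` is a hypothetical helper, and the `models/<folder>/<filename>` layout is an assumption about a default ComfyUI installation.

```python
from pathlib import PurePosixPath
from urllib.parse import urlparse


def model_destination(model_url: str, folder: str) -> str:
    """Derive the relative path a downloaded model is expected to occupy.

    The filename is taken from the URL path; any query string is ignored.
    """
    filename = PurePosixPath(urlparse(model_url).path).name
    return str(PurePosixPath("models") / folder / filename)


# Placeholder URL: replace with the one shown in the ComfyUI warning.
url = "https://example.com/files/video_model.safetensors?download=true"
print(model_destination(url, "diffusion_models"))
# prints: models/diffusion_models/video_model.safetensors
```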

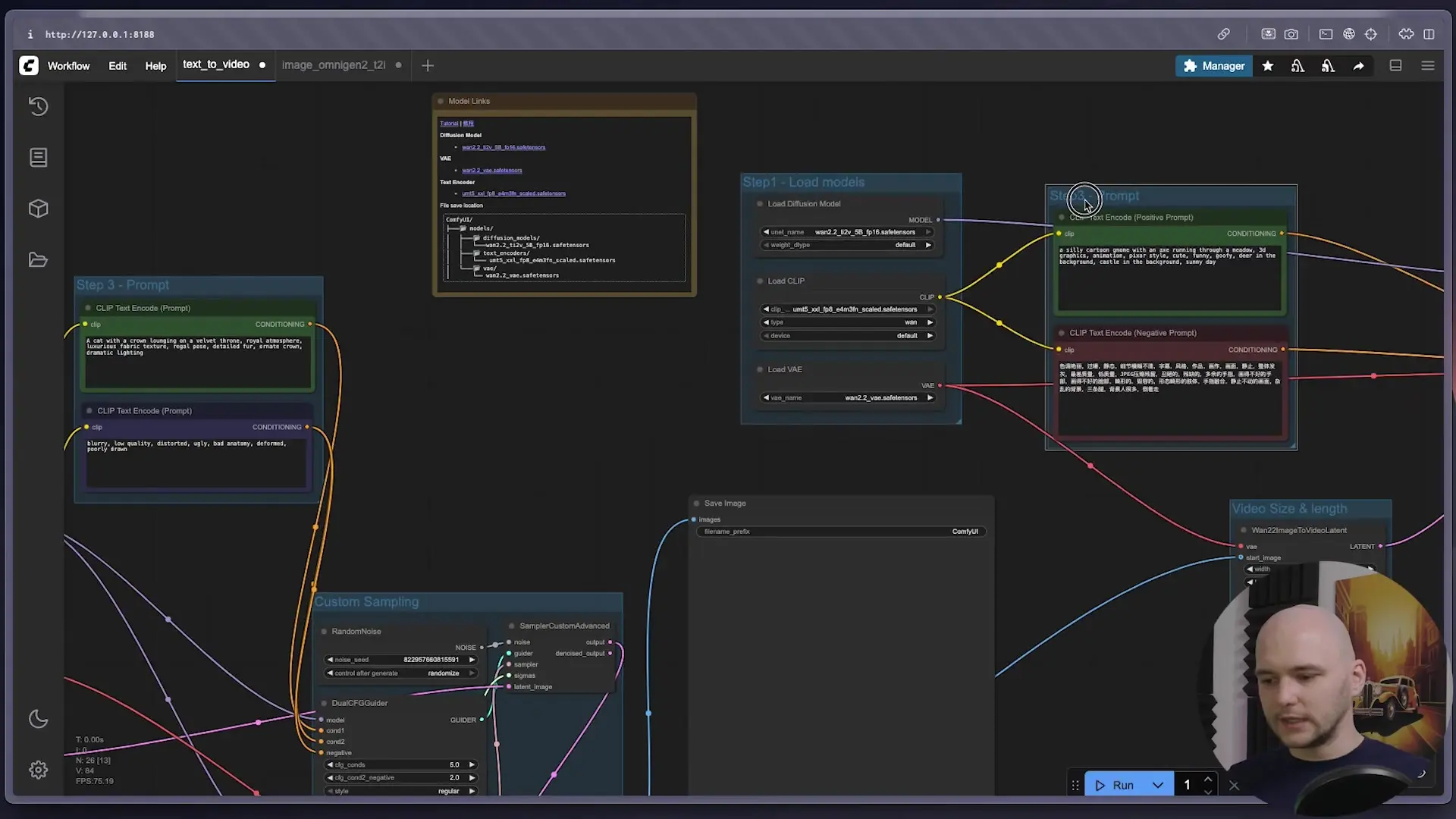

Creating an Enhanced Text-to-Video Workflow

While the basic video generation workflow works, you can achieve better results by combining text-to-image and image-to-video models. Here's how to create an enhanced workflow:

- Start with the WAN video generation workflow

- Add the OmniGen2 text-to-image workflow (you'll need to download its models too)

- Connect the output of the image generation workflow to the input of the video generation workflow

- Create common prompt nodes to feed both workflows with the same text input

It's important to note that you can't simply reuse the same encoded text for both workflows, as they use different text encoder models. Instead, create common text prompt nodes and connect them to each workflow's respective text encoder.
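The same idea applies if you drive the combined workflow from a script instead of the graph editor: one prompt string is written into each workflow's own text encoder node. The sketch below assumes the workflow is available in ComfyUI's API JSON format (a dictionary keyed by node ID, where each node has a `class_type` and `inputs`). The node IDs and the use of `CLIPTextEncode` for both encoders are assumptions for illustration; check your own export for the actual values.

```python
import copy


def set_shared_prompt(workflow: dict, prompt_text: str, encoder_ids: list) -> dict:
    """Return a copy of the workflow with prompt_text written into the
    `text` input of every listed encoder node."""
    patched = copy.deepcopy(workflow)  # leave the caller's workflow untouched
    for node_id in encoder_ids:
        patched[node_id]["inputs"]["text"] = prompt_text
    return patched


# Hypothetical fragment of an exported workflow: two encoder nodes, each
# wired to a different text encoder model (nodes "38" and "40", not shown).
workflow = {
    "6": {"class_type": "CLIPTextEncode", "inputs": {"text": "", "clip": ["38", 0]}},
    "12": {"class_type": "CLIPTextEncode", "inputs": {"text": "", "clip": ["40", 0]}},
}

patched = set_shared_prompt(workflow, "a red fox running through snow", ["6", "12"])
print(patched["6"]["inputs"]["text"])
print(patched["12"]["inputs"]["text"])
```

Each node still encodes the text with its own model; only the raw string is shared.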

Building a Web Interface for Your AI Video Generator

Once your workflow is working correctly, you can create a user-friendly web interface to make it accessible without requiring direct interaction with ComfyUI. While you could build a custom web app from scratch, we'll use ViewComfy, a ready-made Next.js application that integrates with ComfyUI workflows.

Setting Up ViewComfy

- Clone the ViewComfy repository: `git clone https://github.com/ViewComfy/ViewComfy.git`

- Navigate to the project directory: `cd ViewComfy`

- Install dependencies: `npm install`

- Start the application: `npm run dev`

This will start ViewComfy on port 3000. Create another SSH tunnel to access it locally:

`ssh -L 3000:localhost:3000 root@your_droplet_ip`

Importing Your Workflow

From ComfyUI, export your workflow as an API configuration (JSON file). Then, in ViewComfy:

- Open http://localhost:3000 in your browser

- Upload your workflow JSON file

- Give your workflow a name

- Save the changes

- Go to the playground to test your workflow

Now you have a clean, user-friendly interface where you can simply enter text prompts and generate videos without interacting with the node-based interface.
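The exported API JSON can also be submitted to ComfyUI directly, which is useful for testing outside any front end. The sketch below assumes ComfyUI is reachable at `http://localhost:8188` (for example through the SSH tunnel) and posts the workflow to its `/prompt` endpoint. The file name `workflow_api.json` and both helper names are hypothetical.

```python
import json
import urllib.request


def build_prompt_request(workflow: dict, base_url: str = "http://localhost:8188"):
    """Build the POST request that queues a workflow in ComfyUI."""
    body = json.dumps({"prompt": workflow}).encode("utf-8")
    return urllib.request.Request(
        f"{base_url}/prompt",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def queue_workflow(workflow: dict, base_url: str = "http://localhost:8188") -> dict:
    """Send the request and return ComfyUI's parsed JSON response."""
    with urllib.request.urlopen(build_prompt_request(workflow, base_url)) as resp:
        return json.load(resp)


# Usage (requires a running ComfyUI instance, so it is left commented out):
# with open("workflow_api.json") as f:
#     result = queue_workflow(json.load(f))
# print(result)
```

Separating request construction from sending keeps the payload easy to inspect before anything reaches the GPU server.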

Optimizing Your AI Video Generator

To improve the quality of your generated videos, consider these optimization strategies:

- Increase the number of sampling steps for better quality (at the cost of longer generation time)

- Experiment with different prompt engineering techniques to guide the AI more effectively

- Try different model combinations - some models work better for certain types of content

- Adjust video resolution and frame count based on your GPU capabilities

- For production use, consider upgrading to a more powerful GPU instance
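Step count and frame count can be explored from a script by editing an exported API JSON workflow. This is a minimal sketch under stated assumptions: it treats the sampler as a `KSampler` node with a `steps` input, which may not match the sampler node in your workflow, and the node ID and values are made up for illustration.

```python
import copy


def set_sampling_steps(workflow: dict, steps: int) -> dict:
    """Return a copy of the workflow with `steps` set on every KSampler node."""
    patched = copy.deepcopy(workflow)
    for node in patched.values():
        if node.get("class_type") == "KSampler":
            node["inputs"]["steps"] = steps
    return patched


def clip_seconds(frame_count: int, fps: float) -> float:
    """Length of the generated clip in seconds."""
    return frame_count / fps


# Hypothetical workflow fragment containing a single sampler node.
workflow = {"3": {"class_type": "KSampler", "inputs": {"steps": 20, "cfg": 5.0}}}

slower_but_finer = set_sampling_steps(workflow, 30)
print(slower_but_finer["3"]["inputs"]["steps"])  # prints: 30
print(clip_seconds(48, 24))  # prints: 2.0
```

Changing one parameter at a time makes it easier to tell which setting actually improved the output.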

Conclusion

Building your own AI video generator with ComfyUI gives you complete control over the generation process and allows you to create custom workflows tailored to your specific needs. While the results may not match commercial solutions like Sora that use vastly more computational resources, this DIY approach provides an excellent foundation for understanding and experimenting with text-to-video AI technology.

ComfyUI's node-based interface makes it accessible to users without deep programming knowledge, while still offering the flexibility and power that advanced users need. By combining different models and carefully crafting your workflows, you can create impressive text-to-video results even with relatively modest GPU resources.

As AI technology continues to evolve, tools like ComfyUI will become increasingly powerful, enabling more creators to build sophisticated AI-powered applications without relying on proprietary services.
