A critical security vulnerability was recently discovered in Google Chrome's ANGLE component. What makes the discovery noteworthy isn't just the bug itself: it was found entirely by Google's internal AI system, Big Sleep. Big Sleep is a collaboration between Google DeepMind and Project Zero researchers. Its success marks a significant advance in how security vulnerabilities are identified and highlights the growing role of artificial intelligence in cybersecurity.

Understanding the Vulnerability: Use-After-Free in Chrome's ANGLE Component

The vulnerability in question is CVE-2025-9478, a use-after-free bug in ANGLE (Almost Native Graphics Layer Engine). Chrome uses ANGLE to render GPU-accelerated 2D and 3D graphics. ANGLE takes OpenGL ES calls and translates them into whatever native graphics API the platform provides, such as Direct3D, Vulkan, Metal, or desktop OpenGL. OpenGL ES is the API that WebGL, the JavaScript API for rendering graphics, is built on. When you view graphics-heavy content in Chrome, ANGLE sits between the page's WebGL calls and your computer's graphics hardware.

A use-after-free vulnerability occurs when a program continues to use a pointer after the memory it references has been freed. This can lead to memory corruption, crashes, and potentially allow attackers to execute arbitrary code. These vulnerabilities are particularly dangerous because they can often be exploited to gain unauthorized access to systems.

The Mechanics of Use-After-Free Vulnerabilities

To understand how use-after-free vulnerabilities work, consider a simplified example with two structures in memory: a 'cat' structure and a 'dog' structure. Their internal layouts differ. The cat structure has an integer ID followed by a pointer, while the dog structure has the pointer first, followed by an integer ID.

The vulnerability arises when:

- A program creates an instance of one structure (e.g., a dog)

- The program later frees (deletes) that instance

- The same memory space is then allocated for a different structure (e.g., a cat)

- The program attempts to use the original pointer (to the dog), which now points to memory containing a cat structure

Since the internal layouts of these structures differ, the program incorrectly interprets the data. When the program tries to use a member of the original structure (like a function pointer), it might instead be accessing the ID field of the new structure. If an attacker can control the values in the new structure, they can potentially cause the program to execute arbitrary code.
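The layout mismatch described above can be checked directly with `offsetof`. The sketch below mirrors the cat and dog structures. Using a function-pointer type for the pointer member is an assumption, chosen so that the member would be callable. On a typical 64-bit platform such as x86-64, both structures occupy 16 bytes: the pointer takes 8 bytes, the int takes 4, and padding fills the remaining 4. Equal sizes make it more likely that an allocator hands one structure's freed memory to the other.

```cpp
#include <cstddef>  // offsetof, std::size_t

// Same field order as the cat/dog example; the function-pointer member type
// is an assumption chosen so the member is callable.
struct Cat {
    int id;              // first field
    void (*function)();  // second field (after any alignment padding)
};

struct Dog {
    void (*function)();  // first field
    int id;              // second field
};

// Byte offset of each field from the start of its structure.
constexpr std::size_t kCatIdOffset = offsetof(Cat, id);
constexpr std::size_t kCatFunctionOffset = offsetof(Cat, function);
constexpr std::size_t kDogFunctionOffset = offsetof(Dog, function);

// A stale Dog* that reads `function` from memory now holding a Cat reads the
// bytes at kDogFunctionOffset, which is exactly where Cat::id is stored.
static_assert(kDogFunctionOffset == kCatIdOffset, "Dog::function overlaps Cat::id");
```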

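```cpp
// Example of structures with different layouts.
// Illustrative only: this program has undefined behavior by design.
#include <cstdio>

struct Cat {
    int id;              // First field is an integer
    void (*function)();  // Second field is a function pointer
};

struct Dog {
    void (*function)();  // First field is a function pointer
    int id;              // Second field is an integer
};

static void bark() { std::puts("woof"); }

int main() {
    // Vulnerable code path
    Dog* dog = new Dog();
    dog->function = bark;
    delete dog;            // Memory is freed, but the dog pointer still holds its address

    Cat* cat = new Cat();  // The allocator may hand back the same memory (same size)
    cat->id = 0x41414141;  // A value an attacker might be able to influence

    // dog now dangles; if the memory was reused, it points at a Cat
    dog->function();       // Undefined behavior: may read cat->id (plus padding) as a function pointer and crash
    delete cat;
}
```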
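The example above assumes that the freed dog's memory is reused for the cat. Real allocators are far more complex than this, but many of them keep recently freed chunks and hand them out again for the next request of a similar size. The hypothetical `ToyAllocator` below is a minimal free-list allocator for fixed-size chunks, not how Chrome manages memory. It makes that reuse deterministic so the aliasing is easy to see.

```cpp
#include <cstddef>
#include <vector>

// Hypothetical fixed-size allocator: freed chunks go on a free list and are
// returned again, most recently freed first (LIFO).
class ToyAllocator {
public:
    ToyAllocator(std::size_t chunks, std::size_t chunkSize)
        : pool_(chunks * chunkSize), chunkSize_(chunkSize), next_(0), capacity_(chunks) {}

    void* allocate() {
        if (!freeList_.empty()) {            // reuse the most recently freed chunk
            void* chunk = freeList_.back();
            freeList_.pop_back();
            return chunk;
        }
        if (next_ == capacity_) return nullptr;  // pool exhausted
        return &pool_[chunkSize_ * next_++];     // carve a fresh chunk from the pool
    }

    void deallocate(void* chunk) {
        freeList_.push_back(chunk);          // note: the old bytes are NOT cleared
    }

private:
    std::vector<unsigned char> pool_;        // backing storage, never resized
    std::size_t chunkSize_;
    std::size_t next_;                       // index of the next never-used chunk
    std::size_t capacity_;
    std::vector<void*> freeList_;
};
```

With this allocator, freeing the dog's chunk and then allocating the cat returns the same address. The stale dog pointer and the new cat pointer therefore alias the same bytes, which is step three in the list above.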

Google's AI-Powered Vulnerability Detection

This discovery marks a significant milestone because the flaw was found entirely by Big Sleep rather than by a human researcher. It demonstrates how AI can be used to surface complex security issues that are difficult to find with traditional methods.

The specifics of how Big Sleep works aren't fully public, but it appears to be an agentic AI system built specifically for security research. According to Sandra Joyce, VP of Google Threat Intelligence, Google is actively developing AI solutions like Big Sleep to enhance the capabilities of security researchers.

Challenges in AI-Driven Security Research

Despite the promise of AI in security research, there are significant challenges. One major issue is the signal-to-noise ratio. When using AI to identify potential vulnerabilities, researchers often have to sift through numerous false positives to find genuine security issues.

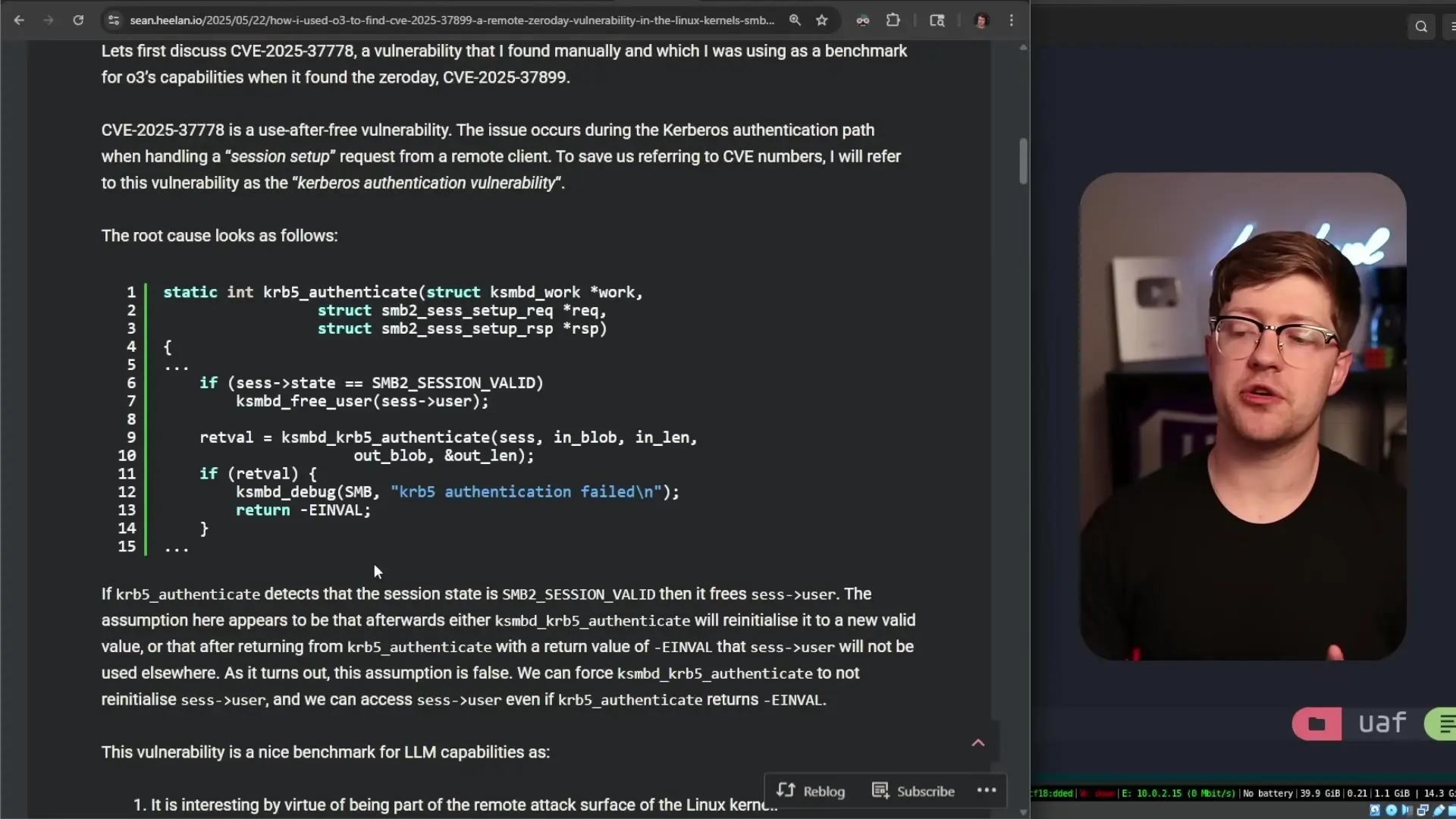

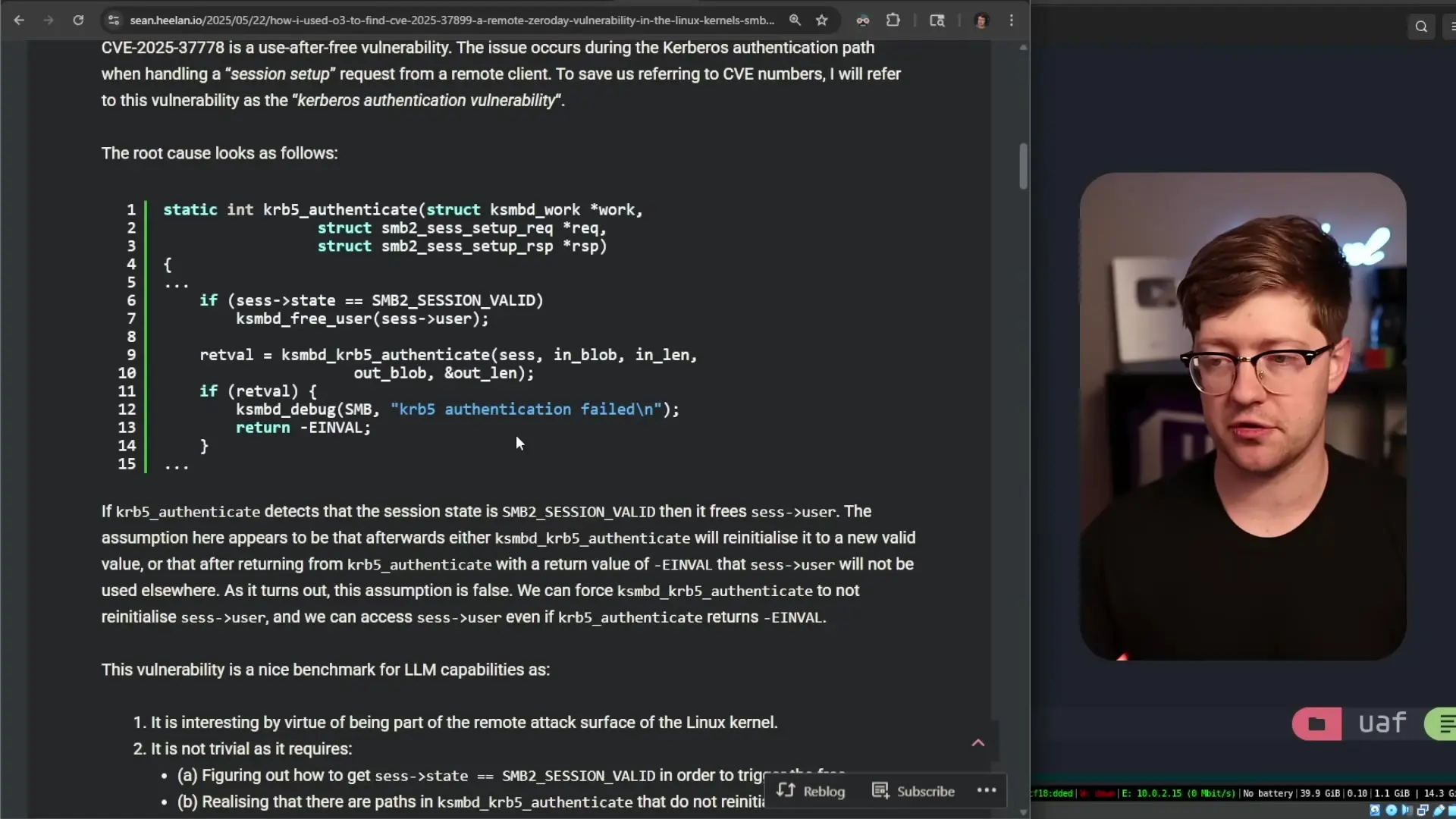

For example, in similar research conducted by security professionals using AI to find vulnerabilities in the Linux kernel, the signal-to-noise ratio was approximately 1 in 50. This means that for every 50 potential vulnerabilities identified by the AI, only one was a genuine security issue. The rest were essentially hallucinations or misinterpretations by the AI.
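Read as precision (true findings divided by all findings), the 1-in-50 figure translates directly into triage effort. The worked example below assumes, purely for illustration, that a team wants to confirm 10 real bugs at that rate:

```latex
\text{precision} = \frac{TP}{TP + FP} = \frac{1}{1 + 49} = 0.02
\qquad
\text{reports to review} \approx \frac{k}{\text{precision}} = \frac{10}{0.02} = 500 \quad (k = 10)
```

Roughly 490 of those 500 reports would be false positives that a human still has to rule out.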

Another challenge is the context window limitation. As AI models process larger amounts of code, their ability to meaningfully analyze that code deteriorates. This means researchers need to carefully segment codebases when using AI for vulnerability detection, rather than simply feeding entire codebases into the system.
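One simple way to segment a codebase is to pack source lines into chunks that stay under a fixed budget, never splitting a line. The sketch below is a hypothetical helper named `chunkLines`. It measures the budget in characters as a stand-in for model tokens, which is an assumption. A real pipeline would more likely count with the model's own tokenizer and split on function or file boundaries so that related code stays together.

```cpp
#include <cstddef>
#include <string>
#include <vector>

// Hypothetical helper: groups lines into chunks whose total size (characters
// plus one per newline) does not exceed `budget`, without splitting a line.
std::vector<std::vector<std::string>> chunkLines(const std::vector<std::string>& lines,
                                                 std::size_t budget) {
    std::vector<std::vector<std::string>> chunks;
    std::vector<std::string> current;
    std::size_t used = 0;
    for (const std::string& line : lines) {
        const std::size_t cost = line.size() + 1;  // +1 for the newline
        if (!current.empty() && used + cost > budget) {
            chunks.push_back(current);  // close the chunk before it overflows
            current.clear();
            used = 0;
        }
        current.push_back(line);  // an over-long single line still gets its own chunk
        used += cost;
    }
    if (!current.empty()) chunks.push_back(current);
    return chunks;
}
```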

Why AI Excels at Finding Use-After-Free Vulnerabilities

Use-after-free vulnerabilities can be hard to find with traditional methods like fuzzing because they only manifest under a specific sequence of memory allocations, frees, and accesses. A fuzzer paired with a memory-error detector such as AddressSanitizer will flag the bad access once it happens. The catch is that the fuzzer first has to generate an input that drives the program through that exact sequence, and it may rarely do so.
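To make "specific sequence" concrete, the toy model below is an object whose owner forgets to clear its pointer after releasing it. It exhaustively enumerates every sequence of operations and counts how many reach the use-after-free. The four operations are hypothetical, and uniform enumeration is a simplifying assumption. Coverage-guided fuzzers such as libFuzzer steer toward new code paths and are far more effective than blind enumeration.

```cpp
#include <cstddef>
#include <vector>

enum class Op { Create, Release, Use, Other };

// Toy object lifecycle: Use() on a released (but not cleared) object is a
// use-after-free; Use() on a never-created object is ignored (null check).
bool triggersUseAfterFree(const std::vector<Op>& ops) {
    enum class State { Empty, Live, Freed };
    State state = State::Empty;
    for (Op op : ops) {
        switch (op) {
            case Op::Create:  state = State::Live; break;
            case Op::Release: if (state == State::Live) state = State::Freed; break;
            case Op::Use:     if (state == State::Freed) return true; break;  // stale access
            case Op::Other:   break;
        }
    }
    return false;
}

// Number of distinct sequences of `length` operations (4 choices per step).
std::size_t sequenceCount(std::size_t length) {
    std::size_t total = 1;
    for (std::size_t i = 0; i < length; ++i) total *= 4;
    return total;
}

// Enumerates every sequence of `length` operations and counts the ones that
// reach the use-after-free.
std::size_t countTriggering(std::size_t length) {
    const std::size_t total = sequenceCount(length);
    std::size_t hits = 0;
    std::vector<Op> ops(length);
    for (std::size_t code = 0; code < total; ++code) {
        std::size_t rest = code;
        for (std::size_t i = 0; i < length; ++i) {  // decode `code` as base-4 digits
            ops[i] = static_cast<Op>(rest % 4);
            rest /= 4;
        }
        if (triggersUseAfterFree(ops)) ++hits;
    }
    return hits;
}
```

For sequences of length 3, only one of the 64 possibilities (create, release, use) reaches the bug. Real APIs have far more operations and far longer sequences, so the bug-triggering fraction is usually much smaller.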

AI systems, however, can analyze code paths directly and look for patterns that may lead to use-after-free conditions. Specifically, they can flag places where memory is allocated, freed, and then potentially accessed again.
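The allocate, free, access pattern itself is easy to state as a rule once a program trace is available. The sketch below uses a hypothetical `Event` trace format and a `findUseAfterFree` function. It is a conventional rule-based check, not a description of how Big Sleep works, since those details aren't public. The hard part in real code is producing such a trace in the first place, across aliasing pointers, callbacks, and threads.

```cpp
#include <cstddef>
#include <string>
#include <unordered_set>
#include <vector>

// One event in a simplified, hypothetical program trace.
struct Event {
    enum class Kind { Alloc, Free, Use };
    Kind kind;
    std::string object;  // name of the object or pointer involved
};

// Returns indices of Use events that touch an object after it was freed and
// before it was allocated again.
std::vector<std::size_t> findUseAfterFree(const std::vector<Event>& trace) {
    std::unordered_set<std::string> freed;
    std::vector<std::size_t> findings;
    for (std::size_t i = 0; i < trace.size(); ++i) {
        const Event& e = trace[i];
        switch (e.kind) {
            case Event::Kind::Alloc: freed.erase(e.object); break;   // live again
            case Event::Kind::Free:  freed.insert(e.object); break;  // now dangling
            case Event::Kind::Use:
                if (freed.count(e.object) != 0) findings.push_back(i);
                break;
        }
    }
    return findings;
}
```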

Implications for the Future of Security Research

The discovery of this Chrome vulnerability by an AI system points to a future where AI plays an increasingly important role in security research. As AI systems become more sophisticated, they may be able to identify increasingly complex vulnerabilities that human researchers might miss.

However, the current limitations—particularly the high rate of false positives and context window constraints—suggest that AI is still best used as a tool to augment human researchers rather than replace them. The most effective approach appears to be a collaboration between AI systems and human security experts, with AI identifying potential issues and humans verifying and exploring them further.

Improving Your Debugging Skills for Security

For developers and security professionals looking to better understand and prevent vulnerabilities like use-after-free, developing strong debugging skills and a deep understanding of low-level programming concepts is essential. Understanding how memory management works, particularly in languages like C and C++ that don't have automatic garbage collection, can help you write more secure code and identify potential vulnerabilities before they make it into production.

- Learn languages like C to understand memory management at a fundamental level

- Practice identifying common memory corruption patterns in code

- Understand how memory allocators work and how they can be exploited

- Develop skills in using debugging tools to trace memory usage

- Study existing CVEs to understand the patterns that lead to vulnerabilities
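As a small example of writing safer C++, the sketch below revisits the dog example with `std::unique_ptr` owning the object. Calling `reset()` both destroys the object and nulls the pointer, so a later access can be refused by a null check instead of silently touching reused memory. The `Dog` type and `tryUseDog` function are hypothetical. This only protects code that goes through the `unique_ptr`: a raw pointer copied out with `get()` can still dangle.

```cpp
#include <memory>

struct Dog {
    int id = 0;
    void bark() const {}  // placeholder behavior for the example
};

// Refuses to touch the Dog once it has been released.
bool tryUseDog(const std::unique_ptr<Dog>& dog) {
    if (!dog) return false;  // already released: the pointer is null, not dangling
    dog->bark();
    return true;
}
```

For catching the bugs that slip through, Clang and GCC both accept `-fsanitize=address`, which enables AddressSanitizer. It reports a heap-use-after-free at the faulting access, making it a useful tool when practicing the debugging skills listed above.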

Conclusion

The discovery of the Chrome Angle vulnerability by Google's Big Sleep AI represents an important milestone in the evolution of security research. While AI-driven security tools still face significant challenges, they show promise in identifying complex vulnerabilities that might otherwise go undetected.

As we move forward, the most effective approach to security research will likely involve collaboration between AI systems and human researchers, with each complementing the other's strengths and compensating for their weaknesses. For developers and security professionals, staying informed about these developments and continuing to build fundamental skills in debugging and low-level programming will be essential for creating and maintaining secure systems.

