On May 29th, DeepSeek officially announced the release of DeepSeek R1 V2, a significant upgrade to their flagship AI model. While initially described as a "minor upgrade" in Chinese announcements, the performance benchmarks reveal substantial improvements that position this open-source model as a serious competitor to proprietary giants like OpenAI's GPT-4 and Google's Gemini Pro.

Key Improvements in DeepSeek R1 V2

The new DeepSeek R1 V2 brings several notable enhancements that improve its capabilities as an AI assistant and development tool. Because the weights are openly released, developers can run a model that rivals commercial offerings while retaining the flexibility of open-source software.

- Enhanced front-end capabilities for better user interaction

- Significant reduction in hallucinations for more reliable outputs

- Full support for JSON output formatting

- Integrated function calling capabilities

- Approximately 20% overall performance increase from R1 V1
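The JSON-output and function-calling items are easiest to picture from the client side. The sketch below makes several assumptions:

- It assumes an OpenAI-compatible chat format. Tools are declared as a `tools` list of JSON-Schema function definitions, and the assistant message carries `tool_calls` whose `arguments` field is a JSON-encoded string.
- DeepSeek describes its API as OpenAI-compatible, but check its documentation for the exact fields R1 V2 supports.
- `get_weather`, `dispatch_tool_calls`, and `parse_json_reply` are hypothetical names.
- No network request is made; the assistant message is mocked.

```python
import json
from typing import Any, Callable


def get_weather(city: str) -> dict[str, Any]:
    """Hypothetical local tool; returns canned data instead of a real lookup."""
    return {"city": city, "forecast": "sunny"}


# Tool definition in the OpenAI-compatible "tools" format (an assumption here).
# A client would send this list with its chat request; it is not used below.
TOOLS = [
    {
        "type": "function",
        "function": {
            "name": "get_weather",
            "description": "Look up the forecast for a city.",
            "parameters": {
                "type": "object",
                "properties": {"city": {"type": "string"}},
                "required": ["city"],
            },
        },
    }
]

# Maps tool names the model may emit to local Python callables.
REGISTRY: dict[str, Callable[..., Any]] = {"get_weather": get_weather}


def dispatch_tool_calls(message: dict[str, Any]) -> list[dict[str, Any]]:
    """Run each tool call in an assistant message and build 'tool' role replies."""
    replies: list[dict[str, Any]] = []
    for call in message.get("tool_calls") or []:
        fn = REGISTRY[call["function"]["name"]]
        # Arguments arrive as a JSON-encoded string, not as a dict.
        args = json.loads(call["function"]["arguments"])
        replies.append(
            {
                "role": "tool",
                "tool_call_id": call["id"],
                "content": json.dumps(fn(**args)),
            }
        )
    return replies


def parse_json_reply(text: str, required: set[str]) -> dict[str, Any]:
    """Parse a JSON-mode reply and check that the expected top-level keys exist."""
    data = json.loads(text)
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object at the top level")
    missing = required - set(data)
    if missing:
        raise ValueError(f"missing keys: {sorted(missing)}")
    return data


if __name__ == "__main__":
    # Mocked assistant message standing in for a real API response.
    mocked = {
        "role": "assistant",
        "content": None,
        "tool_calls": [
            {
                "id": "call_1",
                "type": "function",
                "function": {"name": "get_weather", "arguments": '{"city": "Hangzhou"}'},
            }
        ],
    }
    print(dispatch_tool_calls(mocked))
    print(parse_json_reply('{"answer": 42, "confidence": 0.9}', {"answer"}))
```

In a real exchange, the `tool` replies would be appended to the conversation and sent back so the model can compose its final answer. Validating JSON-mode output with a check like `parse_json_reply` remains worthwhile even when the model is reliable about formatting.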

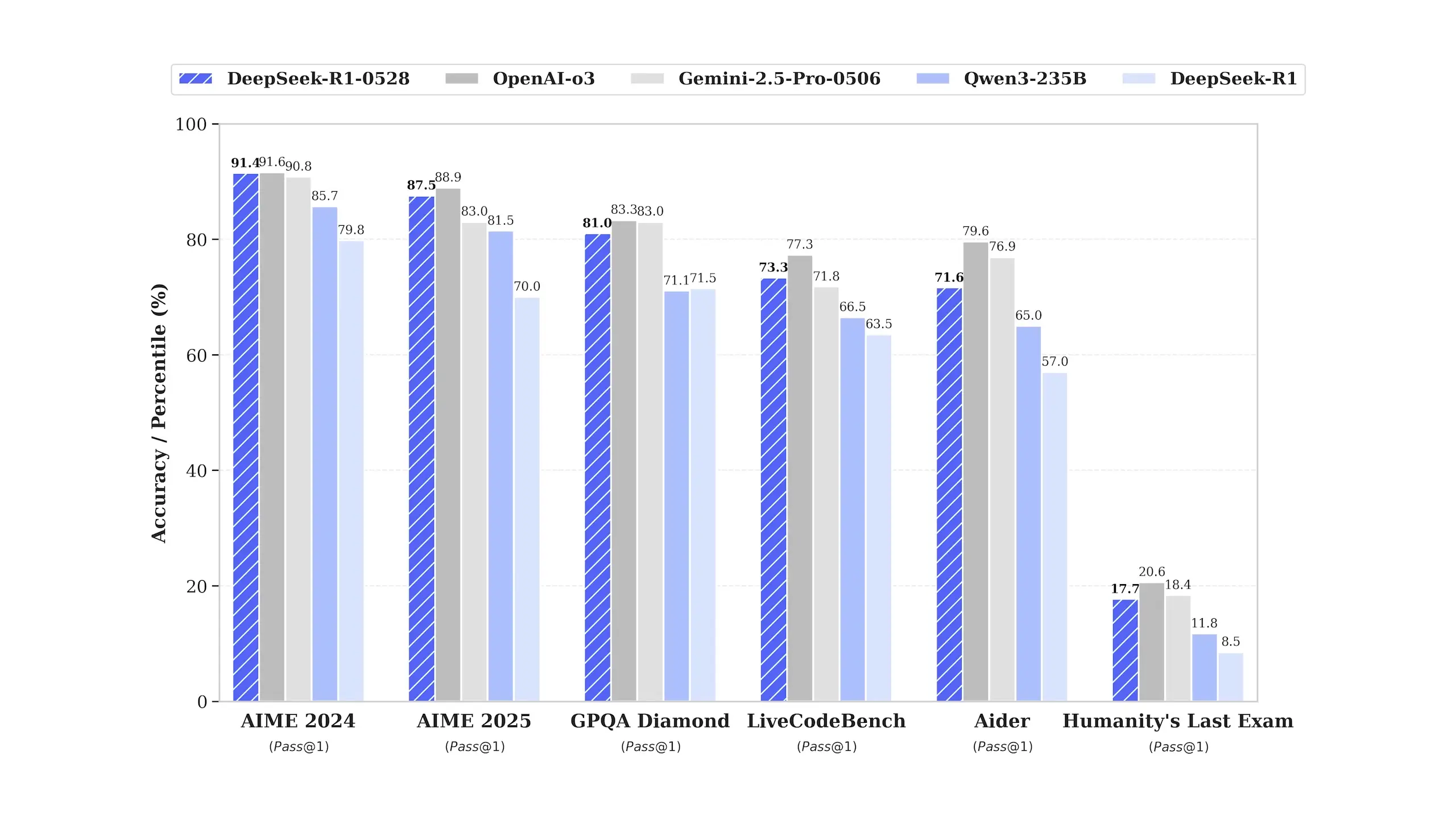

Benchmark Performance Against Industry Leaders

According to official benchmarks, DeepSeek R1 V2 (released as DeepSeek-R1-0528) now outperforms Google's newest Gemini 2.5 Pro (0506) in half of the test scenarios and trails only slightly behind OpenAI's GPT-4. On the Aider coding leaderboard, its performance is comparable to Claude 4, both with and without the "thinking" capability enabled.

Third-party evaluations support these results. On EQ-Bench's creative-writing evaluation, DeepSeek R1 V2 now ranks third, behind only GPT-4 and GPT-4 Mini. Because the weights are open, developers can put this level of performance to work in their own applications.

Output Style and Performance Characteristics

Initial testing reveals that DeepSeek R1 V2 has developed a distinctive output style that combines elements from both Gemini and GPT-4. The model shows a preference for structured output formats like nested bullet points (similar to Gemini) while using fewer paragraphs (resembling GPT-4).

- Favors structured responses with nested bullet points

- Uses fewer paragraphs compared to previous versions

- Performs well on basic logical questions despite occasional reasoning issues

- Generation speed remains relatively slow on the official platform

- Context window size appears unchanged from the previous version

Distilled Model for Improved Reasoning

In addition to the main model release, DeepSeek has distilled the chain-of-thought reasoning of R1 V2 into a smaller model. This distilled model is based on Qwen3 8B. It achieves state-of-the-art performance among open-source models on the AIME 2024 benchmark, and its small size makes it particularly valuable for resource-constrained environments.

- Surpasses Qwen3 8B by 10% on AIME 2024

- Matches the performance of Qwen3-235B in thinking mode

- Currently ranks as the second-best open-source model

- Shows some limitations on coding and scientific-knowledge tasks

- Delivers a significant performance boost for a model with only 8B parameters
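A back-of-envelope estimate shows why a small distilled model suits modest hardware. The memory needed just to hold the weights is roughly parameters × bits per parameter ÷ 8 bytes.

The distilled checkpoint is published on Hugging Face as DeepSeek-R1-0528-Qwen3-8B, so the sketch assumes a round 8 billion parameters. The estimate deliberately ignores the KV cache, activations, and runtime overhead, so real requirements are higher. The 8-bit and 4-bit rows are illustrative quantization levels, not formats DeepSeek is claimed to ship. `weight_memory_gb` is a hypothetical helper name.

```python
def weight_memory_gb(n_params: float, bits_per_param: float) -> float:
    """Approximate memory in decimal GB (1e9 bytes) for the weights alone."""
    bytes_total = n_params * bits_per_param / 8  # 8 bits per byte
    return bytes_total / 1e9


if __name__ == "__main__":
    n_params = 8e9  # assumption: round figure for an 8B-parameter model
    for label, bits in [("16-bit (BF16/FP16)", 16), ("8-bit", 8), ("4-bit", 4)]:
        print(f"{label:>18}: ~{weight_memory_gb(n_params, bits):.1f} GB of weights")
```

The same formula applied to the full R1 V2, a mixture-of-experts model with about 671B total parameters, lands in the hundreds of gigabytes. That gap is why the distilled model is the practical option for local use on a single machine.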

Availability and Implementation

Both the full DeepSeek R1 V2 model and the distilled model are now available for download from Hugging Face. Users can also try R1 V2 directly on DeepSeek's official website for testing and evaluation. Because the weights are open, both models can be deployed in local environments, with the distilled model being the more practical choice on modest hardware.

Documentation and resources for both models are available through DeepSeek's official channels. The open-source nature of these models makes them particularly valuable for research, custom applications, and scenarios where data privacy is paramount.

Implications for the AI Landscape

The release of DeepSeek R1 V2 represents a significant milestone in the open-source AI community. By achieving performance levels comparable to proprietary models from OpenAI, Google, and Anthropic, DeepSeek is helping democratize access to cutting-edge AI capabilities. This development is particularly important for researchers, smaller companies, and developers who may not have the resources to access or fine-tune commercial models.

As open-source models like DeepSeek R1 V2 continue to narrow the performance gap with commercial alternatives, we can expect to see greater innovation and more diverse applications of AI technology across industries. The availability of high-performance, open-source models also provides valuable alternatives for deployments where data privacy, customization, and control are critical requirements.

Conclusion

DeepSeek R1 V2 represents a major advancement in open-source AI, offering performance that rivals the best commercial models while maintaining the flexibility and accessibility of open-source software. With significant improvements in hallucination reduction, structured output capabilities, and overall performance, this model provides developers and organizations with a powerful new tool for AI applications.

Whether you're using it for research or building production applications, DeepSeek R1 V2 offers compelling capabilities that can enhance a wide range of AI-powered solutions. As the model matures and the community builds additional tools and resources around it, we can expect even more innovative applications to emerge.

