Artificial intelligence systems today can answer complex questions, generate artwork, drive vehicles, and help diagnose diseases. But how did we reach this point? The story of machine learning begins decades ago, with pioneering computer scientists who first considered whether machines could be taught to learn.

The Pioneers: Claude Shannon and Alan Turing

In 1950, Claude Shannon, one of the pioneers of computer science, created a robotic mouse named Theseus that could navigate through a maze. This early experiment in machine learning used telephone relay switches to remember which path led to the goal. Once Theseus had solved the maze, it could head straight for the goal from any location it had already visited.

That same year, Alan Turing published his famous paper "Computing Machinery and Intelligence," introducing what would later be known as the Turing Test. Rather than asking whether machines could think, Turing proposed a more precise question: could a computer converse so convincingly that a human judge could not tell it apart from a person?

Turing speculated that rather than encoding an adult human's mind into a computer program, it might be easier to program a computer to simulate a child's mind and then teach it through experiences, rewards, and punishments. In doing so he essentially described the fundamental concept behind modern reinforcement learning.

Early Challenges in Computer Learning

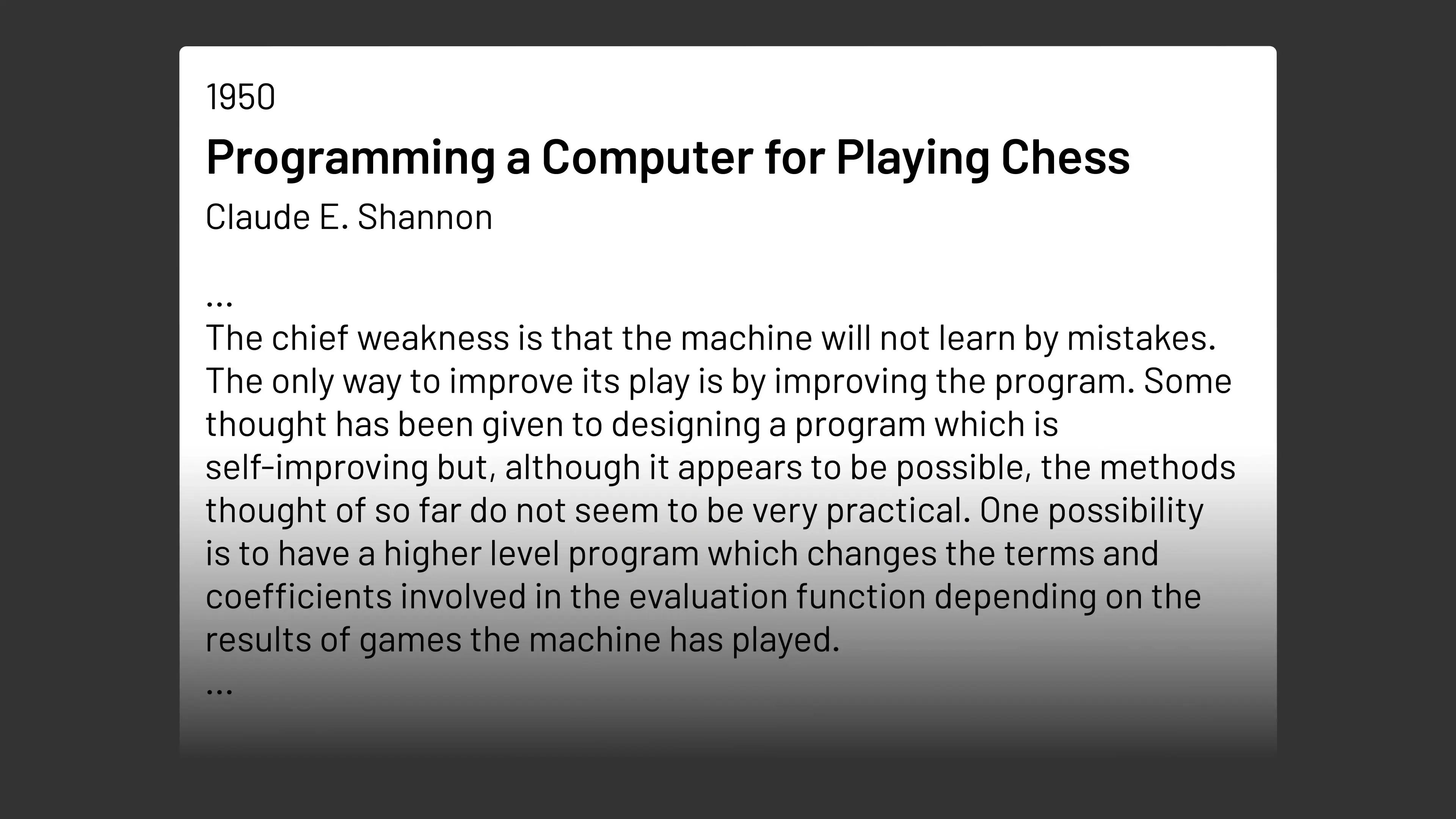

Shannon also explored the possibility of machines that could play chess. He recognized that calculating all possible moves through brute force would be impractical. Instead, he proposed using heuristics based on chess knowledge—valuing pieces differently, recognizing strong configurations, and prioritizing mobility—to evaluate board positions without exhaustive search.
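A heuristic evaluation of this kind can be sketched in a few lines of Python. This is only an illustration of the idea, not Shannon's actual formula: the piece values are the conventional textbook ones, the mobility weight of 0.1 is an assumed number, and the function name `evaluate` and its inputs are hypothetical. Recognizing strong configurations is left out to keep the sketch short.

```python
# Illustrative weights only (assumptions, not taken from the article).
PIECE_VALUES = {"P": 1, "N": 3, "B": 3, "R": 5, "Q": 9}  # kings are not scored
MOBILITY_WEIGHT = 0.1  # assumed bonus per available legal move


def evaluate(white_pieces, black_pieces, white_moves, black_moves):
    """Score a position from White's point of view without searching ahead.

    white_pieces / black_pieces: piece letters still on the board, e.g. "QRPP"
    white_moves / black_moves: number of legal moves available to each side
    """
    # Material: value pieces differently and compare the two sides.
    material = (sum(PIECE_VALUES[p] for p in white_pieces)
                - sum(PIECE_VALUES[p] for p in black_pieces))
    # Mobility: reward the side that has more moves to choose from.
    mobility = MOBILITY_WEIGHT * (white_moves - black_moves)
    return material + mobility


# White has a queen for a rook and more freedom of movement.
score = evaluate("QRPP", "RRPP", white_moves=30, black_moves=20)
print(score)
```

A positive score favors White and a negative score favors Black. The weights are fixed by hand, so the function never changes no matter how many games are played.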

However, Shannon acknowledged a significant limitation: his chess machine couldn't learn from experience. Unlike human players who improve with practice, his algorithm would remain static. Shannon recognized that a truly intelligent system would need to learn from game results and update its evaluation function accordingly. That idea is central to machine learning, but it would take decades to fully realize.

The Birth of Neural Networks

The mathematical foundation for modern machine learning can be traced back to 1943, when neuroscientist Warren McCulloch and logician Walter Pitts developed a mathematical model for neural activity. Their work aimed to understand how neurons in the human brain function rather than to create learning machines.

In their model, each neuron's activity was binary—either firing (1) or not firing (0). Neurons connected to each other with specific thresholds determining when they would fire. For example, if a neuron had three inputs and a threshold of two, it would only fire if at least two input neurons fired. Their model also included inhibitory inputs that could prevent neurons from firing regardless of other inputs.
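The firing rule described above can be sketched in Python as follows. The function name `mp_neuron` and its parameters are hypothetical, chosen for this illustration, and the sketch assumes that any single active inhibitory input blocks firing.

```python
def mp_neuron(excitatory, inhibitory, threshold):
    """Return 1 if the neuron fires and 0 otherwise.

    excitatory: list of 0/1 values from excitatory input neurons
    inhibitory: list of 0/1 values from inhibitory input neurons
    threshold:  minimum number of active excitatory inputs needed to fire
    """
    # An active inhibitory input prevents firing regardless of other inputs.
    if any(inhibitory):
        return 0
    # Otherwise fire only if enough excitatory inputs are active.
    return 1 if sum(excitatory) >= threshold else 0


# Three inputs with a threshold of two, as in the example above.
print(mp_neuron([1, 1, 0], [], threshold=2))   # two inputs fire: the neuron fires
print(mp_neuron([1, 0, 0], [], threshold=2))   # one input fires: it stays silent
print(mp_neuron([1, 1, 1], [1], threshold=2))  # inhibited: it stays silent
```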

Neural Networks as Logical Functions

The McCulloch-Pitts model demonstrated how networks of neurons could represent logical functions. For instance:

- AND function: Two neurons connected to an output neuron with a threshold of two

- OR function: Two neurons connected to an output neuron with a threshold of one

- NOT function: An inhibitory input with a threshold of zero

By chaining together multiple layers of neurons, these networks could compute increasingly complex logical functions. However, these early neural networks couldn't learn: every threshold and connection was fixed in advance, so a network simply calculated a predetermined function. Although learning was not its focus, the McCulloch-Pitts model would later become a crucial foundation for developing learning algorithms.
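The gates listed above, and the idea of chaining them into layers, can be sketched as follows. The helper `mp_neuron` and the gate function names are hypothetical, defined here so the block runs on its own, and XOR is used only as one example of a function that needs more than a single neuron.

```python
def mp_neuron(excitatory, inhibitory=(), threshold=1):
    """Fire (1) if no inhibitory input is active and enough excitatory inputs are."""
    if any(inhibitory):
        return 0
    return int(sum(excitatory) >= threshold)


def AND(a, b):
    # Two inputs, threshold of two: both must fire.
    return mp_neuron([a, b], threshold=2)


def OR(a, b):
    # Two inputs, threshold of one: either may fire.
    return mp_neuron([a, b], threshold=1)


def NOT(a):
    # Threshold of zero: fires by default unless the inhibitory input is active.
    return mp_neuron([], inhibitory=[a], threshold=0)


def XOR(a, b):
    # Chained layers: (a OR b) AND NOT (a AND b).
    return AND(OR(a, b), NOT(AND(a, b)))


# Print the truth table for the chained network.
for a in (0, 1):
    for b in (0, 1):
        print(a, b, XOR(a, b))
```

Nothing in this network is learned. Changing what it computes means rewiring it or editing the thresholds by hand.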

From Mathematical Models to Machine Learning Fundamentals

The journey from these early concepts to modern machine learning systems spans decades of research and innovation. Both Turing and Shannon recognized in 1950 that algorithms capable of learning would be powerful tools for building intelligent machines, but neither knew exactly how to achieve this goal.

The mathematical foundations laid by McCulloch and Pitts, combined with the visionary ideas of Turing and Shannon, set the stage for the development of modern machine learning techniques. These pioneers helped establish the fundamental concepts that would eventually lead to today's sophisticated AI systems capable of learning from experience and adapting to new situations.

Core Components of Machine Learning Fundamentals

Understanding machine learning fundamentals requires familiarity with several key concepts that have evolved from these early ideas:

- Mathematical foundations: Probability, statistics, linear algebra, and calculus that underpin machine learning algorithms

- Neural network architecture: How layers of artificial neurons process information and learn patterns

- Learning paradigms: Supervised learning (learning from labeled examples), unsupervised learning (finding patterns without labels), and reinforcement learning (learning through trial and error)

- Evaluation metrics: Methods for measuring how well algorithms perform and improve over time

- Bias and variance: Understanding the tradeoff between models that are too simple versus too complex

The Ongoing Evolution of Machine Learning

The field of machine learning continues to evolve at a rapid pace. From Shannon's maze-solving mouse to today's sophisticated deep learning systems, the journey has been marked by theoretical breakthroughs, technological innovations, and increasingly powerful applications.

Modern machine learning builds upon these historical foundations while incorporating new techniques and approaches. As AI systems grow more sophisticated, understanding these fundamental concepts becomes increasingly important for anyone working in technology, data science, or related fields.

The story of machine learning is not just about algorithms and mathematics—it's about the human quest to understand intelligence itself and to create systems that can learn, adapt, and solve problems in ways that once seemed possible only for humans. As we build upon the work of pioneers like Shannon, Turing, McCulloch, and Pitts, we continue to push the boundaries of what machines can learn and achieve.
