The history of artificial intelligence has been shaped by two fundamental approaches that emerged in the 1950s. As computer scientists began developing intelligent machines, they created distinct pathways that would eventually converge to form the foundation of modern AI. Understanding the evolution of perceptrons—the first trainable neural networks—provides crucial insight into how machines gained the ability to learn.

Early Approaches to Artificial Intelligence

The first approach, now known as symbolic artificial intelligence, was inspired by human reasoning. It focused on encoding knowledge and logic directly into computers. An early example was the Logic Theorist, written in 1956 by Allen Newell and Herbert Simon together with programmer Cliff Shaw. This program proved theorems in symbolic logic by applying a set of programmed rules, demonstrating that computers could perform logical reasoning tasks.

However, symbolic AI faced significant challenges when dealing with human perception. While computers could follow logical rules, they struggled with pattern recognition tasks that humans perform effortlessly—like distinguishing shapes or letters. This limitation prompted researchers to explore alternative approaches that could better mimic the human brain's ability to learn from experience.

The Rise of Connectionism and Neural Networks

The second approach, called connectionism, emerged as a promising alternative. Building on the artificial neurons described by Warren McCulloch and Walter Pitts in 1943, connectionists proposed that if computers could be structured like the human brain, with networks of interconnected neurons, they might replicate human intelligence more effectively.

Central to this effort was the perceptron, a computerized model based on the brain that could learn from experience. First developed by Frank Rosenblatt in 1957, the perceptron represented a significant breakthrough in machine learning, demonstrating that computers could be designed to improve their performance through training rather than explicit programming.

The Architecture of Early Perceptrons

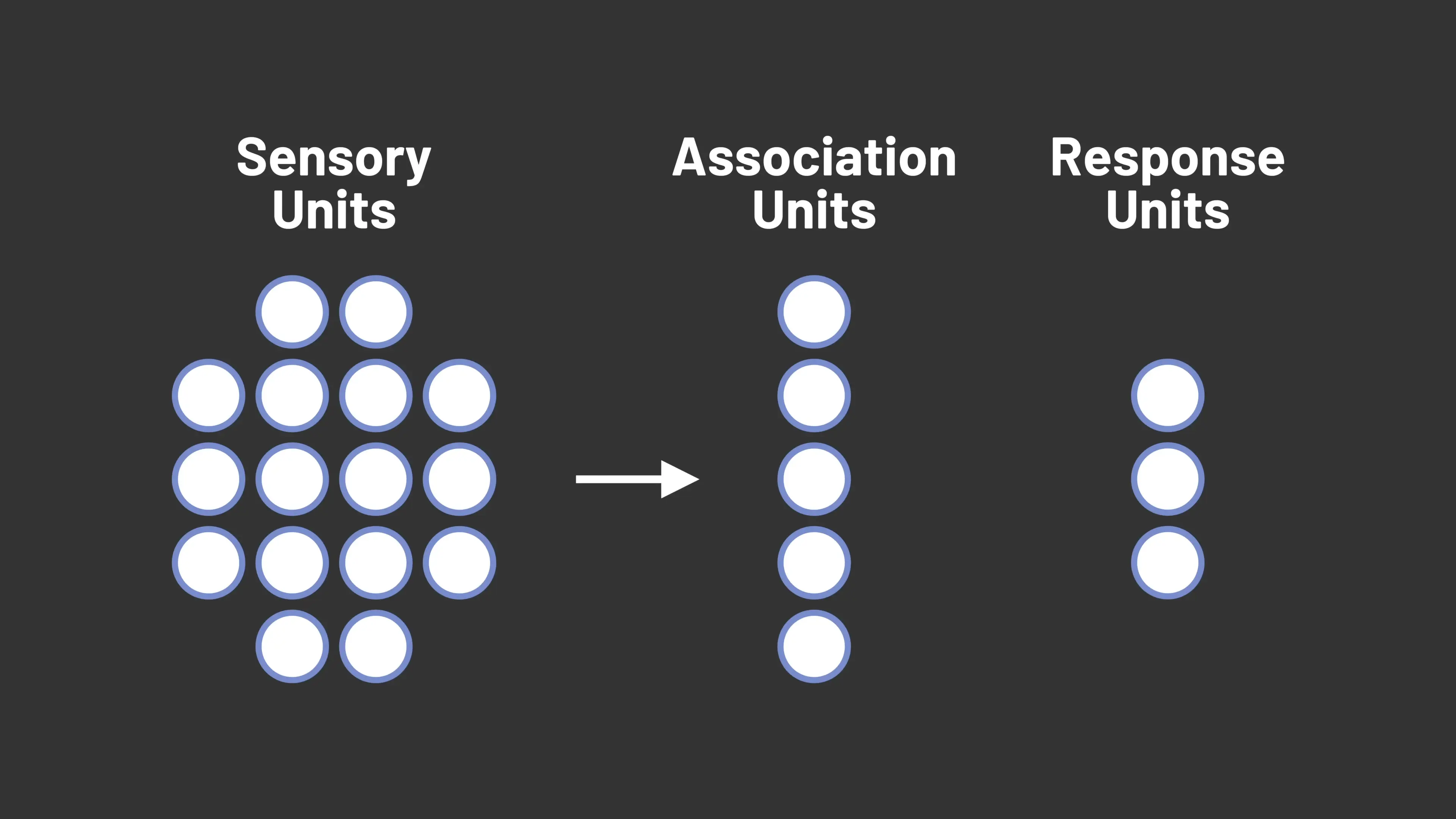

Rosenblatt's early perceptron model consisted of three distinct layers of units, where each unit represented a digital neuron storing a numerical value corresponding to its activity level:

- Sensory Units: Inspired by the retina of the eye, these units formed the input layer and responded to visual patterns by becoming activated when exposed to specific stimuli.

- Association Units: This intermediate layer received input from random sets of sensory units. Each association unit fired (became active) if the total input from its excitatory connections minus the total input from its inhibitory connections exceeded a threshold.

- Response Units: The output layer represented the final classification. These units took input from the association units and fired if the weighted sum of those inputs exceeded a threshold.

The key innovation was trainability. The connections from sensory to association units were random and stayed fixed, but the weights from association to response units could be adjusted through training. This learning capability differentiated perceptrons from previous AI approaches and laid the groundwork for modern neural networks.
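To make the three layers concrete, here is a minimal Python sketch of a single forward pass through a Rosenblatt-style perceptron. It is a simplified illustration, not a reconstruction of Rosenblatt's hardware: the layer sizes, the number of sensory inputs per association unit, the +1/-1 connection signs, the zero thresholds, and the helper names (`make_perceptron`, `association_layer`, `response_unit`) are all hypothetical choices made for the example.

```python
import random


def make_perceptron(n_sensory, n_assoc, fan_in, seed=0):
    """Wire up a toy Rosenblatt-style perceptron with one response unit (hypothetical sizes)."""
    rng = random.Random(seed)
    assoc_wiring = []
    for _ in range(n_assoc):
        # Each association unit listens to a random subset of sensory units;
        # each connection is randomly excitatory (+1) or inhibitory (-1).
        sources = rng.sample(range(n_sensory), fan_in)
        assoc_wiring.append([(src, rng.choice((1, -1))) for src in sources])
    # Only these association-to-response weights would be changed by training.
    response_weights = [0.0] * n_assoc
    return assoc_wiring, response_weights


def association_layer(sensory, assoc_wiring, threshold=0):
    """An association unit fires (1) if excitatory minus inhibitory input exceeds the threshold."""
    activity = []
    for wires in assoc_wiring:
        net = sum(sign * sensory[src] for src, sign in wires)
        activity.append(1 if net > threshold else 0)
    return activity


def response_unit(assoc_activity, response_weights, threshold=0.0):
    """The response unit fires (1) if the weighted sum of association activity exceeds the threshold."""
    total = sum(w * a for w, a in zip(response_weights, assoc_activity))
    return 1 if total > threshold else 0


# Usage: a hypothetical 4x4 "retina" flattened row by row into 16 binary sensory units.
wiring, weights = make_perceptron(n_sensory=16, n_assoc=10, fan_in=4, seed=1)
retina = [1, 1, 0, 0] * 4  # left half of the retina lit
activity = association_layer(retina, wiring)
print(activity, response_unit(activity, weights))
```

With every response weight still at zero, the response unit never fires: the fixed random wiring only re-encodes the image, and any classification ability has to come from adjusting `response_weights`.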

How Perceptrons Learn: The Training Process

The learning process in perceptrons represented a fundamental shift in how computers could be programmed. Rather than explicitly coding rules, perceptrons could adjust their internal parameters (weights) based on experience with training data.

Rosenblatt experimented with multiple learning algorithms, but the core principle remained consistent: the perceptron would learn by adjusting the weights between its association and response layers based on whether its classifications were correct or incorrect.

- When the perceptron classified an input correctly, no changes were made to the weights.

- When it responded to a pattern it should have rejected (a false positive), the weights of the connections from active association units were decreased by a fixed amount called the learning rate.

- When it failed to respond to a pattern it should have recognized (a false negative), the weights of those active connections were increased by the learning rate.

Through this process of trial and error with hundreds of training examples, perceptrons gradually improved their ability to recognize patterns. This approach to machine learning—adjusting parameters based on feedback—remains fundamental to modern neural networks, including today's sophisticated deep learning systems.
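The three update rules can be sketched in a few lines of Python. This sketch assumes the association-layer activity is already available as a binary vector and that the response unit fires when its weighted sum exceeds zero; the function names and the toy dataset (where the correct answer is simply "is association unit 0 active?") are hypothetical.

```python
def predict(weights, active, threshold=0.0):
    """Response unit: fire (1) if the weighted sum of active association units exceeds the threshold."""
    return 1 if sum(w * a for w, a in zip(weights, active)) > threshold else 0


def train_rosenblatt(samples, n_assoc, learning_rate=1.0, max_epochs=20):
    weights = [0.0] * n_assoc
    for _ in range(max_epochs):
        errors = 0
        for active, target in samples:
            output = predict(weights, active)
            if output == target:
                continue  # correct classification: leave the weights alone
            errors += 1
            # False positive (fired when it should not): subtract the learning rate.
            # False negative (stayed silent when it should fire): add it.
            step = -learning_rate if output == 1 else learning_rate
            for i, a in enumerate(active):
                if a:  # only connections from active association units change
                    weights[i] += step
        if errors == 0:  # a full pass with no mistakes: stop training
            break
    return weights


# Hypothetical toy task: respond only when association unit 0 is active.
SAMPLES = [
    ([1, 0, 1, 0], 1),
    ([1, 1, 0, 0], 1),
    ([0, 1, 1, 0], 0),
    ([0, 0, 1, 1], 0),
    ([1, 0, 0, 1], 1),
    ([0, 1, 0, 1], 0),
]
weights = train_rosenblatt(SAMPLES, n_assoc=4)
print(weights)  # → [2.0, 0.0, 0.0, 0.0]
```

Because every change is the same fixed step, the weights stay whole multiples of the learning rate. On this small dataset the loop makes one mistake in each of the first two passes and then classifies every example correctly, leaving all the weight on the one association unit that actually predicts the answer.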

The Modern Perceptron Model

As research progressed, the perceptron model evolved into what we now consider the modern perceptron. The essential learning component remained in the weights between layers, but several refinements were introduced:

- Real-valued inputs: Instead of binary inputs, modern perceptrons accept any real number as input, allowing for more nuanced representations of data.

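- Proportional weight adjustments: Rather than changing every active weight by the same fixed step, modern perceptrons scale each weight change by the corresponding input value (change = learning rate × error × input, where the error is the target output minus the actual output), so inputs with larger values receive larger adjustments.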

- Bias term: Modern perceptrons include a bias value that effectively allows the model to learn the appropriate threshold, rather than using a fixed threshold.
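Put together, the refinements give the familiar perceptron learning rule: each weight changes by `learning_rate * (target - output) * input`, and the bias changes by `learning_rate * (target - output)`. The Python sketch below is one minimal way to write it; the step activation, the learning rate of 0.1, the epoch cap, the function names, and the toy dataset (points labeled 1 when x1 + x2 > 0) are assumptions for illustration.

```python
def predict(weights, bias, x):
    """Step activation applied to the weighted sum of real-valued inputs plus the bias."""
    return 1 if sum(w * xi for w, xi in zip(weights, x)) + bias > 0 else 0


def train_perceptron(X, y, learning_rate=0.1, max_epochs=100):
    weights = [0.0] * len(X[0])
    bias = 0.0
    for _ in range(max_epochs):
        mistakes = 0
        for x, target in zip(X, y):
            error = target - predict(weights, bias, x)  # -1, 0 or +1
            if error != 0:
                mistakes += 1
                # Each weight moves in proportion to its own input value...
                weights = [w + learning_rate * error * xi for w, xi in zip(weights, x)]
                # ...and the bias acts like a weight on a constant input of 1,
                # so the firing threshold is learned instead of fixed.
                bias += learning_rate * error
        if mistakes == 0:
            break
    return weights, bias


# Hypothetical real-valued data: label 1 when x1 + x2 > 0.
X = [(2.0, 1.0), (1.5, 2.0), (-1.0, -0.5), (-2.0, 1.0), (0.5, 1.5), (-0.5, -2.0)]
y = [1, 1, 0, 0, 1, 0]
weights, bias = train_perceptron(X, y)
print(weights, bias, [predict(weights, bias, x) for x in X])
```

Because this toy data is linearly separable, the loop is guaranteed to reach a pass with no mistakes. The same code would keep cycling on data that no straight line can split.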

These refinements made perceptrons more flexible and powerful, but they still had fundamental limitations. The most significant constraint was analyzed in 1969 by Marvin Minsky and Seymour Papert in their influential book "Perceptrons," which proved that single-layer perceptrons can only learn to classify linearly separable patterns: classes that a single straight line (or, with more inputs, a flat hyperplane) can divide.
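The classic example is XOR, which outputs 1 when exactly one of its two inputs is 1. A single threshold unit that fires when w1·x1 + w2·x2 + b > 0 would need b ≤ 0, w1 + b > 0, w2 + b > 0, and w1 + w2 + b ≤ 0. Adding the middle two conditions gives w1 + w2 + 2b > 0, so w1 + w2 + b > -b ≥ 0, which contradicts the last one. The Python sketch below illustrates the same point by brute force; the weight grid and function names are hypothetical, and a grid search is a demonstration, not a proof.

```python
from itertools import product

INPUTS = [(0, 0), (0, 1), (1, 0), (1, 1)]
AND = [0, 0, 0, 1]
XOR = [0, 1, 1, 0]


def fires(w1, w2, b, x):
    """A single threshold unit: fire if w1*x1 + w2*x2 + b > 0."""
    return 1 if w1 * x[0] + w2 * x[1] + b > 0 else 0


def find_single_unit(targets, grid):
    """Return the first (w1, w2, b) on the grid that reproduces every target, or None."""
    for w1, w2, b in product(grid, repeat=3):
        if all(fires(w1, w2, b, x) == t for x, t in zip(INPUTS, targets)):
            return w1, w2, b
    return None


grid = [k / 2 for k in range(-4, 5)]  # -2.0, -1.5, ..., 2.0
print("AND:", find_single_unit(AND, grid))  # some valid (w1, w2, b)
print("XOR:", find_single_unit(XOR, grid))  # None
```

AND is linearly separable, so a suitable unit turns up on even this coarse grid (for example w1 = w2 = 1, b = -1.5). No choice of weights, on any grid, reproduces XOR.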

The Difference Between Perceptrons and Neural Networks

Understanding the difference between perceptrons and neural networks is crucial for appreciating the evolution of machine learning. While a perceptron is a feed-forward neural network, it represents just one specific type of neural network architecture. The key distinctions include:

- Layer structure: Single-layer perceptrons have only input and output layers, while multilayer perceptrons (MLPs) include one or more hidden layers.

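- Learning capability: Single-layer perceptrons can only learn linearly separable patterns, while multilayer perceptrons with enough hidden units can approximate any continuous function on a bounded input range to arbitrary accuracy (the universal approximation theorem).

- Activation functions: Modern neural networks use activation functions such as the sigmoid, tanh, and ReLU, rather than the simple step (threshold) function used in early perceptrons.

- Training algorithms: While perceptrons use a simple error-driven weight update rule, modern neural networks are typically trained with backpropagation, which computes how much each weight contributed to the error, combined with gradient descent.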

The limitations of single-layer perceptrons contributed to a sharp decline in neural network research during the 1970s, part of the broader slowdown often called the first "AI winter." However, the popularization of backpropagation for training multilayer perceptrons in the mid-1980s revitalized the field, eventually leading to today's deep learning revolution.

Applications of Multilayer Perceptron Neural Networks

Modern multilayer perceptron neural networks have found applications across numerous domains. Their ability to learn complex patterns and relationships from data has made them valuable tools for:

- Pattern recognition and classification tasks

- Time series prediction and financial modeling

- Medical diagnosis and healthcare applications

- Natural language processing and text classification

- Control systems and robotics

- Image and speech recognition (though convolutional neural networks have largely superseded MLPs for these tasks)

While more specialized neural network architectures like convolutional neural networks (CNNs) and recurrent neural networks (RNNs) have been developed for specific tasks, the basic principles of learning through weight adjustment pioneered by perceptrons remain fundamental to all neural network approaches.

The Legacy of Perceptrons in Modern AI

The perceptron represents one of the most important milestones in the history of artificial intelligence. By demonstrating that machines could learn from experience rather than just follow explicit programming, perceptrons fundamentally changed how we approach AI development.

Today's sophisticated deep learning systems—with their multiple layers, complex architectures, and advanced training algorithms—can trace their conceptual lineage directly back to Rosenblatt's early perceptron models. The core idea that networks of artificial neurons can learn by adjusting connection weights based on feedback remains the foundation of modern machine learning.

As we continue to develop increasingly powerful AI systems, the humble perceptron serves as a reminder of how fundamental principles, once discovered, can evolve into transformative technologies that reshape our world. The journey from simple perceptrons to today's neural networks illustrates both the remarkable progress of AI research and the enduring value of its foundational concepts.
