Google recently announced Gemma 3N, an open large language model designed to run locally. This new addition to the Gemma family combines two models in one package while significantly reducing memory requirements, which makes capable AI accessible on more devices than before.

What Makes Gemma 3N Special: Two Models in One

Unlike most language models, which ship as a single fixed-size network, Gemma 3N combines a 5-billion-parameter model and an 8-billion-parameter model in a single package. Google has applied optimization techniques that shrink the memory footprint of these models to roughly that of 2-billion and 4-billion parameter models, respectively, reportedly without sacrificing performance.
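To get a rough sense of what those parameter counts mean for memory, here is a back-of-the-envelope sketch. It assumes weights stored at 16-bit precision (2 bytes per parameter) and counts only the weights. Both are illustrative assumptions rather than figures from Google, and real footprints depend on quantization and runtime overhead.

```python
# Back-of-the-envelope weight memory: parameters x bytes per parameter.
# Assumption: 16-bit weights (2 bytes each); activations and caches are ignored.
BYTES_PER_PARAM = 2


def weight_memory_gb(num_params: float, bytes_per_param: int = BYTES_PER_PARAM) -> float:
    """Return approximate weight storage in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bytes_per_param / 1e9


# Raw parameter counts vs. the "equivalent" footprints described above.
configs = {
    "smaller": {"raw_params": 5e9, "equivalent_params": 2e9},
    "larger": {"raw_params": 8e9, "equivalent_params": 4e9},
}

for name, cfg in configs.items():
    raw = weight_memory_gb(cfg["raw_params"])
    eq = weight_memory_gb(cfg["equivalent_params"])
    print(f"{name}: {raw:.0f} GB at raw size vs. about {eq:.0f} GB equivalent")
```

Under these assumptions, the smaller configuration goes from about 10 GB of weights to about 4 GB, and the larger one from about 16 GB to about 8 GB.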

This innovative approach means developers can install Gemma 3N once and seamlessly switch between the two integrated models depending on their needs. For tasks like text summarization where speed is critical, the smaller model configuration provides faster results. For content generation where quality matters most, the larger configuration delivers superior output—all from the same Gemma installation.

Architectural Innovation: Shared Neural Network Layers

The source of Gemma 3N's efficiency is its architecture. Google engineers designed the model so that both configurations share layers of the same neural network, which means those weights are stored once rather than duplicated. Combined with other optimization techniques, this lets the model keep the performance characteristics of much larger models while requiring significantly less memory.
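The general idea of sharing layers between two configurations can be illustrated with a toy sketch. This is not Gemma 3N's actual layer layout: the layer names, counts, and sizes below are made up, and the code only shows why storing shared layers once takes less space than keeping two separate models.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Layer:
    name: str
    num_params: int


# Hypothetical layer stack; the sizes are arbitrary illustration values.
SHARED_LAYERS = [Layer(f"shared_{i}", 100) for i in range(4)]
LARGE_ONLY_LAYERS = [Layer(f"large_only_{i}", 100) for i in range(2)]

# Each configuration is a view over the same Layer objects, not a copy.
CONFIGURATIONS = {
    "small": SHARED_LAYERS,
    "large": SHARED_LAYERS + LARGE_ONLY_LAYERS,
}


def params_if_stored_separately() -> int:
    """Total parameters if each configuration kept its own copy of every layer."""
    return sum(layer.num_params for layers in CONFIGURATIONS.values() for layer in layers)


def params_with_sharing() -> int:
    """Total parameters when layers used by both configurations are stored once."""
    unique = {layer.name: layer for layers in CONFIGURATIONS.values() for layer in layers}
    return sum(layer.num_params for layer in unique.values())


print(params_if_stored_separately())  # 400 + 600 = 1000
print(params_with_sharing())          # 600
```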

This matters for developers building applications on Gemma who want to give users options. An application can offer a choice between performance modes, so users select the configuration that fits their needs and device capabilities. The Gemma documentation is the place to check for how to expose this choice.
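One way an application might make that choice is sketched below. Everything here is hypothetical: the configuration names, the memory thresholds, and the task labels are placeholders for illustration, not values from Google or the Gemma documentation.

```python
# Hypothetical memory needs per configuration, in GB. Real values depend on
# the runtime and quantization, so treat these as placeholders.
REQUIRED_MEMORY_GB = {"small": 2.0, "large": 4.0}

# Hypothetical mapping from task type to the configuration it prefers.
PREFERRED_BY_TASK = {"summarization": "small", "content_generation": "large"}


def choose_configuration(task: str, available_memory_gb: float) -> str:
    """Pick the preferred configuration for a task, falling back to "small"
    when the device cannot hold the larger one."""
    preferred = PREFERRED_BY_TASK.get(task, "small")
    if available_memory_gb < REQUIRED_MEMORY_GB["small"]:
        raise MemoryError("Not enough memory for any configuration")
    if available_memory_gb < REQUIRED_MEMORY_GB[preferred]:
        return "small"
    return preferred


print(choose_configuration("content_generation", available_memory_gb=8.0))
print(choose_configuration("content_generation", available_memory_gb=3.0))
```

The first call selects the larger configuration, and the second falls back to the smaller one because the device does not meet the placeholder threshold.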

True Multimodal Capabilities

Gemma 3N isn't just about text—it's a true multimodal AI model capable of processing audio, text, images, and video. This expanded capability set opens up numerous possibilities for developers creating Gemma applications:

- Transcribe audio to text locally with complete privacy

- Generate accurate captions for videos

- Translate audio content between languages

- Analyze and describe image content

- Process and understand video content

These multimodal capabilities, combined with local deployment options, make Gemma 3N particularly attractive for applications where privacy and offline functionality are priorities.
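An application that accepts several input types needs to decide which capability to invoke for a given file. The sketch below routes files by MIME type using Python's standard `mimetypes` module. The task names are hypothetical labels for the capabilities listed above, not functions from any Gemma library.

```python
import mimetypes

# Hypothetical task names, one per input modality listed above.
TASK_BY_MODALITY = {
    "audio": "transcribe",
    "image": "describe_image",
    "video": "caption_video",
    "text": "generate_text",
}


def task_for_file(path: str) -> str:
    """Return the hypothetical task name for a file, based on its MIME type."""
    mime_type, _encoding = mimetypes.guess_type(path)
    if mime_type is None:
        raise ValueError(f"Cannot determine the type of {path!r}")
    modality = mime_type.split("/", 1)[0]
    if modality not in TASK_BY_MODALITY:
        raise ValueError(f"Unsupported modality {modality!r} for {path!r}")
    return TASK_BY_MODALITY[modality]


for example in ("meeting.wav", "photo.png", "clip.mp4", "notes.txt"):
    print(example, "->", task_for_file(example))
```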

Impressive Performance Benchmarks

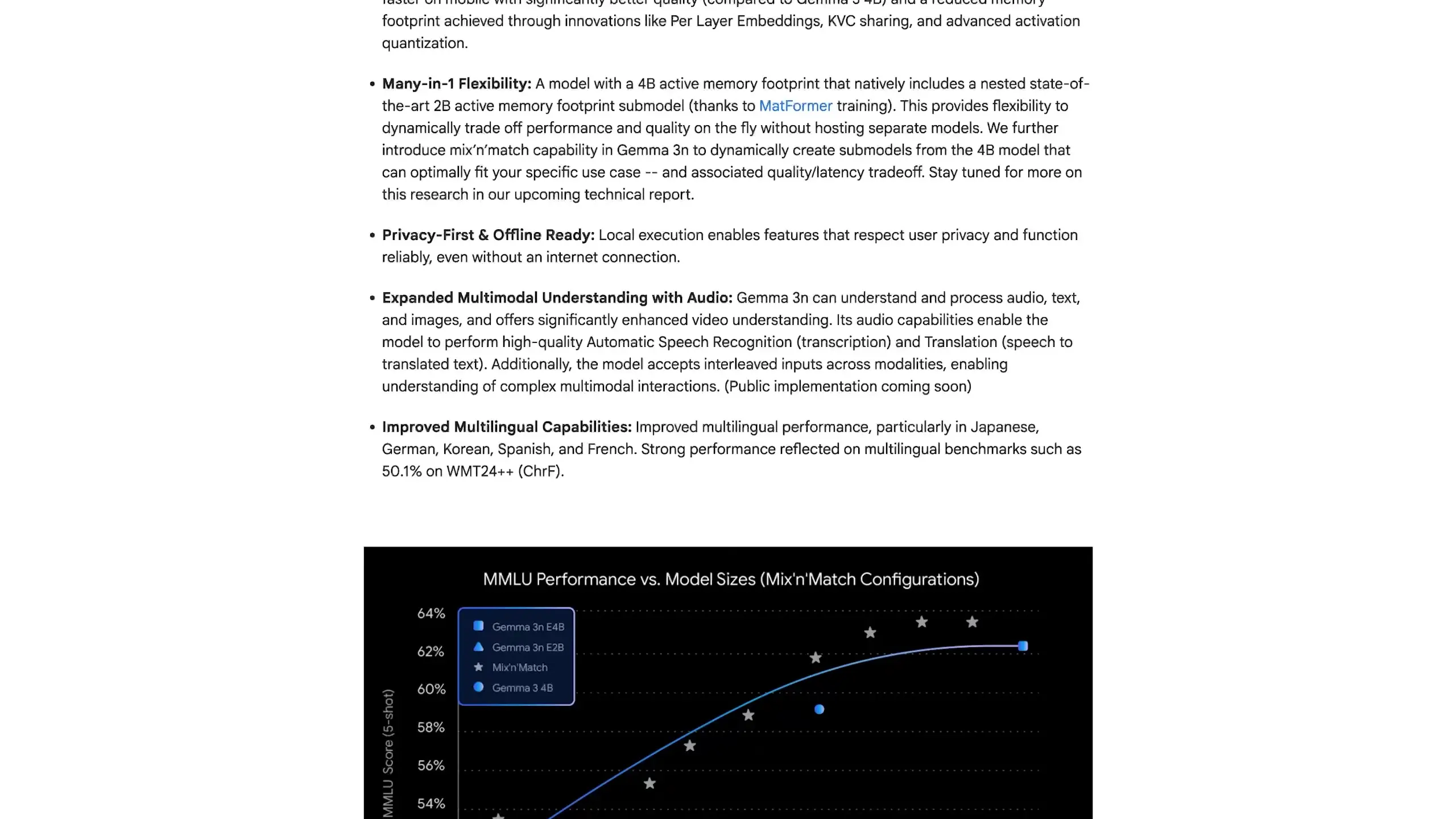

According to Google's benchmarks, Gemma 3N performs well against other models, including proprietary cloud models such as Claude 3.7. Benchmark results should always be viewed critically, but the initial data suggests that Gemma 3N is unusually capable for its size.

The performance advantages stem from the model's architecture, which allows it to maintain the capabilities of larger models while operating within the memory constraints of smaller ones. This makes running Gemma more efficient, especially on devices with limited resources.

Local Deployment: Privacy and Accessibility

One of the most compelling aspects of Gemma 3N is its focus on local deployment. Running the model locally offers several significant advantages:

- Complete privacy—data never leaves your device

- No subscription fees or usage limits

- Offline functionality without internet connectivity

- No network round trips, which can reduce latency compared to cloud-based models

- Full control over model operation and configuration

The reduced memory footprint lowers the hardware requirements for installing Gemma. Google suggests that Gemma 3N could run on relatively low-end devices, including some smartphones, bringing powerful AI capabilities to a much wider range of hardware.

Current Availability and Future Prospects

As of the announcement, Gemma 3N is available in preview mode, but it's not yet integrated with popular local LLM tools like Ollama or LM Studio. However, given the open nature of the model, we can expect wider availability through these platforms in the near future.

For developers interested in exploring Gemma 3N, the official Gemma documentation provides the most up-to-date information on installation procedures, API usage, and example applications. Once the model becomes more widely available, developers will be able to use the published Gemma examples as starting points for their own applications.

```python
# Example of how Gemma 3N might be used in a Python application.
# Conceptual only: the `gemma` package, `GemmaModel`, and its methods are
# hypothetical, modeled on similar LLM libraries, and will not run as written.
from gemma import GemmaModel

# Placeholder inputs for illustration
long_text = "Full text of the article to summarize"
topic = "on-device AI"
audio_file_path = "recording.wav"
image_path = "photo.png"

# Initialize the model with configuration options
model = GemmaModel.from_pretrained("gemma-3n")

# Switch between model configurations based on task needs
model.set_configuration("small")  # Use the 2B-equivalent configuration for faster responses
fast_summary = model.generate("Summarize this article: " + long_text)

model.set_configuration("large")  # Use the 4B-equivalent configuration for higher quality
detailed_content = model.generate("Write a detailed analysis of: " + topic)

# Use multimodal capabilities
transcription = model.transcribe(audio_file_path)
image_description = model.analyze_image(image_path)
```

Potential Applications for Gemma 3N

The unique capabilities of Gemma 3N open up numerous possibilities for application developers:

- Privacy-focused personal assistants that run entirely on-device

- Offline content creation tools for writers and marketers

- Accessibility applications that provide real-time audio transcription

- Educational tools that can function in environments with limited connectivity

- Creative applications that generate or analyze multimedia content locally

For developers already working with other Gemma models, the transition to implementing Gemma 3N should be relatively straightforward once the model becomes widely available through standard deployment tools.

Conclusion: A Significant Step Forward for Local AI

Gemma 3N represents a significant advancement in making powerful AI accessible locally. By combining two models in one package and dramatically reducing memory requirements, Google has created a solution that addresses many of the current limitations of local AI deployment.

As the model becomes more widely available and developers begin integrating it into their applications, we can expect to see a new generation of AI-powered tools that offer the benefits of advanced language models without the privacy concerns, subscription costs, or connectivity requirements of cloud-based alternatives.

For developers interested in staying at the forefront of AI technology, exploring Gemma 3N's capabilities and preparing for its wider release should be a priority. The combination of powerful multimodal features, efficient resource usage, and local deployment options makes it one of the most promising developments in the open-source AI landscape.
