In the rapidly evolving landscape of AI models, GLM 4.5 has emerged as a compelling, affordable alternative to premium options like Claude Sonnet 4. With a coding plan priced at just $3 per month, this Chinese AI model is positioning itself as a serious contender in the coding assistance space, offering significant cost advantages while maintaining competitive performance.

GLM 4.5: The Cost-Effective Coding Assistant

GLM 4.5, developed by Z.ai, recently launched a coding-specific plan that is approximately seven times cheaper than Claude Pro while offering three times more usage. That works out to roughly 20 times more usage per dollar (7 × 3 = 21), a compelling proposition for developers seeking affordable AI tools without compromising on capabilities.
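The "roughly 20 times" figure is simply the product of the two ratios quoted above. A minimal sketch of that arithmetic follows; the function name is illustrative only, and the inputs are the approximate ratios from this article rather than official pricing data.

```python
def cost_effectiveness(price_ratio: float, usage_ratio: float) -> float:
    """Usage per dollar relative to the baseline plan.

    price_ratio: how many times cheaper the plan is than the baseline.
    usage_ratio: how many times more usage it offers than the baseline.
    """
    return price_ratio * usage_ratio


# Approximate ratios quoted in the article (GLM coding plan vs. Claude Pro).
multiplier = cost_effectiveness(price_ratio=7, usage_ratio=3)
print(multiplier)  # 21
```

Because both inputs are rounded estimates, the result is best read as "about 20x" rather than as an exact figure.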

The GLM series has consistently offered economical options, including a free 'flash' model (an optimized version of their 106 billion parameter model), cross-lingual versions, and their flagship GLM 4.5 model with 355 billion parameters. All models feature a 128k context window and strong reasoning capabilities, making them versatile for various development tasks.

Head-to-Head Comparison: Methodology

To evaluate how GLM 4.5 stacks up against Claude Sonnet 4, we conducted three progressively challenging coding tasks:

- Easy Task: Building a personal portfolio website with HTML, CSS, and JavaScript

- Medium Task: Upgrading a Hacker News clone with infinite scrolling, caching, and advanced search

- Hard Task: Creating a Minecraft-like game using Three.js

For testing, we used OpenCode, which reports an estimated cost for each session and runs a single model at a time. This allowed a direct comparison of both performance and cost-efficiency.

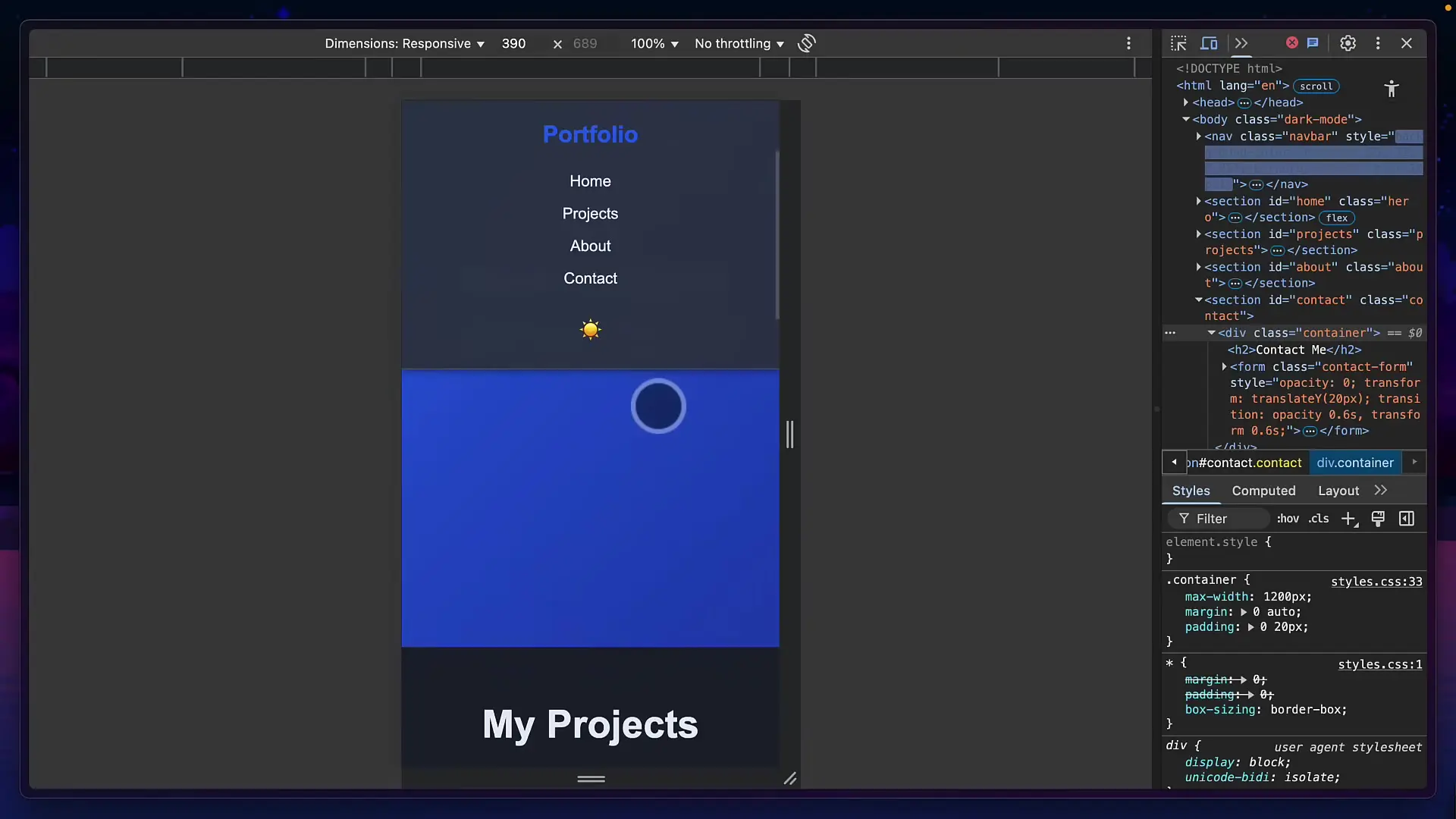

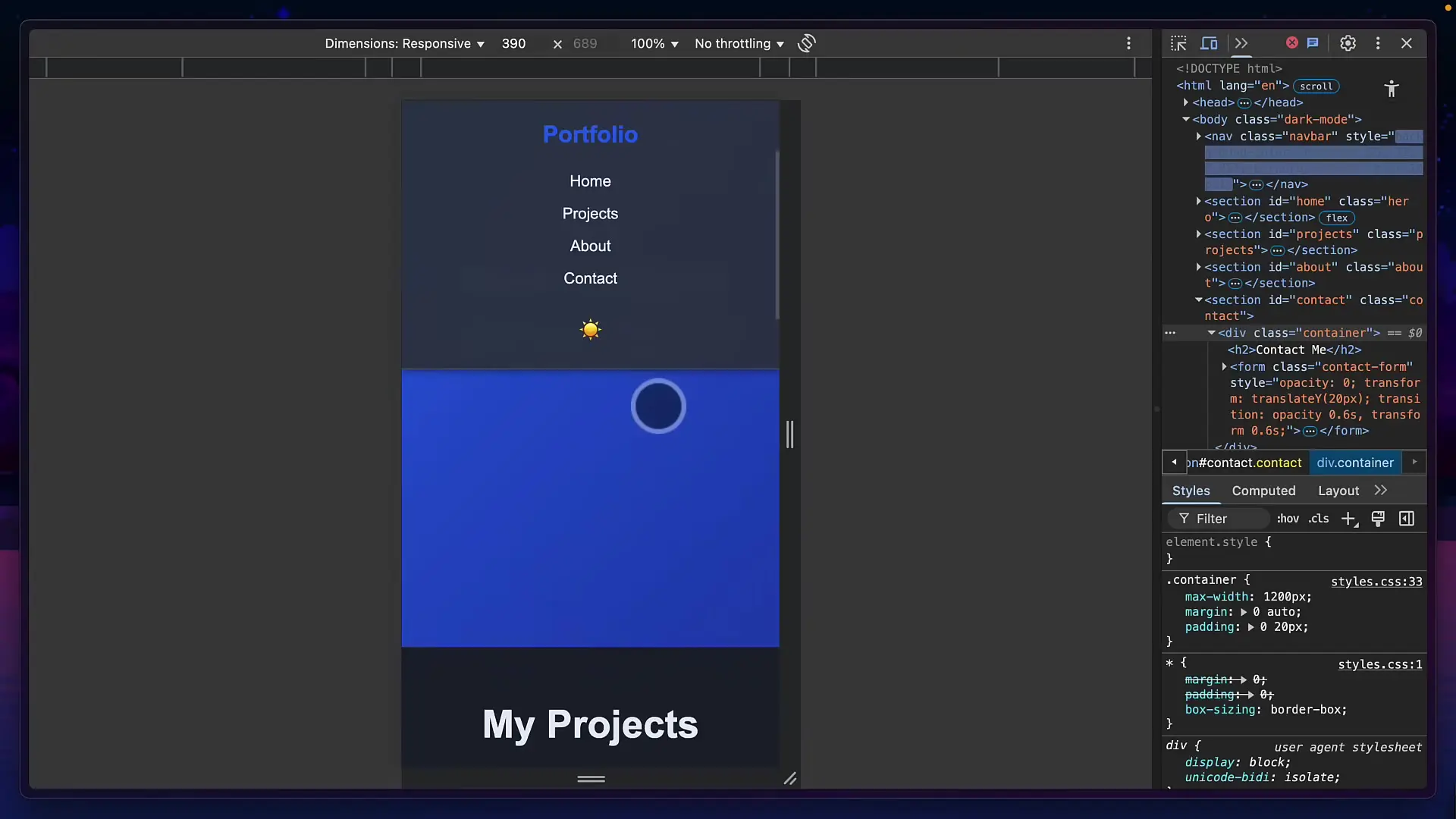

Task 1: Building a Portfolio Website

GLM 4.5 completed the portfolio website in just 2 minutes and 20 seconds at an approximate cost of 12 cents. The result featured animations, a dark/light mode toggle, project sections, and a contact form. The website was responsive, though it lacked a collapsible mobile menu.

Claude Sonnet 4 took longer and cost nearly triple what GLM 4.5 did, but delivered slightly better results. Its portfolio included nicer animations, gradient effects, and importantly, a hamburger menu for mobile layouts. Claude also provided a breakdown of common issues and debugging steps, adding educational value.

Winner of Task 1: Claude Sonnet 4 (by a small margin)

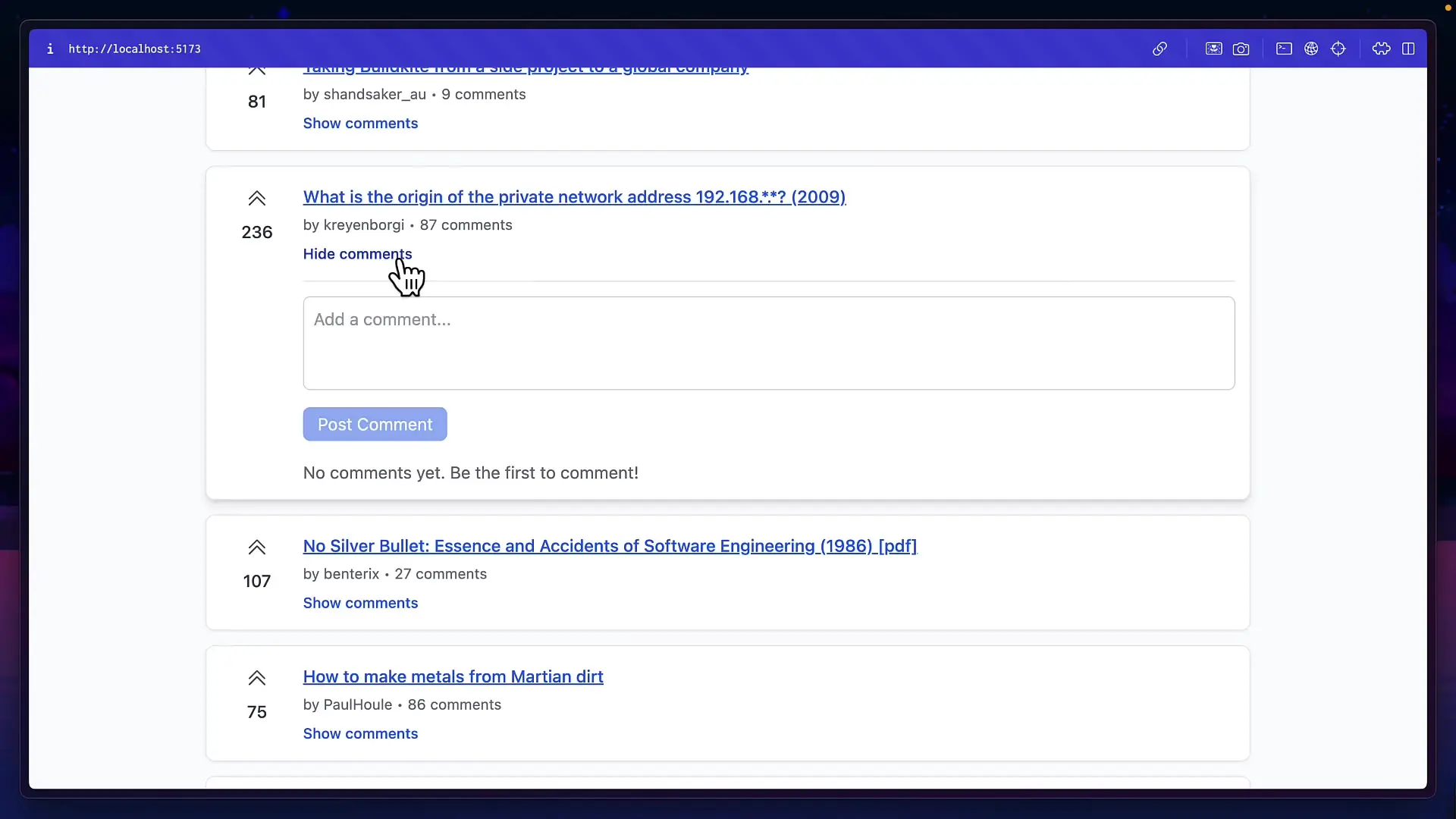

Task 2: Upgrading a Hacker News Clone

For the medium difficulty task, Claude Sonnet 4 completed the upgrade in 10 minutes and 39 seconds. The result was impressive, featuring infinite scrolling that loaded more stories when reaching the bottom of the page, live comment updates, and an advanced search function with instant results and search tips.
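The infinite-scrolling and caching behaviour described here comes down to two small pieces of logic: detect when the viewport is near the bottom of the content, and avoid refetching a page that has already been loaded. The sketch below shows that logic in a language-neutral way using Python. It is not the code either model produced; `StoryFeed`, `fetch_page`, and `threshold_px` are hypothetical names chosen for illustration.

```python
from typing import Callable, Dict, List


class StoryFeed:
    """Loads pages of story IDs on demand and caches pages already fetched."""

    def __init__(self, fetch_page: Callable[[int], List[int]], threshold_px: int = 200):
        self._fetch_page = fetch_page            # hypothetical network call
        self._cache: Dict[int, List[int]] = {}   # page number -> story IDs
        self._next_page = 0
        self.threshold_px = threshold_px
        self.stories: List[int] = []

    def get_page(self, page: int) -> List[int]:
        """Return a page, calling fetch_page only on a cache miss."""
        if page not in self._cache:
            self._cache[page] = self._fetch_page(page)
        return self._cache[page]

    def on_scroll(self, scroll_top: int, viewport_height: int, content_height: int) -> bool:
        """Append the next page when the viewport is within threshold_px of the bottom."""
        near_bottom = scroll_top + viewport_height >= content_height - self.threshold_px
        if near_bottom:
            self.stories.extend(self.get_page(self._next_page))
            self._next_page += 1
        return near_bottom


# Usage with a stand-in fetch function that returns three fake story IDs per page.
feed = StoryFeed(lambda page: [page * 3 + i for i in range(3)], threshold_px=100)
feed.on_scroll(scroll_top=1350, viewport_height=600, content_height=2000)
print(feed.stories)  # [0, 1, 2]
```

In a browser the same check would typically run inside a scroll or intersection handler, with the cache keyed by page number or request URL.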

GLM 4.5 finished in approximately half the time (5 minutes 28 seconds) at half the cost. The infinite scrolling and search functionality worked well and the UI looked good, but the comments section did not render properly, so the result needed additional fixes.

Winner of Task 2: Claude Sonnet 4

Task 3: Creating a Minecraft-like Game

In the most challenging task, GLM 4.5 surprised us by completing a functional Minecraft-like game in just 1 minute at a cost of only 7 cents. The initial version had collision detection issues (the character walked through walls), but these were quickly fixed with a follow-up prompt. The game featured basic movement controls, jumping mechanics, and even trees as environmental objects.

Claude Sonnet 4 took over 6 minutes and produced a game with significant control issues. The mouse movement was erratic, causing a disorienting slanting effect, and the controls were reversed (left input moved right and vice versa). The collision detection was also problematic, making the game essentially unplayable without extensive fixes.
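Both failure modes mentioned in this task, walking through walls and reversed left/right input, come down to small pieces of logic. The sketch below illustrates them in Python rather than Three.js to keep it self-contained. It is an assumption-laden simplification, not the code from either model: the player is treated as a single point, blocks are unit cubes on an integer grid, and all function names are hypothetical.

```python
import math
from typing import Set, Tuple

Block = Tuple[int, int, int]
Vec3 = Tuple[float, float, float]


def is_blocked(solid: Set[Block], x: float, y: float, z: float) -> bool:
    """True if the point (x, y, z) lies inside a solid unit block."""
    return (math.floor(x), math.floor(y), math.floor(z)) in solid


def try_move(solid: Set[Block], pos: Vec3, delta: Vec3) -> Vec3:
    """Move one axis at a time, dropping any component that would enter a solid block."""
    x, y, z = pos
    dx, dy, dz = delta
    if not is_blocked(solid, x + dx, y, z):
        x += dx
    if not is_blocked(solid, x, y + dy, z):
        y += dy
    if not is_blocked(solid, x, y, z + dz):
        z += dz
    return (x, y, z)


def strafe_delta(left_pressed: bool, right_pressed: bool, speed: float) -> float:
    """Signed sideways movement; by assumption, right is positive and left is negative."""
    return speed * (int(right_pressed) - int(left_pressed))


# A single wall block at grid cell (1, 0, 0) stops movement along +x.
walls = {(1, 0, 0)}
print(try_move(walls, (0.5, 0.5, 0.5), (0.6, 0.0, 0.0)))  # (0.5, 0.5, 0.5)
```

Resolving each axis separately lets the player slide along a wall instead of stopping dead. A real game would test the player's full bounding box rather than a single point, and a reversed-controls bug usually traces back to a flipped sign in the equivalent of `strafe_delta`.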

Winner of Task 3: GLM 4.5 (by a significant margin)

Overall Results and Value Proposition

In our testing, Claude Sonnet 4 slightly outperformed GLM 4.5 overall, winning two of the three tasks. However, the performance gap was relatively small, especially considering the dramatic price difference between the two services.

- GLM 4.5 Strengths: Extremely cost-effective ($3/month), fast execution times, impressive performance on the 3D game task

- GLM 4.5 Limitations: Not multimodal, occasional implementation errors requiring follow-up prompts

- Claude Sonnet 4 Strengths: More polished results, better attention to UI/UX details, more comprehensive explanations

- Claude Sonnet 4 Limitations: Significantly more expensive, struggled with complex 3D programming
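The "fast execution times" point can be checked against the timings reported for Tasks 2 and 3. The short calculation below uses only figures stated in this article. Task 1 is left out because no exact time was given for Claude Sonnet 4, and the Task 3 ratio is a rough lower bound because Claude's time was reported only as "over 6 minutes".

```python
def to_seconds(minutes: int, seconds: int = 0) -> int:
    """Convert a minutes/seconds timing to total seconds."""
    return minutes * 60 + seconds


def speedup(slower_s: int, faster_s: int) -> float:
    """How many times faster the quicker run was."""
    return slower_s / faster_s


# Task 2: Claude Sonnet 4 took 10:39, GLM 4.5 took 5:28.
task2 = speedup(to_seconds(10, 39), to_seconds(5, 28))

# Task 3: Claude took "over 6 minutes", GLM 4.5 about 1 minute (lower bound).
task3_lower_bound = speedup(to_seconds(6), to_seconds(1))

print(f"Task 2: GLM 4.5 was about {task2:.2f}x faster")
print(f"Task 3: GLM 4.5 was at least {task3_lower_bound:.0f}x faster")
```

Speed alone does not decide a task, which is why Claude Sonnet 4 still won Task 2 on output quality despite taking nearly twice as long.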

Is GLM 4.5 Worth Considering as an Affordable AI Alternative?

For developers working with tight budgets or those who need frequent AI assistance without breaking the bank, GLM 4.5's coding plan offers exceptional value. At just $3 monthly (approximately £2), you get access to a state-of-the-art model with 355 billion parameters that performs competitively against premium alternatives costing many times more.

The primary limitation is that GLM 4.5 itself isn't multimodal. Image input is handled by a separate model, GLM 4.5V, whose image reading capabilities aren't as strong as those of some competitors. However, for pure coding tasks, GLM 4.5 delivers impressive results at a fraction of the cost of other leading models.

Conclusion: Best Use Cases for GLM 4.5

GLM 4.5 is an excellent choice for:

- Individual developers or small teams with limited AI budgets

- Educational environments where cost is a significant factor

- Projects requiring frequent AI assistance for coding tasks

- Developers who need a capable AI coding assistant but don't require multimodal capabilities

- Anyone looking to experiment with AI coding assistance without significant financial commitment

While Claude Sonnet 4 might deliver slightly more polished results in some scenarios, GLM 4.5's performance-to-price ratio makes it one of the most compelling affordable AI alternatives currently available for developers. As AI tools continue to evolve, this kind of accessible pricing could help democratize access to advanced coding assistance.

