Google recently announced a suite of products for building, connecting, and deploying AI agents, positioning itself as a leader in this emerging space. Among these announcements, the A2A (Agent-to-Agent) protocol stands out as particularly significant for the future of AI system architecture and interoperability.

Google's AI Agent Ecosystem: Setting the Stage

Before diving into the A2A protocol specifically, it's worth understanding the broader context of Google's recent announcements. Google unveiled several products designed to establish its presence in the AI agent space:

- Agentspace: A hub where enterprises connect their data sources to AI agents to power AI-driven search and workflows

- Agent Development Kit: A Python SDK for building agentic applications, positioning itself as an alternative to frameworks like LangChain and LangGraph

- Firebase Studio: An AI-powered web application creator using natural language prompts

- A2A Protocol: A standardized protocol for agent-to-agent communication

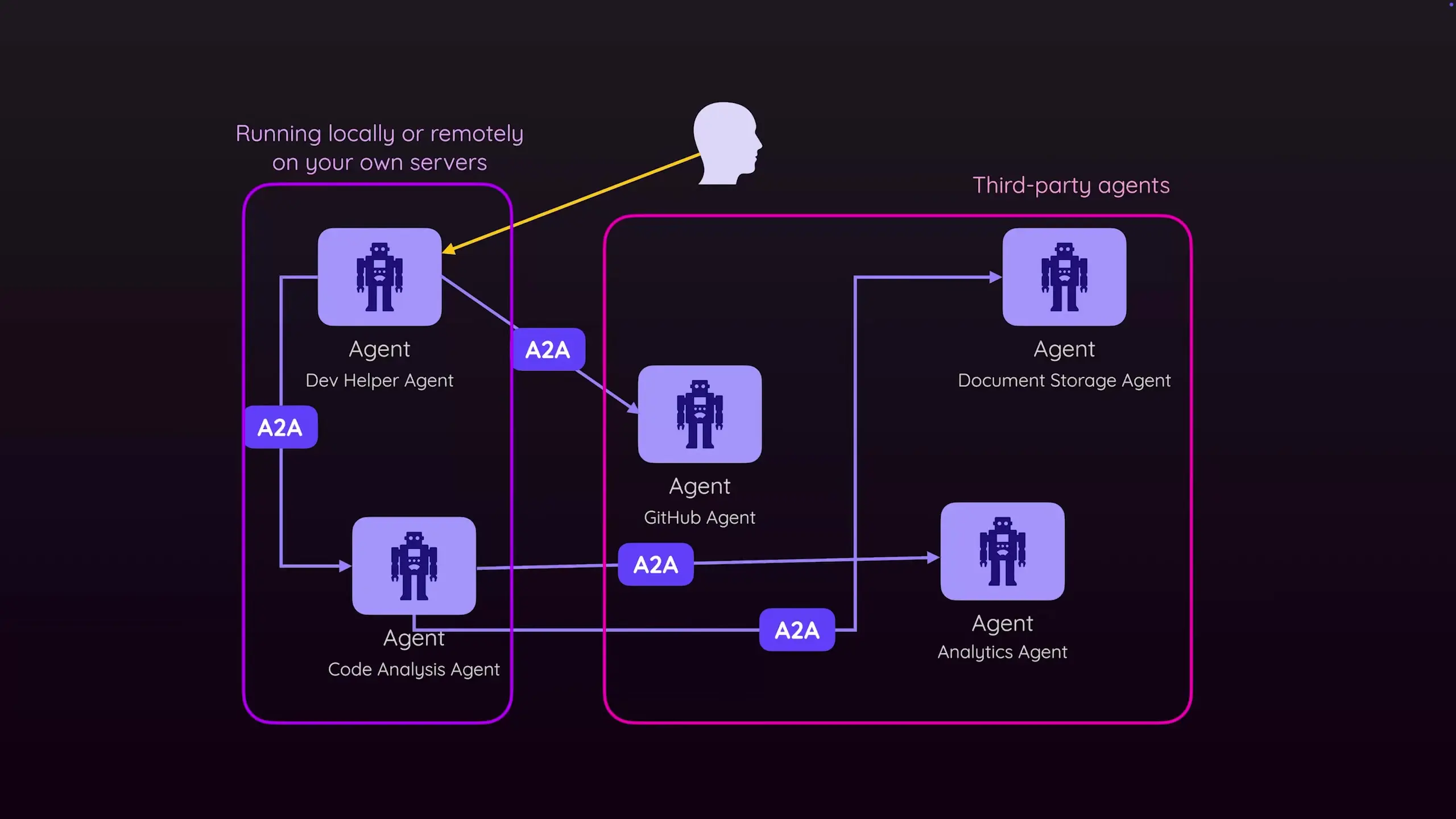

This suite of tools indicates Google's strategic vision of a future where specialized AI agents collaborate to accomplish complex tasks, with the A2A protocol serving as the communication backbone.

Why Multiple Specialized Agents Instead of One Super Agent?

A fundamental question arises: Why focus on connecting multiple specialized agents rather than building one comprehensive agent? There are several compelling reasons for the multi-agent approach:

- Model specialization: Different AI models excel at different tasks. A coding-focused model might be optimal for programming tasks, while another might be better at generating human-friendly reports

- Complexity management: Just as we use different applications for different purposes in traditional software, distributing functionality across specialized agents can reduce complexity

- Security considerations: Specialized agents can implement appropriate security measures for their specific domain

- Avoiding vendor lock-in: Using agents from different providers prevents dependence on a single vendor's ecosystem

- Fine-tuning efficiency: It's more efficient to fine-tune specialized models for specific domains than to create one model that performs well across all tasks

This distributed approach mirrors how we've traditionally organized software applications, with specialized tools for specialized tasks, rather than monolithic all-in-one solutions.

How the Google A2A Protocol Works: Technical Deep Dive

The A2A protocol addresses a critical challenge in the multi-agent ecosystem: how can independent agents, potentially created by different developers or companies, discover each other's capabilities and communicate effectively? The protocol includes several key components:

Agent Discoverability Through Agent Cards

At the core of the A2A protocol is the concept of agent discoverability. Each agent exposes an "agent card" - a JSON document that describes:

- The agent's capabilities and specialized functions

- Authentication requirements and methods

- Available communication mechanisms (e.g., server-sent events, push notifications)

- API endpoints and interaction patterns

A simplified, illustrative agent card might look like this (field names are for illustration and may not match the published A2A schema):

```json
{
  "name": "GitHub Assistant Agent",
  "description": "Specialized agent for GitHub operations",
  "capabilities": ["code review", "issue management", "PR handling"],
  "authentication": {
    "required": true,
    "type": "oauth",
    "tokenEndpoint": "https://github-agent.example.com/auth/token"
  },
  "communicationMethods": ["synchronous", "serverSentEvents"],
  "apiEndpoint": "https://github-agent.example.com/api/v1"
}
```

This agent card allows other agents to understand what the agent can do and how to interact with it, facilitating dynamic discovery and collaboration.
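As a rough sketch of how a client might consume such a card, the Python below parses the example card and checks what it advertises. The field names follow this article's simplified example rather than the published A2A schema, and the `AgentCard` class with its `supports` and `requires_auth` helpers are hypothetical names introduced for illustration.

```python
import json
from dataclasses import dataclass, field

# The simplified card from this article; field names are illustrative,
# not the published A2A schema.
CARD_JSON = """
{
  "name": "GitHub Assistant Agent",
  "description": "Specialized agent for GitHub operations",
  "capabilities": ["code review", "issue management", "PR handling"],
  "authentication": {
    "required": true,
    "type": "oauth",
    "tokenEndpoint": "https://github-agent.example.com/auth/token"
  },
  "communicationMethods": ["synchronous", "serverSentEvents"],
  "apiEndpoint": "https://github-agent.example.com/api/v1"
}
"""


@dataclass
class AgentCard:
    """Hypothetical in-memory view of an agent card."""
    name: str
    description: str
    api_endpoint: str
    capabilities: list = field(default_factory=list)
    communication_methods: list = field(default_factory=list)
    authentication: dict = field(default_factory=dict)

    @classmethod
    def from_json(cls, text: str) -> "AgentCard":
        raw = json.loads(text)
        return cls(
            name=raw["name"],
            description=raw.get("description", ""),
            api_endpoint=raw["apiEndpoint"],
            capabilities=raw.get("capabilities", []),
            communication_methods=raw.get("communicationMethods", []),
            authentication=raw.get("authentication", {}),
        )

    def supports(self, capability: str) -> bool:
        """Case-insensitive check against the advertised capabilities."""
        wanted = capability.lower()
        return any(c.lower() == wanted for c in self.capabilities)

    def requires_auth(self) -> bool:
        """True when the card marks authentication as required."""
        return bool(self.authentication.get("required", False))


card = AgentCard.from_json(CARD_JSON)
print(card.name, card.supports("code review"), card.requires_auth())
```

A calling agent could use checks like these to decide whether a discovered agent is worth contacting before it spends time on authentication.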

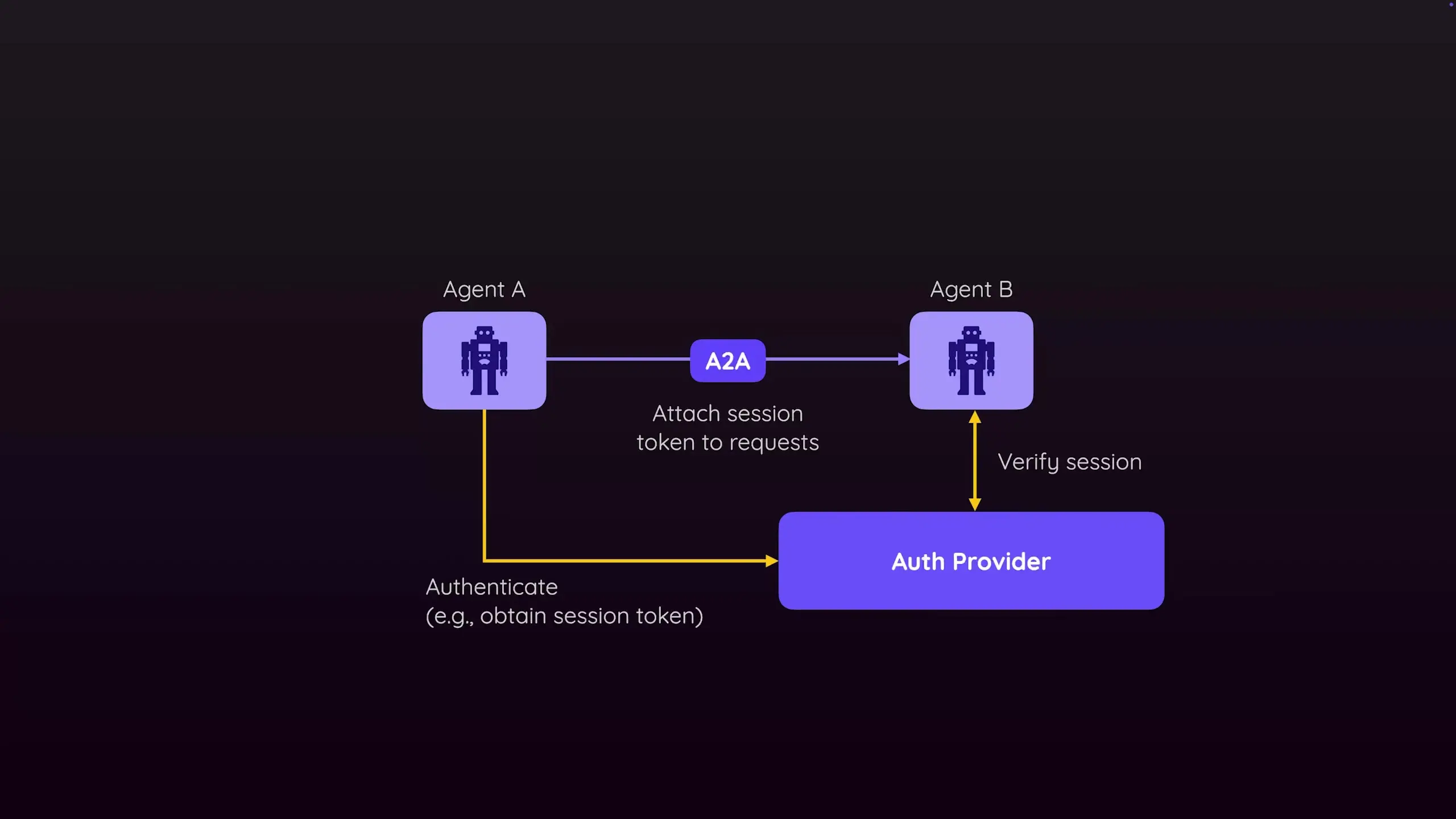

Authentication Flow

When an agent needs to communicate with another agent that requires authentication, the A2A protocol defines a standardized authentication flow:

1. The initiating agent examines the target agent's card to identify authentication requirements

2. The initiating agent obtains the necessary credentials from the specified provider

3. The initiating agent sends an authentication request with the obtained credentials

4. Upon successful authentication, the target agent grants access for subsequent interactions

This standardized approach helps secure communication between agents while leaving each agent free to choose its own authentication method.
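The four steps above can be sketched in a few lines of Python. This is a toy, in-memory illustration under stated assumptions: `FakeTokenProvider`, `TargetAgent`, and `call_agent` are hypothetical names, the token format is made up, and no real OAuth exchange or network call takes place.

```python
import secrets


class FakeTokenProvider:
    """Hypothetical stand-in for the token endpoint named in an agent card."""

    def __init__(self) -> None:
        self._issued = set()

    def issue_token(self, client_id: str) -> str:
        token = f"{client_id}:{secrets.token_hex(8)}"
        self._issued.add(token)
        return token

    def is_valid(self, token: str) -> bool:
        return token in self._issued


class TargetAgent:
    """Hypothetical target agent that validates tokens before serving requests."""

    def __init__(self, card: dict, provider: FakeTokenProvider) -> None:
        self.card = card
        self._provider = provider

    def handle(self, request: dict) -> dict:
        auth = self.card.get("authentication", {})
        token = request.get("token", "")
        if auth.get("required") and not self._provider.is_valid(token):
            return {"status": "unauthorized"}
        return {"status": "ok", "echo": request["task"]}


def call_agent(target: TargetAgent, provider: FakeTokenProvider, task: str) -> dict:
    # Step 1: read the authentication requirements from the card.
    auth = target.card.get("authentication", {})
    request = {"task": task}
    # Step 2: obtain credentials only if the card asks for them.
    if auth.get("required"):
        request["token"] = provider.issue_token("primary-agent")
    # Steps 3 and 4: send the credentials; the target grants or denies access.
    return target.handle(request)


provider = FakeTokenProvider()
target = TargetAgent({"authentication": {"required": True, "type": "oauth"}}, provider)
print(call_agent(target, provider, "review PR"))
print(target.handle({"task": "review PR"}))  # no token attached
```

The point of the sketch is the ordering: the caller learns what is required from the card before it ever contacts the target, so the target never has to negotiate authentication mid-request.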

Request-Response Communication

The basic communication pattern in the A2A protocol follows a request-response model, where agents exchange structured messages to delegate tasks and return results. The protocol specifies formats for these messages, including:

- Task descriptions and parameters

- Response formats and status codes

- Error handling conventions

- Metadata for tracking and logging
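To make the four message elements above concrete, here is a minimal Python sketch of a request and response pair. It does not reproduce the protocol's actual wire format: the `TaskRequest` and `TaskResponse` classes, their field names, the status strings, and the `word_count` task are all assumptions made for illustration.

```python
import json
import uuid
from dataclasses import asdict, dataclass, field
from typing import Any, Optional


@dataclass
class TaskRequest:
    """Hypothetical request: task description, parameters, and metadata."""
    task: str
    params: dict = field(default_factory=dict)
    request_id: str = field(default_factory=lambda: str(uuid.uuid4()))
    metadata: dict = field(default_factory=dict)


@dataclass
class TaskResponse:
    """Hypothetical response: status, result or error, and echoed metadata."""
    request_id: str
    status: str  # "completed" or "failed" in this sketch
    result: Optional[Any] = None
    error: Optional[str] = None
    metadata: dict = field(default_factory=dict)


def handle(request: TaskRequest) -> TaskResponse:
    """Toy handler: knows one task and reports anything else as an error."""
    if request.task == "word_count":
        text = request.params.get("text", "")
        return TaskResponse(request.request_id, "completed",
                            result=len(text.split()), metadata=request.metadata)
    return TaskResponse(request.request_id, "failed",
                        error=f"unknown task: {request.task}",
                        metadata=request.metadata)


req = TaskRequest("word_count", {"text": "agents talking to agents"},
                  metadata={"trace": "demo-1"})
resp = handle(req)
print(json.dumps(asdict(resp), indent=2))
```

Echoing the request identifier and metadata in the response is one simple way to support the tracking and logging the list mentions, since a caller can match each reply to the task it delegated.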

A2A Protocol vs. MCP (Model Context Protocol): Complementary Approaches

Google positions the A2A protocol as complementary to Anthropic's Model Context Protocol (MCP), rather than competitive. This distinction is important for understanding the evolving AI agent ecosystem:

- MCP focuses on standardizing how tools are exposed to a single agent, making it easier to add capabilities to an individual agent

- A2A focuses on how different agents communicate with each other, enabling collaboration between specialized agents

- An agent could use MCP internally to access tools while using A2A to communicate with other agents

- Together, they provide a comprehensive framework for building complex, multi-agent AI systems

This complementary relationship suggests a future where both protocols might coexist, serving different but related purposes in the AI agent ecosystem.
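The division of labor described above can be sketched without using either protocol's real API. In the hypothetical `Agent` class below, the `tools` dictionary stands in for the role MCP plays (capabilities attached to one agent) and the `peers` dictionary stands in for the role A2A plays (delegation to another agent); all names and the toy tools are assumptions for illustration.

```python
from typing import Callable, Dict


class Agent:
    """Hypothetical agent with local tools and remote peers."""

    def __init__(self, name: str) -> None:
        self.name = name
        # Local tools: the role MCP plays for a single agent.
        self.tools: Dict[str, Callable[[str], str]] = {}
        # Peer agents keyed by skill: the role A2A plays between agents.
        self.peers: Dict[str, "Agent"] = {}

    def run(self, skill: str, payload: str) -> str:
        # Prefer a local tool; otherwise delegate to a peer that has the skill.
        if skill in self.tools:
            return self.tools[skill](payload)
        if skill in self.peers:
            return self.peers[skill].run(skill, payload)
        raise LookupError(f"{self.name} cannot handle {skill!r}")


github_agent = Agent("github-agent")
github_agent.tools["count_lines"] = lambda diff: str(len(diff.splitlines()))

primary = Agent("primary-agent")
primary.tools["shout"] = lambda text: text.upper()
primary.peers["count_lines"] = github_agent

print(primary.run("shout", "hello"))          # handled by a local tool
print(primary.run("count_lines", "a\nb\nc"))  # delegated to the peer agent
```

Note that the peer resolves the delegated skill with its own local tool, which mirrors the idea that an agent can use one mechanism internally and the other externally.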

Practical Examples: The A2A Protocol in Action

To illustrate how the Google A2A protocol might work in practice, consider these scenarios:

Example 1: Document Processing Workflow

A user asks their primary agent to "analyze the quarterly financial reports and prepare a summary presentation." This complex task might involve:

- The primary agent uses A2A to discover and communicate with a document retrieval agent specialized in finding and accessing financial documents

- After obtaining the documents, the primary agent connects with a financial analysis agent that specializes in interpreting financial data

- Finally, the primary agent collaborates with a presentation creation agent to format the findings appropriately

- Throughout this process, each agent performs its specialized function without the user needing to manually coordinate between them
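A minimal sketch of this workflow, assuming a simple in-process registry in place of real agent discovery, might look like the following. The registry, the agent names, the capability strings, and the revenue figures are all placeholders invented for illustration; real agents would be contacted over the network through their advertised endpoints.

```python
from typing import Any, Callable, Dict, List, Tuple

# Hypothetical registry mapping a capability to (agent name, handler).
REGISTRY: Dict[str, Tuple[str, Callable[[Any], Any]]] = {}


def register(capability: str, name: str, handler: Callable[[Any], Any]) -> None:
    REGISTRY[capability] = (name, handler)


def discover(capability: str) -> Tuple[str, Callable[[Any], Any]]:
    """Look up the agent that advertises a capability."""
    if capability not in REGISTRY:
        raise LookupError(f"no agent advertises {capability!r}")
    return REGISTRY[capability]


# Toy agents with placeholder data, standing in for the three specialists.
register("document retrieval", "retrieval-agent",
         lambda query: ["Q1 revenue: 10", "Q2 revenue: 12"])
register("financial analysis", "analysis-agent",
         lambda docs: {"total": sum(int(d.split(": ")[1]) for d in docs)})
register("presentation creation", "slides-agent",
         lambda findings: f"Slide 1: total revenue {findings['total']}")


def run_pipeline(query: str, steps: List[str]) -> Tuple[Any, List[str]]:
    """Primary agent: discover each specialist in turn and pass results along."""
    data: Any = query
    trace: List[str] = []
    for capability in steps:
        name, handler = discover(capability)
        data = handler(data)
        trace.append(name)
    return data, trace


result, trace = run_pipeline(
    "quarterly financial reports",
    ["document retrieval", "financial analysis", "presentation creation"],
)
print(result)
print(trace)
```

The primary agent only knows which capabilities it needs, not which agents provide them, so a specialist can be swapped out by registering a different handler for the same capability.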

Example 2: Software Development Assistant

A developer's coding assistant could use the A2A protocol to:

- Connect with a GitHub-specialized agent for repository management and code review

- Utilize a code generation agent optimized for specific programming languages

- Integrate with a testing agent that specializes in generating and running test cases

- Communicate with a documentation agent that can create clear explanations of the code

In both examples, the A2A protocol enables seamless collaboration between specialized agents, each optimized for specific tasks within the broader workflow.

Implications for the Future of AI Systems

The introduction of the Google A2A protocol has several significant implications for how AI systems might evolve:

- Ecosystem development: A standardized communication protocol could foster a rich ecosystem of specialized agents from different providers

- Democratization of AI capabilities: Smaller companies could focus on building excellent specialized agents rather than trying to compete with comprehensive solutions

- Improved AI performance: Specialized agents can leverage purpose-built models that excel at specific tasks

- Enhanced extensibility: Systems can evolve by adding new agent capabilities without redesigning existing components

- Cross-vendor interoperability: Agents from different providers can work together through a shared protocol rather than custom, one-off integrations

However, challenges remain, including ensuring security across agent boundaries, managing performance when tasks span multiple agents, and establishing governance models for the emerging agent ecosystem.

Conclusion: The A2A Protocol and the Future of AI Agents

Google's A2A protocol represents a significant step toward a future where AI systems consist of networks of specialized, interoperable agents rather than monolithic applications. By providing a standardized way for agents to discover each other's capabilities and communicate effectively, A2A could enable more flexible, powerful, and maintainable AI systems.

While it's too early to predict whether A2A, MCP, both, or neither will become the dominant standard, Google's investment in this area signals a clear vision of AI's future direction. For developers and enterprises planning their AI strategies, understanding protocols like A2A will be increasingly important as the AI agent ecosystem continues to evolve and mature.

As with any emerging technology, the true test will be adoption and implementation. The success of the Google A2A protocol will ultimately depend on whether it can deliver on its promise of enabling more powerful, flexible AI systems through standardized agent communication.
