OpenAI has released a new family of models: GPT-4.1, GPT-4.1 Mini, and GPT-4.1 Nano. These models are a significant step forward, particularly for developers working with code. All three outperform their predecessors, GPT-4o and GPT-4o mini, with major improvements in coding, instruction following, and long-context comprehension.

The GPT-4.1 Family: What's New

The headline feature across all three new models is their expanded context window. Each supports up to 1 million tokens of context, up from 128,000 tokens for GPT-4o. That increase enables more comprehensive document analysis, code repository exploration, and complex problem-solving. More impressive still, these models are better at actually using that expanded context effectively.
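Before sending a whole repository to one of these models, it helps to estimate whether it fits in the 1 million token window. The sketch below assumes the common rule of thumb of roughly 4 characters per token; that ratio, the `reservedTokens` headroom, and the helper names are illustrative assumptions, and exact counts require the model's own tokenizer.

```typescript
// Rough check of whether a set of files fits in a 1M-token context window.
// ASSUMPTION: ~4 characters per token is a heuristic, not a real tokenizer.
const CONTEXT_WINDOW_TOKENS = 1_000_000;
const CHARS_PER_TOKEN = 4;

interface SourceFile {
  path: string;
  contents: string;
}

// Estimate tokens for one string by rounding characters / CHARS_PER_TOKEN up.
function estimateTokens(text: string): number {
  return Math.ceil(text.length / CHARS_PER_TOKEN);
}

// Sum the per-file estimates and check that the total, plus a reserve for
// instructions and the model's reply, stays within the window.
function fitsInContext(
  files: SourceFile[],
  reservedTokens = 50_000, // hypothetical headroom, tune for your prompts
): { estimatedTokens: number; fits: boolean } {
  const estimatedTokens = files.reduce(
    (sum, f) => sum + estimateTokens(f.contents),
    0,
  );
  return {
    estimatedTokens,
    fits: estimatedTokens + reservedTokens <= CONTEXT_WINDOW_TOKENS,
  };
}

// Usage with two made-up files of 400,000 and 2,000,000 characters.
const repo: SourceFile[] = [
  { path: "src/app.ts", contents: "x".repeat(400_000) },
  { path: "src/util.ts", contents: "y".repeat(2_000_000) },
];
console.log(fitsInContext(repo));
```

Reserving headroom matters because the prompt instructions and the generated answer share the same window as the pasted code.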

- GPT-4.1: The flagship model with premium capabilities

- GPT-4.1 Mini: A balanced option for most use cases

- GPT-4.1 Nano: OpenAI's first 'nano' model, and its fastest and most cost-effective

These models come at a time when competition in the AI space is intensifying, with Gemini and Claude pushing boundaries in various performance metrics. This competition ultimately benefits developers and users who now have more powerful, accessible tools at their disposal.

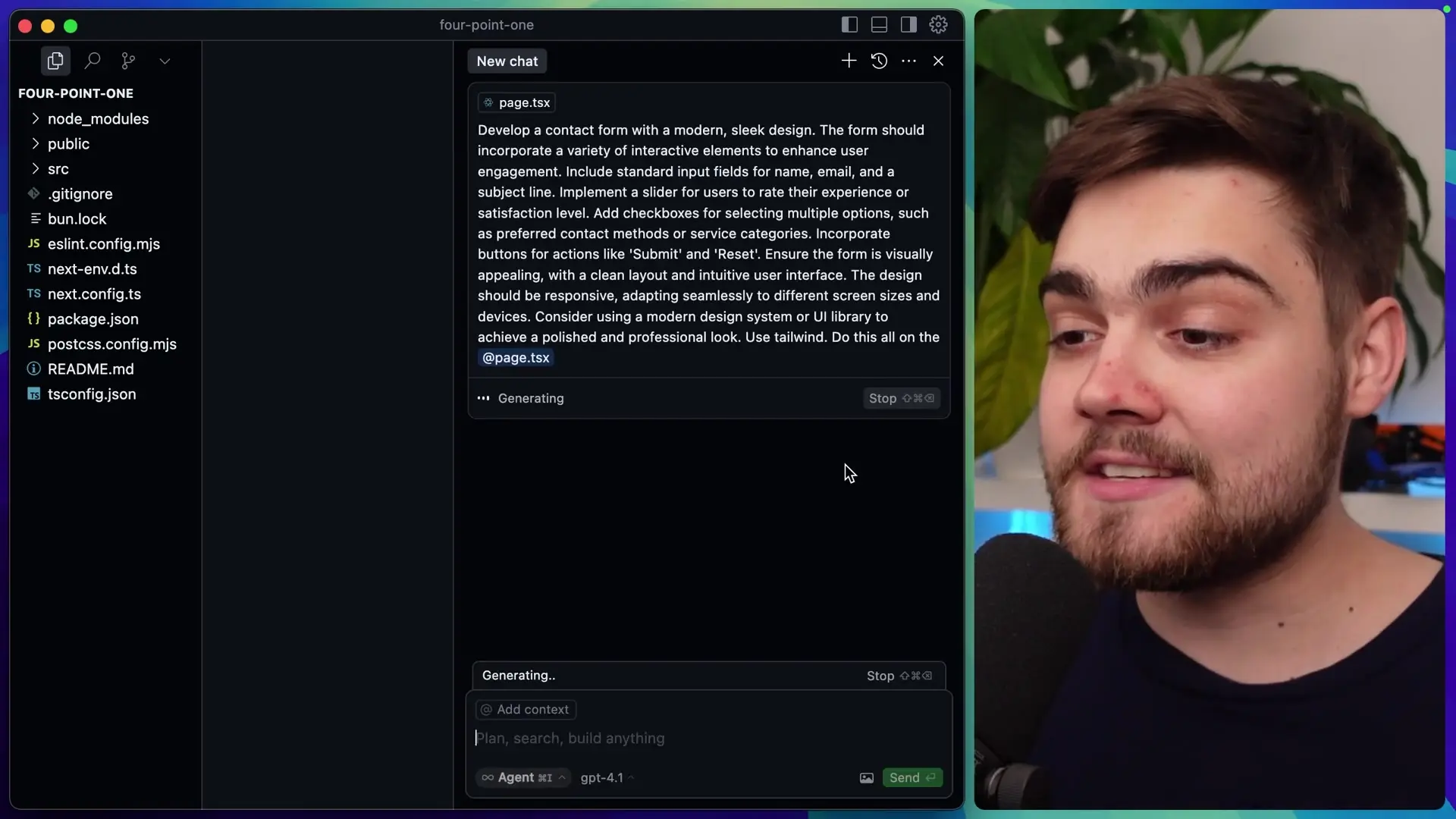

Front-End Development Capabilities: A Practical Test

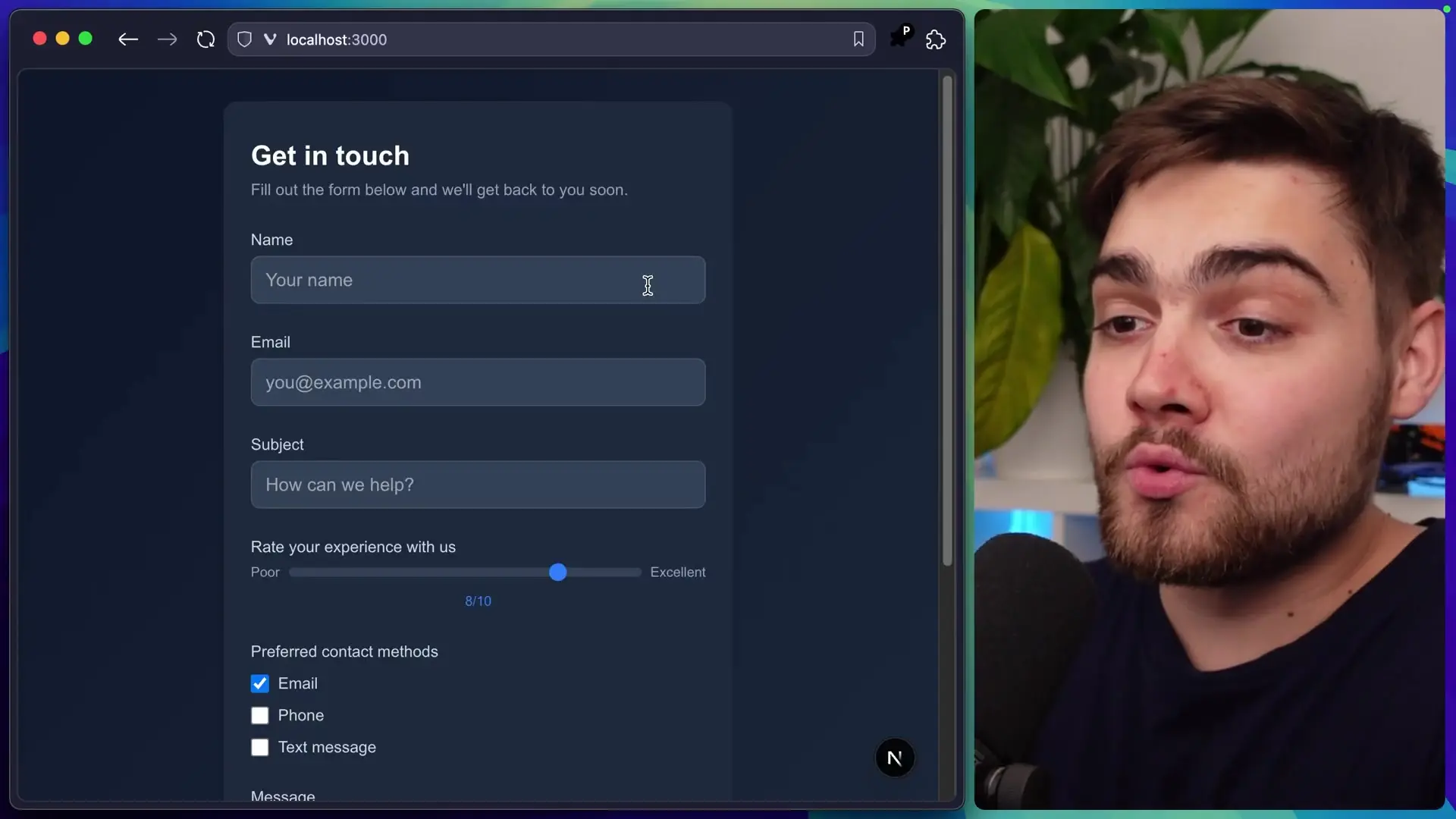

To evaluate GPT-4.1's coding abilities, particularly for front-end development, we can test it with a common task: creating a modern contact form with interactive elements. When prompted to build a contact form using Tailwind CSS in a Next.js environment, GPT-4.1 produces noticeably better results than GPT-4o.
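To reproduce this comparison, you could send the same prompt to both models through OpenAI's Chat Completions API. The sketch below only builds the JSON request bodies; the prompt wording, system message, and `buildContactFormRequest` helper are hypothetical, since the exact prompt used in the test is not given.

```typescript
// Builds Chat Completions request bodies for the contact-form prompt.
// ASSUMPTION: prompt and system message text are illustrative only.
type Role = "system" | "user";

interface ChatMessage {
  role: Role;
  content: string;
}

interface ChatRequest {
  model: string;
  messages: ChatMessage[];
}

// Hypothetical prompt; the article does not publish the exact wording.
const CONTACT_FORM_PROMPT =
  "Create a modern contact form component for a Next.js app using Tailwind CSS. " +
  "Include name, email, and message fields with clear focus states.";

// Same prompt for every model, so the outputs can be compared side by side.
function buildContactFormRequest(model: string): ChatRequest {
  return {
    model,
    messages: [
      { role: "system", content: "You are an expert front-end developer." },
      { role: "user", content: CONTACT_FORM_PROMPT },
    ],
  };
}

const requests = ["gpt-4.1", "gpt-4o"].map(buildContactFormRequest);
console.log(JSON.stringify(requests[0], null, 2));
```

Each body would be POSTed to `https://api.openai.com/v1/chat/completions` with an `Authorization: Bearer <API key>` header, and the generated component read from `choices[0].message.content` in the response.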

The form generated by GPT-4.1 shows a more sophisticated understanding of modern UI design principles. It includes proper focus states for inputs, respects the dark mode setting of the environment, and uses more accessible color contrast. The styling appears to draw inspiration from design systems like shadcn/ui, resulting in a more polished, professional appearance.

```jsx
// Sample of contact form component generated by GPT-4.1
export default function ContactForm() {
  return (
    <div className="w-full max-w-md mx-auto bg-gray-800 rounded-xl shadow-md overflow-hidden p-6">
      <h2 className="text-2xl font-bold text-white mb-6">Contact Us</h2>
      <form className="space-y-4">
        <div>
          <label htmlFor="name" className="block text-sm font-medium text-gray-300 mb-1">Name</label>
          <input
            type="text"
            id="name"
            className="w-full px-3 py-2 bg-gray-700 border border-gray-600 rounded-md text-white focus:outline-none focus:ring-2 focus:ring-blue-500"
            placeholder="Your name"
          />
        </div>
        {/* Additional form elements would follow */}
      </form>
    </div>
  );
}
```

In comparison, GPT-4o produces a more basic implementation with less attention to detail. Its form lacks the refined styling, proper color contrast for accessibility, and the sophisticated focus states present in the GPT-4.1 version.
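"Proper color contrast" can be checked numerically with the WCAG 2.x contrast ratio, which compares the relative luminance of text and background. The sketch below applies it to the color pairs used in the sample component, assuming Tailwind CSS v3's default gray palette hex values (newer Tailwind versions define colors differently).

```typescript
// WCAG 2.x contrast ratio between two sRGB hex colors.
// ASSUMPTION: hex values are Tailwind CSS v3's default gray palette.
const TAILWIND_V3 = {
  white: "#ffffff",
  gray300: "#d1d5db",
  gray700: "#374151",
  gray800: "#1f2937",
} as const;

type Swatch = keyof typeof TAILWIND_V3;

// Convert one 0-255 sRGB channel to linear light, per the WCAG definition.
function channelToLinear(c8: number): number {
  const c = c8 / 255;
  return c <= 0.03928 ? c / 12.92 : Math.pow((c + 0.055) / 1.055, 2.4);
}

// Relative luminance: weighted sum of the linearized R, G, B channels.
function relativeLuminance(hex: string): number {
  const n = parseInt(hex.replace("#", ""), 16);
  const r = channelToLinear((n >> 16) & 0xff);
  const g = channelToLinear((n >> 8) & 0xff);
  const b = channelToLinear(n & 0xff);
  return 0.2126 * r + 0.7152 * g + 0.0722 * b;
}

// Contrast ratio (L1 + 0.05) / (L2 + 0.05), where L1 is the lighter color.
function contrastRatio(a: string, b: string): number {
  const la = relativeLuminance(a);
  const lb = relativeLuminance(b);
  const [hi, lo] = la >= lb ? [la, lb] : [lb, la];
  return (hi + 0.05) / (lo + 0.05);
}

// Foreground/background pairs taken from the sample component's classes.
const pairs: Array<[string, Swatch, Swatch]> = [
  ["label text on card", "gray300", "gray800"],
  ["heading text on card", "white", "gray800"],
  ["input text on input", "white", "gray700"],
];
for (const [label, fg, bg] of pairs) {
  const ratio = contrastRatio(TAILWIND_V3[fg], TAILWIND_V3[bg]);
  console.log(`${label}: ${ratio.toFixed(2)}:1 ${ratio >= 4.5 ? "(meets AA)" : "(below AA)"}`);
}
```

With these assumed hex values, all three pairs clear the 4.5:1 WCAG AA threshold for normal-size text.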

Benchmark Performance: The Numbers Behind the Improvement

OpenAI's launch benchmarks, along with evaluations from partners, show significant improvements across several coding metrics. On Windsurf's internal coding benchmark, GPT-4.1 scored 60% higher than GPT-4o. On SWE-bench Verified, GPT-4.1 completes 54.6% of tasks, compared with just 33.2% for GPT-4o.
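Benchmark gains can be stated as a percentage-point difference or as a relative improvement, and the two are easy to conflate. The short sketch below computes both for the SWE-bench Verified figures above (54.6% vs 33.2%); `compareScores` is a hypothetical helper name.

```typescript
// Absolute (percentage-point) and relative gain between two benchmark scores.
function compareScores(newScore: number, oldScore: number) {
  const pointGain = newScore - oldScore; // difference in percentage points
  const relativeGain = pointGain / oldScore; // gain as a fraction of the old score
  return { pointGain, relativeGain };
}

const { pointGain, relativeGain } = compareScores(54.6, 33.2);
console.log(`${pointGain.toFixed(1)} points, ${(relativeGain * 100).toFixed(0)}% relative`);
```

The SWE-bench gap is 21.4 percentage points, which is roughly a 64% relative improvement; a "60% higher" score, like Windsurf's, reads as a relative figure of this second kind.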

The improvements are particularly notable in several key areas:

- Front-end coding accuracy and style implementation

- Fewer required edits after initial code generation

- Better adherence to diff formats

- More consistent tool usage

- Improved code exploration in repositories

- Higher success rate in producing code that both runs and passes tests
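Diff-format adherence matters because a proposed edit can only be applied if the model reproduces the original code exactly. The sketch below applies a search/replace style edit, similar in spirit to the edit blocks some coding assistants ask models to emit; the `Edit` type and `applyEdit` function are hypothetical and not any specific tool's API.

```typescript
// One model-proposed edit: replace an exact snippet with new text.
interface Edit {
  search: string;
  replace: string;
}

type ApplyResult =
  | { ok: true; text: string }
  | { ok: false; reason: string };

// Apply an edit only if the search text appears exactly once, so a
// misquoted or ambiguous snippet is rejected instead of misapplied.
function applyEdit(source: string, edit: Edit): ApplyResult {
  const first = source.indexOf(edit.search);
  if (first === -1) {
    return { ok: false, reason: "search text not found" };
  }
  if (source.indexOf(edit.search, first + 1) !== -1) {
    return { ok: false, reason: "search text is ambiguous" };
  }
  const text =
    source.slice(0, first) + edit.replace + source.slice(first + edit.search.length);
  return { ok: true, text };
}

// Usage: an exact match applies; a slightly misquoted search text does not.
const file = 'const label = "Name";\nconst color = "gray";\n';
console.log(applyEdit(file, { search: 'color = "gray"', replace: 'color = "blue"' }));
console.log(applyEdit(file, { search: 'colour = "gray"', replace: 'color = "blue"' }));
```

A model that drifts from the original text by even one character produces an edit that fails here, which is one reason better format adherence translates into fewer manual fixes.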

On the Aider Polyglot benchmark, GPT-4.1 more than doubles GPT-4o's score and even outperforms GPT-4.5 by 8%, indicating strong coding capabilities.

Long Context Performance: Million-Token Comprehension

One of the most impressive aspects of the GPT-4.1 family is how effectively the models use their expanded context window. In the "needle in a haystack" benchmark, all three models (GPT-4.1, Mini, and Nano) successfully retrieve information placed at any position within their million-token context window.
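A needle-in-a-haystack test hides one distinctive fact in long filler text at a chosen depth, then asks the model to retrieve it. The sketch below builds such prompts at several depths; the filler, needle, and simple substring grading are hypothetical stand-ins, since published evaluations use their own corpora and scoring.

```typescript
// Insert a "needle" sentence at a relative depth (0 = start, 1 = end)
// inside a list of filler sentences, and join them into one prompt body.
function buildHaystack(filler: string[], needle: string, depth: number): string {
  if (depth < 0 || depth > 1) throw new RangeError("depth must be in [0, 1]");
  const insertAt = Math.round(depth * filler.length);
  const parts = [...filler.slice(0, insertAt), needle, ...filler.slice(insertAt)];
  return parts.join(" ");
}

// Simplified grading: the answer counts if it contains the expected value.
function isCorrect(response: string, expected: string): boolean {
  return response.toLowerCase().includes(expected.toLowerCase());
}

// Hypothetical filler and needle; a real run would scale the filler up to
// the target context length.
const filler = Array.from({ length: 1000 }, (_, i) => `Filler sentence number ${i}.`);
const needle = "The secret launch code is PLUM-42.";
for (const depth of [0, 0.25, 0.5, 0.75, 1]) {
  const haystack = buildHaystack(filler, needle, depth);
  // In a real run, haystack + "What is the secret launch code?" is sent to the model
  // and the reply is graded with isCorrect(reply, "PLUM-42").
  console.log(depth, haystack.indexOf(needle));
}
```

Sweeping both the depth and the total length produces the grid of results that "retrieves from any position" summarizes.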

On OpenAI's MRCR (multi-round coreference resolution) benchmark, GPT-4.1 outperforms GPT-4o at every context length up to 128,000 tokens, GPT-4o's limit, and continues to perform well all the way up to the full million tokens. This represents a significant advancement in AI's ability to process and comprehend large volumes of information.

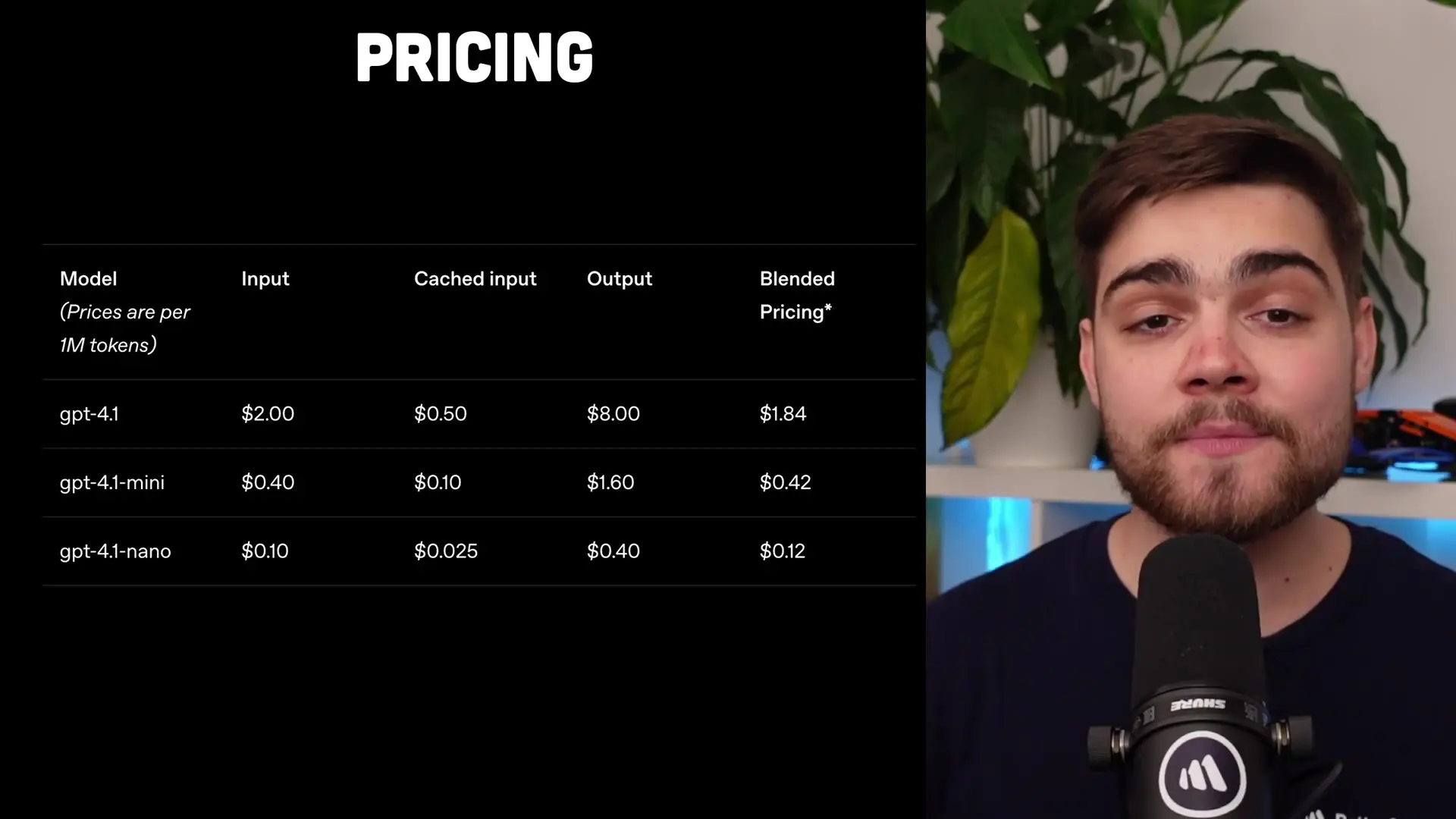

Pricing: Competitive Rates for Enhanced Performance

Perhaps one of the most compelling aspects of the GPT-4.1 family is its pricing structure, which makes these advanced capabilities more accessible.

- GPT-4.1: $2 per million input tokens and $8 per million output tokens

- GPT-4.1 Mini: $0.40 per million input tokens and $1.60 per million output tokens

- GPT-4.1 Nano: $0.10 per million input tokens and $0.40 per million output tokens
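To see what these rates mean per request, the sketch below turns the per-million-token prices listed above into a cost estimate. It uses the API model ids `gpt-4.1`, `gpt-4.1-mini`, and `gpt-4.1-nano`, and ignores any caching or batch discounts, so treat it as a rough upper-bound calculator rather than a billing tool.

```typescript
// Per-million-token prices in USD, as listed above.
const PRICES = {
  "gpt-4.1": { input: 2.0, output: 8.0 },
  "gpt-4.1-mini": { input: 0.4, output: 1.6 },
  "gpt-4.1-nano": { input: 0.1, output: 0.4 },
} as const;

type Model = keyof typeof PRICES;

// Cost = (tokens / 1,000,000) * price per million, for input plus output.
function requestCost(model: Model, inputTokens: number, outputTokens: number): number {
  const p = PRICES[model];
  return (inputTokens / 1_000_000) * p.input + (outputTokens / 1_000_000) * p.output;
}

// Example: filling the full 1M-token window and getting a 2,000-token answer.
for (const model of Object.keys(PRICES) as Model[]) {
  console.log(model, `$${requestCost(model, 1_000_000, 2_000).toFixed(4)}`);
}
```

Even a prompt that fills the entire million-token window costs about $2 of input on GPT-4.1 and about $0.10 on Nano at these rates.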

These rates position the GPT-4.1 family as strong competitors to Anthropic's Claude 3.5 Sonnet and 3.7 Sonnet models, which are considerably more expensive. The introduction of GPT-4.1 Nano is particularly noteworthy, as it is OpenAI's fastest and most cost-effective model to date.

Instruction Following and Reasoning

Beyond coding, GPT-4.1 shows significant improvements in instruction following, especially with complex prompts. It nearly matches GPT-4.5's performance on difficult instruction-following and reasoning benchmarks, including Scale's MultiChallenge benchmark.

This enhanced ability to understand and execute complex instructions makes GPT-4.1 more reliable for a wider range of applications, from data analysis to content creation and beyond.

Conclusion: A New Standard for AI Development Tools

The GPT-4.1 family represents a significant advancement in AI capabilities, particularly for developers. With exceptional performance in coding, instruction following, and long-context comprehension, all at competitive price points, these models set a new standard for AI development tools.

For front-end developers especially, GPT-4.1's improved understanding of modern design principles and frameworks like Tailwind CSS makes it an invaluable assistant for creating polished, accessible user interfaces. The combination of enhanced capabilities and competitive pricing positions the GPT-4.1 family as the go-to choice for a wide range of AI-assisted development tasks.
