OpenAI has released GPT-4.1, a non-reasoning multimodal model with a 1 million token context window, the largest OpenAI has offered to date. The release is a significant step forward in context capacity, particularly for developers working with large codebases or extensive documentation.

Key Features of GPT-4.1's Context Window

The most notable feature of GPT-4.1 is its massive context window size, which allows it to process up to 1 million tokens in a single interaction. This represents a substantial increase over previous GPT models and positions it as a powerful tool for tasks requiring extensive context retention.

Interestingly, the version number moves backward with this release, from GPT-4.5 to GPT-4.1. One possible reading is that OpenAI had raised expectations for GPT-5 too high and is now positioning GPT-4.1 as a more measured advancement in its model lineup.

Developer-Focused Performance Benchmarks

GPT-4.1 is specifically tailored for developers, with strong performance on coding benchmarks. On SWE-bench Verified (a Python-focused software engineering benchmark), it achieves 55% accuracy, the best result among OpenAI's current model lineup. On Aider's polyglot benchmark, which tests how well a model writes and edits code, it scores 53%, placing ninth overall and third among non-reasoning models.

These benchmarks indicate that GPT-4.1 excels in code generation and understanding, making it particularly valuable for software development workflows.

GPT-4.1 Model Variants and Pricing Structure

The GPT-4.1 release includes three different model sizes to accommodate various needs and budgets:

- GPT-4.1: $2 per 1 million input tokens and $8 per 1 million output tokens

- GPT-4.1 Mini: 40 cents per 1 million input tokens and $1.60 per 1 million output tokens

- GPT-4.1 Nano: 10 cents per 1 million input tokens and 40 cents per 1 million output tokens

This pricing structure makes GPT-4.1 one of the most affordable high-performance models OpenAI has released. The pricing is particularly competitive when the KV cache can be reused, that is, when the same context is sent repeatedly across requests, which significantly reduces costs for applications that query one large context many times.
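To make the per-token prices concrete, here is a minimal sketch of a cost estimator. The dollar figures come from the list above; the dictionary keys and the function name `estimate_cost` are illustrative choices, and the calculation deliberately ignores any cache discount because no discount rate is quoted here.

```python
# Prices in USD per 1 million tokens, copied from the list above.
# The keys are labels chosen for this sketch.
PRICES_PER_MILLION = {
    "gpt-4.1": {"input": 2.00, "output": 8.00},
    "gpt-4.1-mini": {"input": 0.40, "output": 1.60},
    "gpt-4.1-nano": {"input": 0.10, "output": 0.40},
}


def estimate_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Return the estimated USD cost of one request, with no cache discount."""
    price = PRICES_PER_MILLION[model]
    total = input_tokens * price["input"] + output_tokens * price["output"]
    return total / 1_000_000


if __name__ == "__main__":
    # A full 1M-token prompt with a short 2,000-token answer.
    for model in PRICES_PER_MILLION:
        cost = estimate_cost(model, input_tokens=1_000_000, output_tokens=2_000)
        print(f"{model}: ${cost:.4f}")
```

Filling the entire context window once costs about $2 on the full model and about 10 cents on Nano, so for long-context workloads the input side dominates the bill.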

API-Only Access: Important Considerations

A crucial detail for potential users is that GPT-4.1 is currently only available through API access. This means that even ChatGPT Plus subscribers ($20/month) cannot access this model through the ChatGPT interface. This API-only approach underscores OpenAI's focus on developer use cases rather than general consumer applications.
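In practice, API-only access means sending HTTP requests with an API key. The sketch below builds such a request with Python's standard library against OpenAI's Chat Completions endpoint. The helper names `build_request` and `send` are hypothetical, the model identifier `gpt-4.1` is assumed to match the name used in this article, and error handling is omitted for brevity.

```python
import json
import urllib.request

API_URL = "https://api.openai.com/v1/chat/completions"


def build_request(prompt: str, api_key: str, model: str = "gpt-4.1") -> urllib.request.Request:
    """Build (but do not send) a chat completion request."""
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def send(request: urllib.request.Request) -> str:
    """Send the request and return the text of the first choice."""
    with urllib.request.urlopen(request) as response:
        payload = json.load(response)
    return payload["choices"][0]["message"]["content"]
```

Calling `send(build_request("Summarize this repository.", api_key))` requires a valid key and network access. Most projects would likely use an official SDK instead of raw HTTP, but the request shape is the same.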

Multimodal Capabilities and Performance

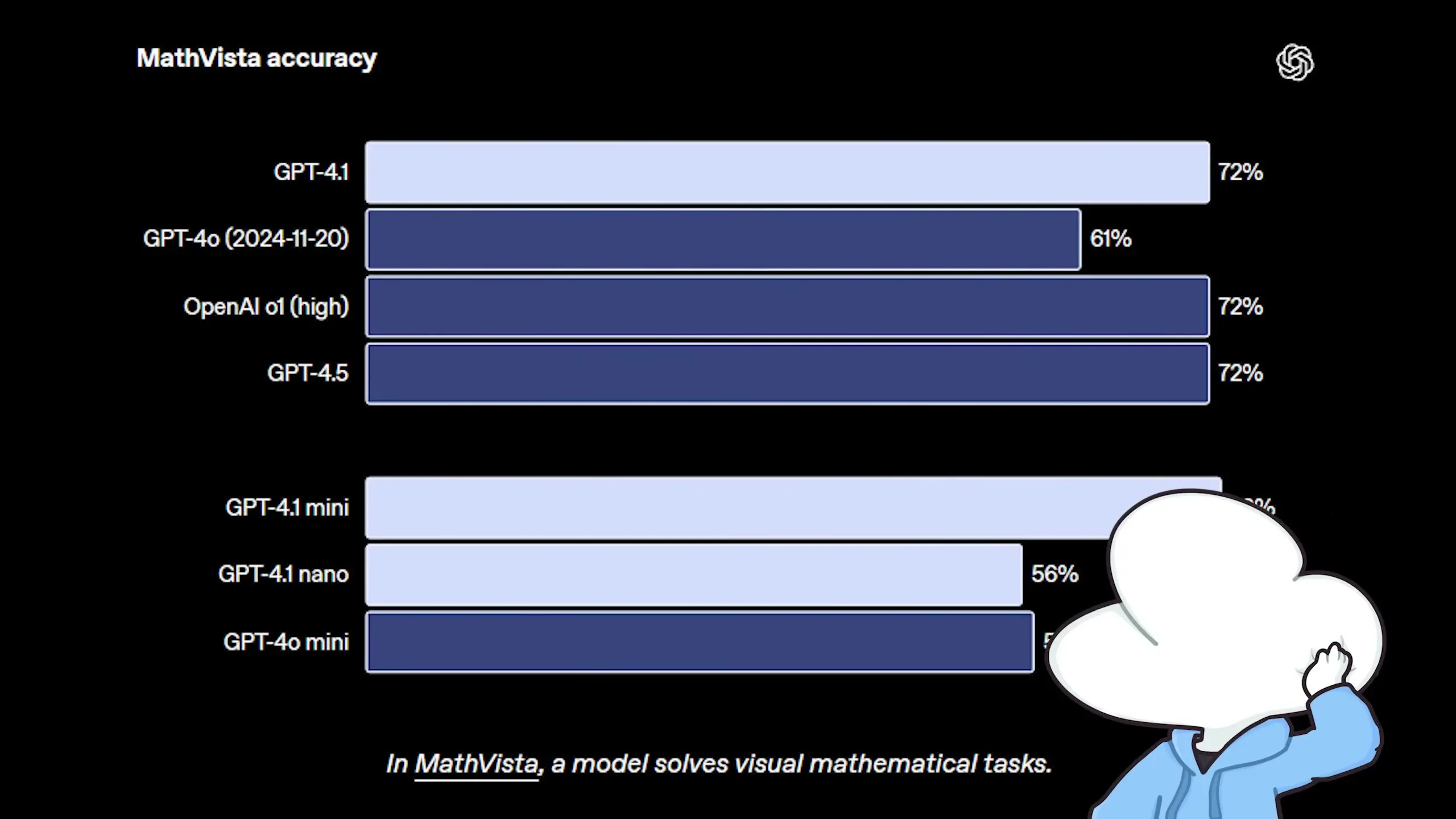

Beyond its extensive context window, GPT-4.1 offers multimodal capabilities, accepting inputs like images and videos. On MathVista, a visual benchmark for math questions, it scores 72%. While this is respectable, it places below Llama 4 Maverick and roughly level with Gemini 2.0 Flash.

For general visual problem-solving on the MMMU benchmark, GPT-4.1 achieves 75% accuracy, the third-highest score after o1 (high) and Llama 4 Behemoth (excluding the newer Kimi k1.6 model).

Context Window Performance Compared to Competitors

While the 1 million token context window is impressive, what matters is how well the model retrieves information from it. On MRCR, OpenAI's new long-context benchmark, GPT-4.1 achieves approximately 50% accuracy in the range from 100K to 1 million tokens. On Fiction.LiveBench, it reaches around 60% at 120K tokens.

By comparison, Gemini 2.5 Pro achieves around 90% on similar benchmarks, suggesting that while GPT-4.1's context window is large, its retrieval capabilities may not match the best available alternatives.

GPT-4.1 vs. Competing Models: A Comparative Analysis

When comparing GPT-4.1 to other available models, several interesting contrasts emerge:

- DeepSeek V3-0324: Outperforms GPT-4.1 on Aider's benchmark and is 7.5 times cheaper, with additional 50% discounts during off-peak hours. However, it lacks GPT-4.1's multimodal capabilities and has a smaller context window.

- Gemini 2.5 Pro: Costs $1.25 per 1 million input tokens (for prompts under 200K tokens) and $10 per 1 million output tokens. While slightly more expensive on output, it delivers state-of-the-art performance across most benchmarks. Its main disadvantages are slower processing speed and higher costs for large context windows.

GPT-4.1's competitive advantages include its cheaper pricing for very large context windows and faster token output speed as a non-reasoning model.
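Because Gemini 2.5 Pro is cheaper on input but more expensive on output, which model costs less depends on the shape of the request. The following sketch compares list prices only, using the figures quoted above and restricting itself to prompts under 200K tokens, the only Gemini tier priced here. The function names are illustrative, and the comparison ignores caching, discounts, and quality differences.

```python
# List prices in USD per 1 million tokens, as quoted in this article.
GPT_41 = {"input": 2.00, "output": 8.00}
GEMINI_25_PRO_UNDER_200K = {"input": 1.25, "output": 10.00}


def request_cost(prices: dict, input_tokens: int, output_tokens: int) -> float:
    """Return the USD list-price cost of a single request."""
    total = input_tokens * prices["input"] + output_tokens * prices["output"]
    return total / 1_000_000


def cheaper_model(input_tokens: int, output_tokens: int) -> str:
    """Name the cheaper model for a prompt under 200K tokens."""
    if input_tokens >= 200_000:
        raise ValueError("Only the under-200K Gemini price tier is modeled here.")
    gpt = request_cost(GPT_41, input_tokens, output_tokens)
    gemini = request_cost(GEMINI_25_PRO_UNDER_200K, input_tokens, output_tokens)
    if gpt == gemini:
        return "tie"
    return "GPT-4.1" if gpt < gemini else "Gemini 2.5 Pro"


if __name__ == "__main__":
    print(cheaper_model(100_000, 1_000))  # input-heavy request
    print(cheaper_model(10_000, 10_000))  # output-heavy request
```

Setting the two costs equal gives a break-even point of 2 / 0.75, or about 2.7 input tokens per output token. Below 200K tokens, input-heavy requests favor Gemini 2.5 Pro on list price, while output-heavy requests favor GPT-4.1.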

The Deprecation of GPT-4.5

Perhaps the most surprising aspect of the GPT-4.1 announcement is OpenAI's decision to deprecate GPT-4.5. The official reason given is to free up the GPUs dedicated to serving GPT-4.5. Given that GPT-4.5's API price was roughly 37 times that of GPT-4.1, it appears to have been a resource-intensive model with limited practical applications.

Industry speculation suggests GPT-4.5 might have been a failed training run for GPT-5, with its high costs and lack of strong use cases making it impractical to maintain alongside the more efficient GPT-4.1. The API version is being deprecated immediately, though the ChatGPT version will remain available for now.

Implementation Considerations for Developers

For developers considering GPT-4.1 integration, several factors should influence your decision:

- Project budget: GPT-4.1 offers competitive pricing, especially for the Mini and Nano variants, making it accessible for projects with limited resources.

- Context window requirements: If your application requires processing large documents or codebases in a single context, GPT-4.1's 1 million token window provides significant advantages.

- Multimodal needs: While not leading in all visual benchmarks, GPT-4.1 offers solid multimodal capabilities that may be sufficient for many applications.

- Speed requirements: As a non-reasoning model, GPT-4.1 offers faster token generation than some competitors like Gemini 2.5 Pro.

- API-only limitations: Consider whether your application architecture can accommodate API-only access rather than ChatGPT interface integration.
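For the context window question in particular, a quick pre-flight check can tell you whether a set of documents is likely to fit in one request. This is a rough sketch: the ratio of four characters per token is an assumed rule of thumb for English text, not a real tokenizer count, and the function names and the 8,000-token output reserve are hypothetical values chosen for illustration.

```python
import math
from typing import Iterable

CONTEXT_LIMIT_TOKENS = 1_000_000  # GPT-4.1 window size stated in this article
CHARS_PER_TOKEN = 4.0  # assumption: rough average for English text


def estimate_tokens(text: str, chars_per_token: float = CHARS_PER_TOKEN) -> int:
    """Approximate the token count of a string from its character length."""
    return math.ceil(len(text) / chars_per_token)


def fits_in_context(
    documents: Iterable[str],
    reserved_output_tokens: int = 8_000,  # hypothetical headroom for the reply
    limit: int = CONTEXT_LIMIT_TOKENS,
) -> bool:
    """Return True if the documents plus the output reserve fit in the window."""
    total = sum(estimate_tokens(doc) for doc in documents)
    return total + reserved_output_tokens <= limit


if __name__ == "__main__":
    sample = ["def main():\n    pass\n" * 1_000, "README text " * 5_000]
    print(fits_in_context(sample))
```

For a firm answer, count tokens with the tokenizer that matches the model instead of relying on this estimate, since code and non-English text can deviate substantially from the assumed ratio.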

Conclusion: Is GPT-4.1 Right for Your Development Needs?

GPT-4.1 represents a significant advancement in OpenAI's model lineup, particularly for developers requiring large context windows and strong coding capabilities. While it doesn't lead in every benchmark category, its combination of a massive context window, competitive pricing, multimodal capabilities, and strong coding performance makes it a compelling option for many development scenarios.

The model's focus on developer use cases through API-only access signals OpenAI's strategic direction toward serving technical users with specialized needs. For projects requiring extensive context processing at reasonable costs, GPT-4.1 offers a balanced combination of performance and affordability that may be difficult to match with alternative models.

As with any AI model selection, the best choice will depend on your specific requirements, budget constraints, and performance priorities. GPT-4.1's introduction expands the options available to developers and continues the rapid evolution of capabilities in the AI development landscape.
