AI coding agents represent the next evolution in developer assistance tools, going beyond what traditional large language models (LLMs) offer. While many developers have become comfortable asking ChatGPT for code snippets or explanations, AI coding agents such as JetBrains' Junie go a step further: they formulate an action plan and then execute it inside your development environment.

What Makes AI Coding Agents Different from Regular LLMs

The key distinction between an AI coding agent and a standard large language model is autonomy. While both utilize powerful language models at their core, an agent can formulate plans and take actions independently. This means that rather than simply generating text responses, coding agents can interact with your development environment, analyze your codebase, and implement solutions directly.

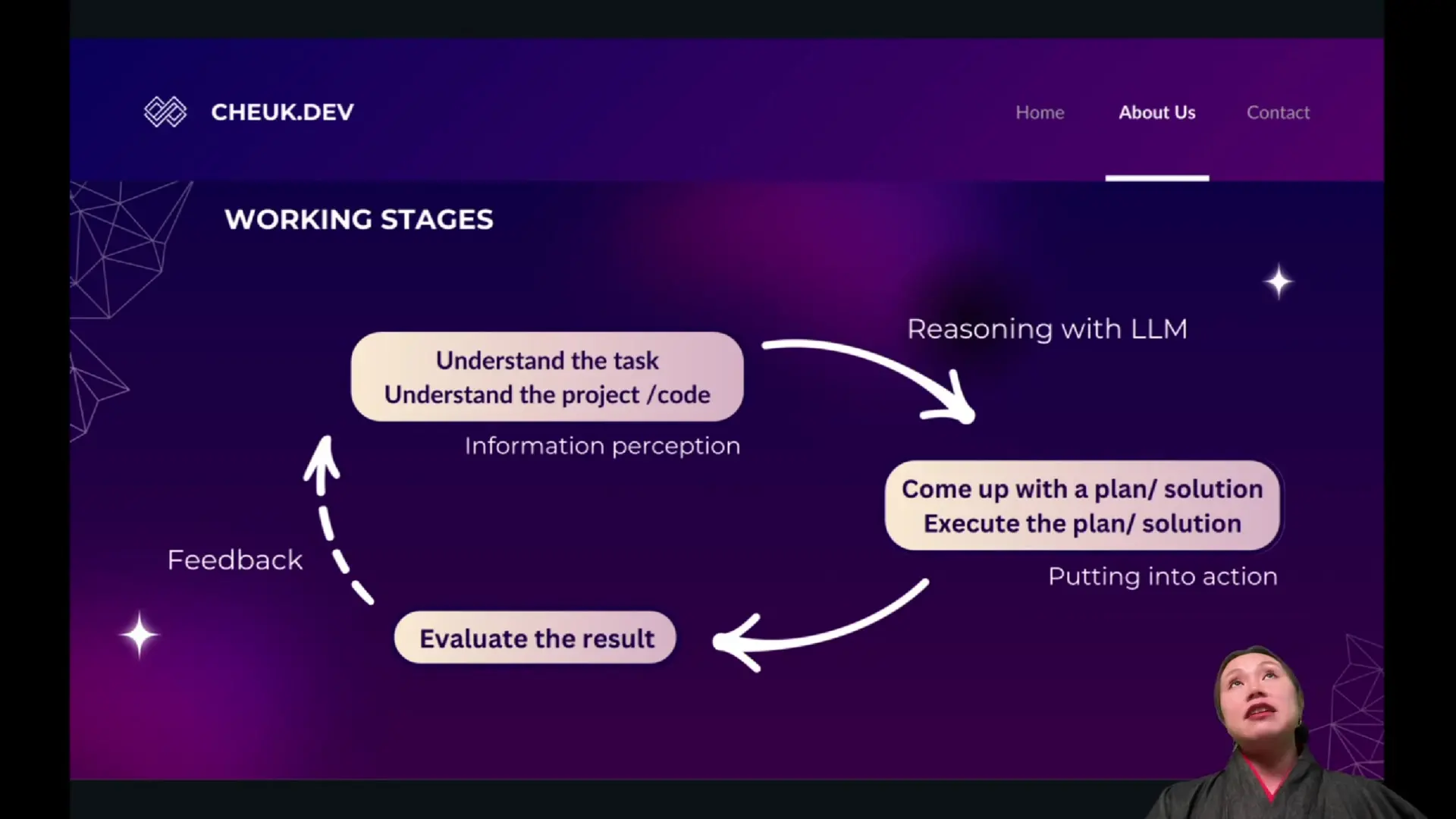

The architecture behind these systems follows a cyclical pattern. Rather than a single prompt followed by a single response, the agent repeatedly gathers context, plans, acts, and checks the outcome. These stages work together to make the agent a more capable development assistant than a plain chat interface.

The Four-Stage Architecture of AI Coding Agents

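AI coding agents operate through a four-stage process: they take in your requirements and your environment, formulate a plan, execute it, and adapt based on the results. Because the last stage can feed back into planning, the agent can recover from mistakes instead of stopping after its first attempt, which is what makes this architecture well suited to coding tasks.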
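To make the cycle concrete, here is a minimal sketch of the loop in Python. The four stage functions (`perceive`, `plan`, `act`, `evaluate`) are hypothetical placeholders passed in as callables; in a real agent, `plan` and `evaluate` would be backed by LLM calls. The `max_rounds` cap is an assumption added so the loop cannot run forever.

```python
from typing import Callable


def run_agent(
    request: str,
    perceive: Callable[[], dict],
    plan: Callable[[str, dict, str], list],
    act: Callable[[list], list],
    evaluate: Callable[[str, list], tuple[bool, str]],
    max_rounds: int = 3,
) -> tuple[bool, list]:
    """Drive the perceive -> plan -> act -> evaluate cycle (hypothetical stages)."""
    context = perceive()  # Stage 1: gather environment context once
    feedback = ""  # No feedback exists before the first attempt
    results: list = []
    for _ in range(max_rounds):
        steps = plan(request, context, feedback)  # Stage 2: ask for a plan
        results = act(steps)  # Stage 3: carry out the steps
        done, feedback = evaluate(request, results)  # Stage 4: check the outcome
        if done:
            return True, results
    return False, results  # Gave up after max_rounds attempts


# Usage with stub stages: the first plan has a typo, and the feedback fixes it
ok, out = run_agent(
    "print a greeting",
    perceive=lambda: {"os": "Linux"},
    plan=lambda req, ctx, fb: ["echo hi"] if fb else ["ech hi"],
    act=lambda steps: steps,  # Pretend execution just echoes the steps back
    evaluate=lambda req, res: (res == ["echo hi"], "command 'ech' not found"),
)
print(ok, out)  # prints: True ['echo hi']
```

The stubs only stand in for real stages; the point is that evaluation feedback flows back into the next planning call.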

1. Information Perception and Context Setting

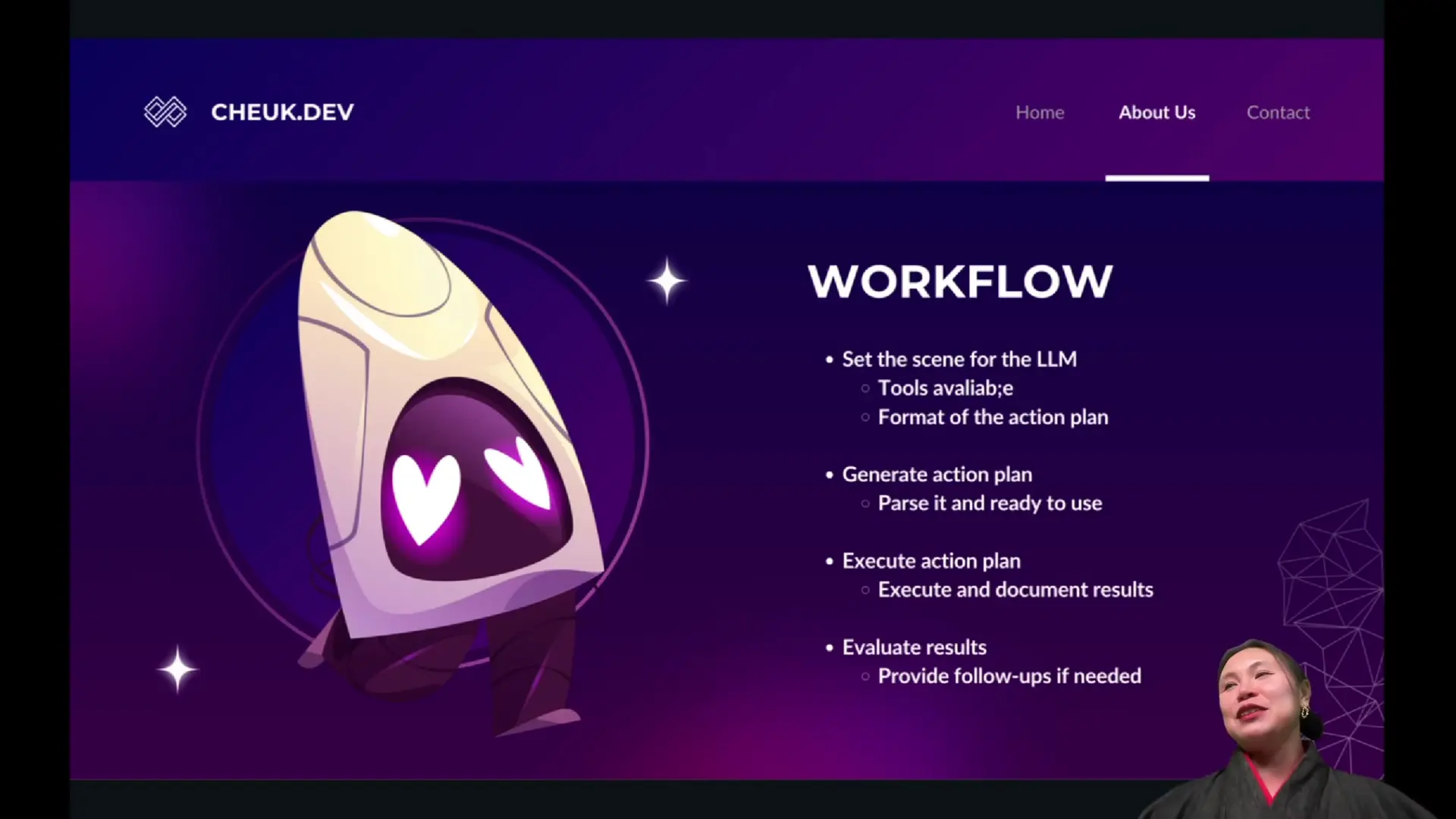

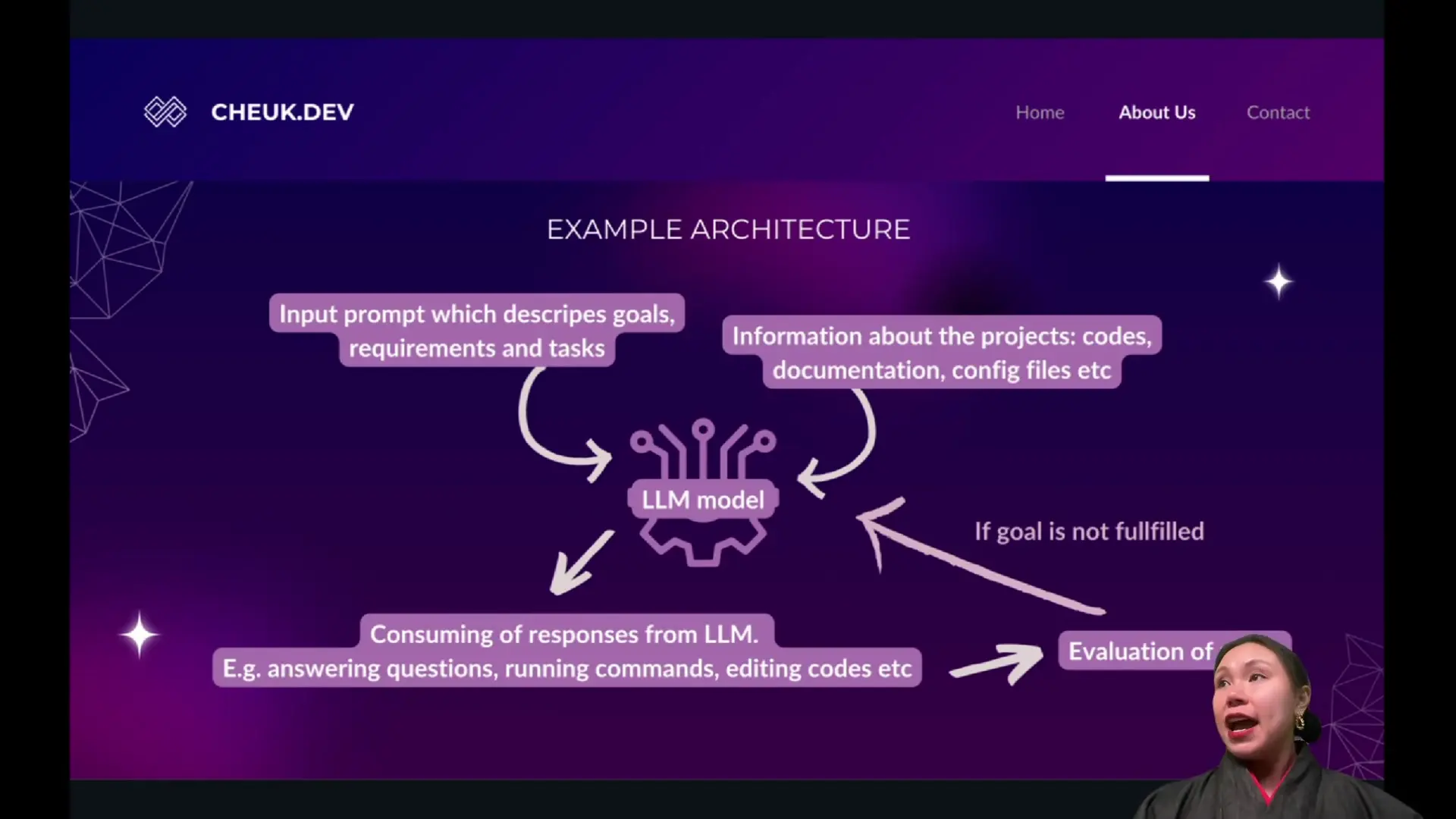

When you provide a prompt to an AI coding agent, it doesn't simply forward your request to the underlying LLM. Instead, it performs crucial context-setting work, creating what's sometimes called a 'script' that helps the LLM understand the environment it's working in.

This context includes critical information such as:

- Available tools and commands, which depend on details such as the operating system you're using

- Permissions and limitations (what actions the agent can and cannot perform)

- Access to file systems and code repositories

- Programming languages and frameworks in use

- Environment variables and configurations

This stage is crucial because LLMs, despite their broad knowledge, don't inherently know what's available on your specific machine or in your development environment. By providing this context, the agent ensures that the LLM generates relevant and executable plans.
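A sketch of the context-setting stage using only the Python standard library. The function names `build_context` and `system_prompt`, the candidate tool list, and the prompt wording are assumptions for illustration; a production agent would gather much richer project context.

```python
import os
import platform
import shutil


def build_context(candidate_tools=("git", "python3", "npm", "docker"), env_keys=("VIRTUAL_ENV",)):
    """Collect facts about the local machine that the LLM cannot know on its own."""
    return {
        "os": platform.system(),  # e.g. "Linux", "Darwin", "Windows"
        "python": platform.python_version(),
        "cwd": os.getcwd(),
        # Keep only the tools that are actually on PATH
        "tools": [t for t in candidate_tools if shutil.which(t)],
        # Expose selected environment variables only, never the whole environment
        "env": {k: os.environ[k] for k in env_keys if k in os.environ},
    }


def system_prompt(context):
    """Render the context as the 'script' sent ahead of the user's request."""
    lines = [f"- {key}: {value}" for key, value in context.items()]
    return (
        "You are a coding agent working on this machine:\n"
        + "\n".join(lines)
        + "\nOnly use the tools listed above."
    )


print(system_prompt(build_context()))
```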

2. Solution Planning with the LLM

With the context established, the agent then uses the LLM to generate a step-by-step plan for your requested task. This plan outlines the specific actions needed to fulfill your requirements, much like the multi-step approach Junie lays out when helping with a coding task.
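Once the LLM replies, the agent has to turn free text into discrete steps. The sketch below assumes the model was asked to answer with a numbered list; the regular expression, the function name `parse_numbered_plan`, and the sample reply are illustrative assumptions, not the format any particular agent uses.

```python
import re

# Matches lines such as "1. Create tests" or "2) Run pytest"
STEP_PATTERN = re.compile(r"^\s*(\d+)[.)]\s+(.*\S)\s*$")


def parse_numbered_plan(reply: str) -> list[str]:
    """Extract the ordered step descriptions from a numbered-list reply."""
    steps = []
    for line in reply.splitlines():
        match = STEP_PATTERN.match(line)
        if match:
            steps.append(match.group(2))  # Keep the text, drop the number
    return steps


sample_reply = """Here is my plan:
1. Open utils.py
2. Add a slugify() function
3) Run the test suite
Let me know if you want changes."""

print(parse_numbered_plan(sample_reply))
# prints: ['Open utils.py', 'Add a slugify() function', 'Run the test suite']
```

Lines that are not numbered steps, such as the model's preamble and sign-off, are simply ignored.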

It's worth noting that the knowledge cutoff of the underlying LLM can affect the quality of these plans. For instance, at the time of writing Junie used a model with knowledge up to April 2024, which means it may not be aware of libraries, frameworks, or programming techniques released after that date.
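One way to soften the knowledge-cutoff problem is to tell the model which library versions are actually installed, so its plan targets what exists rather than what it remembers. This is an illustrative mitigation, not something the source attributes to Junie; the function name and package names are arbitrary examples.

```python
from importlib import metadata


def installed_versions(packages):
    """Report installed versions so the LLM need not guess from its training data."""
    report = {}
    for name in packages:
        try:
            report[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            report[name] = "not installed"
    return report


print(installed_versions(["pip", "requests", "no-such-package-xyz"]))
```

The resulting dictionary can be appended to the context that is sent to the LLM ahead of the user's request.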

3. Action Execution

This is where AI coding agents truly differentiate themselves from standard LLMs. Rather than simply providing you with instructions or code snippets, the agent actually implements the plan by executing commands, creating or modifying files, and interacting with your development environment.

The execution stage translates the text-based plan from the LLM into concrete actions in your development environment. This requires the agent to have a clear understanding of the available tools and permissions established in the first stage.
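A minimal sketch of a guarded execution step, assuming each planned action is a shell command given as an argument list. The allowlist check stands in for the permissions set during context setting; `run_step` and the result dictionary shape are hypothetical.

```python
import subprocess
import sys


def run_step(command, allowed, timeout=30):
    """Run one planned command, but only if its program is allow-listed."""
    if not command or command[0] not in allowed:
        raise PermissionError(f"Command not permitted: {command!r}")
    completed = subprocess.run(
        command,  # An argument list, so no shell interprets the text
        capture_output=True,  # Keep output so the evaluation stage can inspect it
        text=True,
        timeout=timeout,  # Never let a hung command block the agent forever
    )
    return {
        "returncode": completed.returncode,
        "stdout": completed.stdout,
        "stderr": completed.stderr,
    }


# Allow only the current Python interpreter for this demo
result = run_step([sys.executable, "-c", "print('hello from the agent')"], {sys.executable})
print(result["returncode"], result["stdout"].strip())  # prints: 0 hello from the agent
```

Passing an argument list instead of a shell string means text produced by the LLM is never parsed by a shell.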

4. Result Evaluation and Adaptation

The final stage evaluates whether the executed plan achieved the desired outcome. Unlike simpler systems that stop after execution, more sophisticated AI coding agents such as Junie can assess the results and adapt their approach if necessary.

If the initial plan doesn't work as expected, the agent can formulate alternative approaches or correct its course. This feedback loop is what makes AI coding agents particularly powerful - they don't just try once and give up, but can iterate toward a solution.
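Evaluation can be as simple as checking a command's exit code. The sketch below assumes execution results are dictionaries with `returncode` and `stderr` keys (a hypothetical format) and turns a failure into feedback for the next planning round. Agents often also ask the LLM itself to judge the outcome, as the `evaluate_results` method in the implementation later in this article does.

```python
def evaluate_step(result, max_error_chars=500):
    """Decide success from an execution result and build feedback for replanning."""
    if result["returncode"] == 0:
        return True, ""
    # Keep only the tail of stderr: that is usually where the real error is
    error_tail = result["stderr"][-max_error_chars:]
    return False, f"Exit code {result['returncode']}. Error output:\n{error_tail}"


def replan_prompt(request, previous_plan, feedback):
    """Ask the LLM for a corrected plan, showing what went wrong last time."""
    return (
        f"Original request: {request}\n"
        f"Previous plan:\n{previous_plan}\n"
        f"It failed with this feedback:\n{feedback}\n"
        "Propose a corrected step-by-step plan."
    )


ok, feedback = evaluate_step(
    {"returncode": 1, "stdout": "", "stderr": "ModuleNotFoundError: No module named 'requests'"}
)
if not ok:
    print(replan_prompt("Fetch the README", "1. Run fetch.py", feedback))
```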

Key Components in AI Coding Agent Architecture Design

Building an effective AI coding agent requires careful consideration of several architectural components that work together to create a seamless experience:

- Tool Integration Framework: Allows the agent to interact with various development tools, IDEs, and command-line interfaces

- Context Management System: Maintains awareness of the current state of the codebase, project structure, and development environment

- Planning Engine: Transforms user requests into structured action plans using the underlying LLM

- Execution Engine: Safely implements the planned actions within the constraints of the system

- Feedback Mechanism: Evaluates the results of actions and determines whether goals have been met

- Adaptation System: Modifies plans based on feedback and changing requirements
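A Tool Integration Framework can start as little more than a registry mapping tool names to functions, which also yields the list of tools the planner is allowed to use. A minimal sketch with a hypothetical `tool` decorator and two illustrative file tools:

```python
import tempfile
from pathlib import Path
from typing import Callable

TOOLS: dict[str, Callable[..., str]] = {}


def tool(func):
    """Register a function so the agent can discover and call it by name."""
    TOOLS[func.__name__] = func
    return func


@tool
def read_file(path: str) -> str:
    """Return the text contents of a file."""
    return Path(path).read_text()


@tool
def write_file(path: str, content: str) -> str:
    """Overwrite a file with new contents."""
    Path(path).write_text(content)
    return f"wrote {len(content)} characters to {path}"


def describe_tools():
    """Produce the tool list that context setting hands to the LLM."""
    return "\n".join(f"{name}: {fn.__doc__}" for name, fn in TOOLS.items())


def call_tool(name, **params):
    """Dispatch a planned step; unknown names are rejected rather than guessed."""
    if name not in TOOLS:
        return f"Error: Tool {name} not available"
    return TOOLS[name](**params)


print(describe_tools())
demo_path = str(Path(tempfile.mkdtemp()) / "demo.txt")
print(call_tool("write_file", path=demo_path, content="hello"))
print(call_tool("read_file", path=demo_path))
print(call_tool("delete_repo"))  # A hallucinated tool name is refused
```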

Implementing a Simple AI Coding Agent

While professional tools like Junie involve sophisticated implementations of these concepts, you can build a simplified version to understand the core principles. A basic implementation might look like this:

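```python
import json
from dataclasses import dataclass, field

from openai import OpenAI  # OpenAI Python SDK, version 1.x


@dataclass
class Step:
    """One planned action: which tool to call and with what parameters."""

    tool: str
    params: dict = field(default_factory=dict)


class SimpleCodingAgent:
    def __init__(self, api_key, tools, model="gpt-4"):
        self.client = OpenAI(api_key=api_key)
        self.tools = tools  # Available actions: a dict of tool name -> Python callable
        self.model = model
        self.context = {}

    def set_scene(self, user_prompt):
        """Prepare context for the LLM."""
        # Assumption: the plan is requested as JSON so that _parse_plan can read it
        system_message = (
            f"You are a coding assistant with access to these tools: {list(self.tools)}.\n"
            "You can only use the tools listed. Generate a step-by-step plan to solve "
            "the user's request as a JSON array of objects shaped like "
            '{"tool": "<name>", "params": {...}}. Reply with the JSON only.'
        )
        return system_message, user_prompt

    def generate_plan(self, system_message, user_prompt):
        """Use the LLM to create an action plan."""
        response = self.client.chat.completions.create(
            model=self.model,
            messages=[
                {"role": "system", "content": system_message},
                {"role": "user", "content": user_prompt},
            ],
        )
        return response.choices[0].message.content

    def execute_plan(self, plan):
        """Implement the plan steps."""
        # Parse the plan into executable steps
        steps = self._parse_plan(plan)
        results = []
        for step in steps:
            # Execute each step using the appropriate tool
            if step.tool in self.tools:
                results.append(self._execute_tool(step.tool, step.params))
            else:
                results.append(f"Error: Tool {step.tool} not available")
        return results

    def evaluate_results(self, results, original_prompt):
        """Determine whether the execution succeeded."""
        # Send the results back to the LLM to evaluate success
        evaluation = self.client.chat.completions.create(
            model=self.model,
            messages=[
                {"role": "system", "content": "Evaluate if these results satisfy the user's request"},
                {"role": "user", "content": f"Request: {original_prompt}\n\nResults: {results}"},
            ],
        )
        return evaluation.choices[0].message.content

    # Minimal helper methods; a real agent needs far more validation
    def _parse_plan(self, plan):
        """Turn the LLM's JSON reply into Step objects, ignoring anything malformed."""
        try:
            raw_steps = json.loads(plan)
        except (json.JSONDecodeError, TypeError):
            return []  # Unparseable plan: nothing safe to execute
        if not isinstance(raw_steps, list):
            return []
        return [
            Step(s["tool"], s.get("params", {}))
            for s in raw_steps
            if isinstance(s, dict) and "tool" in s
        ]

    def _execute_tool(self, tool, params):
        """Call the registered tool, reporting failures as results instead of crashing."""
        try:
            return self.tools[tool](**params)
        except Exception as exc:
            return f"Error running {tool}: {exc}"
```

This simplified implementation demonstrates the core architecture we've discussed. The JSON plan format and the use of a dictionary of Python callables for `tools` are assumptions made to keep the helper methods short. In a real-world scenario, you would also need more robust error handling, security measures such as sandboxed execution, and integration with your specific development environment.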

Challenges in AI Coding Agent Architecture

Despite their power, AI coding agents face several architectural challenges that affect their performance:

- Knowledge Limitations: The LLM's knowledge cutoff date means it may not be aware of the latest libraries or best practices

- Hallucination Problems: LLMs can sometimes generate plausible-sounding but incorrect information

- Security Concerns: Giving an AI agent the ability to execute code raises important security considerations

- Context Window Limitations: LLMs have finite context windows, limiting how much code or project information they can process at once

- Tool Integration Complexity: Different development environments require different integration approaches
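The context-window limit forces the agent to choose what to send. A crude but workable approach is to rank candidate files and add them until a token budget is spent. This sketch assumes roughly four characters per token, which is only a rule of thumb; a real agent would use the model's own tokenizer and a better relevance score than the keyword count used here. The function names and the sample project are hypothetical.

```python
def approx_tokens(text: str) -> int:
    """Rough token estimate: about four characters per token (a heuristic)."""
    return len(text) // 4 + 1


def select_context(files: dict[str, str], query: str, budget_tokens: int) -> list[str]:
    """Greedily pick the most query-relevant files that fit inside the token budget."""
    words = query.lower().split()

    def relevance(item):
        # Naive score: how often the query words appear in the file
        _name, text = item
        lowered = text.lower()
        return sum(lowered.count(w) for w in words)

    chosen, used = [], 0
    for name, text in sorted(files.items(), key=relevance, reverse=True):
        cost = approx_tokens(text)
        if used + cost <= budget_tokens:  # Skip files that would overflow the budget
            chosen.append(name)
            used += cost
    return chosen


project = {
    "auth.py": "def login(user): ...\n# login flow and session handling\n" * 20,
    "billing.py": "def charge(card): ...\n" * 50,
    "README.md": "Project overview. See auth.py for login.\n",
}
print(select_context(project, "fix login bug", budget_tokens=300))
# prints: ['auth.py', 'README.md']
```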

The Future of AI in Coding Architecture Design

As AI coding agents continue to evolve, we can expect several advancements in their architecture and capabilities:

- Tighter IDE Integration: More seamless workflows within popular development environments

- Improved Reasoning: Better understanding of complex codebases and architectural patterns

- Personalization: Agents that learn your coding style and preferences over time

- Multi-Agent Collaboration: Teams of specialized AI agents working together on different aspects of development

- More Frequent Knowledge Updates: Reducing the gap between the LLM's knowledge cutoff and current technologies

Conclusion

AI coding agents represent a significant advancement in how developers interact with artificial intelligence. By understanding the architecture behind these tools - from context setting to planning, execution, and evaluation - developers can better leverage them in their workflows and potentially even build their own specialized agents for specific tasks.

The four-stage architecture we've explored provides a foundation for understanding how these systems work beneath the surface. Whether you're using tools like Junie or considering implementing your own AI coding assistants, this architectural knowledge helps demystify the technology and opens up possibilities for how AI can enhance the software development process.

