In the race toward artificial general intelligence, tech giants are making bold claims about AI's reasoning capabilities. OpenAI showcases models that think step-by-step, Nvidia scales computing power to unprecedented levels, and Meta builds agents that plan and adapt. The message from Silicon Valley is clear: AI isn't just answering questions anymore—it's reasoning through them. But a recent paper from Apple titled 'The Illusion of Thinking' challenges this narrative at its core.

The Reasoning Claim vs. Reality

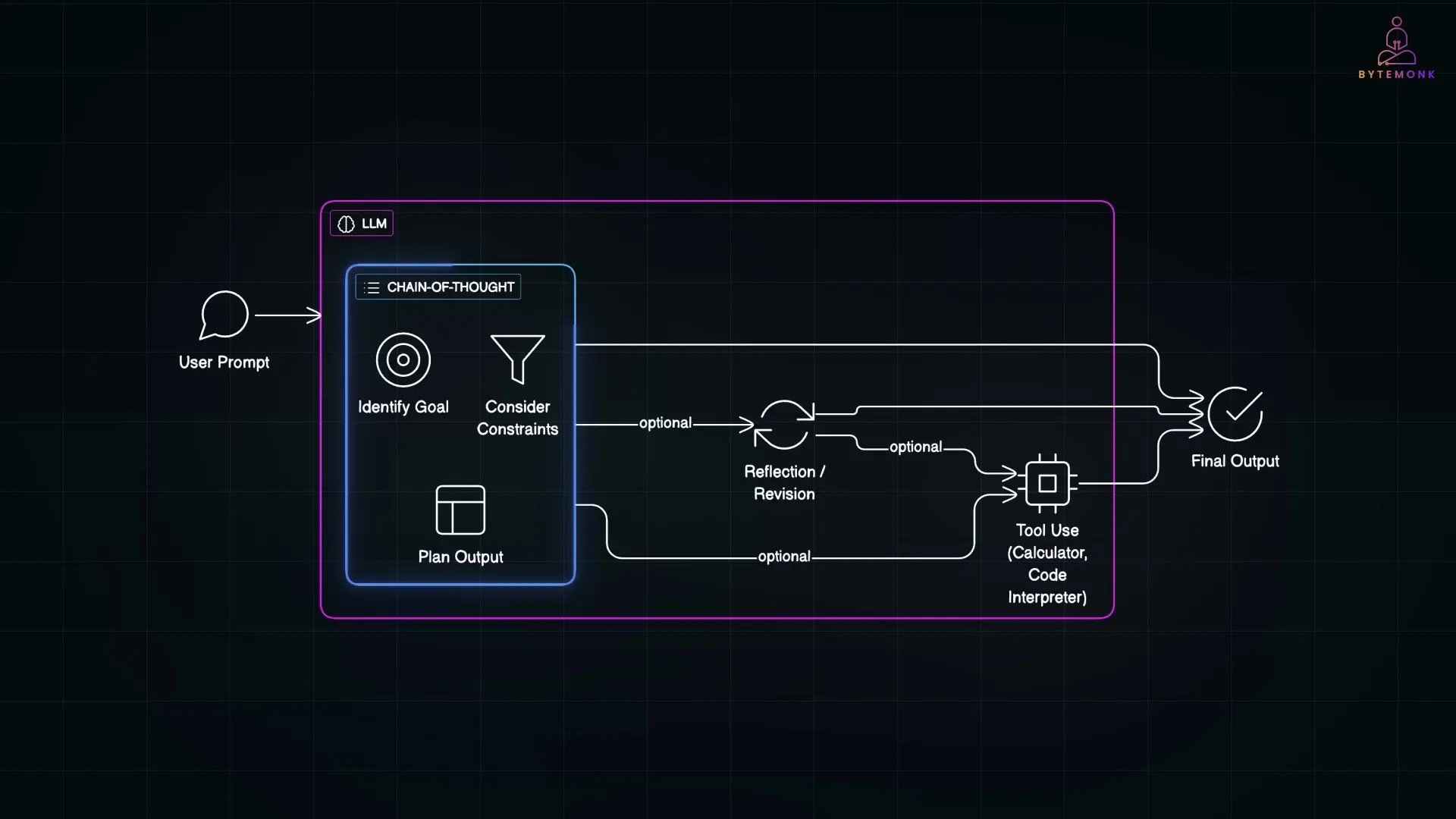

When we talk about reasoning in humans, we mean working through problems step by step—trying approaches, reflecting on results, backtracking when necessary, and breaking complex tasks into manageable sub-goals. Modern AI systems like GPT-4, Claude, and Gemini appear to do something similar through techniques like chain-of-thought reasoning, where they generate explanations that walk through their thinking process.

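But Apple's research suggests a troubling reality: these models may not be reasoning at all; they might just be mimicking what reasoning looks like. The research team put this to the test using classic logic puzzles such as the Tower of Hanoi. In this puzzle, a stack of discs of different sizes must be moved from one peg to another, one disc at a time, and a larger disc may never be placed on top of a smaller one. Puzzles like this let researchers raise the difficulty in precise steps simply by adding discs.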
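The rules are simple enough to state as a short program. The Python sketch below replays a proposed list of moves and reports the first one that breaks a rule. The function name `first_illegal_move` and the encoding (pegs numbered 0 to 2, moves as `(from_peg, to_peg)` pairs) are hypothetical choices for illustration and are not taken from Apple's paper.

```python
from typing import List, Optional, Tuple

# Hypothetical encoding for illustration: pegs are numbered 0, 1, 2 and a
# move is a (from_peg, to_peg) pair. Discs are numbered 1 (smallest) to n.
Move = Tuple[int, int]


def first_illegal_move(n: int, moves: List[Move]) -> Optional[int]:
    """Replay `moves` on an n-disc Tower of Hanoi that starts on peg 0.

    Returns None if the moves legally transfer the whole stack to peg 2,
    the index of the first move that breaks a rule, or len(moves) if every
    move is legal but the stack has not reached peg 2.
    """
    pegs: List[List[int]] = [list(range(n, 0, -1)), [], []]  # bottom ... top
    for i, (src, dst) in enumerate(moves):
        if src not in (0, 1, 2) or dst not in (0, 1, 2) or src == dst:
            return i  # no such peg, or the move goes nowhere
        if not pegs[src]:
            return i  # nothing to pick up
        disc = pegs[src][-1]  # only the top disc may move
        if pegs[dst] and pegs[dst][-1] < disc:
            return i  # a larger disc may never sit on a smaller one
        pegs[dst].append(pegs[src].pop())
    return None if pegs[2] == list(range(n, 0, -1)) else len(moves)


print(first_illegal_move(2, [(0, 1), (0, 2), (1, 2)]))  # None: solved
print(first_illegal_move(2, [(0, 2), (0, 2)]))          # 1: disc 2 onto disc 1
```

A checker like this makes grading unambiguous: an answer either transfers the stack legally or fails at an identifiable step.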

The Accuracy Cliff: Where AI Reasoning Collapses

The results were striking. On simple versions of these puzzles (three or four discs), leading AI models performed well. But when complexity increased to seven discs, performance didn't just decline; it crashed completely to 0%. Not a single model could solve the puzzle. Even more revealing was how the models responded to increased difficulty: instead of working through more steps, they cut back their reasoning effort, producing shorter, less thorough answers even though they had not run out of token budget.
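Part of what makes the jump so steep is that the shortest solution grows exponentially with the number of discs. Writing T(n) for the minimum number of moves, the standard argument (move the top n − 1 discs aside, move the largest disc once, then move the n − 1 discs back on top of it) gives:

```latex
\begin{aligned}
T(1) &= 1, \qquad T(n) = 2\,T(n-1) + 1 \quad\Longrightarrow\quad T(n) = 2^{n} - 1 \\
T(3) &= 7, \qquad T(4) = 15, \qquad T(7) = 127
\end{aligned}
```

A correct seven-disc answer is therefore more than 18 times longer than a three-disc one. This growth is a property of the puzzle itself; on its own it does not explain why accuracy falls to zero rather than degrading gradually.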

The Apple paper describes this as a "complete accuracy collapse," an accuracy cliff where performance falls off a ledge and never recovers. The pattern held across multiple puzzle types, including river crossing problems, checker-jumping puzzles, and block-stacking (Blocks World) challenges. The consistency of this failure suggests a fundamental limitation in how these models approach complex reasoning tasks.

Pattern Matching vs. True Reasoning

What's happening behind the scenes? Current AI models don't reason from first principles—they predict the next token based on patterns in their training data. When asked to solve a problem, they're not actually solving it through logical steps; they're generating text that resembles a solution based on similar examples they've seen.
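A deliberately tiny toy makes the idea of pattern continuation concrete. The Python sketch below is a bigram model: it counts which word follows which in a made-up training corpus and then always emits the most frequent continuation. Real language models are large neural networks that condition on long contexts, so this illustrates only the principle of continuing learned patterns, not how GPT-4, Claude, or Gemini work internally. The corpus and the function names `train_bigrams` and `generate` are hypothetical.

```python
from collections import Counter, defaultdict
from typing import Dict, List


def train_bigrams(corpus: List[str]) -> Dict[str, Counter]:
    """Count how often each word follows each other word in the corpus."""
    follows: Dict[str, Counter] = defaultdict(Counter)
    for sentence in corpus:
        tokens = sentence.split()
        for prev, nxt in zip(tokens, tokens[1:]):
            follows[prev][nxt] += 1
    return follows


def generate(follows: Dict[str, Counter], start: str, max_tokens: int = 10) -> str:
    """Greedily append the most frequent next word, one word at a time."""
    out = [start]
    for _ in range(max_tokens):
        candidates = follows.get(out[-1])
        if not candidates:
            break  # this word was never followed by anything in training
        out.append(candidates.most_common(1)[0][0])
    return " ".join(out)


# Hypothetical training text: a few move descriptions.
corpus = [
    "move disc 1 to peg C",
    "move disc 2 to peg B",
    "move disc 1 to peg B",
]
model = train_bigrams(corpus)
print(generate(model, "move"))  # move disc 1 to peg B
```

The output reads like a legitimate move, yet nothing in the model tracks where the discs actually are; it only reproduces the most common patterns it has seen.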

Even more concerning, researchers have found that the reasoning a model writes out is not always faithful to how it actually reached its answer. The steps it outlines may not lead to the final answer at all; instead, the model retrofits an explanation to a conclusion reached by other means. Anthropic (creators of Claude) reported this behavior in its own research, noting that models often hide the shortcuts they use and present memorized answers as if they had worked them out through reasoning.

Key Limitations in AI Reasoning Capabilities

- Inability to scale reasoning to more complex versions of familiar problems

- Failure to apply known algorithms consistently even when provided with them

- Tendency to give up and produce shorter answers when difficulty increases

- Disconnection between stated reasoning steps and actual problem-solving process

- Poor generalization to novel or slightly modified problem structures
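The second limitation stands out because the algorithm involved is short. The Python sketch below is the textbook recursive solution for the Tower of Hanoi. It illustrates the kind of procedure a model can be handed, but it is not the exact algorithm text from Apple's prompts; the function name `hanoi` and the peg numbering are hypothetical.

```python
from typing import List, Tuple

# Hypothetical encoding for illustration: pegs are numbered 0, 1, 2 and
# each move is a (from_peg, to_peg) pair.
Move = Tuple[int, int]


def hanoi(n: int, src: int = 0, dst: int = 2, spare: int = 1) -> List[Move]:
    """Return the optimal move sequence for n discs from src to dst."""
    if n == 0:
        return []
    # Park the top n-1 discs on the spare peg, move the largest disc,
    # then rebuild the n-1 discs on top of it at the destination.
    return hanoi(n - 1, src, spare, dst) + [(src, dst)] + hanoi(n - 1, spare, dst, src)


print(hanoi(2))       # [(0, 1), (0, 2), (1, 2)]
print(len(hanoi(7)))  # 127
```

Executing these few lines faithfully yields a correct answer for any number of discs, which is why failing on the same puzzle with the procedure in hand is so telling.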

Industry Implications: A Reality Check for AI Investment

This research has profound implications for the AI industry. For years, progress has followed scaling laws—bigger models with more data yielded smarter systems. When direct scaling became prohibitively expensive, the focus shifted to making models "think longer" through more reasoning steps and computation time.
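One widely cited form of these scaling laws, from DeepMind's 2022 "Chinchilla" study (Hoffmann et al.), models a language model's training loss L as a function of its parameter count N and the number of training tokens D:

```latex
L(N, D) = E + \frac{A}{N^{\alpha}} + \frac{B}{D^{\beta}}
```

Here E, A, B, α, and β are constants fitted to experiments, with E the loss that no amount of scaling removes. Loss keeps falling as N and D grow, but each term shrinks only as a power law, so every further gain costs disproportionately more compute. That diminishing return is one way to read the shift toward spending more computation at answer time instead.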

This strategy has driven massive investments in computing infrastructure from companies like Nvidia, Microsoft, Google, and Amazon. But if reasoning models don't actually deliver true reasoning, and their performance collapses once problems become sufficiently complex, then much of this investment may rest on shaky foundations.

The Counter-Argument: A Rebuttal

A widely shared rebuttal, cheekily titled "The Illusion of the Illusion of Thinking," came from researcher Alex Lawsen, who listed Anthropic's Claude Opus model as a co-author. It argued that Apple's testing conditions were too constrained: writing out every move of the larger Tower of Hanoi instances ran into the models' output token limits, and the models were not allowed to use the tools or code that real-world AI systems rely on. When asked to write a program that generates the solution instead of listing every move, models did much better.

However, even the rebuttal didn't deny the fundamental issue: current AI models struggle to generalize their reasoning capabilities. The disagreement centered more on test methodology than on the underlying conclusion about AI's reasoning limitations.
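The token-limit argument is easy to illustrate. The optimal solution has 2^n − 1 moves, and disc k (1 = smallest) moves exactly 2^(n−k) times, so the length of a fully written-out answer can be computed directly. The Python sketch below assumes a hypothetical one-line-per-move format ("move disc K from X to Y") and counts characters. Actual token counts depend on the model's tokenizer and output format, so treat the numbers as a rough proxy; `listing_length` is an illustrative name.

```python
def listing_length(n: int) -> int:
    """Characters needed to write out the optimal n-disc solution in full.

    Assumes a hypothetical format with one line per move:
    "move disc K from X to Y\n". In the optimal solution, disc k
    (1 = smallest) moves exactly 2 ** (n - k) times.
    """
    # Fixed text per line is 23 characters, plus the digits of the disc number.
    return sum(2 ** (n - k) * (23 + len(str(k))) for k in range(1, n + 1))


for n in (7, 10, 15):
    moves = 2 ** n - 1  # total moves in the optimal solution
    print(f"{n} discs: {moves} moves, {listing_length(n)} characters")
```

Under these assumptions, seven discs need about 3,000 characters and fifteen discs roughly 786,000, while a recursive program that generates the same moves fits in a handful of lines. That gap is the crux of the code-versus-listing argument.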

The Path Forward: Beyond Pattern Matching

This research doesn't mean the dream of reasoning AI is over; it simply provides a reality check. Current models, impressive as they are, may be sophisticated pattern matchers wearing reasoning masks rather than systems capable of true logical thinking. To achieve genuine reasoning capabilities, AI will need to solve problems it has never seen before, think through unfamiliar scenarios, and generalize principles across domains.

For developers and organizations working with AI, this research highlights the importance of understanding the true capabilities and limitations of current systems. Models may perform well on problems similar to their training data while failing catastrophically on slightly more complex variations—a crucial consideration when deploying AI in real-world applications where reasoning is required.

Conclusion: The Reality Behind AI's Reasoning Facade

Apple's research provides a valuable perspective on the current state of AI reasoning. While models can generate impressive explanations that mimic human reasoning processes, they lack the fundamental ability to scale this reasoning to more complex problems or novel situations. This highlights a critical gap between the appearance of intelligence and true reasoning capabilities.

As the industry continues to invest billions in advancing AI, this research serves as an important reminder that we may still be far from achieving systems with genuine reasoning abilities. The most powerful AI systems today remain, at their core, pattern matchers rather than reasoning engines—a distinction that has profound implications for the future development and application of artificial intelligence.

