LangChain has emerged as a powerful framework in the AI development ecosystem, especially since the introduction of GPT-4 in March 2023. For developers looking to harness the capabilities of large language models (LLMs) while connecting them to external data sources, LangChain provides an elegant solution that bridges the gap between AI models and real-world applications.

What is LangChain?

LangChain is an open-source framework available as both Python and JavaScript/TypeScript packages that allows developers to combine large language models like GPT-4 with external sources of computation and data. This integration enables the creation of AI applications that are both data-aware and capable of taking actions based on that data.

Why Use LangChain? The Practical Problem It Solves

While models like GPT-4 have impressive general knowledge, they're limited when it comes to accessing specific information from your own data sources. LangChain solves this limitation by allowing you to connect LLMs to your proprietary information - whether that's in PDFs, databases, or other formats.

Rather than simply pasting text snippets into a prompt, LangChain enables referencing entire databases of your own information. Furthermore, once the model retrieves the information you need, LangChain can help you take action with that information, such as sending an email or updating a database.

How LangChain Works: The Core Pipeline

LangChain applications typically follow a general pipeline:

- A user asks an initial question

- The question is sent to the language model

- A vector representation (an embedding) of the question is created

- This vector is used to perform a similarity search in a vector database

- Relevant chunks of information are fetched from the vector database

- The language model receives both the original question and the relevant information

- The model provides an answer or takes an action based on all available information

This approach makes LangChain applications both data-aware (they can reference your own data in a vector store) and agentic (they can take actions beyond just providing answers).
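The retrieval part of this pipeline can be sketched without any external service. The example below is only an illustration under loud assumptions: the word-count "embedding", the in-memory list standing in for a vector database, and the helper names (embed, cosine, retrieve, build_prompt) are all hypothetical and are not LangChain APIs. A real application would use an embedding model and a vector store instead.

```python
import math
from collections import Counter


def embed(text):
    """Toy embedding: lowercase word counts (a stand-in for a real embedding model)."""
    words = (w.strip(".,?!").lower() for w in text.split())
    return Counter(w for w in words if w)


def cosine(a, b):
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[w] * b[w] for w in a)  # Counter yields 0 for missing words
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(question, chunks, k=1):
    """Similarity search: return the k chunks closest to the question."""
    q = embed(question)
    return sorted(chunks, key=lambda c: cosine(q, embed(c)), reverse=True)[:k]


def build_prompt(question, context_chunks):
    """Combine the original question with the retrieved chunks."""
    context = "\n".join(context_chunks)
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


# Hypothetical document chunks standing in for a vector database
chunks = [
    "Invoices are due within 30 days of receipt.",
    "The office is closed on public holidays.",
    "Refunds are processed within 5 business days.",
]

question = "When are invoices due?"
top = retrieve(question, chunks, k=1)
prompt = build_prompt(question, top)
print(prompt)  # this string is what would be sent to the language model
```

The final prompt contains both the user's question and the most similar chunk, which is the input the language model receives in the last two steps of the pipeline.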

Practical Applications of LangChain

The capabilities of LangChain open up numerous practical applications across various domains:

- Personal assistance: Booking flights, transferring money, paying taxes

- Education: Referencing entire syllabi to help learn material efficiently

- Coding and data analysis: Enhancing development workflows with contextual assistance

- Business intelligence: Connecting LLMs to company data for advanced analytics

- Customer service: Creating agents that can access customer histories and take appropriate actions

Key Components of LangChain

LangChain's value proposition can be divided into five main components:

- LLM Wrappers: Connect to models like GPT-4 or Hugging Face models

- Prompt Templates: Create dynamic prompts without hard-coding text

- Indexes/Vector Stores: Extract and store relevant information for LLMs

- Chains: Combine multiple components to solve specific tasks

- Agents: Allow LLMs to interact with external APIs and take actions

Getting Started with LangChain: A Basic Setup

To begin working with LangChain, you'll need to install the necessary libraries and set up your environment:

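    pip install langchain openai tiktoken python-dotenv pinecone-client

You'll also need API keys for services like OpenAI and Pinecone (for vector storage). Store them in an environment file (for example, .env) that is kept out of version control rather than hard-coding them in your source files. The examples in this article use the classic langchain import paths and the pinecone.init client interface from pinecone-client 2.x; later releases of both libraries have reorganized these APIs.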
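As a minimal sketch of the environment setup, the snippet below checks that the expected keys are present before any client is created. The helper name missing_keys is hypothetical. The two Pinecone variable names are the ones used later in this article, and OPENAI_API_KEY is the variable the OpenAI wrappers read by default.

```python
import os

# Names of the environment variables the examples rely on
REQUIRED_KEYS = ("OPENAI_API_KEY", "PINECONE_API_KEY", "PINECONE_ENVIRONMENT")


def missing_keys(env, required=REQUIRED_KEYS):
    """Return the required keys that are absent or empty in the given mapping."""
    return [name for name in required if not env.get(name)]


# With python-dotenv installed, calling dotenv.load_dotenv() before this check
# copies the entries of a local .env file into os.environ.
missing = missing_keys(os.environ)
if missing:
    print("Missing environment variables:", ", ".join(missing))
```

Failing early with a clear message tends to be easier to debug than an authentication error raised deep inside a chain.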

Working with LLM Wrappers

LangChain provides wrappers for various language models. Here's a simple example using OpenAI's models:

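    from langchain.llms import OpenAI

    # Initialize a completion-style model (reads OPENAI_API_KEY from the environment).
    # text-davinci-003 has been retired by OpenAI; gpt-3.5-turbo-instruct is its replacement.
    llm = OpenAI(model_name="gpt-3.5-turbo-instruct")

    # Ask a question; the wrapper returns the completion as a plain string
    response = llm("Explain what a large language model is.")
    print(response)

For chat models like GPT-3.5 Turbo or GPT-4, LangChain provides a different interface: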

    from langchain.chat_models import ChatOpenAI
    from langchain.schema import HumanMessage, SystemMessage

    chat = ChatOpenAI(model_name="gpt-3.5-turbo")

    # Chat models take a list of role-tagged messages instead of a single string
    messages = [
        SystemMessage(content="You are a helpful AI assistant."),
        HumanMessage(content="Explain what a large language model is."),
    ]

    # The result is an AIMessage; its text is in the .content attribute
    response = chat(messages)
    print(response.content)

Creating Dynamic Prompts with Templates

Prompt templates allow you to create dynamic prompts by injecting user input into predefined text structures:

from langchain.prompts import PromptTemplate

template = PromptTemplate(

input_variables=["concept"],

template="Explain the following concept: {concept}"

)

prompt = template.format(concept="neural networks")

print(prompt)

# This can then be passed to a language model

response = llm(prompt)

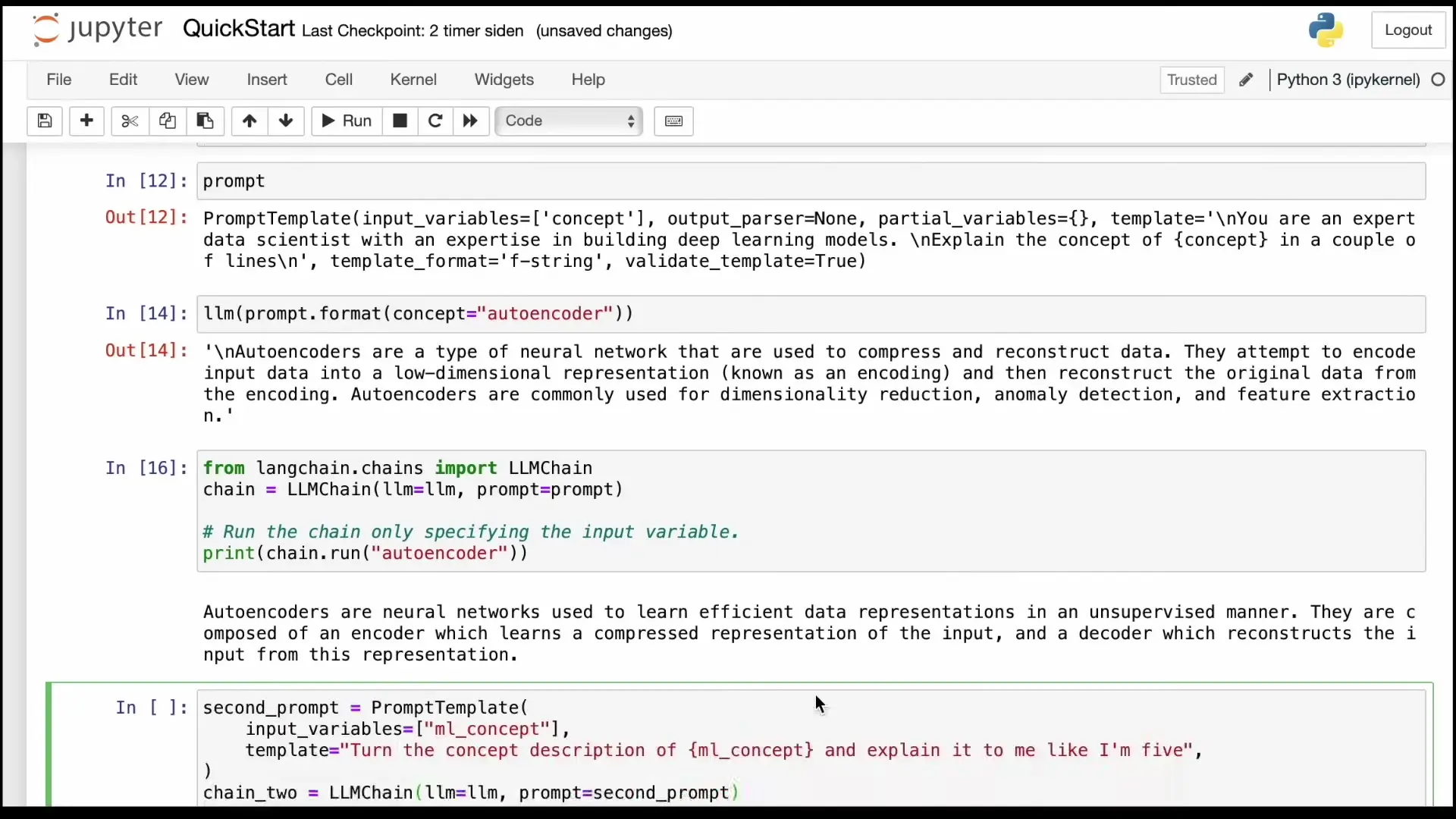

print(response)Building Chains for Sequential Processing

Chains combine language models and prompt templates into unified interfaces that can process inputs and produce outputs:

    from langchain.chains import LLMChain

    template = PromptTemplate(
        input_variables=["concept"],
        template="Explain the following concept briefly: {concept}",
    )

    chain = LLMChain(llm=llm, prompt=template)
    response = chain.run("neural networks")
    print(response)
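Conceptually, a chain like this fills in a prompt template and passes the result to a model. The sketch below illustrates that idea in plain Python. It is not how LangChain implements LLMChain, and both fake_llm and make_chain are hypothetical names used so the example runs without an API key.

```python
from typing import Callable


def fake_llm(prompt: str) -> str:
    """Hypothetical stand-in for a model call; it just echoes the prompt it received."""
    return f"[model answer to: {prompt}]"


def make_chain(llm: Callable[[str], str], template: str, variable: str) -> Callable[[str], str]:
    """Bundle a template and a model into a single callable."""

    def run(value: str) -> str:
        prompt = template.format(**{variable: value})  # fill in the placeholder
        return llm(prompt)  # hand the finished prompt to the model

    return run


explain = make_chain(
    fake_llm,
    "Explain the following concept briefly: {concept}",
    "concept",
)
print(explain("neural networks"))
```

Because the chain is a single callable that maps text to text, its output can be fed into another chain of the same shape.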

You can also create sequential chains where the output of one chain becomes the input for another:

    from langchain.chains import SimpleSequentialChain

    # First chain explains a concept
    template1 = PromptTemplate(
        input_variables=["concept"],
        template="Explain the following concept briefly: {concept}",
    )
    chain1 = LLMChain(llm=llm, prompt=template1)

    # Second chain explains the result to a 5-year-old
    template2 = PromptTemplate(
        input_variables=["explanation"],
        template="Explain the following to me like I'm 5 years old in about 500 words: {explanation}",
    )
    chain2 = LLMChain(llm=llm, prompt=template2)

    # Combine the chains
    overall_chain = SimpleSequentialChain(chains=[chain1, chain2])

    # Run the chain
    response = overall_chain.run("neural networks")
    print(response)

Working with Embeddings and Vector Stores

To work with your own data, you'll need to create embeddings (vector representations) of your text and store them in a vector database like Pinecone:

    import os

    import pinecone
    from langchain.embeddings import OpenAIEmbeddings
    from langchain.text_splitter import RecursiveCharacterTextSplitter
    from langchain.vectorstores import Pinecone

    # Split text into chunks
    text_splitter = RecursiveCharacterTextSplitter(
        chunk_size=1000,
        chunk_overlap=0,
    )
    text = "Your long document text here..."
    chunks = text_splitter.split_text(text)

    # Create the embedding model (reads OPENAI_API_KEY from the environment)
    embeddings_model = OpenAIEmbeddings()

    # Initialize Pinecone
    pinecone.init(
        api_key=os.environ["PINECONE_API_KEY"],
        environment=os.environ["PINECONE_ENVIRONMENT"],
    )

    # Create the index if it does not exist yet
    index_name = "your-index-name"
    if index_name not in pinecone.list_indexes():
        # 1536 matches the dimension of OpenAI's text-embedding-ada-002 vectors
        pinecone.create_index(index_name, dimension=1536)

    # Embed the chunks and store them in Pinecone
    docsearch = Pinecone.from_texts(chunks, embeddings_model, index_name=index_name)

    # Now you can query your vector store
    query = "What does the document say about neural networks?"
    docs = docsearch.similarity_search(query)
    print(docs[0].page_content)

Building a Complete LangChain Application

Combining all these components, you can create a complete question-answering system that references your own data:

    from langchain.chains import RetrievalQA

    # Create a retrieval chain; "stuff" places all retrieved chunks into a single prompt
    qa_chain = RetrievalQA.from_chain_type(
        llm=llm,
        chain_type="stuff",
        retriever=docsearch.as_retriever(),
    )

    # Ask questions about your document
    question = "What are the key concepts in the document?"
    response = qa_chain.run(question)
    print(response)

Conclusion: The Future of LangChain

LangChain is rapidly evolving, with new features and capabilities being added regularly. The framework's ability to connect large language models to external data sources and APIs is transforming how we build AI applications, making them more contextual, accurate, and actionable.

As LangChain continues to develop, we can expect to see increasingly sophisticated applications that leverage the power of LLMs while overcoming their inherent limitations. Whether you're building personal assistants, educational tools, or business intelligence solutions, LangChain provides a powerful framework for creating AI applications that can access, understand, and act upon your specific data.

By mastering the core components of LangChain - LLM wrappers, prompt templates, chains, embeddings, vector stores, and agents - developers can create AI applications that were previously impossible, opening up new possibilities for how we interact with and leverage artificial intelligence.
