Meta has made a comeback with the launch of three new Llama 4 models aimed at AI-assisted coding and content generation. These models offer strong capabilities at low cost, making them accessible alternatives to more expensive options like GPT-4 and Claude. In this guide, we'll look at how to set up Llama 4 for code generation and where these models fit in your development workflow.

Introducing the New Llama 4 Model Family

Meta has released three distinct models in the Llama 4 family, each with unique strengths for different use cases:

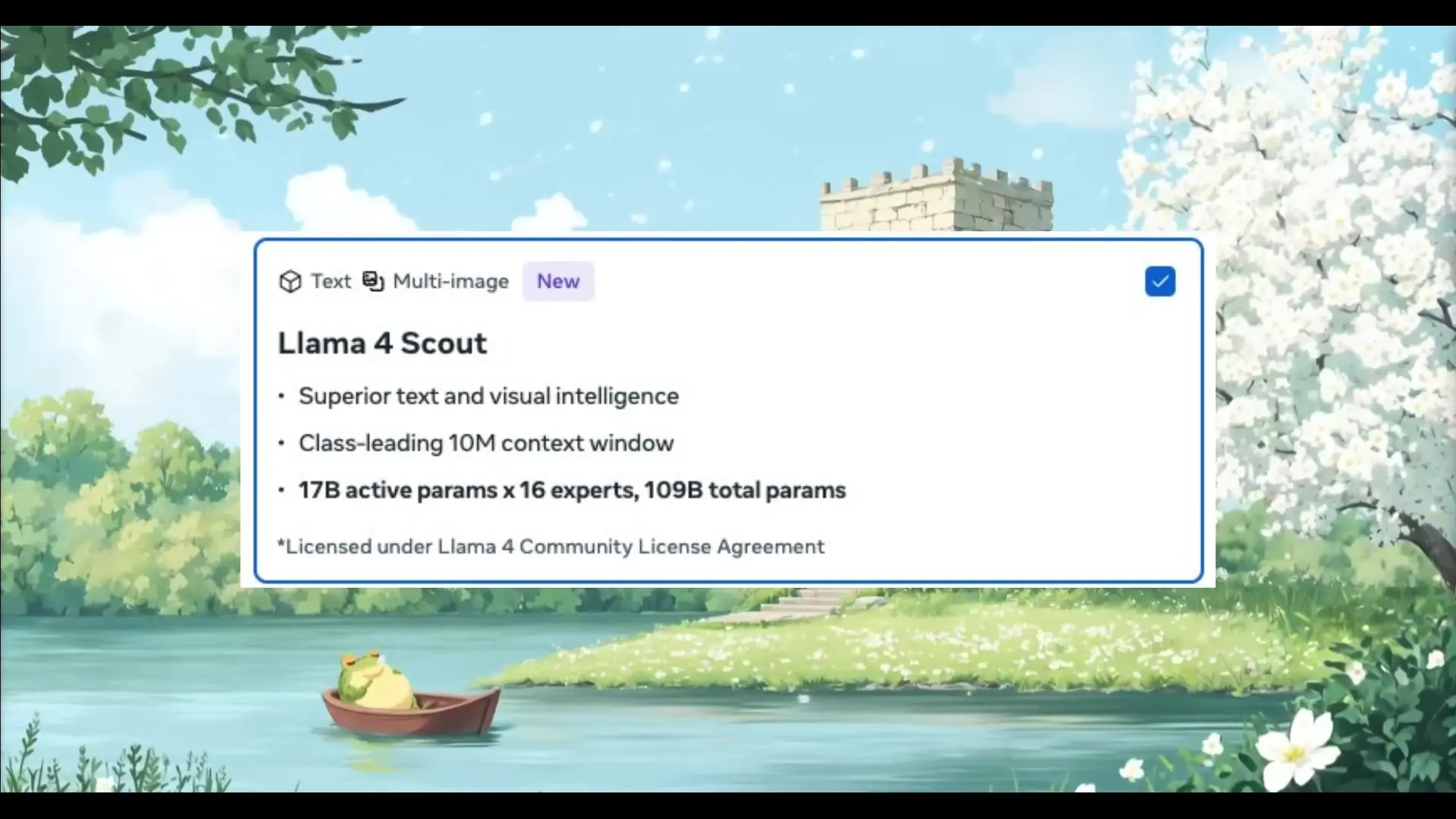

- **Llama 4 Scout**: A model with 17 billion active parameters, 16 experts, and a 10 million token context window. Meta reports that it outperforms Gemma 3, Gemini 2.0 Flash-Lite, and Mistral 3.1 across a range of benchmarks.

- **Llama 4 Maverick**: Also 17 billion active parameters, but with 128 experts. Meta reports that it excels at image grounding, surpassing GPT-4o and Gemini 2.0 Flash in key benchmarks, and that it achieves results comparable to DeepSeek V3 in reasoning and coding with less than half the active parameters.

- **Llama 4 Behemoth**: Still in training, this model is reported to already outperform GPT-4.5, Claude 3.7 Sonnet, and Gemini 2.0 Pro on STEM-heavy tasks. It serves as the teacher model from which the other two models are distilled.
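The "active parameters" and "experts" figures describe a mixture-of-experts design: a router picks which experts run for each token, so only part of the model's weights are used at a time. The toy sketch below illustrates that idea only. The function names, the single-expert routing, and the four stand-in experts are assumptions for illustration, not Meta's actual architecture.

```javascript
// Toy mixture-of-experts layer: a router scores every expert for a token,
// but only the top-scoring expert actually runs. All names are illustrative.

// Return the index of the largest score.
function argmax(scores) {
  let best = 0;
  for (let i = 1; i < scores.length; i++) {
    if (scores[i] > scores[best]) best = i;
  }
  return best;
}

// Route one token (a plain number here, a vector in a real model) to one expert.
function moeForward(token, routerScores, experts) {
  const chosen = argmax(routerScores);
  return { expert: chosen, output: experts[chosen](token) };
}

// Four stand-in "experts"; the models above have 16 (Scout) or 128 (Maverick).
const experts = [
  (x) => x + 1,
  (x) => x * 2,
  (x) => x - 3,
  (x) => x * x,
];

const result = moeForward(5, [0.1, 0.7, 0.15, 0.05], experts);
console.log(result); // { expert: 1, output: 10 }
```

Because only the chosen expert executes, the compute per token tracks the active parameter count rather than the total size of the model.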

Key Advantages of Llama 4 for Coding

While Llama 4 models may not be the absolute best coding assistants on the market, they offer several compelling advantages that make them worth considering for your development workflow:

- **Massive Context Window**: Scout's 10 million token context window practically eliminates the need for RAG (Retrieval-Augmented Generation) in many cases, allowing you to process entire codebases or large documents at once.

- **Lightning-Fast Response Speed**: One of the most impressive aspects of Llama 4 models is their rapid generation capabilities, producing code almost instantly.

- **Cost Efficiency**: These models offer an excellent performance-to-cost ratio, scoring roughly 1400 on the LM Arena leaderboard, which makes them accessible for developers with budget constraints.

- **Multimodal Capabilities**: The Maverick model can process both text and images effectively, making it versatile for various development scenarios.

For coding specifically, Llama 4 models perform at a level comparable to DeepSeek V3, though they don't quite match the capabilities of Claude 3.7 Sonnet or Gemini 2.5 Pro. However, their speed and cost advantages make them excellent alternatives to Gemini 2.0 Flash for many coding tasks.
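Before relying on the large context window in place of RAG, it helps to estimate whether a codebase actually fits. The sketch below is a rough check only: the 4-characters-per-token ratio is a rule-of-thumb assumption (the real count depends on the tokenizer), and the `{ path, content }` file shape and the output reserve are hypothetical.

```javascript
// Rough check of whether a set of source files fits in a model's context window.
// ASSUMPTION: ~4 characters per token, a common rule of thumb; the real count
// depends on the model's tokenizer.
const CHARS_PER_TOKEN = 4;
const SCOUT_CONTEXT_TOKENS = 10_000_000; // Scout's advertised window

function estimateTokens(text) {
  return Math.ceil(text.length / CHARS_PER_TOKEN);
}

// files: array of { path, content } objects (hypothetical shape).
// reserveForOutput: tokens kept free for the model's reply (assumed default).
function fitsInContext(files, contextTokens = SCOUT_CONTEXT_TOKENS, reserveForOutput = 8_000) {
  const totalTokens = files.reduce((sum, f) => sum + estimateTokens(f.content), 0);
  return { totalTokens, fits: totalTokens + reserveForOutput <= contextTokens };
}

const sample = [
  { path: 'src/app.js', content: 'x'.repeat(40_000) },
  { path: 'src/util.js', content: 'y'.repeat(10_001) },
];
console.log(fitsInContext(sample)); // { totalTokens: 12501, fits: true }
```

In practice you would fill `files` with the contents of your repository and treat the result as an estimate, not a guarantee.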

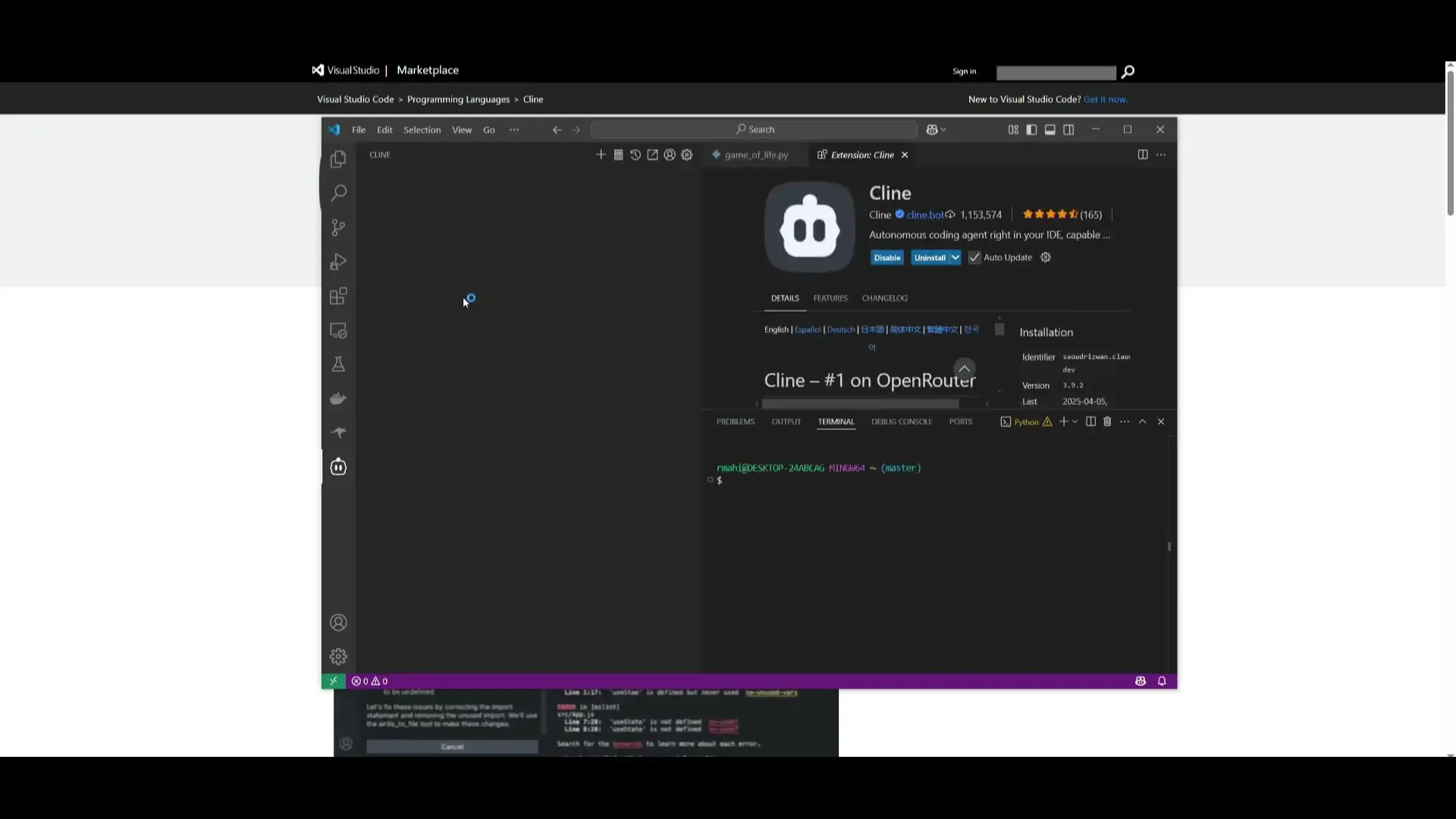

How to Run Llama Code Using the Cline IDE Extension

To start using Llama 4 for coding in your development environment, you'll need to set up the Cline IDE extension and connect it to Llama 4 through OpenRouter. Here's a step-by-step guide:

- **Get API Access**: Head over to OpenRouter and create an account to access the free Llama 4 Scout and Maverick APIs.

- **Create an API Key**: In OpenRouter, navigate to the API section and generate a new API key.

- **Install the Cline Extension**: Install the Cline extension in your preferred IDE (Visual Studio Code is recommended).

- **Configure the Extension**: Open the Cline extension in your IDE, go to settings, and paste your OpenRouter API key.

- **Select a Llama 4 Model**: Choose either the Scout or Maverick model depending on your specific needs.

Once configured, you can start generating code by typing prompts directly in the Cline chat interface. The extension allows Llama models to create and edit files, execute commands, browse the web, and perform other tasks autonomously within your IDE.
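The same API key also works outside the IDE. The sketch below shows one way to send a prompt to OpenRouter's OpenAI-compatible chat completions endpoint from Node 18 or later. The model ID, the `OPENROUTER_API_KEY` environment variable, and the helper names are assumptions for illustration; check OpenRouter's model list for the current Scout and Maverick IDs.

```javascript
// Minimal sketch of calling a Llama 4 model through OpenRouter's
// OpenAI-compatible chat completions endpoint (Node 18+ for global fetch).
const OPENROUTER_URL = 'https://openrouter.ai/api/v1/chat/completions';
const DEFAULT_MODEL = 'meta-llama/llama-4-scout'; // ASSUMED model ID

// Build the fetch options separately so they can be inspected without a network call.
function buildRequest(apiKey, prompt, model = DEFAULT_MODEL) {
  return {
    method: 'POST',
    headers: {
      Authorization: `Bearer ${apiKey}`,
      'Content-Type': 'application/json',
    },
    body: JSON.stringify({
      model,
      messages: [{ role: 'user', content: prompt }],
    }),
  };
}

// Send the prompt and return the text of the first completion.
async function generateCode(apiKey, prompt, model) {
  const response = await fetch(OPENROUTER_URL, buildRequest(apiKey, prompt, model));
  if (!response.ok) {
    throw new Error(`OpenRouter request failed: ${response.status}`);
  }
  const data = await response.json();
  return data.choices[0].message.content;
}

// Only call the API when a key is present in the environment.
if (typeof process !== 'undefined' && process.env.OPENROUTER_API_KEY) {
  generateCode(process.env.OPENROUTER_API_KEY, 'Write a function that reverses a string.')
    .then(console.log)
    .catch(console.error);
}
```

Keeping the key in an environment variable rather than in source code avoids committing it to a repository by accident.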

Sample Llama Model Code Generation

Let's examine how Llama 4 performs with a typical coding task. When prompted to create a SaaS website or task management application, the models generate functional code relatively quickly. Here's an example of what you might expect:

```html
<!DOCTYPE html>
<html lang="en">
<head>
  <meta charset="UTF-8">
  <meta name="viewport" content="width=device-width, initial-scale=1.0">
  <title>Task Management App</title>
  <style>
    body {
      font-family: 'Arial', sans-serif;
      background-color: #f5f5f5;
      margin: 0;
      padding: 20px;
    }
    .container {
      max-width: 600px;
      margin: 0 auto;
      background-color: white;
      border-radius: 8px;
      box-shadow: 0 2px 10px rgba(0,0,0,0.1);
      padding: 20px;
    }
    h1 {
      color: #333;
      text-align: center;
    }
    .input-group {
      display: flex;
      margin-bottom: 20px;
    }
    #taskInput {
      flex: 1;
      padding: 10px;
      border: 1px solid #ddd;
      border-radius: 4px 0 0 4px;
      font-size: 16px;
    }
    button {
      background-color: #4CAF50;
      color: white;
      border: none;
      padding: 10px 15px;
      cursor: pointer;
      border-radius: 0 4px 4px 0;
      font-size: 16px;
    }
    ul {
      list-style-type: none;
      padding: 0;
    }
    li {
      padding: 10px 15px;
      background-color: #f9f9f9;
      border-left: 4px solid #4CAF50;
      margin-bottom: 10px;
      display: flex;
      justify-content: space-between;
      align-items: center;
    }
    .delete-btn {
      background-color: #f44336;
      color: white;
      border: none;
      padding: 5px 10px;
      border-radius: 4px;
      cursor: pointer;
    }
  </style>
</head>
<body>
  <div class="container">
    <h1>Task Management App</h1>
    <div class="input-group">
      <input type="text" id="taskInput" placeholder="Add a new task...">
      <button onclick="addTask()">Add Task</button>
    </div>
    <ul id="taskList"></ul>
  </div>
  <script>
    function addTask() {
      const taskInput = document.getElementById('taskInput');
      const taskList = document.getElementById('taskList');
      if (taskInput.value.trim() === '') return;
      const li = document.createElement('li');
      li.innerHTML = `
        <span>${taskInput.value}</span>
        <button class="delete-btn" onclick="this.parentElement.remove()">Delete</button>
      `;
      taskList.appendChild(li);
      taskInput.value = '';
    }
  </script>
</body>
</html>
```

While the code is functional, it's relatively basic. Llama 4 models tend to generate the minimum viable solution rather than creating highly innovative or complex implementations. This aligns with observations that these models are better suited for straightforward coding tasks rather than complex, creative development challenges.
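One concrete example of that minimalism: `addTask()` interpolates the raw input into `innerHTML`, so a task that contains markup is parsed as HTML instead of being shown as text. A minimal sketch of an escaping helper follows; the name `escapeHtml` is hypothetical and not part of the generated output.

```javascript
// Escape the characters that have special meaning in HTML so user input is
// rendered as text rather than interpreted as markup.
function escapeHtml(text) {
  const replacements = {
    '&': '&amp;',
    '<': '&lt;',
    '>': '&gt;',
    '"': '&quot;',
    "'": '&#39;',
  };
  return text.replace(/[&<>"']/g, (ch) => replacements[ch]);
}

// Hypothetical drop-in for the template in addTask():
//   <span>${escapeHtml(taskInput.value)}</span>
console.log(escapeHtml('<b>Buy milk</b> & "eggs"'));
// prints: &lt;b&gt;Buy milk&lt;/b&gt; &amp; &quot;eggs&quot;
```

An alternative with the same effect is to create the `span` with `document.createElement` and assign the task text to its `textContent`.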

When to Use Llama 4 for Coding

Based on testing, here are the scenarios where Llama 4 models excel for coding tasks:

- **Processing Large Codebases**: The Scout model's 10M token context window makes it ideal for analyzing and working with extensive code repositories.

- **Quick Prototyping**: When you need rapid code generation for simple applications or features, Llama 4's speed is advantageous.

- **Budget-Conscious Development**: For teams or individuals with limited resources, these models offer excellent value.

- **Working with Multiple Modalities**: If your development involves both code and visual elements, Maverick can handle both effectively.

For more complex or creative coding tasks, you might still prefer models like Claude 3.7 Sonnet, GPT-4, or Gemini 2.5 Pro. However, Llama 4 models represent a significant advancement in making powerful AI coding assistants accessible to more developers.

Limitations and Considerations

While Llama 4 models offer impressive capabilities, it's important to be aware of their limitations:

- **Basic Code Generation**: The models tend to produce functional but basic code solutions without many advanced features or optimizations.

- **Limited Creativity**: Don't expect highly innovative or creative coding solutions from these models.

- **Benchmark Considerations**: While the models score well on LM Arena benchmarks, these scores don't always translate directly to real-world coding performance.

- **Still in Development**: The Behemoth model, which promises even better performance, is still in training and not yet available.

Conclusion: Is Llama 4 Worth Using for Coding?

Llama 4 models represent a significant advancement in accessible AI coding assistants. Their combination of impressive context length, rapid response times, and cost efficiency makes them valuable tools for many development scenarios. While they may not match the sophistication of top-tier models for complex coding tasks, they offer an excellent balance of capability and accessibility.

For developers looking to integrate AI assistance into their workflow without significant cost, Llama 4 models provide a compelling option. The Scout model is particularly valuable for tasks involving large codebases or documents, while Maverick offers strong multimodal capabilities. As these models continue to evolve, they may become even more powerful tools for AI-assisted coding.

To get started, follow the setup instructions outlined above and begin experimenting with these models in your own development environment. Their free availability through OpenRouter makes them an accessible entry point into AI-assisted coding.
