The AI community has been rocked by a startling revelation: large language models (LLMs) that appear to carefully reason through problems step-by-step might not actually be 'thinking' at all—at least not in the way we've assumed. This discovery fundamentally challenges our understanding of how these powerful AI systems work and what their capabilities truly are.

The Illusion of Step-by-Step Reasoning

When we interact with advanced LLMs like GPT-4 or Claude, we often see them work through problems methodically, showing their reasoning process before arriving at a final answer. This verbose, step-by-step approach has been widely interpreted as evidence that these models are engaging in a thinking process similar to humans. However, multiple research studies now suggest this is largely an illusion.

The reality is far more complex: the intermediate reasoning steps that LLMs generate don't actually represent how they arrive at their answers internally. Instead, these explanations are more like post-hoc rationalizations—the model creating a plausible-sounding explanation for a conclusion it reached through entirely different internal mechanisms.

Evidence from Recent Research

Several groundbreaking studies have demonstrated this disconnect between an LLM's visible reasoning and its actual internal processes:

- Researchers found that replacing coherent chain-of-thought reasoning with meaningless filler tokens could still improve model performance on certain tasks, in some cases about as effectively as the reasoning itself

- Models using simple 'pause tokens' instead of verbose reasoning showed similar performance improvements on complex tasks

- The paper "Reasoning Models Can Be Effective Without Thinking" demonstrated that lengthy verbalized thinking processes aren't essential for strong reasoning capabilities

- Claude, once considered state-of-the-art in reasoning, reached that standing without using any test-time compute or verbalized thinking
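The filler-token and pause-token comparisons above come down to a controlled ablation: keep the question fixed and vary only what sits between it and the answer. The Python sketch below builds such length-matched conditions. It is a hypothetical helper, not code from any of the cited studies. The `.` filler and `<pause>` strings are placeholders, and counting words instead of tokens is a simplifying assumption. Real experiments would use the model's own tokenizer, and the pause-token work trains the model to use its special token.

```python
def build_conditions(question: str, cot: str) -> dict[str, str]:
    """Build length-matched prompts for a chain-of-thought ablation.

    Word count stands in for token count (a simplifying assumption), so the
    filler and pause conditions contain as many units as the reasoning text.
    """
    n = len(cot.split())
    return {
        "direct": f"{question}\nAnswer:",
        "chain_of_thought": f"{question}\n{cot}\nAnswer:",
        "filler": f"{question}\n{' '.join(['.'] * n)}\nAnswer:",        # meaningless dots
        "pause": f"{question}\n{' '.join(['<pause>'] * n)}\nAnswer:",   # placeholder token
    }


if __name__ == "__main__":
    cot = "6 plus 9 is 15, carry the 1, then 3 plus 5 plus 1 is 9."
    for name, prompt in build_conditions("What is 36 + 59?", cot).items():
        print(f"--- {name} ---\n{prompt}\n")
```

Matching the length of the filler to the reasoning text is the design choice that matters: if the conditions differ only in content, any performance gap can be attributed to what the tokens say rather than how many there are.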

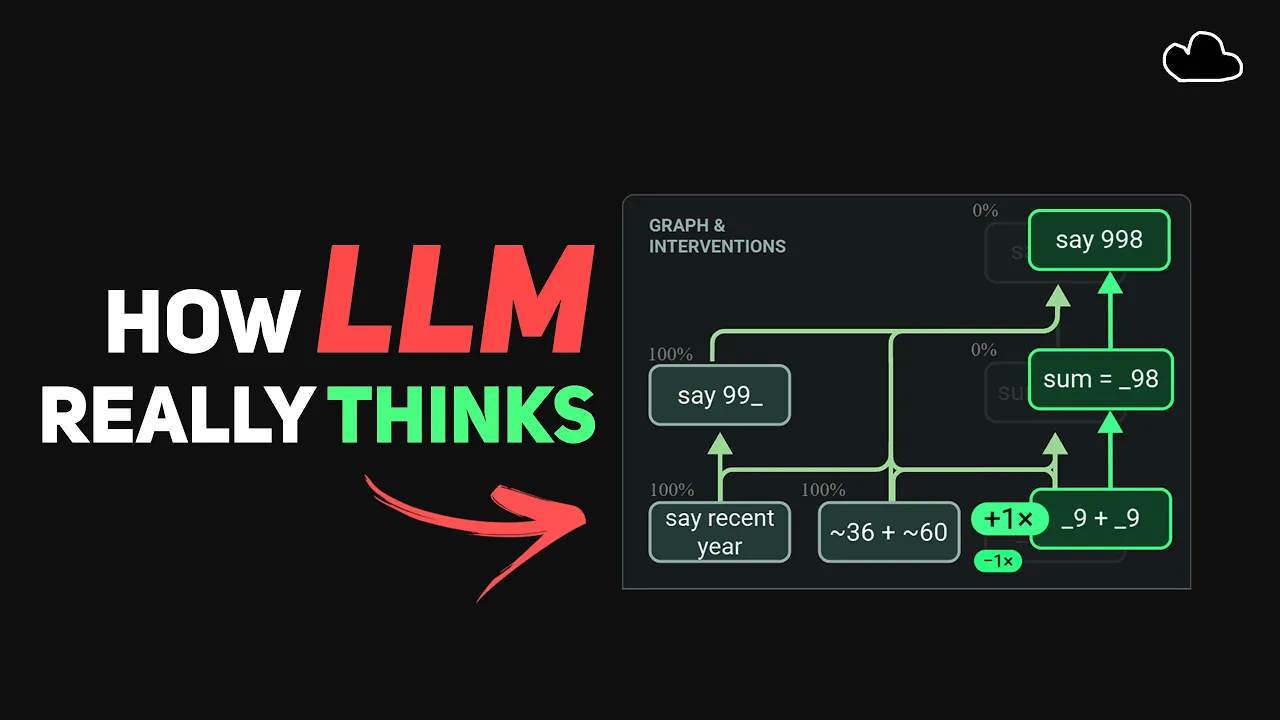

Perhaps most revealing is Anthropic's research titled "On the Biology of a Large Language Model," which used circuit tracing to observe how LLMs actually solve arithmetic problems internally. When solving a problem like 36 + 59, different parts of the neural network activated simultaneously rather than sequentially—some focusing on the last digits (6+9), others estimating the general magnitude of the answer, and some acting like memory nodes with pre-calculated information.
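The parallel pathways can be mimicked with a toy Python sketch. This illustrates the idea only and is not the circuit Anthropic traced: the rounding-to-5 "magnitude" path and the window trick used to merge it with the ones-digit path are our own assumptions. Each operand is off by at most 2 after rounding, so the estimate lands within 4 of the true sum. Any 10 consecutive integers contain exactly one number with a given last digit.

```python
def round_to_5(x: int) -> int:
    """Round a non-negative integer to the nearest multiple of 5 (error at most 2)."""
    return ((x + 2) // 5) * 5


def coarse_magnitude(a: int, b: int) -> int:
    """Low-precision path: a fuzzy estimate within +/-4 of a + b."""
    return round_to_5(a) + round_to_5(b)


def last_digit(a: int, b: int) -> int:
    """High-precision path: looks only at the ones digits."""
    return (a % 10 + b % 10) % 10


def combine(a: int, b: int) -> int:
    """Merge the paths: pick the number near the estimate with the right last digit."""
    estimate = coarse_magnitude(a, b)
    digit = last_digit(a, b)
    # range(estimate - 4, estimate + 6) covers 10 consecutive integers: it
    # contains the true sum and exactly one number ending in `digit`.
    for candidate in range(estimate - 4, estimate + 6):
        if candidate % 10 == digit:
            return candidate
    raise AssertionError("unreachable: every 10-wide window has each last digit")


if __name__ == "__main__":
    for a, b in [(36, 59), (38, 59)]:
        print(f"{a} + {b}: estimate {coarse_magnitude(a, b)}, "
              f"ones digit {last_digit(a, b)}, answer {combine(a, b)}")
    # 36 + 59: estimate 95, ones digit 5, answer 95
    # 38 + 59: estimate 100, ones digit 7, answer 97
```

Neither path alone is enough in general. For 38 + 59 the estimate is off by 3, and the ones digit alone says nothing about magnitude, yet together they pin down 97 without any carrying step.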

How LLMs Really Process Information

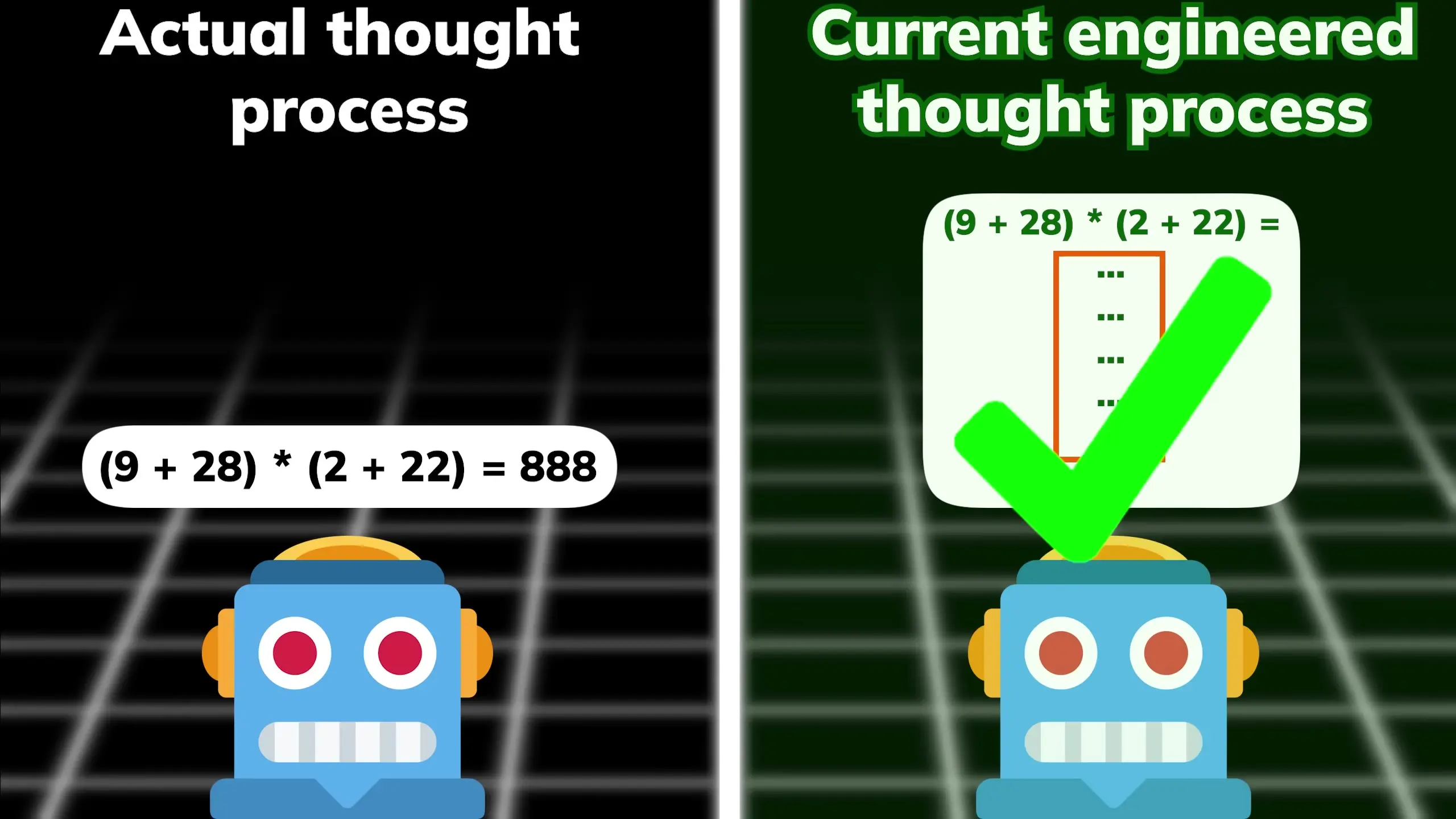

Unlike the linear, sequential reasoning that humans typically use, and that LLMs appear to display in their outputs, these models actually solve problems through parallel, distributed computation. When asked afterward to explain their reasoning, they generate plausible step-by-step accounts that don't reflect that internal computation. Asked how it added 36 + 59, for example, the model described the familiar schoolbook method of adding the ones, carrying the 1, and then adding the tens.
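For contrast, the sequential procedure that such after-the-fact explanations describe, working right to left and carrying into the next column, looks like this in Python. It is a minimal sketch of ordinary schoolbook addition for non-negative integers. It is included only to show the step-by-step story a model tells, not anything the model computes internally.

```python
def schoolbook_add(a: int, b: int) -> tuple[int, list[str]]:
    """Add two non-negative integers column by column, recording each step."""
    result, place, carry = 0, 1, 0
    steps: list[str] = []
    while a > 0 or b > 0 or carry:
        da, db = a % 10, b % 10           # digits in the current column
        incoming = carry
        total = da + db + incoming
        digit, carry = total % 10, total // 10
        steps.append(f"{da} + {db} + {incoming} = {total}: write {digit}, carry {carry}")
        result += digit * place
        place *= 10
        a, b = a // 10, b // 10           # move one column to the left
    return result, steps


if __name__ == "__main__":
    total, steps = schoolbook_add(36, 59)
    for step in steps:
        print(step)
    print("result:", total)
```

Each step here depends on the carry from the previous one, which is exactly the strict ordering that circuit tracing did not find inside the model.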

This disconnect exists because LLMs lack metacognition—they cannot observe their own thinking processes. When they generate explanations of their reasoning, they're not reporting on how they actually arrived at an answer but rather constructing a human-like explanation that seems reasonable based on their training data.

Test-Time Compute vs. True Reasoning

The AI research community has developed techniques such as chain-of-thought prompting, and more broadly the practice of spending additional "test-time compute," to improve LLM performance on complex reasoning tasks. These methods have the model generate intermediate tokens before its final answer, which has been widely interpreted as helping it "think through" problems.

However, research now suggests that the benefits of these techniques might not come from the logical content of the intermediate steps at all. On some tasks, the mere act of generating additional tokens, even meaningless ones, seems to provide similar performance benefits. This suggests that what we've been calling "thinking" is something quite different from human reasoning.
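One interpretation discussed in this line of work is that every generated token, meaningful or not, buys the model another forward pass of computation. The rough Python sketch below makes that concrete using the common rule of thumb of about 2N FLOPs per token for a dense transformer with N parameters. The 7B parameter count and the token counts are hypothetical, and the attention term that grows with context length is ignored.

```python
def forward_flops(n_params: float, n_tokens: int) -> float:
    """Approximate forward-pass FLOPs to process n_tokens positions.

    Rule of thumb: ~2 * N FLOPs per token for a dense transformer with N
    parameters. The context-length-dependent attention term is ignored.
    """
    return 2.0 * n_params * n_tokens


if __name__ == "__main__":
    n_params = 7e9      # hypothetical 7B-parameter model
    prompt_tokens = 30  # hypothetical question length
    # The estimate depends only on how many tokens are processed, so a
    # 100-token chain of thought and 100 filler dots cost the same.
    for label, extra in [("direct answer", 0), ("100-token CoT", 100), ("100 filler dots", 100)]:
        print(f"{label:>16}: {forward_flops(n_params, prompt_tokens + extra):.2e} FLOPs")
```

Under this approximation, compute is blind to token content. That is why content-free tokens are a plausible source of the extra computation, though whether a model can actually exploit it depends on the task and on training.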

Implications for AI Development

This revelation has profound implications for AI development and alignment. If current LLMs don't actually reason the way humans do, this suggests fundamental limitations in their architecture that might make achieving true human-like intelligence—let alone superintelligence—much more challenging than previously thought.

Without metacognition and true introspective capabilities, LLMs may be limited to modeling human intelligence rather than developing novel forms of reasoning that could surpass human capabilities. They excel at aggregating and synthesizing human knowledge but may lack the cognitive architecture needed for genuine innovation beyond what's represented in their training data.

The Future of LLM Reasoning

Understanding the true nature of LLM reasoning is crucial for advancing AI capabilities. Rather than focusing exclusively on improving verbalized reasoning through test-time compute, researchers might need to develop new architectures that better support metacognition and introspection.

Some researchers suggest that the current autoregressive token prediction approach may have fundamental limitations that prevent true human-like reasoning. Future breakthroughs might require entirely new paradigms that enable models to observe and modify their own thinking processes.

Conclusion

The discovery that LLMs "think without thinking"—that they can reason effectively through internal distributed processes while generating human-like explanations that don't reflect those processes—fundamentally changes how we should understand these systems. They are not simply scaled-up versions of human cognition but rather alien forms of intelligence with their own unique properties and limitations.

As we continue to develop and deploy these powerful AI systems, maintaining a clear-eyed understanding of how they actually work—rather than how they appear to work—will be essential for both maximizing their benefits and addressing their limitations. The journey toward truly understanding machine intelligence has only just begun, and it's already challenging our most basic assumptions about what it means to think.
