The Vercel AI SDK is a TypeScript library for integrating AI capabilities into applications. It provides a standardized way to work with large language models (LLMs) without getting bogged down in provider-specific implementation details. This guide walks through the core features you need to start building with it: text generation, streaming, system prompts, structured outputs, conversation history, and embeddings.

What is the Vercel AI SDK?

The Vercel AI SDK is an open-source library that provides a unified interface for working with various LLM providers such as OpenAI and Anthropic. It abstracts away the complexities of different API implementations, allowing you to switch between models seamlessly without rewriting your application logic.

Key features of the Vercel AI SDK include:

- Provider-agnostic API for easy model switching

- Text streaming capabilities for real-time responses

- Structured output handling for type-safe data

- Tool calling for extending AI capabilities

- Agent building for complex, multi-step tasks

- Image and file handling for multimodal applications

The SDK consists of three main components: the core package for backend code, the UI package for frontend integration, and the RSC package for React Server Components. In this guide, we'll focus primarily on the core functionality.

Getting Started with Vercel AI SDK

To begin using the Vercel AI SDK, you'll need to install the core package along with any model-specific providers you want to use:

```bash
npm install ai
npm install @ai-sdk/anthropic # for Anthropic models
npm install @ai-sdk/openai # for OpenAI models
```

Once installed, you can import the necessary functions and start building your AI-powered application.

Basic Text Generation

The most fundamental operation in the Vercel AI SDK is generating text. Let's look at a simple example using Anthropic's Haiku model:

```typescript
import { generateText } from 'ai';
import { anthropic } from '@ai-sdk/anthropic';

async function answerMyQuestion(prompt: string) {
  const model = anthropic('claude-3-haiku-20240307');

  const { text } = await generateText({
    model,
    prompt
  });

  return text;
}

// Example usage
const answer = await answerMyQuestion('What is the chemical formula for dihydrogen monoxide?');
console.log(answer); // Output: "The chemical formula for dihydrogen monoxide is H2O"
```

In this example, we import the `generateText` function from the AI SDK and the Anthropic provider. We then create a function that takes a prompt, passes it to the model, and returns the generated text. The model is specified by calling the provider function with the specific model name.

Streaming Text Responses

For a more interactive experience, you can stream the model's response token by token using the `streamText` function:

```typescript
import { streamText } from 'ai';
import { anthropic } from '@ai-sdk/anthropic';

async function streamResponse(prompt: string) {
  const model = anthropic('claude-3-haiku-20240307');

  const { textStream, text } = await streamText({
    model,
    prompt
  });

  // Process the stream token by token
  for await (const chunk of textStream) {
    process.stdout.write(chunk);
  }

  // You can also access the complete text after streaming
  return await text;
}

streamResponse('What is the color of the sun?');
```

The `streamText` function returns both a `textStream` for processing chunks as they arrive and a `text` promise that resolves to the complete response. This gives you flexibility in how you handle the model's output.
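To see the consumption pattern in isolation, here is a minimal sketch that uses a hand-written async generator in place of a real `textStream`. The names `fakeTextStream` and `collectChunks` are hypothetical and not part of the SDK; the only assumption is that the stream is an async iterable of strings.

```typescript
// Hypothetical stand-in for a model's text stream: yields chunks one at a time.
async function* fakeTextStream(): AsyncGenerator<string> {
  for (const chunk of ['The ', 'sun ', 'is ', 'white.']) {
    yield chunk;
  }
}

// Consume any async iterable of string chunks, handing each chunk to a
// callback as it arrives, and return the concatenated text at the end.
async function collectChunks(
  stream: AsyncIterable<string>,
  onChunk: (chunk: string) => void = () => {}
): Promise<string> {
  let fullText = '';
  for await (const chunk of stream) {
    onChunk(chunk);
    fullText += chunk;
  }
  return fullText;
}

// Example usage: record each chunk in arrival order, then read the full text.
const seenChunks: string[] = [];
const collected: Promise<string> = collectChunks(fakeTextStream(), (chunk) => {
  seenChunks.push(chunk);
});
```

Because `collectChunks` accepts any `AsyncIterable<string>`, the same function could be handed a real stream or a fake one in a test.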

Using System Prompts

System prompts allow you to give the model persistent instructions about how to behave. This is useful for defining the model's role, tone, or constraints:

```typescript
import { generateText } from 'ai';
import { anthropic } from '@ai-sdk/anthropic';

async function summarizeText(text: string) {
  const model = anthropic('claude-3-haiku-20240307');

  const { text: summary } = await generateText({
    model,
    system: "You are a text summarizer. Condense the provided text into a concise summary while preserving the key points.",
    prompt: text
  });

  return summary;
}

// Alternative approach using messages array
async function summarizeTextWithMessages(text: string) {
  const model = anthropic('claude-3-haiku-20240307');

  const { text: summary } = await generateText({
    model,
    messages: [
      { role: 'system', content: "You are a text summarizer. Condense the provided text into a concise summary while preserving the key points." },
      { role: 'user', content: text }
    ]
  });

  return summary;
}
```

Both approaches achieve the same result, but the messages array gives you more flexibility for complex conversations.

Model Flexibility and Dependency Injection

One of the most powerful features of the Vercel AI SDK is its ability to abstract away model-specific implementation details. This allows you to easily switch between different providers or models:

```typescript
import { generateText, type LanguageModel } from 'ai';
import { anthropic } from '@ai-sdk/anthropic';
import { openai } from '@ai-sdk/openai';

async function ask(prompt: string, model: LanguageModel) {
  const { text } = await generateText({
    model,
    prompt
  });

  return text;
}

// Use with Anthropic
const claudeModel = anthropic('claude-3-haiku-20240307');
const claudeResponse = await ask("What is the capital of France?", claudeModel);

// Use with OpenAI
const gptModel = openai('gpt-4');
const gptResponse = await ask("What is the capital of France?", gptModel);
```

This pattern allows for dependency injection, making your code more testable and adaptable to changing requirements. You can easily switch models or even mock them for testing purposes.
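One way to get the testing benefit without calling a provider is to depend on a narrow function type and inject a fake in tests. The sketch below deliberately does not use the SDK's `LanguageModel` type; `GenerateFn`, `makeAsk`, and `fakeGenerate` are hypothetical names introduced for illustration, and in application code a `GenerateFn` could be written as a thin wrapper around `generateText`.

```typescript
// Hypothetical narrow dependency: anything that turns a prompt into text.
type GenerateFn = (prompt: string) => Promise<string>;

// Build an `ask` function around whichever generator is injected.
function makeAsk(generate: GenerateFn) {
  return async (question: string): Promise<string> => {
    const answer = await generate(question);
    return answer.trim();
  };
}

// Test double: returns a canned answer and records the prompts it received.
const receivedPrompts: string[] = [];
const fakeGenerate: GenerateFn = async (prompt) => {
  receivedPrompts.push(prompt);
  return '  Paris  ';
};

// Example usage: no network call is made, so the result is deterministic.
const askWithFake = makeAsk(fakeGenerate);
const fakeAnswer: Promise<string> = askWithFake('What is the capital of France?');
```

The trade-off of this design is an extra layer between your code and the SDK, in exchange for tests that run offline and return predictable values.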

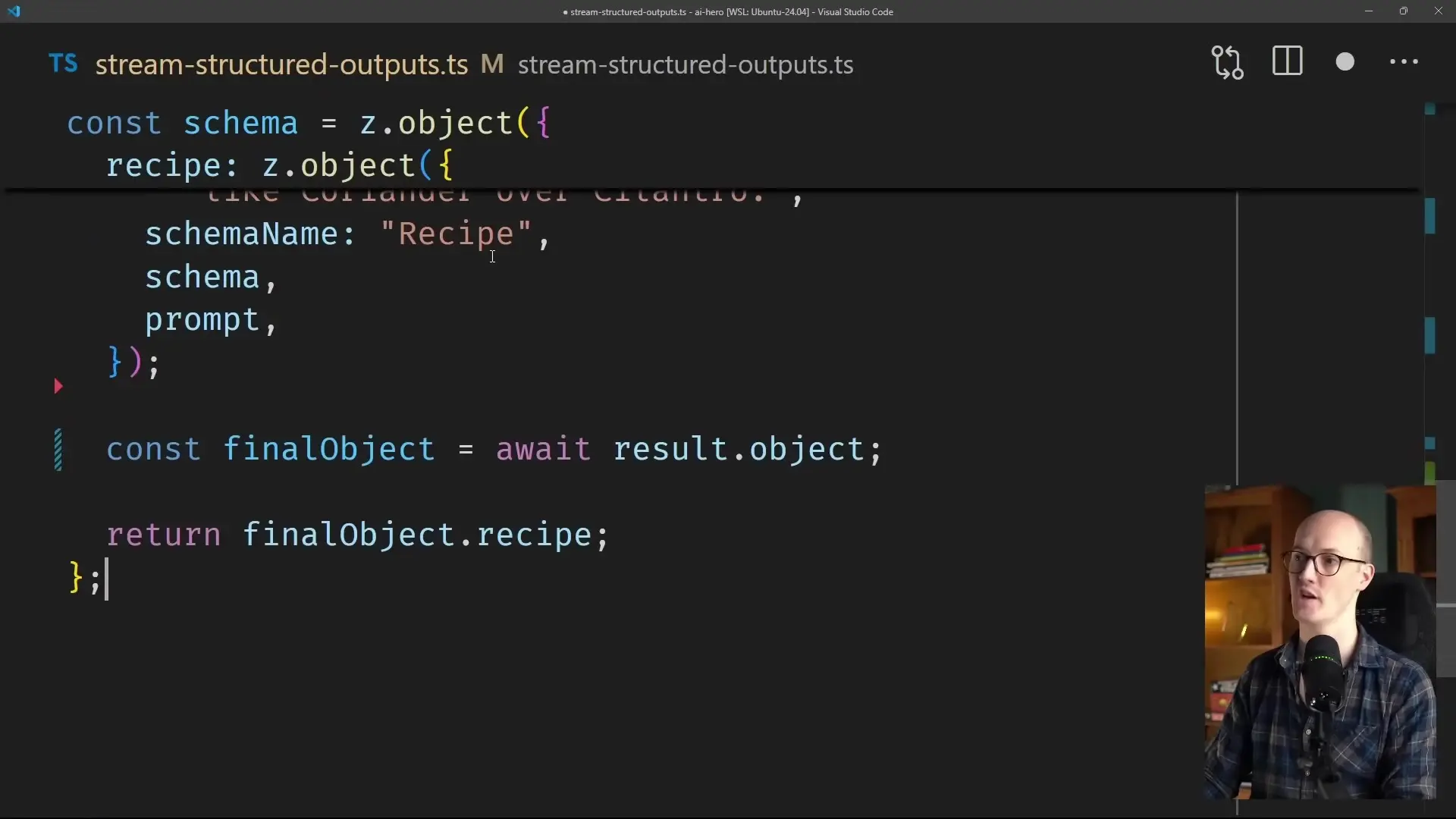

Working with Structured Outputs

When working with AI, you often need structured data rather than free-form text. The Vercel AI SDK provides tools for defining schemas and extracting structured information from model responses:

```typescript
import { generateObject } from 'ai';
import { anthropic } from '@ai-sdk/anthropic';
import { z } from 'zod';

// Define a schema for the structured output
const RecipeSchema = z.object({
  title: z.string(),
  ingredients: z.array(z.string()),
  steps: z.array(z.string()),
  prepTime: z.number().min(0),
  cookTime: z.number().min(0)
});

async function generateRecipe(prompt: string) {
  const model = anthropic('claude-3-haiku-20240307');

  // The validated result is returned on the `object` property
  const { object } = await generateObject({
    model,
    prompt,
    schema: RecipeSchema
  });

  return object;
}

const recipe = await generateRecipe("Create a simple pasta recipe");
console.log(recipe.title);
console.log(recipe.ingredients);
// Access other properties in a type-safe manner
```

The `generateObject` function takes a schema defined with Zod and validates the model's response against it, returning the parsed result on the `object` property. This gives you type safety and predictable outputs, making it easier to integrate AI into your application.
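To make concrete what conforming to the recipe structure means, the following sketch checks roughly the same shape by hand, without Zod or the SDK. `Recipe`, `isRecipe`, and the sample objects are hypothetical and written only for illustration; a real schema library also reports which field failed, which this boolean check does not.

```typescript
interface Recipe {
  title: string;
  ingredients: string[];
  steps: string[];
  prepTime: number;
  cookTime: number;
}

const isStringArray = (value: unknown): value is string[] =>
  Array.isArray(value) && value.every((item) => typeof item === 'string');

// NaN fails the `>= 0` comparison, so it is rejected as well.
const isNonNegativeNumber = (value: unknown): value is number =>
  typeof value === 'number' && value >= 0;

// Hand-written approximation of the recipe schema: true only if every field
// is present with the expected type and both times are non-negative.
function isRecipe(value: unknown): value is Recipe {
  if (typeof value !== 'object' || value === null) return false;
  const candidate = value as Record<string, unknown>;
  return (
    typeof candidate.title === 'string' &&
    isStringArray(candidate.ingredients) &&
    isStringArray(candidate.steps) &&
    isNonNegativeNumber(candidate.prepTime) &&
    isNonNegativeNumber(candidate.cookTime)
  );
}

// Example usage with one well-formed and one malformed candidate.
const validResult = isRecipe({
  title: 'Aglio e olio',
  ingredients: ['spaghetti', 'garlic', 'olive oil'],
  steps: ['Boil the pasta', 'Toss with garlic and oil'],
  prepTime: 5,
  cookTime: 10
}); // true

const invalidResult = isRecipe({
  title: 'Broken',
  ingredients: 'pasta', // not an array
  steps: [],
  prepTime: -1, // negative
  cookTime: 10
}); // false
```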

Handling Conversation History

For chat applications, you need to maintain conversation history to provide context for the model. The Vercel AI SDK makes this straightforward:

```typescript
// CoreMessage is the message type in the 3.x/4.x SDK releases;
// newer releases name it ModelMessage.
import { generateText, type CoreMessage } from 'ai';
import { anthropic } from '@ai-sdk/anthropic';

async function chatWithHistory(messages: CoreMessage[]) {
  const model = anthropic('claude-3-haiku-20240307');

  const { text } = await generateText({
    model,
    messages
  });

  // Add the assistant's response to the history
  messages.push({ role: 'assistant', content: text });

  return {
    response: text,
    updatedHistory: messages
  };
}

// Example usage
let conversationHistory: CoreMessage[] = [
  { role: 'system', content: 'You are a helpful assistant.' },
  { role: 'user', content: 'Hello, who are you?' }
];

const { response, updatedHistory } = await chatWithHistory(conversationHistory);
console.log(response);

// Continue the conversation
conversationHistory = updatedHistory;
conversationHistory.push({ role: 'user', content: 'What can you help me with?' });

const nextTurn = await chatWithHistory(conversationHistory);
```

By passing the conversation history as an array of messages, you allow the model to understand the context of the conversation, enabling more coherent and contextually relevant responses.
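Note that `chatWithHistory` mutates the array it receives, which can surprise callers that hold a reference to the same history. A minimal alternative sketch, using a local `ChatMessage` type and a hypothetical `appendMessage` helper (neither is part of the SDK), returns a new array for each turn instead:

```typescript
// Local message shape for illustration only; the SDK exports its own types.
type ChatMessage = { role: 'system' | 'user' | 'assistant'; content: string };

// Return a new history with the message appended; the input array is untouched.
function appendMessage(
  history: readonly ChatMessage[],
  message: ChatMessage
): ChatMessage[] {
  return [...history, message];
}

const initialHistory: ChatMessage[] = [
  { role: 'system', content: 'You are a helpful assistant.' },
  { role: 'user', content: 'Hello, who are you?' }
];

// The assistant text is a hard-coded placeholder standing in for a model response.
const afterReply = appendMessage(initialHistory, {
  role: 'assistant',
  content: 'I am an AI assistant.'
});

const afterNextQuestion = appendMessage(afterReply, {
  role: 'user',
  content: 'What can you help me with?'
});
```

Each intermediate history stays available unchanged, which makes it easier to retry a turn or store snapshots of the conversation.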

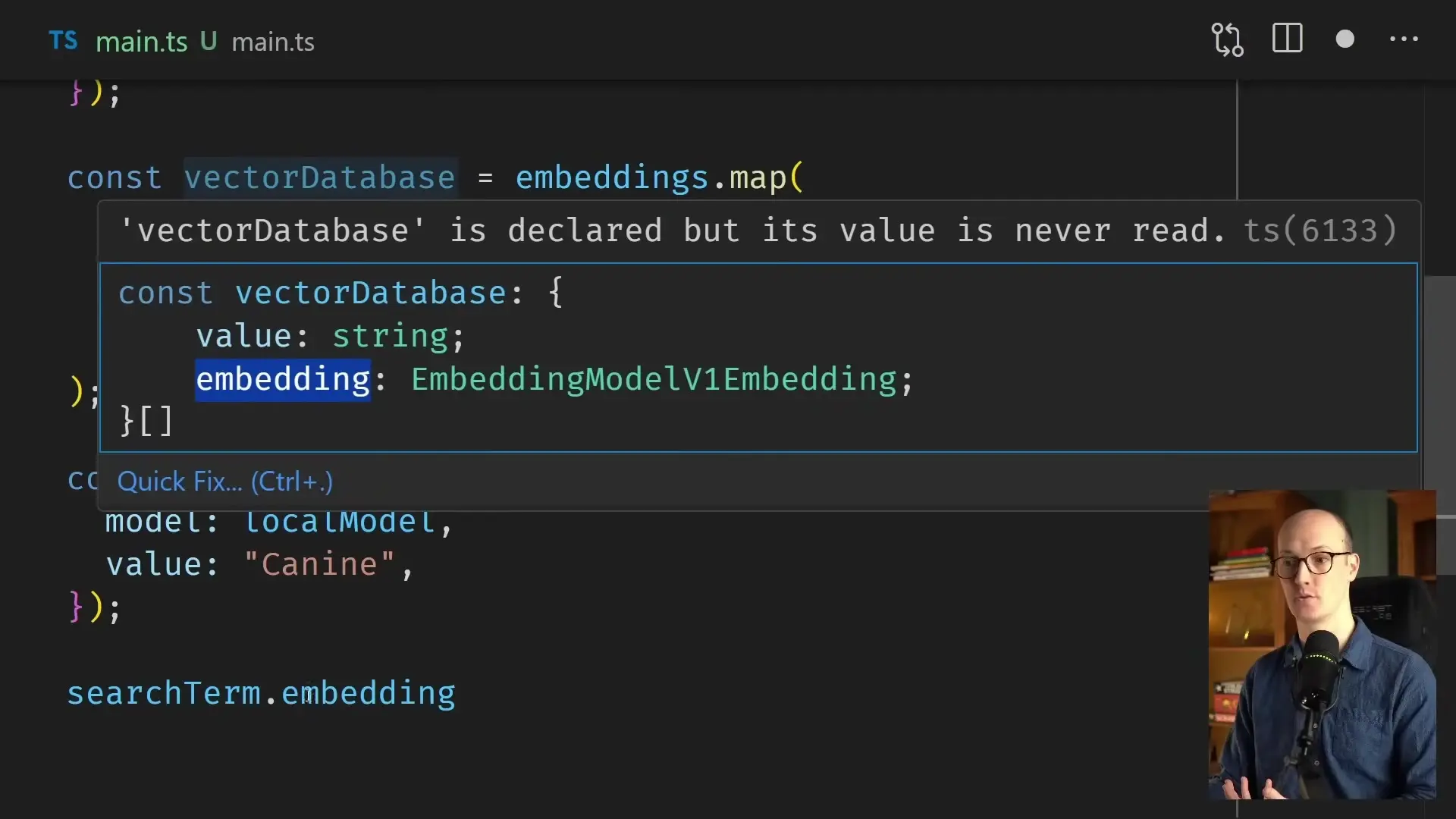

Working with Vector Databases and Embeddings

For more advanced applications, you might need to work with embeddings and vector databases for semantic search or retrieval-augmented generation:

```typescript
// The SDK's embedding function is `embed`; it also exports `cosineSimilarity`
import { embed, cosineSimilarity } from 'ai';
import { openai } from '@ai-sdk/openai';

async function createEmbedding(text: string) {
  // Embedding models are created with openai.embedding(), not openai()
  const model = openai.embedding('text-embedding-3-small');

  const { embedding } = await embed({
    model,
    value: text
  });

  return embedding;
}

// Example usage with a simple in-memory vector store
const documents = [
  { id: 1, text: "Introduction to AI and machine learning concepts" },
  { id: 2, text: "Advanced techniques in natural language processing" },
  { id: 3, text: "Building recommendation systems with collaborative filtering" }
];

// Create embeddings for all documents
const documentEmbeddings = await Promise.all(
  documents.map(async (doc) => ({
    ...doc,
    embedding: await createEmbedding(doc.text)
  }))
);

// Function to find similar documents
async function findSimilarDocuments(query: string, topK: number = 2) {
  const queryEmbedding = await createEmbedding(query);

  // Score each document by cosine similarity to the query
  const scoredDocuments = documentEmbeddings.map(doc => ({
    ...doc,
    score: cosineSimilarity(queryEmbedding, doc.embedding)
  }));

  // Return top K documents
  return scoredDocuments
    .sort((a, b) => b.score - a.score)
    .slice(0, topK);
}
```

This example demonstrates how to generate embeddings for documents and queries and rank the documents by cosine similarity. The results can then be used for semantic search or as context for retrieval-augmented generation (RAG) applications.
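The similarity scoring step can be sketched with hand-written vectors, so the ranking is easy to verify by eye. The `cosine` function, `toyDocs`, and `toyQuery` below are hypothetical and self-contained; the 2-dimensional vectors stand in for real embeddings, which typically have hundreds or thousands of dimensions.

```typescript
// Cosine similarity: dot(a, b) / (|a| * |b|).
// Returns 0 if either vector has zero magnitude.
function cosine(a: number[], b: number[]): number {
  if (a.length !== b.length) {
    throw new Error('Vectors must have the same length');
  }
  let dot = 0;
  let normA = 0;
  let normB = 0;
  for (let i = 0; i < a.length; i++) {
    dot += a[i] * b[i];
    normA += a[i] * a[i];
    normB += b[i] * b[i];
  }
  if (normA === 0 || normB === 0) return 0;
  return dot / (Math.sqrt(normA) * Math.sqrt(normB));
}

// Toy 2-dimensional "embeddings", hand-written for illustration.
const toyDocs = [
  { id: 1, embedding: [1, 0] },
  { id: 2, embedding: [0, 1] },
  { id: 3, embedding: [1, 1] }
];
const toyQuery = [2, 0];

// Score every document against the query and sort from most to least similar.
const ranked = toyDocs
  .map((doc) => ({ id: doc.id, score: cosine(toyQuery, doc.embedding) }))
  .sort((a, b) => b.score - a.score);

const rankedIds = ranked.map((entry) => entry.id); // [1, 3, 2]
```

Document 1 points in the same direction as the query (score 1), document 3 is at 45 degrees (score about 0.707), and document 2 is orthogonal (score 0). The query's length does not affect the scores, because cosine similarity compares direction only.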

Conclusion: Why Choose Vercel AI SDK

The Vercel AI SDK offers several compelling advantages for developers building AI-powered applications:

- Provider-agnostic API that lets you switch between models by changing only the model you pass in

- Comprehensive support for modern AI capabilities like streaming, structured outputs, and tool calling

- Type-safe interfaces that improve developer experience and reduce errors

- Simple yet powerful abstractions that handle complex operations with minimal code

- Open-source and free to use; the only usage costs are those charged by the model providers you call

Whether you're building a simple chatbot or a complex AI agent, the Vercel AI SDK provides the right level of abstraction to make development faster, more maintainable, and more flexible. By standardizing how you interact with different AI models, it allows you to focus on building features rather than wrestling with provider-specific APIs.

As AI continues to evolve rapidly, having a flexible foundation like the Vercel AI SDK becomes increasingly valuable. It allows you to experiment with different models, adapt to new capabilities, and scale your applications without major rewrites. For TypeScript developers looking to incorporate AI into their applications, the Vercel AI SDK is an essential tool that significantly simplifies the development process.

