The Model Context Protocol (MCP) has emerged as a significant development in the AI landscape, particularly for developers working with Large Language Models (LLMs). Although it is often dismissed as a buzzword, MCP addresses a real problem: how tools are described to, and used by, AI applications built on LLMs. But to understand MCP's value, we first need to grasp how LLMs actually work with tools.

The Fundamentals of LLMs: Token Generators

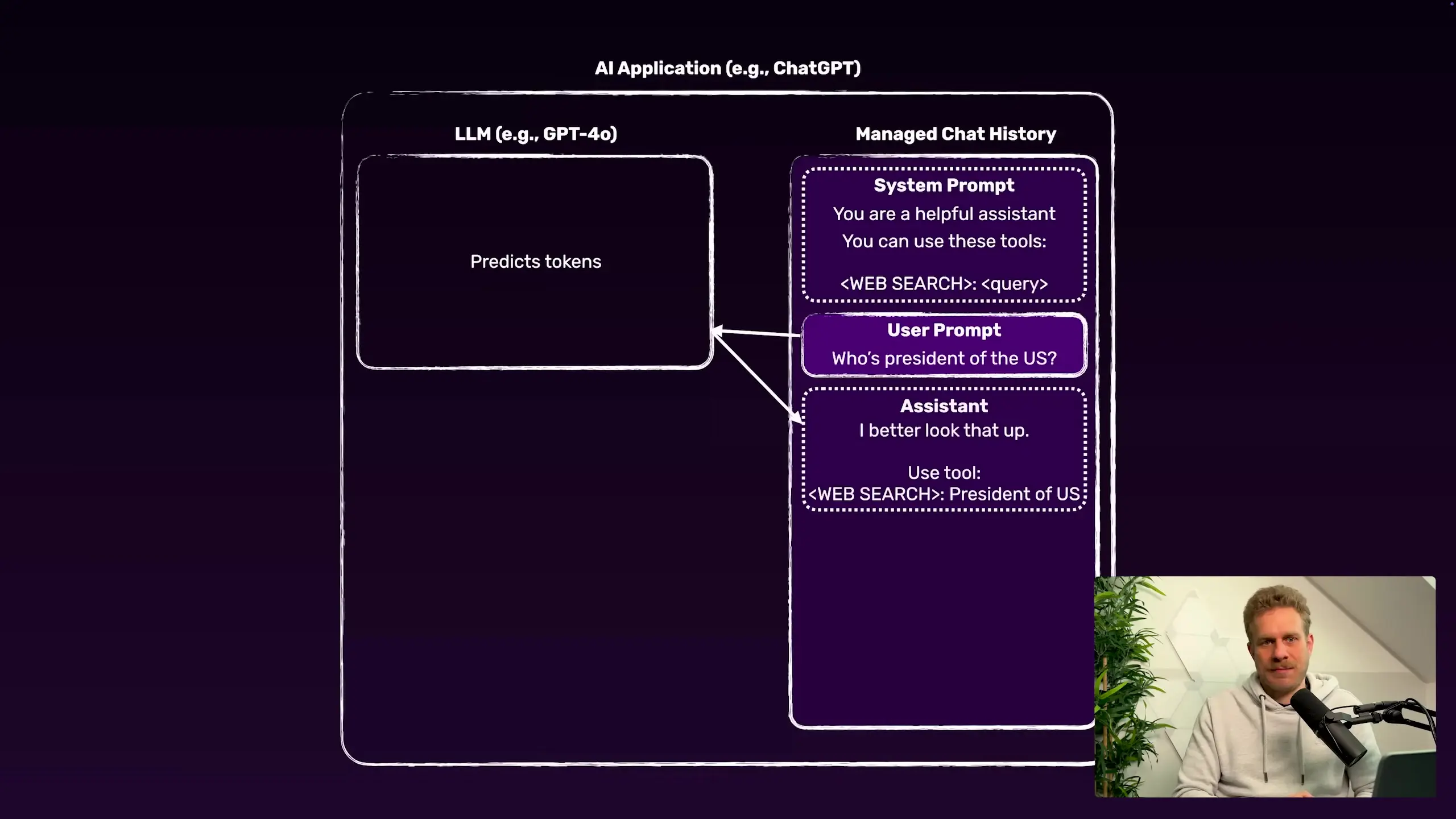

At their core, LLMs are simply token generators. They produce text (tokens) based on input they receive, with tokens representing words or parts of words. This fundamental truth is crucial to understand: LLMs themselves don't have inherent abilities to search the web, run code, or access external systems. They merely generate text responses based on patterns they've learned during training.
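To make the "token generator" idea concrete, here is a deliberately tiny sketch. The lookup table `NEXT_TOKEN` is a made-up stand-in for a model (a real LLM computes a probability distribution over a large vocabulary), but the loop has the same shape: tokens in, one more token out, and nothing else happens.

```javascript
// Toy stand-in for a model: maps the previous token to the next token.
// This table is purely illustrative and is not how a real LLM stores knowledge.
const NEXT_TOKEN = new Map([
  ["<start>", "The"],
  ["The", " hotel"],
  [" hotel", " is"],
  [" is", " open"],
  [" open", "<end>"],
]);

// Generate tokens one at a time until the end marker or a length limit.
function generate(maxTokens = 10) {
  const tokens = [];
  let current = "<start>";
  while (tokens.length < maxTokens) {
    const next = NEXT_TOKEN.get(current) ?? "<end>";
    if (next === "<end>") break;
    tokens.push(next);
    current = next;
  }
  return tokens;
}

console.log(generate().join(""));
```

Note that nothing in `generate` can reach the network or the file system. Its only output is text, which is the point of this section.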

This reality contradicts a common misconception that modern LLMs are all-powerful agents with built-in capabilities to interact with external tools. Even the most advanced reasoning models are ultimately just sophisticated text generators.

How LLM Tools Actually Work: The Application Shell

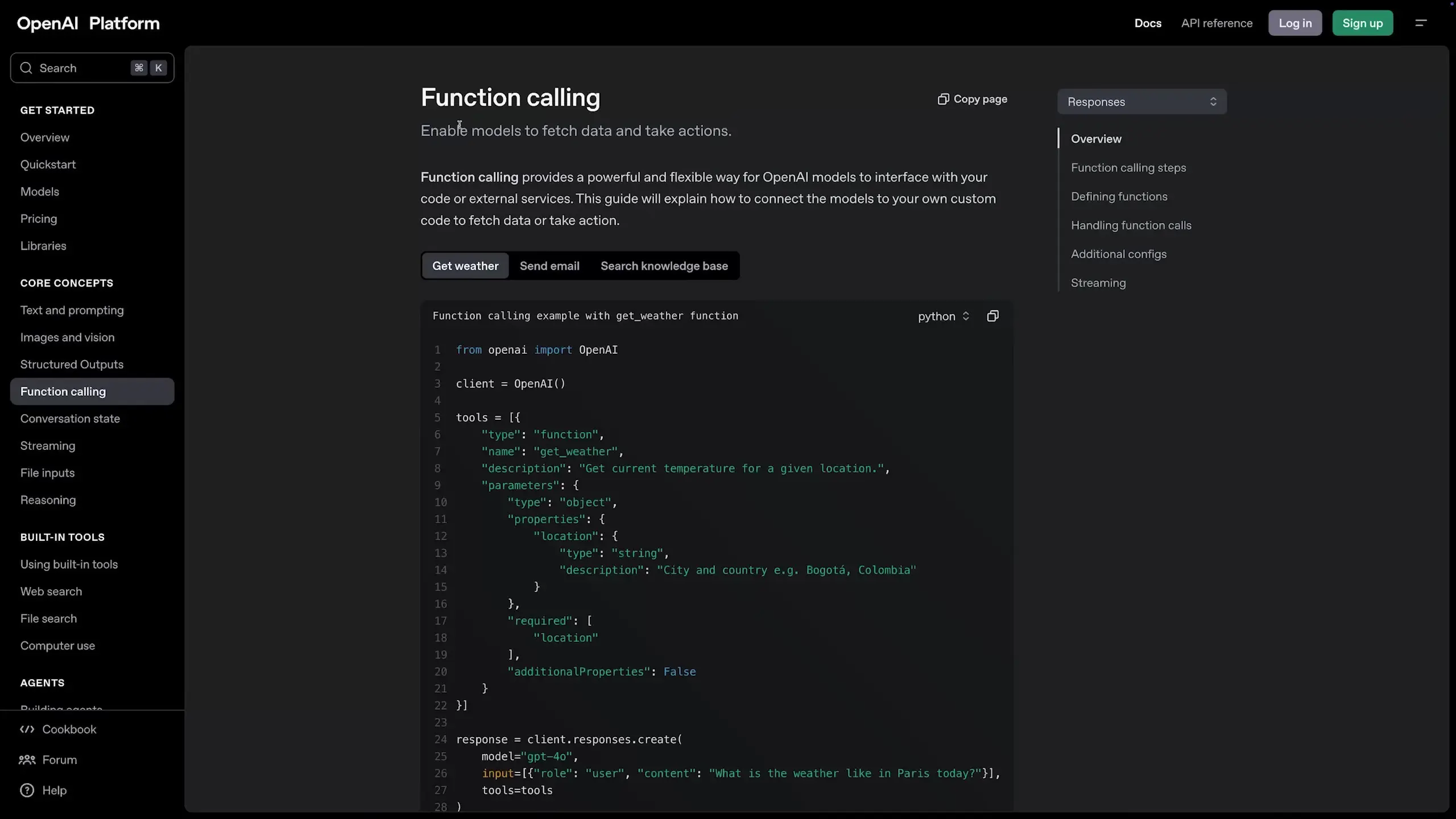

When you use ChatGPT or similar AI applications that appear to have capabilities like web search or code execution, what you're actually experiencing is an application shell built around an LLM. This application shell contains human-written code that enables tool usage through a specific process:

- The application injects a system prompt into the LLM that includes descriptions of available tools and instructions on how to use them

- When a user asks a question that might benefit from tool use, the LLM generates text containing a request to use a specific tool, written in the format the system prompt specified

- The application intercepts this tool request before showing it to the user

- Human-written code executes the requested tool function (like performing a web search)

- Results from the tool are fed back into the conversation history

- The LLM generates a new response based on the original query plus the tool results

- Only this final response is shown to the user

This process is entirely controlled by the application developers. Model fine-tuning and the system instructions both make the model more likely to generate tool-use requests in appropriate situations, but the behavior is still probabilistic: there's no guarantee the model will choose to use a tool even when it would be helpful.
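The steps above can be sketched in a few dozen lines. Everything here is an assumption made for illustration: the `TOOL_CALL` text format, the `web_search` tool, and `fakeLLM`, which stands in for a real model API call. Real applications use their own request formats, which is exactly the fragmentation discussed next.

```javascript
// Hypothetical tool registry: human-written functions the shell can run.
const tools = {
  web_search: {
    description: "Search the web. Arguments: { query: string }",
    run: ({ query }) => `Top result for "${query}": (stubbed search result)`,
  },
};

// Step 1: the system prompt describes the tools and the request format.
function buildSystemPrompt() {
  const lines = Object.entries(tools).map(
    ([name, tool]) => `- ${name}: ${tool.description}`
  );
  return [
    "You can use these tools:",
    ...lines,
    'To use one, reply with only: TOOL_CALL {"tool": "<name>", "arguments": {...}}',
  ].join("\n");
}

// Stand-in for a real model call. Like a real LLM, it only returns text.
function fakeLLM(messages) {
  const last = messages[messages.length - 1];
  if (last.role === "tool") {
    return `Based on the search: ${last.content}`;
  }
  return 'TOOL_CALL {"tool": "web_search", "arguments": {"query": "MCP"}}';
}

// Remaining steps: intercept tool requests, run them, feed results back.
function answer(userText, llm = fakeLLM, maxRounds = 3) {
  const messages = [
    { role: "system", content: buildSystemPrompt() },
    { role: "user", content: userText },
  ];
  for (let round = 0; round < maxRounds; round++) {
    const output = llm(messages);
    if (!output.startsWith("TOOL_CALL ")) {
      return output; // Only the final response reaches the user.
    }
    messages.push({ role: "assistant", content: output });
    let result;
    try {
      const request = JSON.parse(output.slice("TOOL_CALL ".length));
      const tool = tools[request.tool];
      result = tool ? tool.run(request.arguments) : `Unknown tool: ${request.tool}`;
    } catch (err) {
      result = `Invalid tool request: ${err.message}`;
    }
    messages.push({ role: "tool", content: result });
  }
  return "Sorry, I could not complete the request.";
}

console.log(answer("What is MCP?"));
```

The `maxRounds` limit is a design choice for this sketch: because tool use is probabilistic, the shell should not assume the model will ever stop asking for tools.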

The Problem MCP Solves: Tool Integration Fragmentation

Without a shared standard, every AI application has its own approach to implementing tools and describing them to LLMs. If you've developed a specialized service, such as a hotel ratings database, and want to make that knowledge available to AI applications, you face a significant challenge: you must convince each AI application developer to write integration code specifically for your service.

This creates a fragmented ecosystem where valuable specialized knowledge remains siloed and inaccessible to most AI applications unless you're a major player with negotiating power.

The MCP Solution: Standardizing Tool Integration

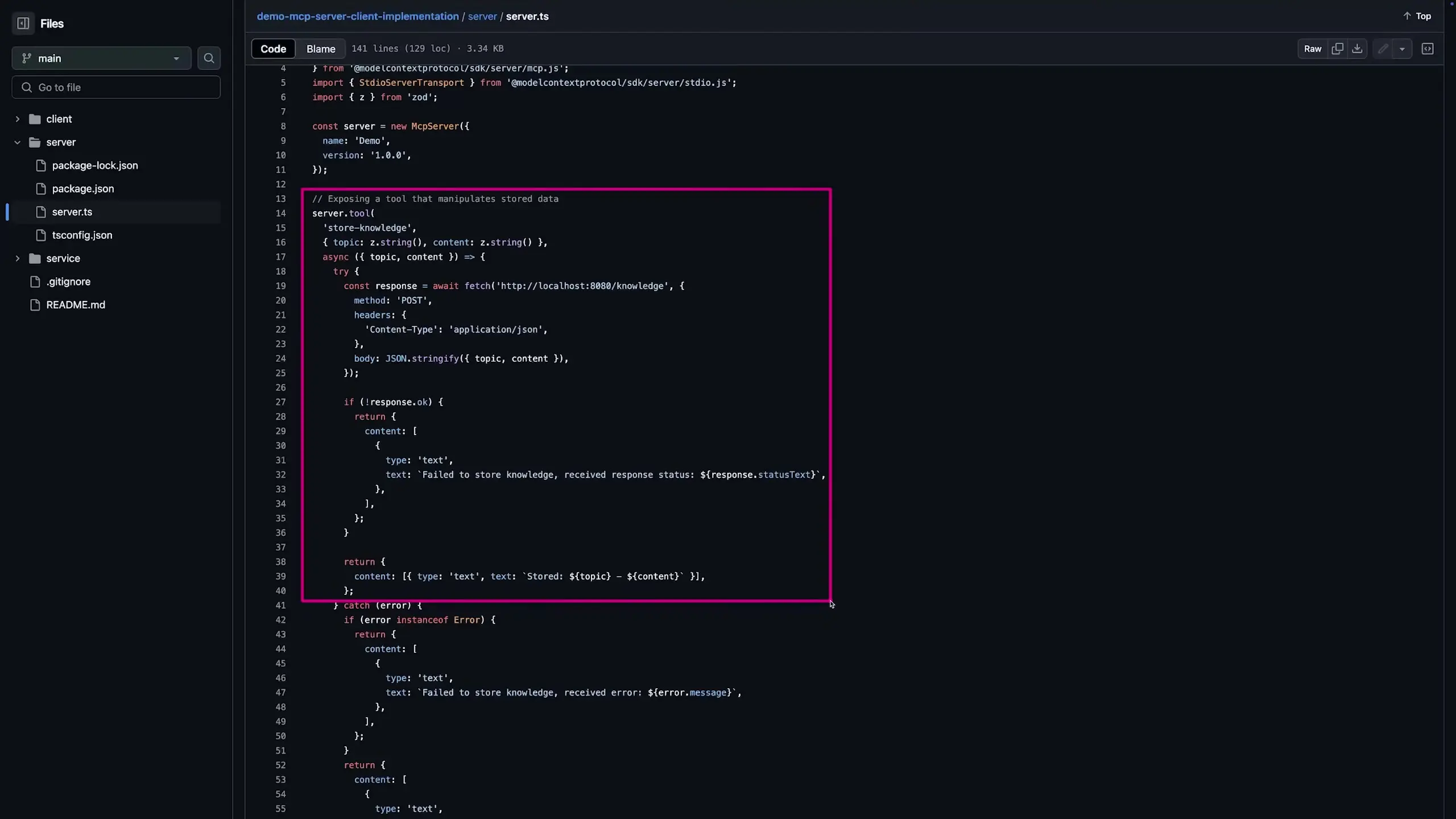

The Model Context Protocol (MCP) aims to standardize how tools are described to LLMs and how they're used. With MCP, you can build an MCP server for your specialized service that exposes your functionality in a standardized way that any MCP-compatible AI application can discover and use.

For example, if you've built that hotel ratings database, you could create an MCP server that exposes tools for retrieving ratings by hotel name or by geographic region. Because you're using the MCP standard, your tools will be described in a consistent format that AI applications can understand without custom integration code.

Building with MCP: Server and Client Implementation

Implementing MCP involves two main components:

- MCP Server: Exposes your specialized tools using the standardized MCP format

- MCP Client: An AI application that can discover and use MCP-compatible tools

Official SDKs are available to help developers build MCP-compatible servers. The protocol handles all the standardization aspects, including how tools are described, how parameters are passed, and how results are returned.

```javascript
// Example of defining a tool in an MCP server
const hotelRatingsTool = {
  name: "getHotelRatings",
  description: "Retrieves ratings for a specific hotel",
  // MCP tool definitions describe their arguments as JSON Schema under `inputSchema`
  inputSchema: {
    type: "object",
    properties: {
      hotelName: {
        type: "string",
        description: "The name of the hotel"
      },
      minRating: {
        type: "number",
        description: "Minimum rating threshold (optional)"
      }
    },
    required: ["hotelName"]
  }
};
```
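Once a tool like the one above is defined, clients discover it and invoke it through JSON-RPC 2.0 messages: `tools/list` returns the definitions and `tools/call` runs one. The following is a hand-rolled sketch of that dispatch, not the official SDK, and it leaves out the initialization handshake and the transport layer. The `RATINGS` data, the `TOOLS` map, and `handleRequest` are hypothetical names introduced for illustration.

```javascript
// Hypothetical in-memory data; a real server would query a database.
const RATINGS = new Map([
  ["Grand Plaza", [4.5, 3.0, 5.0]],
  ["Sea View Inn", [2.5, 4.0]],
]);

// Each entry pairs the definition advertised to clients with its handler.
const TOOLS = new Map([
  ["getHotelRatings", {
    definition: {
      name: "getHotelRatings",
      description: "Retrieves ratings for a specific hotel",
      inputSchema: {
        type: "object",
        properties: {
          hotelName: { type: "string", description: "The name of the hotel" },
          minRating: { type: "number", description: "Minimum rating threshold (optional)" },
        },
        required: ["hotelName"],
      },
    },
    handler({ hotelName, minRating = 0 }) {
      if (typeof hotelName !== "string") throw new Error("hotelName must be a string");
      const ratings = (RATINGS.get(hotelName) ?? []).filter((r) => r >= minRating);
      return JSON.stringify({ hotelName, ratings });
    },
  }],
]);

// Dispatch one JSON-RPC 2.0 request object and return the response object.
function handleRequest({ id, method, params = {} }) {
  const ok = (result) => ({ jsonrpc: "2.0", id, result });
  const fail = (code, message) => ({ jsonrpc: "2.0", id, error: { code, message } });

  if (method === "tools/list") {
    return ok({ tools: [...TOOLS.values()].map((t) => t.definition) });
  }
  if (method === "tools/call") {
    const tool = TOOLS.get(params.name);
    if (!tool) return fail(-32602, `Unknown tool: ${params.name}`);
    try {
      const text = tool.handler(params.arguments ?? {});
      return ok({ content: [{ type: "text", text }], isError: false });
    } catch (err) {
      // Failures inside a tool are reported in the result, not as protocol errors.
      return ok({ content: [{ type: "text", text: err.message }], isError: true });
    }
  }
  return fail(-32601, `Method not found: ${method}`);
}

const response = handleRequest({
  jsonrpc: "2.0",
  id: 1,
  method: "tools/call",
  params: { name: "getHotelRatings", arguments: { hotelName: "Grand Plaza", minRating: 4 } },
});
console.log(response.result.content[0].text);
```

In practice the official SDKs take care of this dispatch and the surrounding protocol details, so a sketch like this is mainly useful for seeing what travels over the wire.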

The Benefits of MCP for LLM Software Development

Adopting the MCP standard offers several advantages for both tool providers and AI application developers:

- Tool providers can make their specialized knowledge available to a wide range of AI applications without custom integration work

- AI application developers can discover and incorporate specialized tools without building custom integrations for each one

- End users benefit from AI applications that can access a broader range of specialized knowledge and capabilities

- The entire ecosystem becomes more open and interoperable

This standardization also gives models a consistent way to understand tool capabilities and limitations across different implementations.

Implementing MCP in Your LLM Software

If you're developing AI applications or specialized tools, implementing MCP support can significantly enhance your offering's capabilities and reach. For AI application developers, adding MCP client capabilities allows your application to discover and use a growing ecosystem of specialized tools. For tool providers, building an MCP server makes your specialized knowledge accessible to a broader range of AI applications.

The implementation process is straightforward thanks to available SDKs, and the standardized nature of MCP means you only need to implement the protocol once to make your tools or application compatible with the entire ecosystem.
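On the client side, "implement once" roughly means the following: ask each connected server for its tools, describe them to the LLM, and route each tool request to the server that owns the tool. The sketch below assumes fake in-memory servers (`makeFakeServer`) in place of real SDK connections, and the `getForecast` tool is invented purely to show a second provider.

```javascript
// Hypothetical stand-in for a connected MCP server. A real client would talk
// to the server through an SDK transport instead of a direct function call.
function makeFakeServer(toolDefinitions, handlers) {
  return {
    async request(method, params = {}) {
      if (method === "tools/list") return { tools: toolDefinitions };
      if (method === "tools/call") {
        const text = handlers[params.name](params.arguments ?? {});
        return { content: [{ type: "text", text }], isError: false };
      }
      throw new Error(`Unsupported method: ${method}`);
    },
  };
}

// Ask every server for its tools and remember which server owns which tool.
async function discoverTools(servers) {
  const registry = new Map();
  for (const server of servers) {
    const { tools } = await server.request("tools/list");
    for (const tool of tools) registry.set(tool.name, { tool, server });
  }
  return registry;
}

// Turn the discovered definitions into text for the LLM's system prompt.
function describeTools(registry) {
  return [...registry.values()]
    .map(({ tool }) => `- ${tool.name}: ${tool.description}`)
    .join("\n");
}

// Route a tool call from the LLM to the server that provides the tool.
async function callTool(registry, name, args) {
  const entry = registry.get(name);
  if (!entry) throw new Error(`Unknown tool: ${name}`);
  const result = await entry.server.request("tools/call", { name, arguments: args });
  return result.content.map((part) => part.text).join("\n");
}

async function main() {
  const hotels = makeFakeServer(
    [{ name: "getHotelRatings", description: "Retrieves ratings for a specific hotel" }],
    { getHotelRatings: ({ hotelName }) => `${hotelName}: 4.5` }
  );
  const weather = makeFakeServer(
    [{ name: "getForecast", description: "Returns a forecast for a city" }],
    { getForecast: ({ city }) => `${city}: sunny` }
  );
  const registry = await discoverTools([hotels, weather]);
  console.log(describeTools(registry));
  console.log(await callTool(registry, "getForecast", { city: "Lisbon" }));
}

main();
```

The client code never mentions hotels or weather outside of `main`. Adding a third provider means connecting one more server, with no new integration logic.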

Conclusion: The Future of LLM Tool Integration

The Model Context Protocol represents an important step toward a more open, interoperable AI ecosystem. By standardizing how LLM tools are described and used, MCP addresses a fundamental challenge in making specialized knowledge accessible to AI applications.

As the MCP ecosystem grows, we can expect to see a proliferation of specialized tools becoming available to AI applications, enhancing their capabilities and making them more useful across a wider range of domains. For developers working with LLMs, understanding and implementing MCP will be an increasingly valuable skill in building more capable and versatile AI applications.
