Meta is pursuing research that much of the AI community has overlooked. Its work on Large Concept Models (LCMs) represents a fundamental shift in how AI systems process information and reason about the world. While recent advances in AI have focused on making language models better at generating text, Meta's research targets a core limitation: it aims to teach AI to think in abstract concepts rather than restricting it to word-based reasoning.

The Evolution of AI Thinking Models

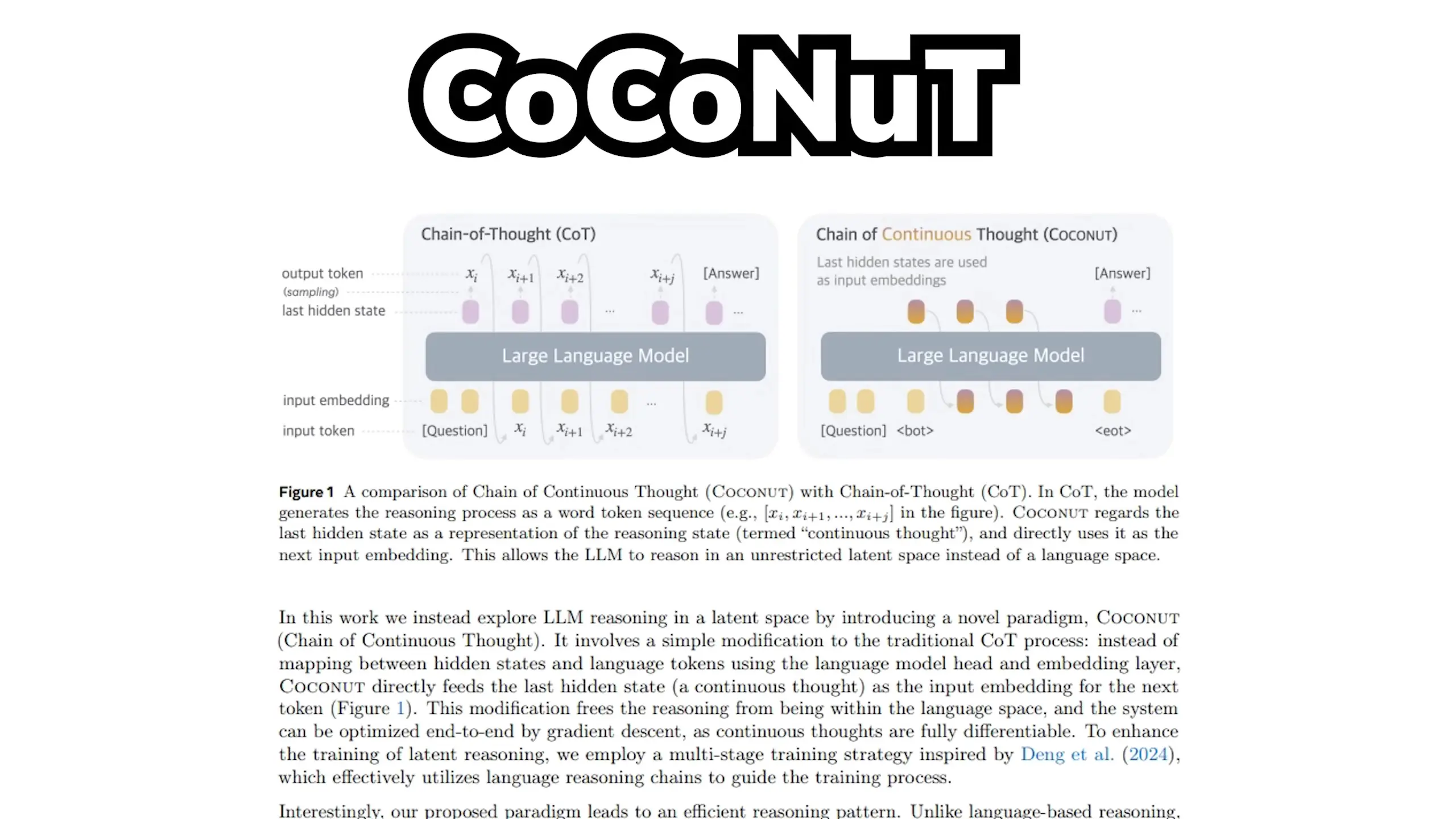

The AI research community has been exploring various approaches to improve how language models "think." Huginn, for example, introduced a recurrent "thinking" block that lets a language model iterate internally, simulating reasoning without generating unnecessary tokens. Another approach, Coconut (Chain of Continuous Thought), lets models reason with continuous latent representations instead of word tokens to enhance their reasoning capabilities.

However, these approaches faced a fundamental inconsistency: models primarily trained on words were suddenly expected to think in abstract ways. This disconnect limited their effectiveness. Meta's research addresses this problem at its root by developing base models that can think abstractly from the ground up.

Introducing Large Concept Models

In December 2024, Meta researchers introduced Large Concept Models (LCMs), designed to overcome the limitations of language-only models by incorporating concept-based reasoning alongside traditional language processing. The goal is simple to state but ambitious: enable models to think in abstract ideas rather than being limited to words.

The challenge lies in implementing this approach when most training data exists as text. Meta's solution leverages an existing AI research technique in a novel way.

Sparse Autoencoders: From Interpretability Tool to Training Component

The key innovation in Meta's approach comes from repurposing Sparse Autoencoders (SAEs), which are best known as a tool for mechanistic interpretability, the field that studies how AI models organize information internally.

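What makes SAEs useful is sparsity. An SAE re-encodes a model's internal activations into a much larger set of features, yet only a handful of those features are active for any given input, unlike the dense activations of a typical neural network layer. Because each input is explained by just a few features, researchers can often match individual features to specific, human-interpretable concepts.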
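To make the sparsity idea concrete, here is a minimal, untrained sketch of a top-k sparse autoencoder in plain Python. The class name `TopKSAE`, the dimensions, and the top-k activation rule are illustrative assumptions (top-k is one common way to enforce sparsity; an L1 penalty is another), not Meta's implementation. A real SAE would be trained to reconstruct a model's hidden states; here the weights are random, so only the structure is meaningful.

```python
import random


def matvec(W, x):
    """Multiply a matrix W (list of rows) by a vector x."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def top_k_relu(z, k):
    """Keep the k largest pre-activations (clamped at zero) and zero out the rest."""
    keep = set(sorted(range(len(z)), key=lambda i: z[i], reverse=True)[:k])
    return [max(z[i], 0.0) if i in keep else 0.0 for i in range(len(z))]


class TopKSAE:
    """Toy SAE: d-dim hidden state -> n_latents sparse codes -> d-dim reconstruction."""

    def __init__(self, d, n_latents, k, seed=0):
        rng = random.Random(seed)
        self.k = k
        # Random weights stand in for weights learned by minimizing reconstruction error.
        self.W_enc = [[rng.gauss(0, 0.1) for _ in range(d)] for _ in range(n_latents)]
        self.W_dec = [[rng.gauss(0, 0.1) for _ in range(n_latents)] for _ in range(d)]

    def encode(self, h):
        return top_k_relu(matvec(self.W_enc, h), self.k)

    def decode(self, codes):
        return matvec(self.W_dec, codes)


# Usage: the latent space is 4x wider than the hidden state, but at most k=4 latents fire.
rng = random.Random(1)
sae = TopKSAE(d=8, n_latents=32, k=4)
h = [rng.gauss(0, 1) for _ in range(8)]  # stand-in for one token's hidden state
codes = sae.encode(h)
active = [i for i, c in enumerate(codes) if c > 0]
reconstruction = sae.decode(codes)
print("active latents:", active)
```

The wide-but-sparse code is the property interpretability work relies on: each input is described by a few latents, so each latent has a chance of corresponding to one recognizable concept.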

In Meta's latest work in this direction, called CoCoMix (Continuous Concept Mixing), researchers have turned SAEs from interpretability tools into an integral part of the training process itself. This changes what the model learns to predict during pre-training, and with it how the model reasons.

Next Concept Prediction: A New Training Paradigm

The CoCoMix approach introduces a training objective called "next concept prediction" that works alongside traditional next token prediction. Here's how it works:

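- A sparse autoencoder trained on the hidden states of a pretrained reference model extracts the concepts active at each token position, and the most important ones are selected as concept targets

- The model being trained learns next concept prediction: from its own hidden state, it predicts those concept targets, much as next token prediction predicts the next word

- The predicted concepts are compressed into a continuous concept vector that is interleaved with the token hidden states, so the model processes concepts and tokens together

- Because later layers read these concept vectors, predicted concepts directly influence the next token prediction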
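The steps above can be sketched for one toy training step. This is a simplified illustration under several assumptions, not CoCoMix's actual code: the concept targets are given as hypothetical SAE latent ids, the concept loss is a plain cross-entropy averaged over those ids, the predicted concepts are compressed with a single linear map (`W_compress`), and the next token loss is a placeholder number. The weighting factor `lam` is likewise a made-up value.

```python
import math
import random


def matvec(W, x):
    """Multiply a matrix W (list of rows) by a vector x."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def top_k_indices(v, k):
    """Indices of the k largest entries of v."""
    return sorted(range(len(v)), key=lambda i: v[i], reverse=True)[:k]


def log_softmax(logits):
    """Numerically stable log-softmax."""
    m = max(logits)
    log_z = m + math.log(sum(math.exp(x - m) for x in logits))
    return [x - log_z for x in logits]


def concept_loss(concept_logits, target_ids):
    """Cross-entropy of the predicted concept distribution against the target concepts."""
    logp = log_softmax(concept_logits)
    return -sum(logp[i] for i in target_ids) / len(target_ids)


def continuous_concept(concept_logits, W_compress, k):
    """Keep the top-k predicted concepts and compress them into one hidden-size vector."""
    keep = set(top_k_indices(concept_logits, k))
    sparse = [x if i in keep else 0.0 for i, x in enumerate(concept_logits)]
    return matvec(W_compress, sparse)


def interleave(token_states, concept_vectors):
    """Build the mixed sequence [h_1, c_1, h_2, c_2, ...] fed to later layers."""
    mixed = []
    for h, c in zip(token_states, concept_vectors):
        mixed.extend([h, c])
    return mixed


rng = random.Random(0)
d, n_concepts, k, seq_len = 4, 16, 2, 3
W_head = [[rng.gauss(0, 0.5) for _ in range(d)] for _ in range(n_concepts)]      # hypothetical concept head
W_compress = [[rng.gauss(0, 0.5) for _ in range(n_concepts)] for _ in range(d)]  # hypothetical compressor
token_states = [[rng.gauss(0, 1) for _ in range(d)] for _ in range(seq_len)]
sae_targets = [[1, 5], [3, 7], [0, 2]]  # hypothetical concept ids from a reference model's SAE

total_concept_loss = 0.0
concept_vectors = []
for h, targets in zip(token_states, sae_targets):
    logits = matvec(W_head, h)  # next concept prediction from the token's hidden state
    total_concept_loss += concept_loss(logits, targets)
    concept_vectors.append(continuous_concept(logits, W_compress, k))

mixed = interleave(token_states, concept_vectors)
ntp_loss = 2.0  # placeholder for the usual next token prediction loss
lam = 0.1       # hypothetical weight on the concept loss
total_loss = ntp_loss + lam * total_concept_loss / seq_len
print(len(mixed))  # 6
```

The key design point is that the concept vector is not a separate output on the side: it is inserted into the sequence the model reads, so whatever the concept head predicts shapes the computation for the following tokens.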

The result is output that is conceptually grounded and may enable more controllable language generation. To use a simple analogy, traditional word generation is like building a house with only bricks, while CoCoMix adds steel beams that guide and support those bricks, giving the structure better integrity and consistency.

Impressive Efficiency Gains

The reported efficiency gains are notable. According to Meta's research, CoCoMix reaches performance comparable to standard next token prediction while using 21.5% fewer training tokens. Even after accounting for the extra compute the SAE step requires, the models performed better on benchmarks across the model sizes tested (up to 1.38 billion parameters).
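As a quick worked example of what a 21.5% token saving means, take a hypothetical baseline budget of 200 billion training tokens for plain next token prediction (this figure is illustrative, not from the paper). Because the SAE and concept prediction add some compute per token, the saving in total compute would be somewhat smaller than the saving in tokens.

```latex
T_{\text{CoCoMix}} \approx (1 - 0.215)\, T_{\text{NTP}} = 0.785\, T_{\text{NTP}}
\qquad\Rightarrow\qquad
0.785 \times 200\,\text{B} = 157\,\text{B tokens}, \quad \text{a saving of } 43\,\text{B tokens}.
```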

While the performance improvements are modest in standard benchmarks, the real potential lies in more complex tasks requiring multilingual or multimodal reasoning, where concept-guided predictions could provide significant advantages.

Continuous Concept Guidance: A Game-Changer for AI

Perhaps the most promising aspect of Large Concept Models is their ability to provide continuous concept guidance during text generation. This could eventually replace text instructions, such as system prompts, placed in the context window.

With concept-guided generation, models may be less likely to forget instructions given thousands of words earlier, because the underlying concepts stay active throughout the generation process. Meta researchers demonstrated that steering the concepts of different models readily changes their generated text.
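One simple way to picture concept steering is to boost a single entry of the predicted concept activations before they are compressed into a continuous concept vector. The sketch below is a hypothetical illustration: the `steer` helper, the linear `W_compress` map, the chosen concept id, and the activation values are all assumptions, not Meta's code. Because the boost is re-applied at every generation step, it does not fade as the context grows, which is the intuition behind the instruction-following argument.

```python
import random


def matvec(W, x):
    """Multiply a matrix W (list of rows) by a vector x."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def steer(concepts, concept_id, boost):
    """Return a copy of the concept activations with one concept amplified."""
    steered = list(concepts)
    steered[concept_id] += boost
    return steered


rng = random.Random(0)
d, n_concepts = 4, 8
W_compress = [[rng.gauss(0, 1) for _ in range(n_concepts)] for _ in range(d)]  # hypothetical

# Sparse concept activations predicted at three generation steps (made-up values).
steps = [
    {2: 1.3, 5: 0.7},
    {1: 0.9, 2: 0.4},
    {6: 1.1},
]

target_concept, boost = 5, 3.0
shifts = []
for active in steps:
    concepts = [active.get(i, 0.0) for i in range(n_concepts)]
    base = matvec(W_compress, concepts)
    steered = matvec(W_compress, steer(concepts, target_concept, boost))
    # Compression is linear, so the shift equals boost * column `target_concept`
    # of W_compress at every step, regardless of what else the model predicted.
    shifts.append([s - b for s, b in zip(steered, base)])
```

In this toy setting the steering signal is identical at each step, whereas a text instruction's influence depends on how well the model attends back to it.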

Weak-to-Strong Supervision: A Practical Implementation Path

One of the most fascinating aspects of Large Concept Models is the introduction of "weak-to-strong supervision" as a training methodology. Since extracting concepts from very large language models using SAEs is computationally expensive, Meta researchers discovered that concepts from smaller models can effectively guide the training of larger ones.

This appears to work because models trained on similar data tend to learn similar concepts, so even the less sophisticated concepts of a small model map closely enough onto a larger model to guide it effectively. Since the costly SAE step only has to run on the small model, this makes Large Concept Models much more practical for real-world applications.
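The dimensional bookkeeping of weak-to-strong supervision can be sketched under assumed toy sizes: a frozen SAE sits on a small model's hidden states and produces concept targets, while the larger model only needs a concept head that maps its own, wider hidden state onto the same concept vocabulary. The names (`W_sae_enc`, `W_head_large`) and sizes are hypothetical, and the hidden states and weights are random placeholders rather than real model outputs.

```python
import math
import random


def matvec(W, x):
    """Multiply a matrix W (list of rows) by a vector x."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def top_k_indices(v, k):
    """Indices of the k largest entries of v."""
    return sorted(range(len(v)), key=lambda i: v[i], reverse=True)[:k]


def log_softmax(logits):
    """Numerically stable log-softmax."""
    m = max(logits)
    log_z = m + math.log(sum(math.exp(x - m) for x in logits))
    return [x - log_z for x in logits]


def rand_matrix(rows, cols, rng, scale=0.3):
    """Random Gaussian matrix as a placeholder for learned weights."""
    return [[rng.gauss(0, scale) for _ in range(cols)] for _ in range(rows)]


rng = random.Random(0)
d_small, d_large, n_concepts, k = 4, 12, 16, 3

# "Weak" side: SAE encoder trained on the small model, frozen during large-model training.
W_sae_enc = rand_matrix(n_concepts, d_small, rng)
# "Strong" side: trainable concept head on the large model; only n_concepts must match.
W_head_large = rand_matrix(n_concepts, d_large, rng)

h_small = [rng.gauss(0, 1) for _ in range(d_small)]  # small model's hidden state for a token
h_large = [rng.gauss(0, 1) for _ in range(d_large)]  # large model's hidden state, same token

targets = top_k_indices(matvec(W_sae_enc, h_small), k)  # concept labels from the weak model
logits = matvec(W_head_large, h_large)                  # strong model's concept prediction
logp = log_softmax(logits)
loss = -sum(logp[i] for i in targets) / k
print("targets:", targets, "loss:", round(loss, 3))
```

The only thing the two models share is the concept vocabulary, which is why the SAE never has to be trained on the larger, more expensive model.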

Future Implications for AI Development

The implications of Large Concept Models extend far beyond incremental improvements in language model performance. This approach could be a critical stepping stone for enhancing test-time computation that doesn't rely on words, potentially unlocking stronger multimodal reasoning capabilities - an area where current AI systems struggle significantly.

It's somewhat surprising that Meta, rather than Anthropic (which has been deeply involved in SAE research), pioneered this implementation. Anthropic has previously described challenges in applying SAEs to alignment problems, and building SAE concepts directly into training, as LCMs do, might offer a way around some of those difficulties.

Conclusion: A New Direction for AI Thinking

Meta's research on Large Concept Models represents a significant shift in how we approach AI development. By enabling models to think in abstract concepts rather than being limited to word-based reasoning, this work opens new possibilities for more efficient, controllable, and capable AI systems.

While this work is still in its early stages, its potential applications for multimodal reasoning, instruction following, and general AI capabilities make it an exciting development to watch. As the field continues to evolve, concept-based thinking could become a foundational element of next-generation AI systems.
