The world of AI has long been dominated by models requiring powerful hardware, putting advanced capabilities out of reach for many users. Microsoft's BitNet is changing this paradigm as the first one-bit large language model that performs nearly on par with full precision models while running on standard consumer hardware.

What Makes BitNet Revolutionary?

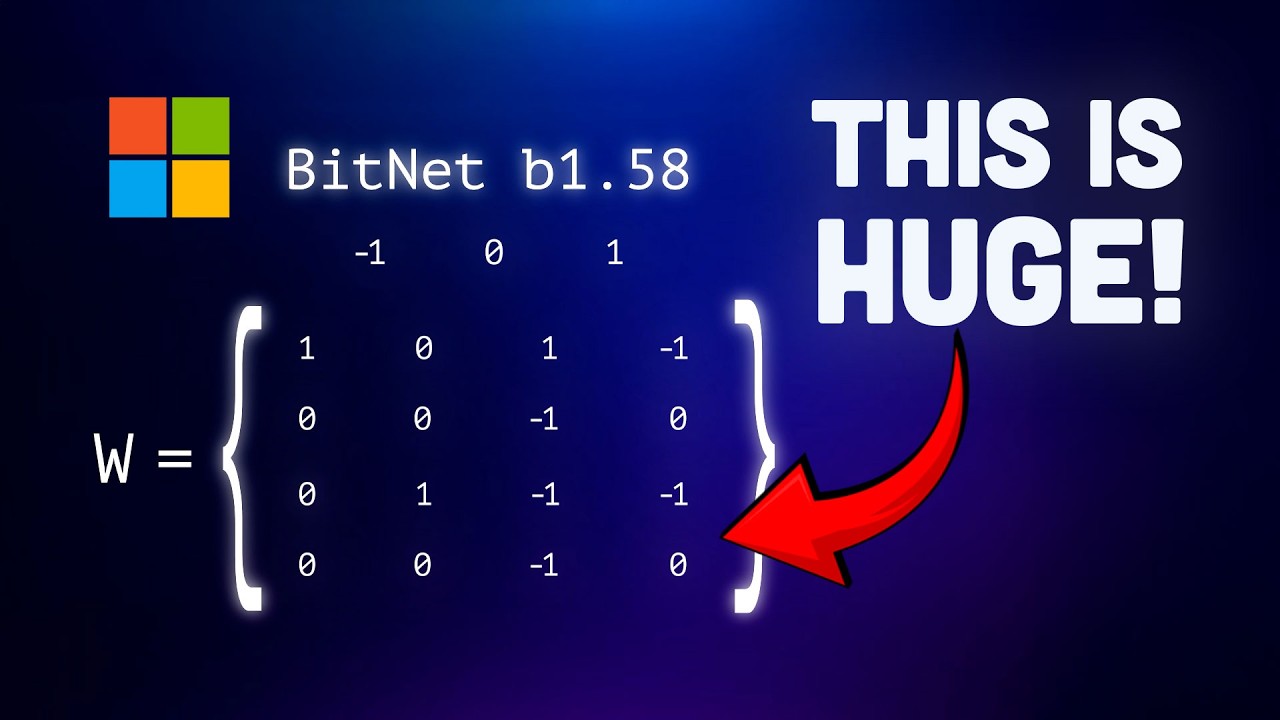

BitNet represents a breakthrough in AI model architecture by storing each weight using just 1.58 bits on average instead of the traditional 16 or 32 bits used in conventional models. This radical compression is achieved through ternary quantization, where weights are limited to just three possible values: -1, 0, and +1.

While technically using 1.58 bits per weight (the minimum required to represent three states), the "one-bit model" branding highlights its revolutionary approach to model efficiency. This isn't merely a compression trick—it's a fundamental rethinking of how neural networks can operate with dramatically reduced precision.

The Impact of 1-Bit Architecture

The implications of BitNet's design are far-reaching for the AI ecosystem, offering several significant advantages:

- Dramatically smaller model size: Up to 10x reduction compared to FP16 models

- Reduced memory bandwidth requirements: Less data movement between CPU, GPU, and RAM

- Faster inference: Microsoft reports up to 6x speedups on CPUs

- Energy efficiency: 55-82% lower power consumption

- Edge device compatibility: Makes running LLMs on laptops, desktops, and potentially phones realistic

The most impressive aspect is that these efficiency gains come with minimal accuracy loss. When tested against popular benchmarks, BitNet performs remarkably well compared to much larger FP16 models.

Native 1-Bit Training: The Key Innovation

BitNet's success isn't achieved by simply compressing an existing model. The key innovation is that BitNet is trained natively in one-bit space from the beginning. Rather than training a full-precision model and then quantizing it (which typically results in significant performance degradation), BitNet learns to work within the constraints of ternary weights from day one.

This approach allows the model to adapt to the limitations of one-bit precision during the training process, resulting in much better performance than post-training quantization methods could achieve.

Hands-On with BitNet: Real-World Performance

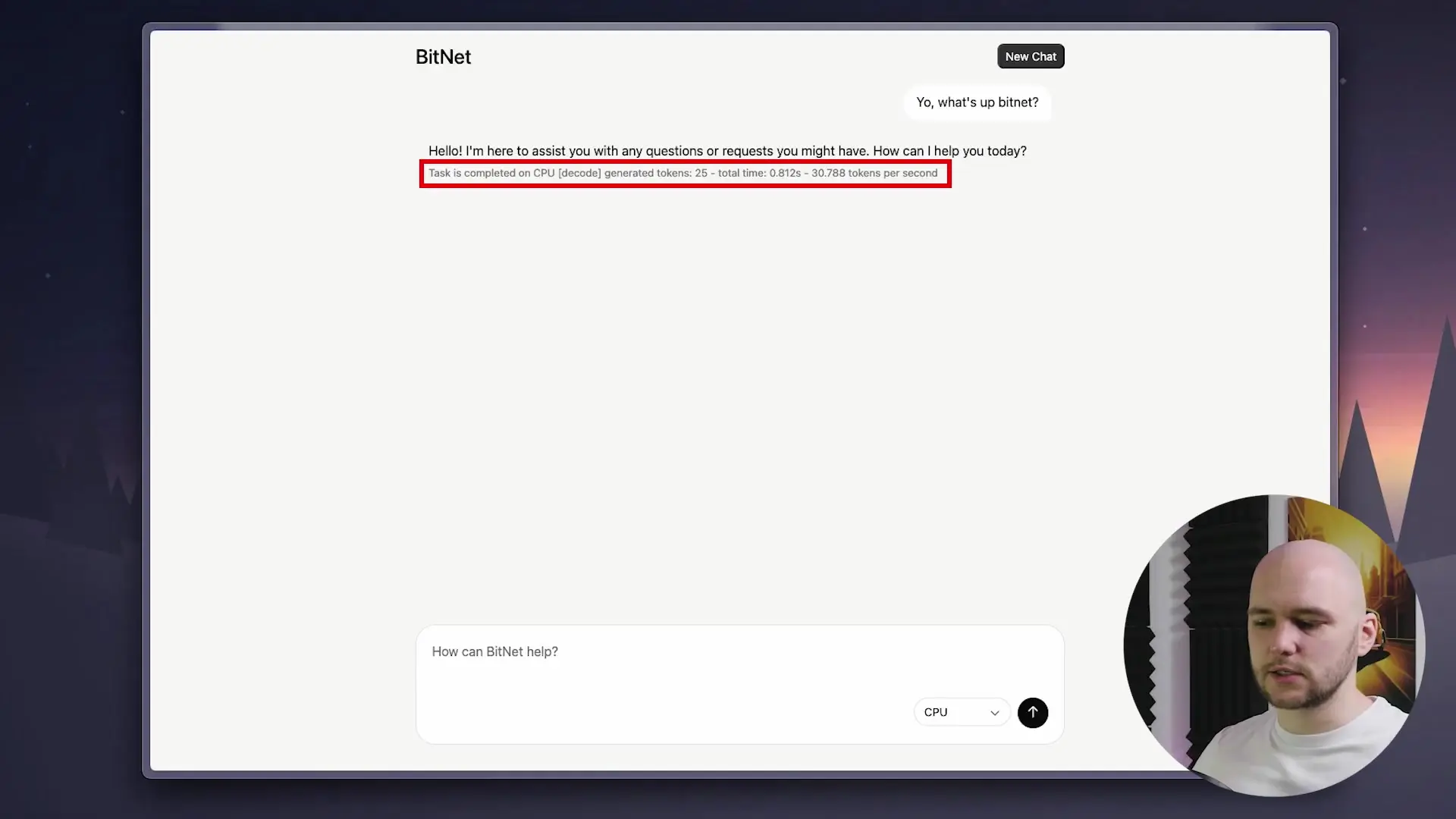

Running BitNet is surprisingly straightforward using Microsoft's transformers library implementation. The model runs efficiently even on CPU-only setups like a MacBook Pro, with impressive response times considering the hardware limitations.

For those wanting to try BitNet without installation, Microsoft provides a demo interface that displays generated tokens and response times, making it easy to experiment with the model's capabilities.

BitNet's Performance on Logic Tests

To evaluate BitNet's capabilities, it was tested on a series of logic puzzles commonly used to benchmark language models:

- Colored boxes question: Answered correctly

- Card deck riddle ("What has 13 hearts but no other organs?"): Answered correctly

- Complex math problem involving students and buses: Answered correctly

- Four-legged llama question: Failed (a common failure point for many LLMs)

Overall, BitNet's performance on logic tests places it within the average range of most language models, which is impressive considering its minimal resource requirements.

Limitations: Code Generation Challenges

While BitNet excels at many tasks, it struggles significantly with code generation. When prompted to create a simulation of a bouncing ball in a rotating hexagon (a common benchmark for code generation capabilities), BitNet required multiple attempts to produce code that would even compile.

The final result lacked both the required visual elements and physics, highlighting that one-bit models still have significant limitations for complex programming tasks. This suggests that specialized coding models will remain necessary for development work.

Practical Applications for BitNet

Despite its limitations, BitNet shows tremendous promise for specific use cases where efficiency is paramount:

- Edge computing: Running AI capabilities on resource-constrained devices

- Mobile applications: Enabling on-device AI without cloud dependencies

- IoT implementations: Adding language capabilities to smart devices

- Accessibility: Making AI available to users without high-end hardware

- Low-power environments: Situations where energy efficiency is critical

The Future of 1-Bit Neural Networks

BitNet represents an important step toward more efficient AI models. While it may not yet compete with the capabilities of larger models like GPT-4 or Claude, the architecture demonstrates that significant compression is possible without catastrophic performance degradation.

Microsoft's investment in this technology suggests we'll likely see further advancements in bit-efficient models, potentially leading to a new generation of AI systems that balance performance with accessibility.

Conclusion: Democratizing AI Through Efficiency

BitNet may not be ready to replace the most powerful AI models, but it represents an important direction for the field—making AI more accessible by focusing on efficiency rather than just raw performance. As the 1-bit neural network approach matures, we could see increasingly capable models that run effectively on consumer hardware.

For users interested in exploring AI without investing in expensive hardware, BitNet offers a glimpse of a future where advanced language models can run locally on everyday devices. This approach to AI development could ultimately help democratize access to these powerful technologies.

# Simple example of loading and running BitNet with transformers

from transformers import AutoModelForCausalLM, AutoTokenizer

# Load the BitNet model

tokenizer = AutoTokenizer.from_pretrained("microsoft/BitNet-b1.58-1b")

model = AutoModelForCausalLM.from_pretrained("microsoft/BitNet-b1.58-1b")

# Generate text with BitNet

inputs = tokenizer("What is the capital of France?", return_tensors="pt")

outputs = model.generate(**inputs, max_length=100)

response = tokenizer.decode(outputs[0], skip_special_tokens=True)

print(response)Let's Watch!

Microsoft's 1-Bit BitNet: Run Powerful AI Models on Your Everyday Computer

Ready to enhance your neural network?

Access our quantum knowledge cores and upgrade your programming abilities.

Initialize Training Sequence