Microsoft's general artificial intelligence team has unveiled a groundbreaking AI model called BitNet B1.58-2B-4T that represents a significant leap in AI efficiency. Unlike conventional models that require specialized hardware and substantial resources, BitNet operates on standard CPUs with minimal memory footprint and power consumption while maintaining competitive performance.

The Revolutionary Ternary Approach

What makes BitNet truly revolutionary is its ternary weight system. Unlike traditional AI models that use 32-bit, 16-bit, or even 8-bit weights, BitNet restricts every single weight in the network to just three possible values: -1, 0, or +1. This averages to just 1.58 bits of information per weight (as log base 2 of 3 equals approximately 1.58), hence the name BitNet B1.58.

While other low-bit models exist, most were initially trained at full precision and then compressed afterward through post-training quantization. This approach typically results in accuracy degradation. Microsoft took a fundamentally different approach by training BitNet in ternary format from scratch, allowing the model to learn natively in this highly constrained representation.

Technical Architecture and Training Process

BitNet is a 2 billion parameter transformer model trained on 4 trillion tokens. The training process occurred in three strategic phases:

- Pre-training with 4 trillion tokens at a high learning rate

- Fine-tuning with supervised examples to improve response quality

- Direct Preference Optimization (DPO) to refine output style and helpfulness

Throughout all training phases, the model maintained its ternary weights, ensuring no information was lost in translation between different precision formats. This native ternary training approach is key to BitNet's exceptional performance despite its extreme quantization.

Performance Benchmarks and Comparison

Despite its drastically reduced precision, BitNet delivers impressive results on standard AI benchmarks. Across 17 different tests, BitNet achieved a 54.19% macro score, just slightly behind the best float-based competitor in its weight class (Llama-derived QN 2.5 at 55.23%).

Where BitNet particularly excels is in logical reasoning tasks. It topped the chart on ARC Challenge with 49.91%, led on ARC Easy at 74.79%, and outperformed competitors on the challenging Winnow Grande benchmark with 71.9%. In mathematics, it achieved a 58.38% exact match score on GSM8K, outperforming other 2 billion parameter models while using significantly less power.

When compared to post-training quantized models like GPTQ and AWQ int4 versions of QN 2.5, BitNet maintains higher accuracy while requiring less than half the memory footprint.

Hardware Impact and Efficiency Gains

The hardware implications of BitNet are perhaps its most impressive feature. While a standard 2 billion parameter model in full precision typically requires 2-5GB of VRAM, BitNet operates with just 0.4GB. This dramatic reduction allows the model to fit comfortably in the L-cache layers of many CPUs.

In practical terms, BitNet can generate 5-7 tokens per second on an Apple M2 chip—approximately human reading speed—while drawing 85-96% less energy than comparable float models. This efficiency makes BitNet suitable for deployment on laptops, mobile devices, and other resource-constrained environments.

Technical Implementation Details

To make this extreme quantization work effectively, Microsoft implemented several technical innovations:

- An ABS mean quantizer that determines which ternary value (-1, 0, or +1) fits each weight

- 8-bit activations to maintain efficient message passing between layers

- Sub-layer normalization to maintain stability with low-precision weights

- Squared ReLU activation function replacing more complex alternatives

- Llama 3's tokenizer to leverage existing vocabulary optimization

For inference, Microsoft developed custom software that efficiently packs four ternary weights into a single byte, optimizing memory transfers and computational operations. This specialized implementation enables BitNet to run efficiently on standard hardware without dedicated accelerators.

Practical Applications and Availability

BitNet's efficiency unlocks numerous practical applications that were previously challenging with resource-intensive AI models:

- Offline chatbots that don't require cloud connectivity

- Smart keyboards with advanced AI capabilities

- Edge device copilots that operate without draining battery life

- Local AI assistants for privacy-sensitive applications

- Reduced operational costs for AI deployments at scale

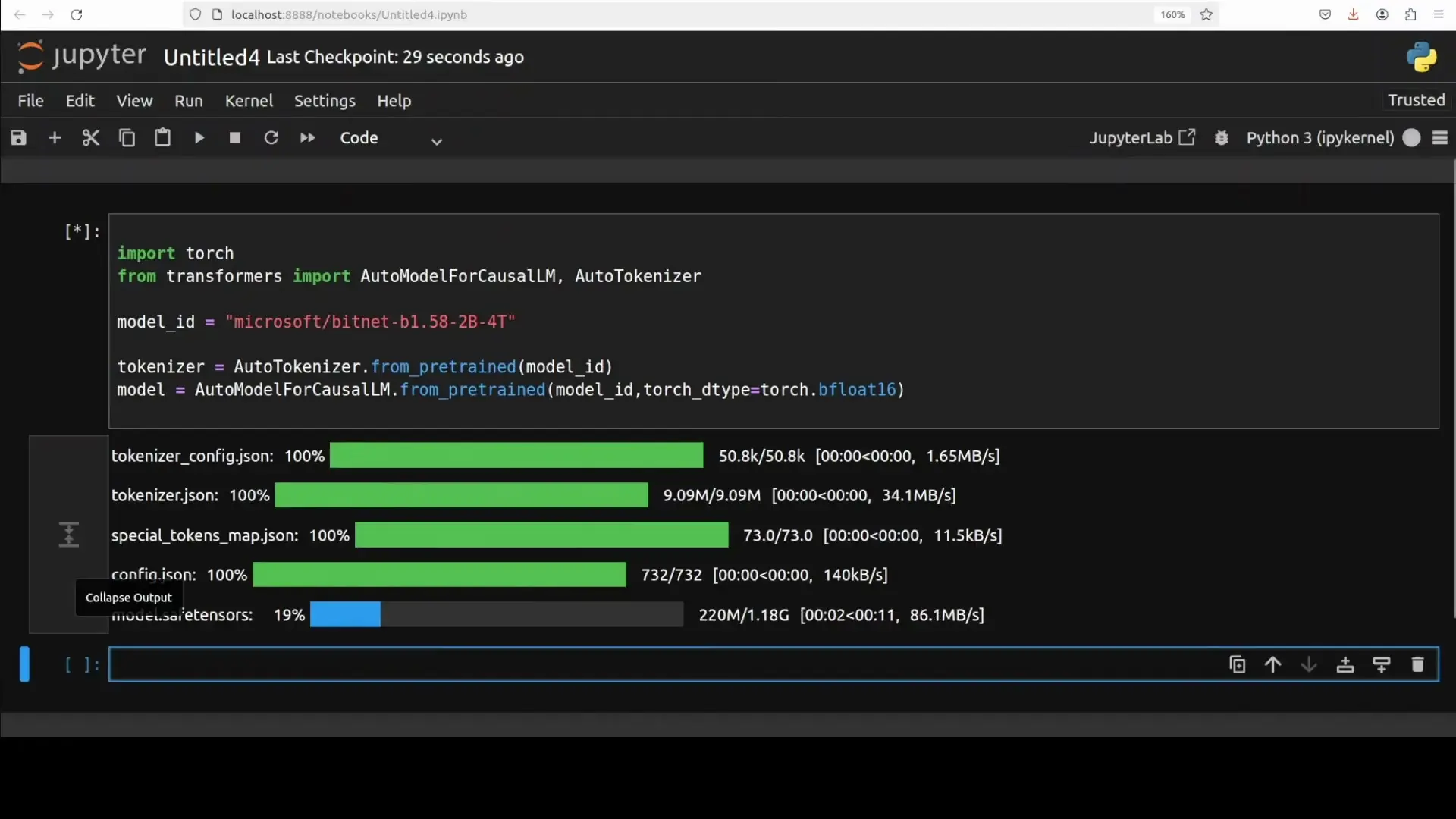

Microsoft has made BitNet publicly available on Hugging Face in three formats: inference-ready packed weights, BF-16 master weights for researchers interested in retraining, and a GGUF file for use with BitNet CPP. A web demo is also available for those who want to experiment with the model's capabilities.

Future Directions and Limitations

While BitNet represents a significant advancement in AI efficiency, Microsoft acknowledges several areas for future improvement:

- Extending the current 4K token context window for document-length tasks

- Expanding beyond English to support multilingual capabilities

- Exploring multimodal applications combining text with other data types

- Developing specialized hardware accelerators optimized for ternary operations

- Testing how ternary scaling laws hold at larger model sizes (7B, 13B, and beyond)

Researchers are also investigating the theoretical foundations of why such extreme quantization works effectively, which could lead to further innovations in efficient AI architectures.

Conclusion: The Significance of Microsoft's BitNet Innovation

BitNet B1.58 demonstrates that powerful AI doesn't necessarily require massive computational resources. By fundamentally rethinking how neural networks are trained and represented, Microsoft has created a model that achieves comparable results to much more resource-intensive alternatives while dramatically reducing memory, computation, and energy requirements.

This breakthrough has profound implications for democratizing AI access, enabling powerful capabilities on everyday devices, and reducing the environmental impact of AI deployments. As hardware catches up with specialized support for ternary operations and as the approach scales to larger models, we may witness a significant shift toward more efficient AI architectures across the industry.

For developers and researchers interested in efficient AI, BitNet represents an exciting new direction that challenges conventional wisdom about the resource requirements for effective large language models. With just 400MB of memory and standard CPU hardware, anyone can now experiment with a surprisingly capable AI assistant—a remarkable testament to the power of innovative approaches in the rapidly evolving field of artificial intelligence.

Let's Watch!

Microsoft's BitNet: The Revolutionary 1.58-Bit AI Model That Runs on CPU

Ready to enhance your neural network?

Access our quantum knowledge cores and upgrade your programming abilities.

Initialize Training Sequence