In a week dominated by Google I/O announcements and Anthropic's new models, Mistral AI quietly released something remarkable: Devstral, its state-of-the-art open-source coding model. Released under the Apache 2.0 license, this LLM is purpose-built for coding agents and represents a significant advance in AI-powered software engineering tools.

Benchmark-Breaking Performance

Devstral isn't just another coding model; it's a performance powerhouse. On the SWE-Bench Verified benchmark it scores 46.8%, more than 6 percentage points above the previous best open-source models, and it even surpasses some closed models, such as GPT-4.1 Mini, by over 20 percentage points.

What makes Devstral stand out is its ability to tackle real-world coding challenges that typically stump other large language models. While conventional LLMs struggle with navigating large codebases, resolving bugs across multiple files, and understanding project structures, Devstral was specifically engineered to excel at these complex tasks.

Built for Real Software Engineering

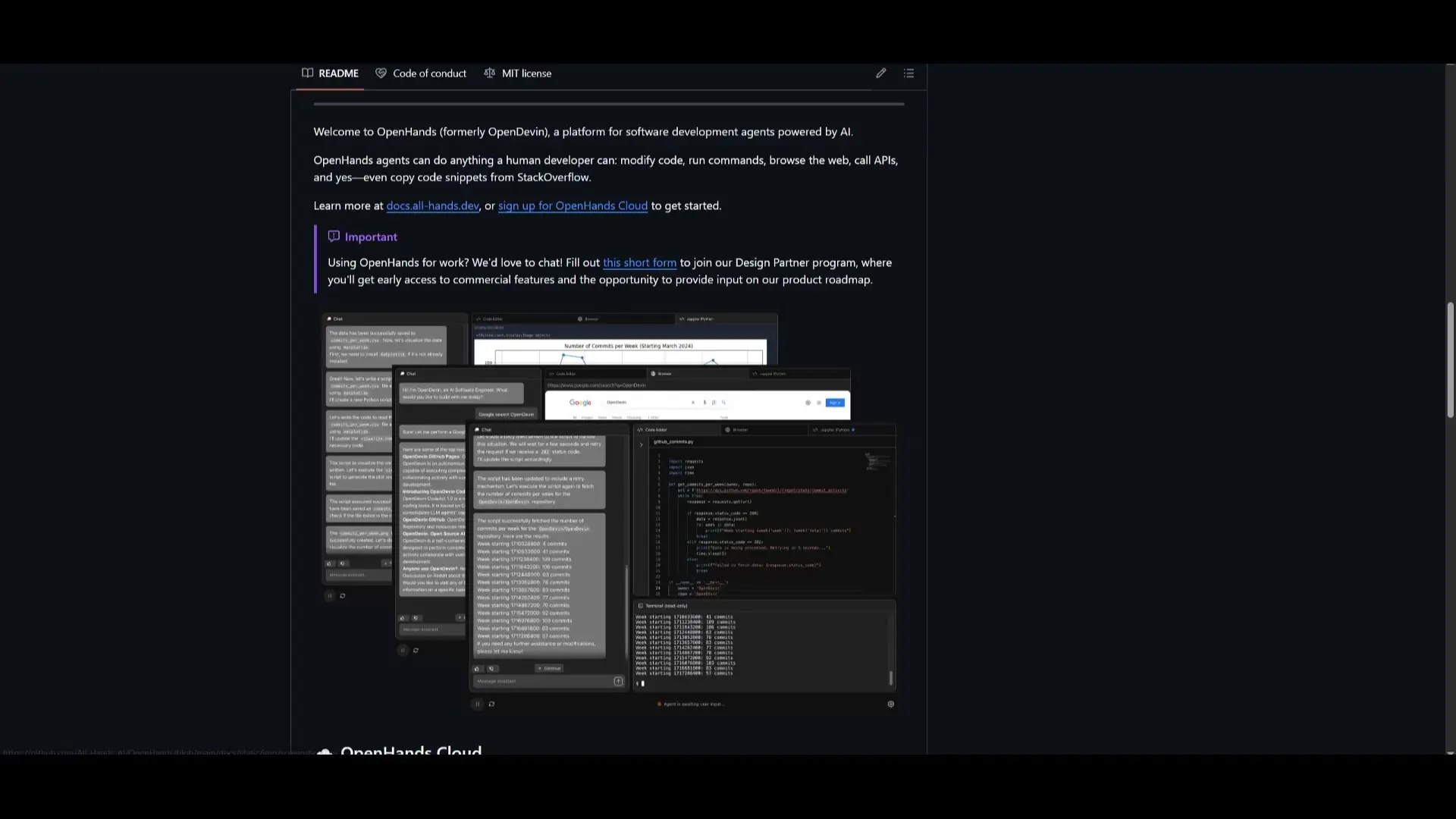

Developed in partnership with All Hands AI, the team behind OpenHands, Devstral goes beyond generating isolated functions. It works through real GitHub issues inside agent scaffolds such as OpenHands or SWE-agent, approaching each problem the way a developer would. This agentic approach makes it particularly effective for debugging, code review, and other complex software engineering tasks.

The model's ability to autonomously navigate and edit large codebases is especially valuable. When making changes, Devstral tries to ensure its modifications don't break other components, coordinating edits across multiple files when necessary. This approach helps avoid broken code and preserves project integrity.

Accessible and Flexible Deployment Options

Despite its powerful capabilities, Devstral remains remarkably accessible. The 24 billion parameter model can run locally on consumer hardware like an RTX 4090 GPU or even a Mac with 32GB RAM. Mistral AI provides detailed instructions for setting up Devstral with tools like LM Studio or Continue.
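A quick back-of-the-envelope check shows why a 24B model can fit on this hardware: weight memory is roughly the parameter count times the bytes stored per parameter. The sketch below assumes local builds quantize weights to about 4 bits (0.5 bytes) per parameter; that is an assumption about typical local packaging, not a figure from Mistral. It also ignores the KV cache and runtime overhead, so real memory use will be somewhat higher. The helper name `weight_gb` is hypothetical.

```bash
#!/usr/bin/env bash
# Rough weight-memory estimate: parameters x bytes per parameter.
# Assumption: ignores KV cache, activations and runtime overhead.

# weight_gb PARAMS_BILLIONS BYTES_PER_PARAM -> prints GB (1 GB = 1e9 bytes)
weight_gb() {
  awk -v p="$1" -v b="$2" 'BEGIN { printf "%.1f\n", p * b }'
}

# 24B parameters at 16-bit (2 bytes) vs. an assumed ~4-bit quantization (0.5 bytes)
echo "fp16:  $(weight_gb 24 2) GB"    # 48 GB: too large for a 24 GB RTX 4090
echo "4-bit: $(weight_gb 24 0.5) GB"  # 12 GB: fits in 24 GB VRAM or 32 GB unified memory
```

At 16-bit precision the weights alone would need about 48 GB, which is why running on a single consumer GPU or a 32GB Mac depends on a quantized build.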

- Run locally on consumer hardware (RTX 4090 or Mac with 32GB RAM)

- Access through Continue extension in VS Code

- Available via OpenRouter (including a smaller free version)

- Accessible through Mistral's API with competitive pricing

For those using Mistral's API, Devstral is available with competitive pricing: 10 cents per million input tokens and 30 cents per million output tokens. OpenRouter also provides access to a smaller version of Devstral for free, though with rate limitations.
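At those rates, a request costs input tokens × $0.10 per million plus output tokens × $0.30 per million. Here is a minimal sketch of that arithmetic, assuming the per-million prices quoted above. The function name `devstral_cost` is hypothetical, and the token counts in the example are made-up illustrations, not measurements.

```bash
# devstral_cost INPUT_TOKENS OUTPUT_TOKENS -> prints estimated USD cost
# Assumption: $0.10 per 1M input tokens and $0.30 per 1M output tokens.
devstral_cost() {
  awk -v in_tok="$1" -v out_tok="$2" \
    'BEGIN { printf "%.4f\n", in_tok / 1e6 * 0.10 + out_tok / 1e6 * 0.30 }'
}

# Example: a hypothetical agent session that reads 2M tokens and writes 200k tokens
devstral_cost 2000000 200000   # prints 0.2600
```

Agentic workflows tend to be input-heavy, because the agent repeatedly reads files, so the input price usually dominates the bill.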

Setting Up Devstral with Continue in VS Code

One of the easiest ways to integrate Devstral into your development workflow is through the Continue extension for VS Code. Here's how to get started:

- Install the Continue extension in VS Code

- Install Ollama on your system

- Run the command 'ollama run devstral' in your terminal

- Open Continue in VS Code and switch to Agent mode

- Select the Devstral model as your LLM
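Before selecting the model in Continue, it can help to confirm that Ollama has actually downloaded Devstral. The sketch below assumes the default `ollama list` output, where the first line is a header and the first column is the model name with its tag (for example `devstral:latest`). The helper name `has_model` is hypothetical.

```bash
# has_model NAME: succeeds if NAME (with any tag) appears in `ollama list` output on stdin.
# Assumption: the first line is a header and the first column is NAME[:TAG].
has_model() {
  awk 'NR > 1 { print $1 }' | grep -Eq "^${1}(:|$)"
}

# Typical use (requires Ollama to be installed and running):
#   ollama pull devstral
#   ollama list | has_model devstral && echo "devstral is ready for Continue"
```

Matching on `NAME:` or an exact name avoids false positives from other models whose names merely start with "devstral".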

Real-World Performance Analysis

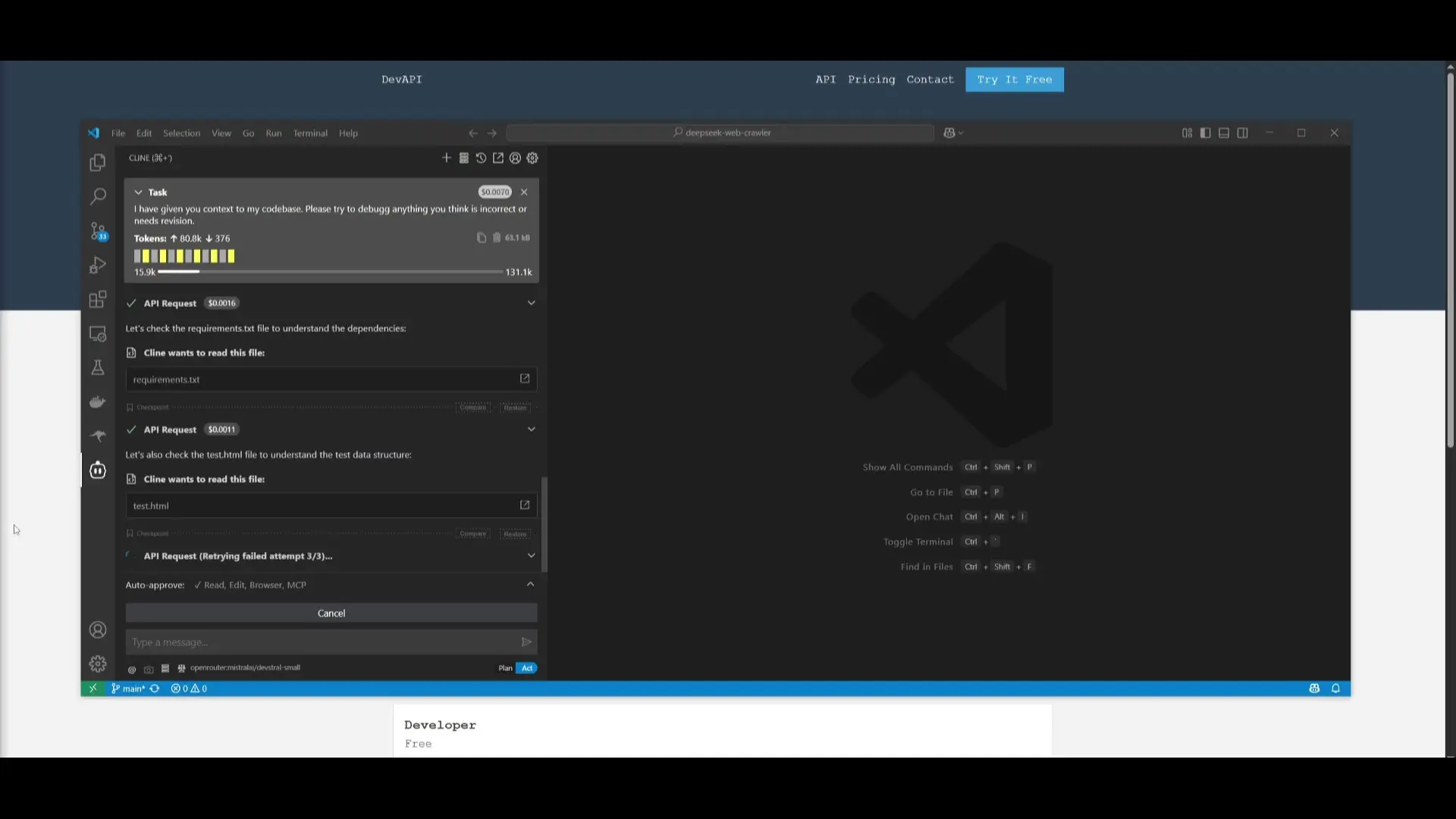

To evaluate Devstral's capabilities, I tested it on two common development tasks: front-end design generation and code debugging. While front-end generation produced decent results for a 24B parameter model not specifically optimized for UI/UX tasks, debugging is where Devstral truly shines.

When presented with a buggy repository, Devstral autonomously identified six out of eight intentional issues and systematically resolved them. The model demonstrated impressive contextual understanding, carefully analyzing dependencies between files before making changes to ensure no new issues were introduced.

Both access routes look like this:

```bash
# Running Devstral locally with Ollama (downloads the model on first run)
ollama run devstral

# Using Devstral through Mistral's API
# Model ID: devstral-small-2505 (the launch identifier; check Mistral's model list for the current name)
curl -X POST "https://api.mistral.ai/v1/chat/completions" \
  -H "Content-Type: application/json" \
  -H "Authorization: Bearer $MISTRAL_API_KEY" \
  -d '{
    "model": "devstral-small-2505",
    "messages": [
      {"role": "user", "content": "Debug this function: function add(a, b) { return a - b; }"}
    ]
  }'
```

Future Developments and Potential

Devstral represents just the beginning of Mistral AI's ambitions in the coding space. This initial release is a smaller model, with medium and large versions potentially on the horizon. If the performance of this 24B-parameter model is any indication, larger versions could rival or even surpass many closed-source alternatives, including models such as Anthropic's Claude 3.7 Sonnet.

For development teams looking to incorporate LLM capabilities into their workflows without relying on closed APIs or dealing with data privacy concerns, Devstral offers a compelling open-source alternative that doesn't compromise on performance.

Conclusion

Mistral AI's Devstral represents a significant milestone in open-source coding models. By outperforming previous open-source alternatives and even some closed models on SWE-Bench Verified, it demonstrates that high-quality, specialized AI tools for software engineering don't need to be locked behind proprietary APIs.

With its flexible deployment options, impressive performance on real-world coding tasks, and open Apache 2.0 license, Devstral is poised to become an essential tool in many developers' arsenals. Whether you're debugging complex issues, navigating large codebases, or looking for an AI assistant that truly understands software engineering workflows, Devstral offers capabilities that were previously only available in much larger or closed-source models.
