The AI landscape is experiencing a significant shift with three major players releasing powerful models that directly challenge OpenAI's market dominance. DeepSeek, Xiaomi, and Microsoft have each unveiled impressive AI systems that excel in mathematical reasoning and coding tasks, demonstrating that innovation in artificial intelligence is accelerating beyond a single company's control.

DeepSeek's Prover V2: A 671 Billion Parameter Math Verification Giant

Beijing-based DeepSeek has released what might be the most ambitious AI model of the trio: Prover V2, a massive 671 billion parameter model specifically designed for formal math proof verification. Released under the permissive MIT license, this model represents a significant advancement in AI's ability to handle complex mathematical reasoning.

What makes Prover V2 exceptional is its specialized ability to tackle Olympiad-level math problems, translate them into formal Lean code (a mathematical proof language), and generate machine-verifiable proofs. This isn't just about generating answers; it's about producing rigorous, step-by-step mathematical proofs that can be independently verified.

The model builds upon DeepSeek's earlier work with Prover V1 and V1.5, which were constructed on a 7 billion parameter DeepSeek math base and trained primarily on synthetic data generated by other models. The new V2 represents a massive leap forward, likely built on their R1 foundation that previously matched OpenAI's capabilities in head-to-head comparisons.

Technical Innovations in Prover V2

Despite its enormous size, DeepSeek has implemented several technical innovations to make Prover V2 more accessible and efficient:

- FP8 quantization that reduces the model size to around 650GB (half of what would be required with standard FP16 storage)

- Improved memory bandwidth efficiency and inference speed through precision optimization

- Potential for distillation techniques where smaller "student" models can learn from the larger Prover V2

The community is already experimenting with further optimizations, including 4-bit and even 3-bit quantization techniques to make this powerful model run on more modest hardware configurations.

Xiaomi's MIMO 7B: Small Size with Outsized Performance

While DeepSeek went big, Xiaomi took the opposite approach with their MIMO 7B model. Despite its relatively compact 7 billion parameter size, this model outperforms many larger competitors in coding and math tasks through smarter training techniques rather than raw parameter count.

The secret to MIMO 7B's success lies in its extensive pre-training on 25 trillion tokens through a sophisticated three-stage data mixing pipeline. This process gradually increased the proportion of math and coding tasks to 70% by the final training stage, creating a model with specialized capabilities in these domains.

Key Features of Xiaomi's MIMO 7B

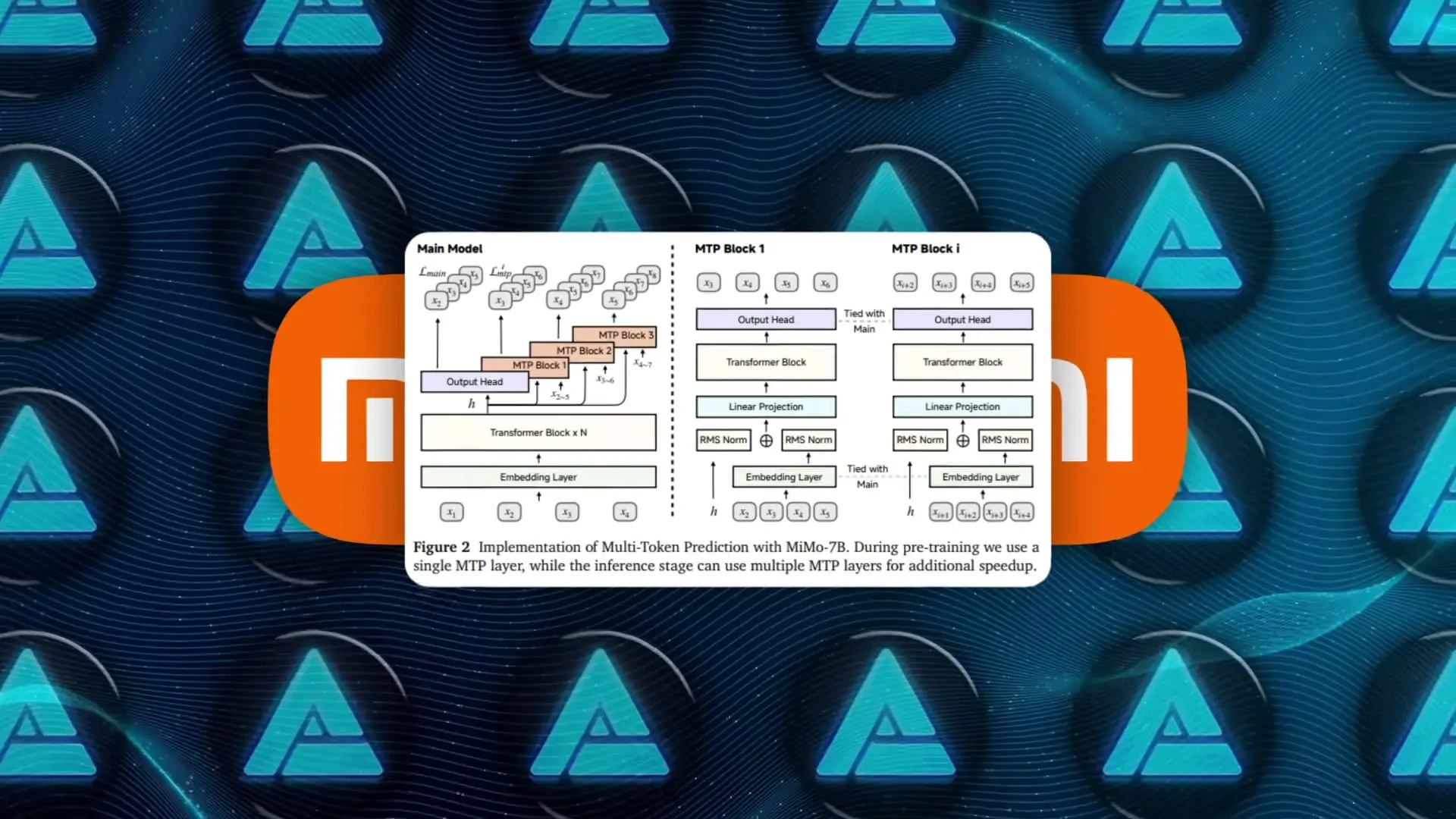

- Extended context length of 32,768 tokens, allowing it to process entire code bases or complex math proofs without losing context

- Multi-token prediction capability that enables it to predict several tokens at once, improving reasoning speed and inference performance

- Two reinforcement learning variants: MIMO 7B RL0 (built from raw base weights) and MIMO 7B RL (built on a supervised fine-tuned version)

- Training on 130,000 verifiable math and coding problems with a test difficulty-driven reward system

- Innovative easy data resampling technique to prevent overfitting on simpler problems

The performance numbers for MIMO 7B are impressive. On the AIME 2025 benchmark, it achieves a score of 55.4, outperforming OpenAI's O1 Mini by 4.7 points. On LiveCodebench V5, it reaches 57.8%, significantly surpassing Alibaba's QWQ preview (which runs on 32 billion parameters but only achieves 41.9%).

Most importantly, MIMO 7B can run on a single high-VRAM workstation, making it accessible for local development without relying on cloud services or external APIs. While Xiaomi acknowledges some limitations (occasional language switching to Chinese during English tasks), all three variants (base, RL0, and RL) are available on GitHub under a permissive license.

Microsoft's FI4 Reasoning Family: The Goldilocks Approach

Microsoft Research has taken a middle path with their FI4 reasoning family, built around a 14 billion parameter model. This places it between Xiaomi's compact approach and DeepSeek's massive architecture, offering what might be considered the "Goldilocks zone" for practical deployment.

The FI4 family comes in three variants: FI4 Reasoning, FI4 Reasoning Plus, and a smaller Mini version. All are descended from Microsoft's base V4 model but specially tuned for advanced reasoning in mathematics, science, and software development.

Microsoft's Training Approach

Rather than focusing on raw data volume like Xiaomi, Microsoft curated 1.4 million boundary prompts—edge cases designed to push the model's reasoning capabilities. Reference answers were sourced from OpenAI's O3 mini in high reasoning mode, with responses carefully structured using explicit tags to separate the chain of thought from final answers.

The FI4 Reasoning Plus variant received additional group relative policy optimization training on 6,400 challenging math puzzles, with a reward system that favors concise, properly formatted answers while penalizing repetition and verbosity.

Like Xiaomi's model, Microsoft's FI4 also features a 32k token context window, allowing it to handle complex, multi-step problems without losing track of previous reasoning steps.

Performance and Accessibility

Microsoft's variance testing shows FI4 Reasoning Plus matching or slightly outperforming OpenAI's O3 Mini on AIME 2025 benchmarks while maintaining more consistent results than distilled versions of DeepSeek R1. The model also demonstrates strong generalization capabilities, performing well on planning puzzles like TSP and Thresat despite not being explicitly trained on these tasks.

From a deployment perspective, the 14B parameter size hits an ideal balance—when quantized to 4-bit precision, it can run on a single high-end consumer GPU, making it accessible for classroom environments or independent developers. Microsoft has also published full training logs and evaluation traces on HuggingFace, encouraging community auditing and derivative experiments.

The Implications for AI Development and Competition

These three models represent different approaches to the same challenge: creating more powerful, accessible AI systems for mathematical reasoning and coding tasks. They demonstrate that the AI landscape is no longer dominated by a single player but is becoming increasingly competitive and diverse.

- Size isn't everything: Xiaomi's MIMO 7B proves that clever training on targeted data can outperform much larger models in specific domains.

- Specialized models are gaining ground: Rather than general-purpose AI, these models excel in specific domains like mathematical reasoning and code generation.

- Open access is accelerating innovation: All three models are available under permissive licenses, allowing researchers and developers to build upon them.

- Hardware requirements are becoming more reasonable: Through quantization and optimization techniques, even powerful models can run on consumer-grade hardware.

Potential Applications and Future Directions

These models open up exciting possibilities across multiple domains:

- Education: Providing advanced tutoring in mathematics and programming with step-by-step reasoning

- Mathematical research: Assisting researchers by verifying proofs and exploring new mathematical concepts

- Software development: Enhancing coding workflows with sophisticated code generation and debugging capabilities

- Formal methods in cryptography: Verifying security properties of cryptographic systems through mathematical proofs

- Automated grading: Evaluating complex mathematical assignments with detailed feedback

While these advancements raise legitimate concerns about security implications and responsible AI deployment, they also represent a significant democratization of AI capabilities. The open-source nature of these models allows for greater transparency, accessibility, and collaborative improvement compared to closed, proprietary systems.

Conclusion: A New Era of AI Competition

The simultaneous release of these three powerful AI models signals a significant shift in the AI landscape. DeepSeek's massive Prover V2, Xiaomi's efficient MIMO 7B, and Microsoft's balanced FI4 family demonstrate that innovation in AI is accelerating across multiple approaches and organizations.

For developers, researchers, and organizations looking to leverage AI capabilities, this increased competition means more options, potentially lower costs, and the ability to select models that precisely match specific use cases rather than relying on one-size-fits-all solutions.

As these models continue to evolve and new competitors enter the field, we can expect even more rapid advancement in AI capabilities for mathematical reasoning, coding, and other specialized domains. The era of AI diversity has arrived, and it promises to be an exciting time for innovation and practical applications.

Let's Watch!

3 New AI Models That Challenge OpenAI's Dominance in Math and Coding

Ready to enhance your neural network?

Access our quantum knowledge cores and upgrade your programming abilities.

Initialize Training Sequence