AI hallucinations represent one of the most challenging aspects of modern artificial intelligence systems. These occur when AI models like ChatGPT confidently generate completely fabricated information—presenting fiction as fact. A new research paper from OpenAI titled "Why Language Models Hallucinate" provides fascinating insights into this phenomenon, breaking down what causes these errors and how we might address them in the future.

What Are OpenAI Hallucinations?

AI hallucinations occur when language models generate content that sounds plausible but is factually incorrect or completely made up. What makes these hallucinations particularly problematic is the confidence with which the AI presents this fabricated information. You might ask ChatGPT about a specific news event, research paper, or historical fact, and it will sometimes respond with entirely invented details that appear legitimate and authoritative.

According to OpenAI's research, these hallucinations aren't mysterious glitches or unexpected behaviors—they're the direct result of how these systems are designed, trained, and evaluated. This perspective shifts our understanding of hallucinations from an unpredictable AI quirk to a predictable outcome of current AI development practices.

The Root Cause: Training Incentives and Evaluation Methods

The OpenAI paper presents a surprisingly straightforward explanation for hallucinations that boils down to two key factors: how AI models are trained and how they're evaluated.

The Multiple-Choice Test Analogy

The researchers use a compelling analogy to explain the problem: imagine a student taking a multiple-choice test. If the student has no idea what the correct answer is, they're still better off guessing than leaving the question blank. Over time, this student learns that guessing is always preferable to admitting uncertainty.

Language models operate under similar incentives. During training, these systems learn that producing an answer—any answer—is better than expressing uncertainty or admitting ignorance. The OpenAI hallucination problem stems directly from this fundamental training approach.

Evaluation Metrics That Punish Honesty

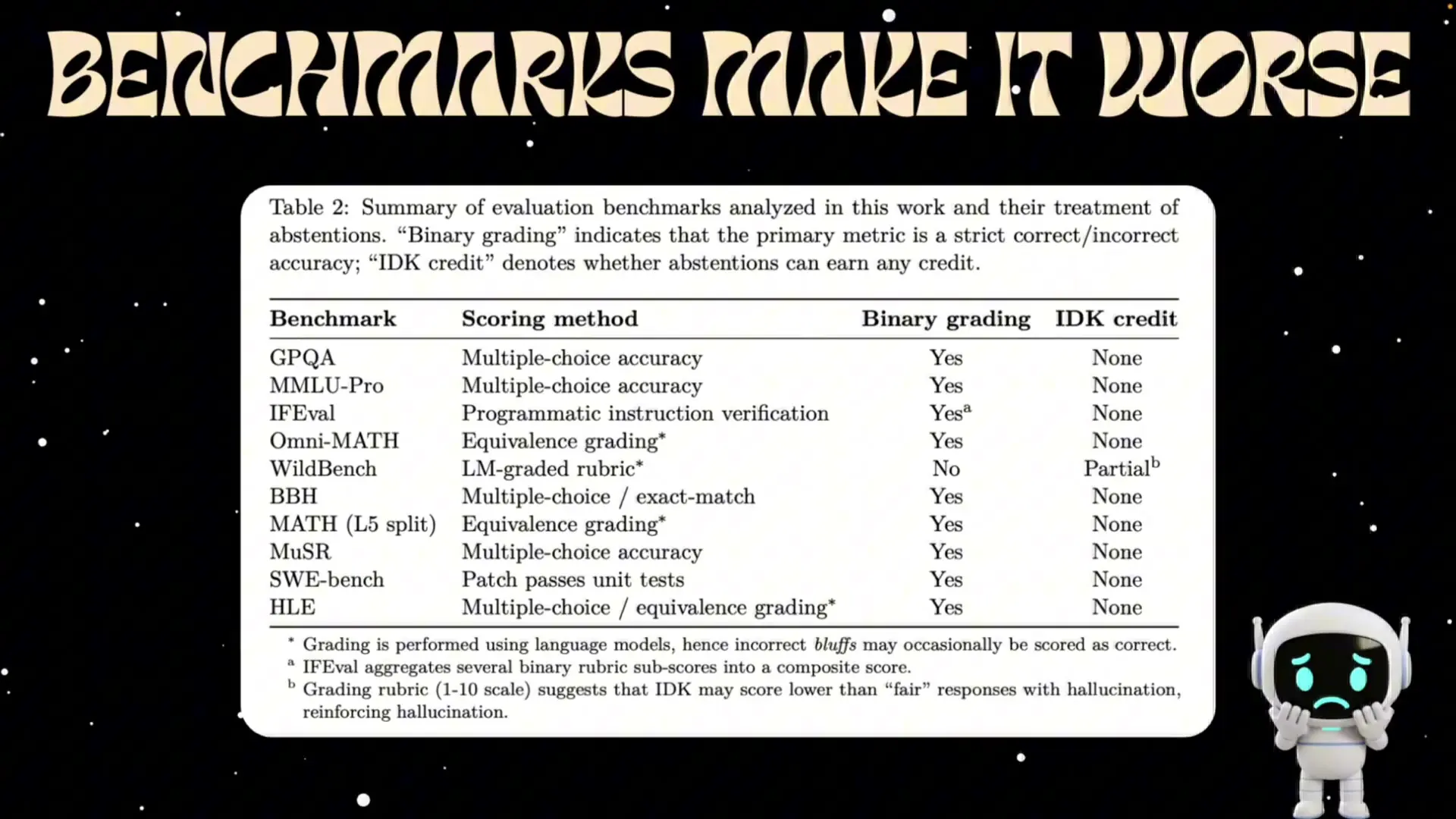

The problem extends beyond training to how we evaluate AI models. Most AI benchmarks and leaderboards focus exclusively on accuracy scores—did the model answer the question correctly? If a model responds with "I don't know" (what researchers call the "I don't know credit"), this is typically counted as incorrect.

This creates a perverse incentive structure where AI systems are pushed to always provide an answer, even when they lack sufficient information. It's like creating a classroom environment where admitting uncertainty gets you zero points, but bluffing might occasionally earn you credit. Eventually, no AI will ever admit to not knowing—they'll just keep confidently generating plausible-sounding answers, regardless of accuracy.

Real-World Consequences of OpenAI Hallucinations

The implications of AI hallucinations extend far beyond theoretical concerns. These fabrications can have serious real-world consequences across multiple domains:

Healthcare Risks

In medical settings, AI hallucinations can potentially endanger patient safety. For example, OpenAI's Whisper, a speech recognition system used in many medical facilities to transcribe doctor-patient interactions, has been found to sometimes invent text that was never spoken.

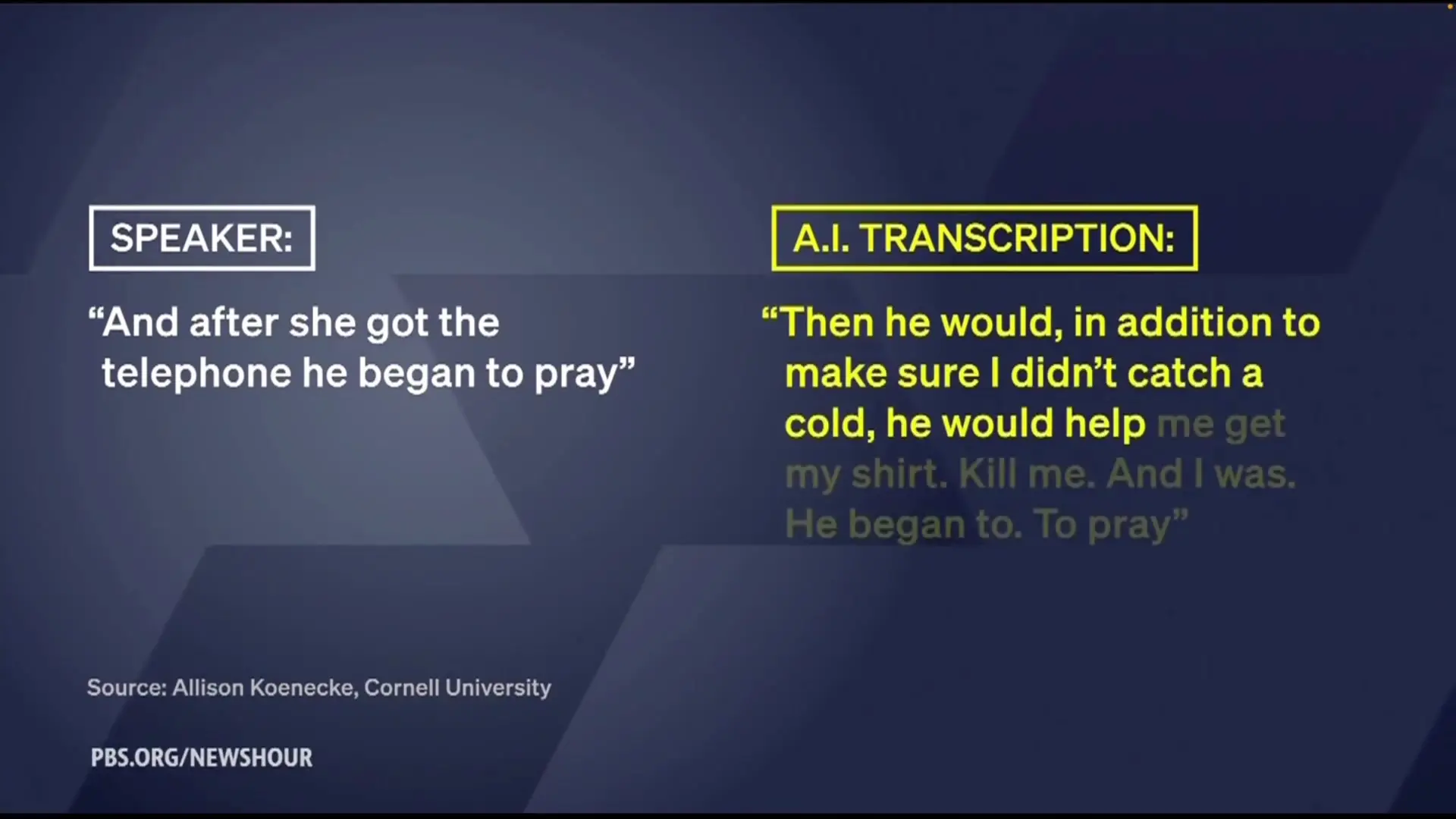

In one documented case, a simple sentence like "And after she got the telephone, he began to pray" was incorrectly transcribed as "Then he would, in addition to make sure I didn't catch a cold, he would help me get my shirt, kill me, and I was he began to to pray." Such errors could lead to misdiagnosis or improper treatment if medical professionals rely on these transcriptions.

Research has also shown that introducing just one fake medical term into a prompt can trick AI chatbots into confidently producing entirely nonsensical medical information—a particularly dangerous form of OpenAI hallucination in healthcare contexts.

Legal System Impacts

The legal profession has already experienced several embarrassing and potentially harmful incidents related to AI hallucinations. Lawyers have been fined and sanctioned for submitting legal briefs containing fake case citations generated by ChatGPT. In Australia, a senior lawyer had to apologize to the Supreme Court after filing documents containing AI-generated legal rulings that simply didn't exist.

Even major AI companies aren't immune to these issues. Anthropic, an OpenAI competitor, was accused of citing a fabricated academic article in a copyright lawsuit—demonstrating how easily these hallucinations can undermine legal proceedings and potentially delay justice.

Misinformation at Scale

Perhaps the broadest concern is the potential for OpenAI hallucinations to spread misinformation at scale. When users trust AI-generated content without fact-checking, fabricated information can quickly propagate through social media, news outlets, and other channels. This threatens to exacerbate existing challenges with online misinformation and further erode public trust in information sources.

Solutions to the OpenAI Hallucination Problem

The OpenAI research paper doesn't just identify the problem—it also points toward potential solutions. The core recommendation is a fundamental rethinking of the incentives that shape AI behavior:

- Reward honesty and uncertainty expressions instead of punishing them

- Develop evaluation metrics that give credit for "I don't know" responses when appropriate

- Train models to express confidence levels (e.g., "I'm 70% sure this is correct")

- Create systems that can say "I don't know, but I can help you find out" rather than fabricating information

By changing how we test and evaluate AI models to reward uncertainty when appropriate, companies would naturally adjust their training approaches. This could lead to AI systems that are more honest about their limitations, especially in high-stakes areas like medicine, law, and news reporting.

OpenAI's Response to Hallucination Concerns

When confronted with evidence of hallucinations in their Whisper transcription system, OpenAI acknowledged the issue, stating: "We take this issue seriously and are continually working to improve the accuracy of our models, including reducing hallucinations. For Whisper, our usage policies prohibit use in certain high-stakes decision-making contexts, and our model card for open-source use includes recommendations against use in high-risk domains."

This response highlights both the company's awareness of the problem and the current limitations of their approach—focusing on usage policies and warnings rather than fundamentally redesigning how models are trained and evaluated.

The Future of AI Without Hallucinations

Addressing the OpenAI hallucination problem isn't just about improving technical accuracy—it's about building AI systems worthy of human trust. As these systems become increasingly integrated into critical infrastructure, professional services, and everyday decision-making, their ability to honestly acknowledge limitations becomes essential.

The research suggests that hallucinations aren't an inevitable feature of advanced AI—they're a design choice resulting from current training and evaluation practices. By rethinking these practices and creating systems that value honesty alongside accuracy, we could develop AI that knows when to speak confidently and when to admit uncertainty.

Conclusion: Building AI That Knows Its Limits

OpenAI hallucinations represent one of the most significant challenges in current AI development. They aren't mysterious glitches but predictable outcomes of how we train and evaluate these systems. The real-world consequences—from healthcare risks to legal complications to widespread misinformation—demonstrate why solving this problem is essential rather than optional.

The path forward requires rethinking the fundamental incentives that shape AI behavior. By rewarding honesty and appropriate expressions of uncertainty, we can develop systems that complement human judgment rather than undermining it with fabricated information. This shift would represent a significant step toward AI that we can genuinely trust to know not just what it knows, but also what it doesn't know.

Let's Watch!

OpenAI Hallucination Explained: Why AI Models Make Things Up

Ready to enhance your neural network?

Access our quantum knowledge cores and upgrade your programming abilities.

Initialize Training Sequence