In a surprising move that lives up to its name, OpenAI has released two powerful open-source models under the Apache 2.0 license. The new GPT-OSS 20B and GPT-OSS 120B models are the company's first truly open language model releases since GPT-2, and they promise reasoning capabilities that rival those of larger proprietary models.

GPT-OSS 20B: AI That Runs on Your Laptop

The smaller GPT-OSS 20B model is particularly noteworthy for its accessibility. Despite having 20 billion parameters, it can run on edge devices with just 16GB of memory—making it perfect for local inference, on-device applications, or development without expensive cloud infrastructure.
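A rough back-of-the-envelope check shows why the 16GB figure is plausible. The sketch below estimates weight memory only, as parameter count times bits per parameter. The bit widths are illustrative assumptions, not published details of the release, and runtime overhead such as the KV cache and activations is ignored.

```python
def weight_memory_gb(n_params: float, bits_per_param: float) -> float:
    """Approximate memory for the weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return n_params * bits_per_param / 8 / 1e9


N_PARAMS_20B = 20e9  # parameter count quoted in the article

# Illustrative precisions (assumptions, not confirmed details of the release).
for label, bits in [("16-bit", 16), ("8-bit", 8), ("4-bit", 4)]:
    print(f"{label}: about {weight_memory_gb(N_PARAMS_20B, bits):.0f} GB of weights")
```

Under these assumptions, only the 4-bit case (about 10 GB) leaves headroom on a 16GB machine, which suggests that a low-precision weight format is what makes local inference practical.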

With a context length of 128,000 tokens, this model delivers performance comparable to o3-mini while running entirely on consumer hardware. Using tools like Ollama, users can download and run the model locally with minimal setup and see inference speeds of 30-40 tokens per second on standard laptops, while some hosted providers reach up to 3,000 tokens per second on more powerful hardware.
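To put those throughput figures in perspective, the following sketch converts tokens per second into wall-clock time for a single response. The 1,000-token response length is a hypothetical example, and the calculation ignores prompt processing and network latency.

```python
def generation_seconds(n_tokens: int, tokens_per_second: float) -> float:
    """Time to generate n_tokens at a steady decoding rate."""
    if tokens_per_second <= 0:
        raise ValueError("tokens_per_second must be positive")
    return n_tokens / tokens_per_second


RESPONSE_TOKENS = 1_000  # hypothetical response length

# Rates quoted in the article: 30-40 tokens/s on a laptop, up to 3,000 tokens/s hosted.
for label, rate in [("laptop (low)", 30), ("laptop (high)", 40), ("fast provider", 3_000)]:
    print(f"{label}: {generation_seconds(RESPONSE_TOKENS, rate):.1f} s")
```

At these rates a 1,000-token answer takes roughly 25 to 33 seconds on a laptop and about a third of a second on the fastest hosted option.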

GPT-OSS 120B: Near o4-mini Performance

The larger GPT-OSS 120B model approaches o4-mini-level performance on core reasoning benchmarks while requiring only a single 80GB GPU to run efficiently. For those without such hardware, cloud providers offer access at low rates: around 15 cents per million input tokens and 60 cents per million output tokens.
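Per-million-token pricing is easier to judge against a concrete workload. The sketch below applies the approximate rates quoted above. The request sizes and volume are hypothetical, and actual provider pricing may differ.

```python
INPUT_USD_PER_MILLION = 0.15   # approximate rate quoted in the article
OUTPUT_USD_PER_MILLION = 0.60  # approximate rate quoted in the article


def request_cost_usd(
    input_tokens: int,
    output_tokens: int,
    input_rate: float = INPUT_USD_PER_MILLION,
    output_rate: float = OUTPUT_USD_PER_MILLION,
) -> float:
    """Cost of one request, given per-million-token rates in USD."""
    return (input_tokens * input_rate + output_tokens * output_rate) / 1_000_000


# Hypothetical workload: 10,000 requests of 2,000 input and 500 output tokens each.
per_request = request_cost_usd(2_000, 500)
print(f"per request: ${per_request:.6f}")
print(f"10,000 requests: ${per_request * 10_000:.2f}")
```

For this hypothetical workload the total comes to about six dollars, split evenly between input and output tokens.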

This model represents a significant advancement in open-source AI, bringing near-commercial quality capabilities to the open ecosystem at a fraction of the cost of proprietary alternatives.

Real-World Performance Testing

When tested on practical coding challenges, the models showed mixed but promising results. The 20B model, running locally, was able to handle basic reasoning tasks and generate functional code, though with some limitations in complex scenarios.

A physics simulation test involving bouncing balls in a polygon container demonstrated the models' capabilities. While the 20B model produced functional but imperfect code with some physics glitches, the 120B version created a more stable implementation. Neither reached the UI sophistication of some commercial models, but they performed admirably given their open nature.

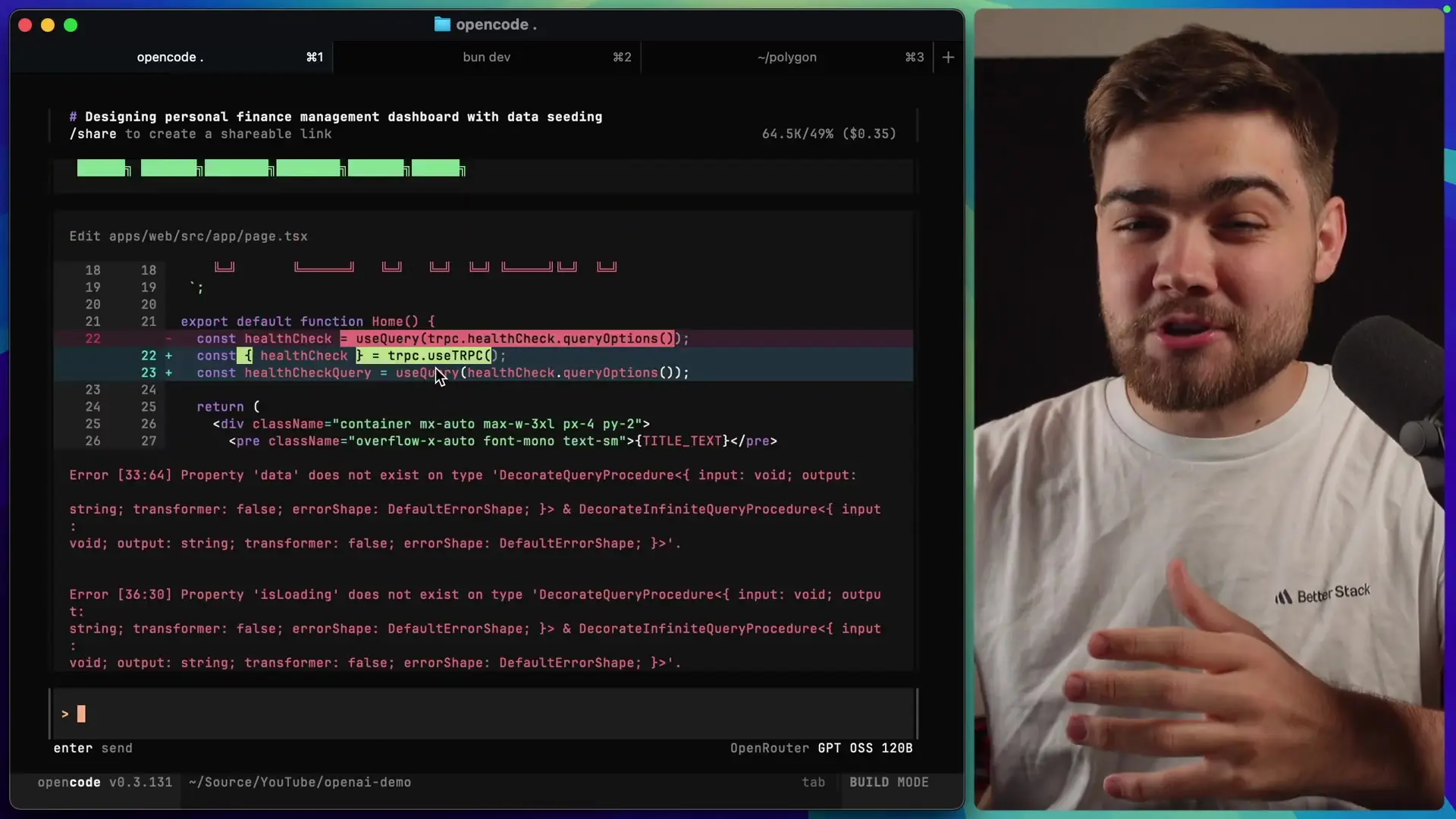

When tasked with developing a Next.js project with tRPC integration, the models struggled with some of the more complex framework interactions, an area where these open models still lag behind their commercial counterparts.

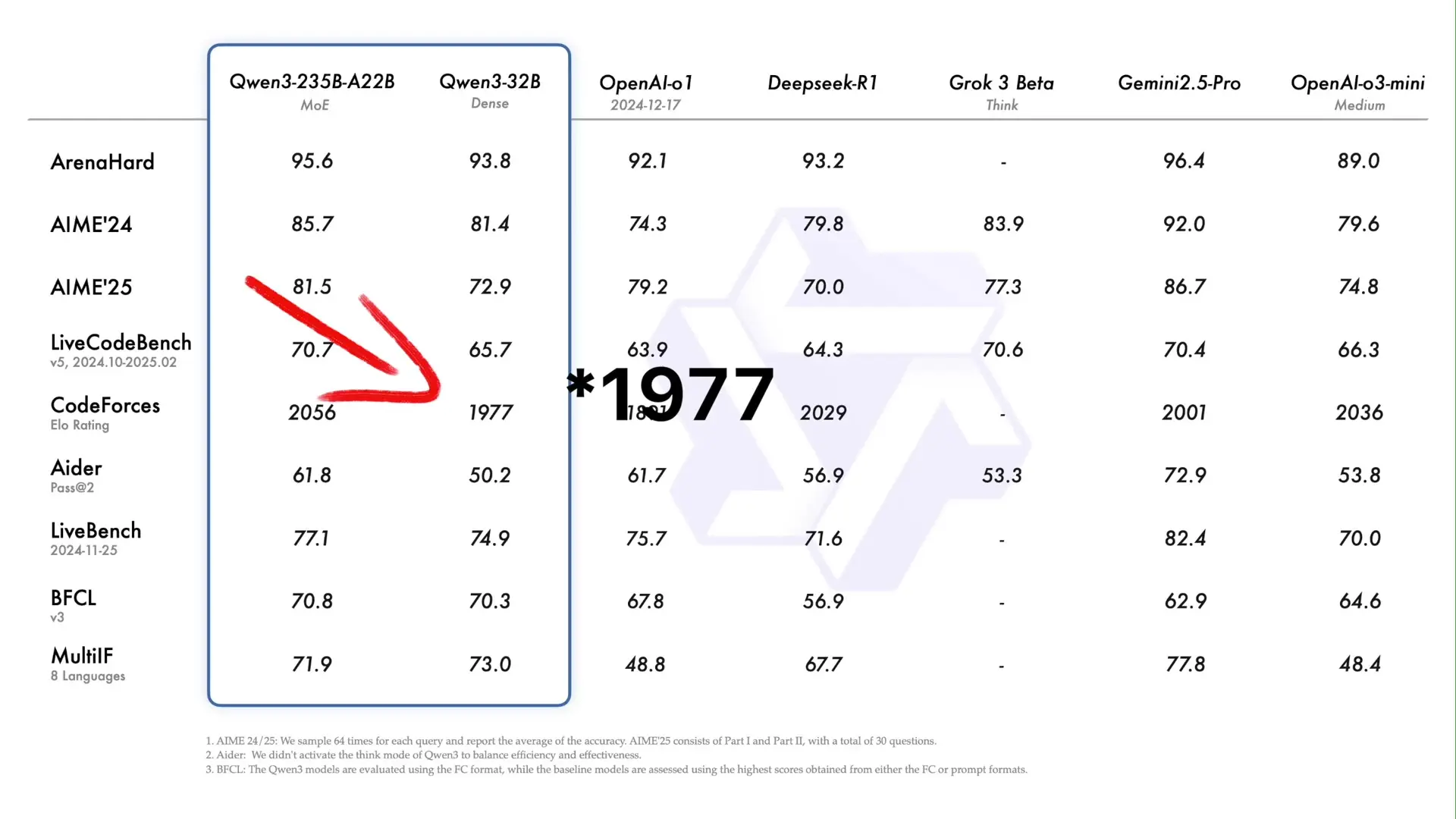

Benchmark Performance: Impressive Numbers

On official benchmarks, both models posted strong results. On the Codeforces benchmark, the 120B model reached a rating of 2,622, nearly matching o3 and surpassing o3-mini. Even more surprisingly, the 20B model achieved 2,516, also outperforming o3-mini.

For math reasoning on AIME 2024, both models scored exceptionally well (96.6% for the 120B model and 96% for the 20B model), outscoring both o3 and o3-mini. On the GPQA Diamond benchmark, the 120B model approached o4-mini levels while exceeding o3-mini performance.

What This Means for Open Source AI

The release of these models marks a significant milestone for the open-source AI community. With Apache 2.0 licensing, developers can freely use, modify, and distribute these models for commercial applications without the restrictions that typically accompany OpenAI's commercial offerings. In practice, the release:

- Democratizes access to high-quality AI models for developers with limited resources

- Enables on-device AI applications that preserve privacy and work offline

- Provides a foundation for customized models tailored to specific domains

- Reduces dependency on cloud-based API services and their associated costs

- Accelerates innovation by allowing direct model modification and improvement

Limitations and Considerations

Despite their impressive performance, these models do have limitations. UI generation quality falls short of some commercial alternatives, and complex framework integrations can be challenging. The models also occasionally produce incorrect or hallucinated information, as is common with all large language models.

Hardware requirements, while modest for the 20B model, are more substantial for the 120B version. An 80GB GPU is beyond typical consumer hardware, so in practice the larger model is limited to those with data-center-class GPUs or cloud resources.

Getting Started with GPT-OSS Models

For developers interested in experimenting with these models, several options are available:

- Download and run locally using Ollama (ideal for the 20B model on machines with 16GB+ RAM)

- Access through cloud providers like OpenRouter at competitive rates

- Deploy on your own infrastructure using the openly available weights

- Integrate with existing applications via API or direct implementation
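As a minimal sketch of the API route, the code below builds an OpenAI-style chat completions request using only the Python standard library. It assumes that both a local Ollama server and OpenRouter expose OpenAI-compatible endpoints at the URLs shown, and that the model identifiers `gpt-oss:20b` (Ollama) and `openai/gpt-oss-20b` (OpenRouter) are current. Check both against each provider's documentation before relying on them. The helper names are hypothetical.

```python
import json
import urllib.request

# Assumed OpenAI-compatible endpoints; verify against each provider's docs.
OLLAMA_URL = "http://localhost:11434/v1/chat/completions"
OPENROUTER_URL = "https://openrouter.ai/api/v1/chat/completions"


def build_chat_request(url, model, prompt, api_key=None):
    """Build (but do not send) a chat completions POST request."""
    payload = {"model": model, "messages": [{"role": "user", "content": prompt}]}
    headers = {"Content-Type": "application/json"}
    if api_key:  # a local Ollama server does not need a key
        headers["Authorization"] = f"Bearer {api_key}"
    return urllib.request.Request(
        url, data=json.dumps(payload).encode("utf-8"), headers=headers, method="POST"
    )


def extract_reply(response_body):
    """Pull the assistant text out of an OpenAI-style response body."""
    return response_body["choices"][0]["message"]["content"]


def chat(url, model, prompt, api_key=None, timeout=120):
    """Send the request and return the model's reply as a string."""
    request = build_chat_request(url, model, prompt, api_key)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return extract_reply(json.load(response))


# Example call (needs a running Ollama server with the model already pulled):
# print(chat(OLLAMA_URL, "gpt-oss:20b", "Explain tail recursion in one sentence."))
```

Keeping request construction separate from sending makes it straightforward to switch between the local and hosted backends by changing only the URL, model name, and API key.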

# Example: Running GPT-OSS 20B locally with Ollama

ollama pull gpt-oss:20b

ollama run gpt-oss:20b

Conclusion: A Milestone for Open AI

OpenAI's release of the GPT-OSS models represents a significant contribution to the open-source AI ecosystem. While they may not yet match the capabilities of the company's most advanced proprietary models, they provide remarkable performance for their size and accessibility.

For developers, researchers, and organizations looking to build AI-powered applications without the constraints of API-based services, these models offer a compelling alternative that balances performance, cost, and freedom. As the first truly open release from OpenAI in years, GPT-OSS signals a positive direction for AI accessibility and transparency.

