Hugging Face recently released SmolLM3, a new open-source language model billed as better, faster, and cheaper than competitors like Qwen 3 and Gemma. With 3 billion parameters and a training run of 11 trillion tokens, SmolLM3 appears to pack substantial capability into a relatively small model. But how does it actually perform in practical application development? Let's find out with a hands-on performance test.

Understanding SmolLM3's Technical Specifications

Before diving into performance testing, it's important to understand what makes SmolLM3 noteworthy. The model boasts several impressive features:

- Compact size with only 3B parameters

- Multilingual capabilities

- Long context window of up to 128K tokens

- Trained on a massive 11 trillion tokens

The training approach is particularly interesting - Hugging Face has packed an enormous amount of training data into a relatively small parameter space, which theoretically should result in a highly efficient model that punches above its weight class.
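
To put "an enormous amount of training data" in perspective, the two figures quoted above can be turned into a tokens-per-parameter ratio. This is only back-of-the-envelope arithmetic on the numbers in this article, not a statement about how the model was actually trained:

```javascript
// Figures quoted in this article
const parameters = 3e9;       // 3 billion parameters
const trainingTokens = 11e12; // 11 trillion tokens

// Ratio of training tokens to parameters
const tokensPerParameter = trainingTokens / parameters;

console.log(Math.round(tokensPerParameter)); // 3667
```

In other words, the model saw a few thousand training tokens for every parameter it has.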

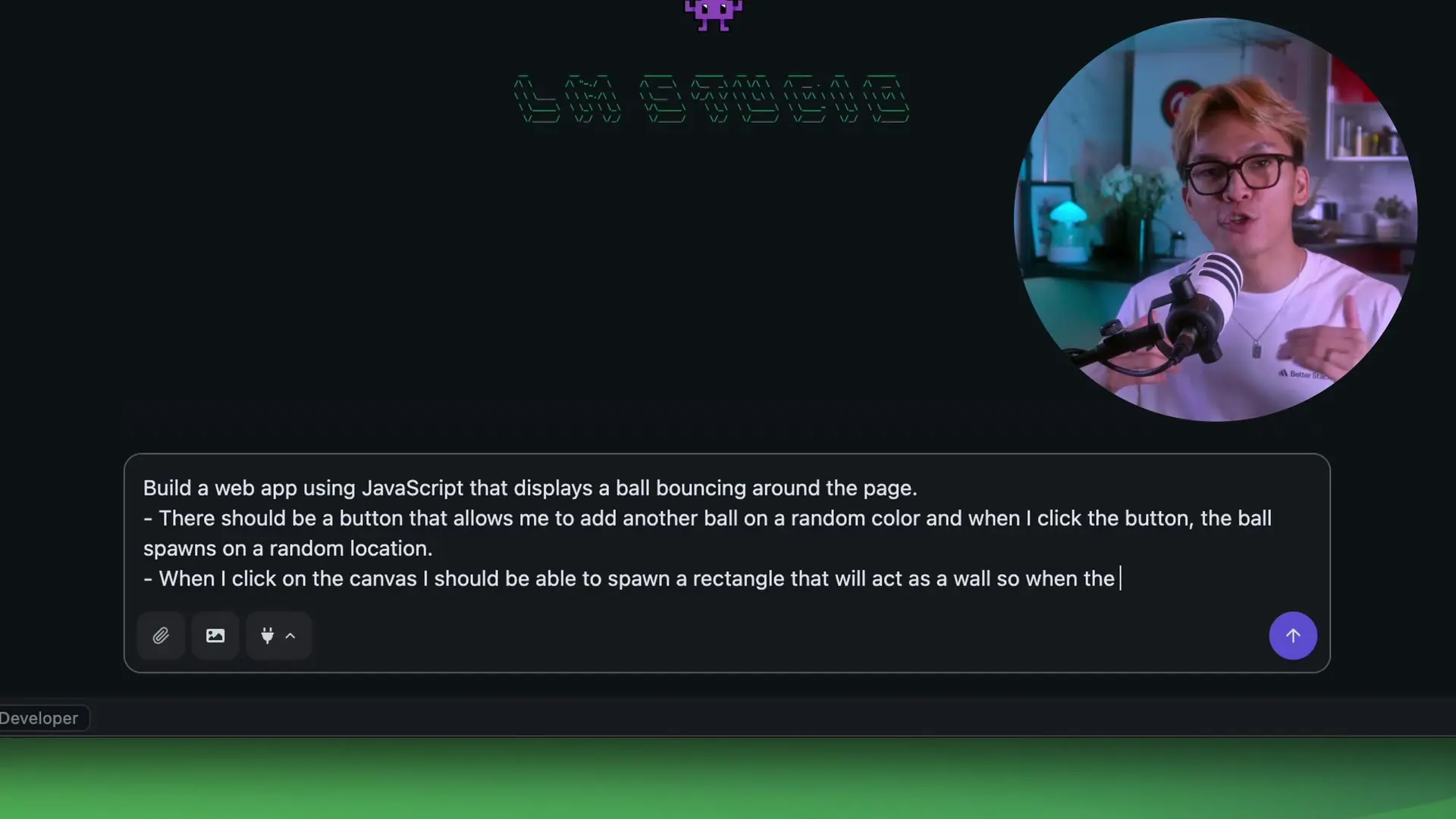

The Performance Test Setup: JavaScript Animation App

To evaluate SmolLM3 against its competitors, we designed a straightforward but practical test: asking each model to create a JavaScript application with specific interactive features. The application requirements included:

- A canvas with bouncing balls

- Ability to spawn new balls

- Functionality to create rectangles/bricks on mouse click

- Physics interaction where larger balls consume smaller ones and grow

This test would evaluate each model's ability to understand requirements, generate functional code, and handle iterative improvements - all essential skills for AI assistance in real-world development scenarios.
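
The last requirement is the least precisely specified, so here is a minimal sketch of one way to pin it down. The helper names `overlaps` and `consume` are hypothetical, and the area-conserving growth rule is an assumption made for illustration; the prompt only asked that the larger ball grow.

```javascript
// Hypothetical helpers; the names and the area-conserving rule are assumptions.

// Two circles overlap when the distance between their centres
// is less than the sum of their radii.
function overlaps(a, b) {
  return Math.hypot(a.x - b.x, a.y - b.y) < a.radius + b.radius;
}

// Returns the surviving ball after a collision, or null when nothing
// is consumed (no overlap, or both balls have the same radius).
function consume(a, b) {
  if (!overlaps(a, b) || a.radius === b.radius) return null;
  const [big, small] = a.radius > b.radius ? [a, b] : [b, a];
  // Area is proportional to radius squared, so the combined area
  // corresponds to the square root of the summed squares.
  return { ...big, radius: Math.sqrt(big.radius ** 2 + small.radius ** 2) };
}

const merged = consume({ x: 0, y: 0, radius: 4 }, { x: 5, y: 0, radius: 3 });
console.log(merged.radius); // 5
```

Keeping the rule in a pure function like this makes it easy to check a model's output against the requirement without opening a browser.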

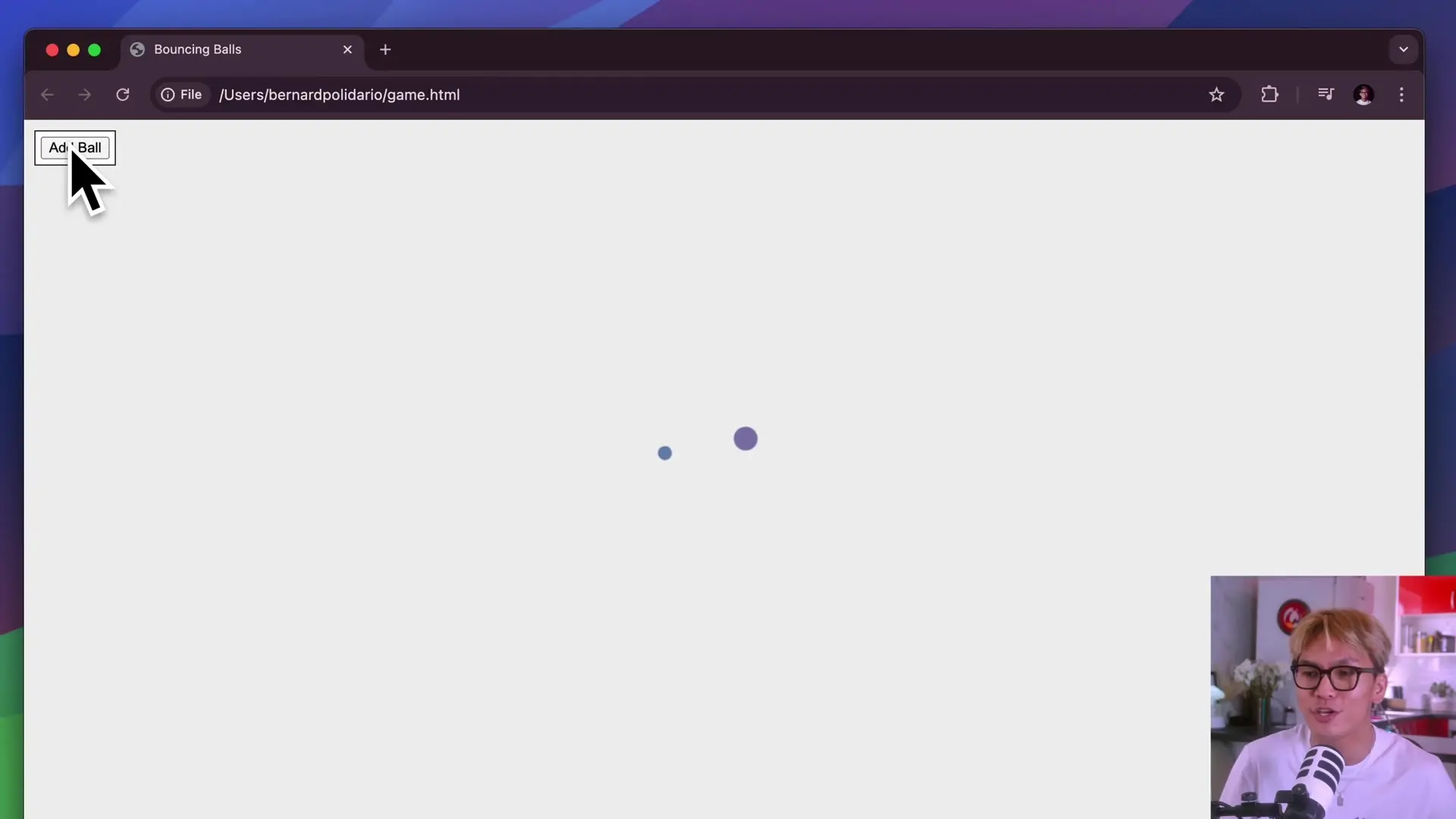

Performance Test Results: Gemma

Our first test subject was Gemma (12B parameters, though we initially intended to use the 34B version). Gemma performed admirably from the start:

- First attempt: Created a button and properly scaled canvas, though rectangle spawning didn't work

- Second attempt: Successfully implemented object spawning after a prompt refinement

- Third attempt: Successfully implemented the ball consumption and growth mechanics

Gemma showed strong capabilities in understanding the requirements and implementing the core functionality. It also demonstrated thoughtful implementation details, such as automatically scaling the canvas to 100% without being explicitly instructed to do so.

Performance Test Results: Qwen 3

Next, we tested Qwen 3 (34B parameters):

- First attempt: Successfully added boxes/bricks but missed the ball-adding functionality

- Second attempt: Added balls with different colors but all had the same radius

- Third attempt: Fixed the radius issue

- Final attempt: Implemented ball consumption mechanics and even added an unrequested but visually appealing background color change

Qwen 3 performed exceptionally well, even adding creative touches beyond the specified requirements. However, it did lose some functionality (the button to add more balls) in its final iteration - a common challenge in iterative development with language models.
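
Regressions like the missing button are easy to overlook when each iteration replaces the whole file. A rough way to catch them is to scan every new response for the features that already worked. The sketch below is an illustration only: the feature list and the `missingFeatures` helper are hypothetical, and the regular expressions are simple heuristics rather than a real HTML or JavaScript parser.

```javascript
// Hypothetical feature checks: each pattern is a rough heuristic.
const requiredFeatures = {
  canvas: /<canvas\b/i,
  addBallButton: /<button\b/i,
  clickHandler: /addEventListener\(\s*['"]click['"]/,
  animationLoop: /requestAnimationFrame\(/,
};

// Returns the names of features the generated source does not contain.
function missingFeatures(source) {
  return Object.entries(requiredFeatures)
    .filter(([, pattern]) => !pattern.test(source))
    .map(([name]) => name);
}

// Two made-up responses: the second one has dropped the button.
const previous = `<canvas id="c"></canvas><button id="add">Add ball</button>
<script>canvas.addEventListener('click', f); requestAnimationFrame(loop);</script>`;
const latest = `<canvas id="c"></canvas>
<script>canvas.addEventListener('click', f); requestAnimationFrame(loop);</script>`;

console.log(missingFeatures(previous)); // []
console.log(missingFeatures(latest));   // [ 'addBallButton' ]
```

A check like this only confirms that the relevant markup or calls are present, not that they work, so it complements rather than replaces running the page.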

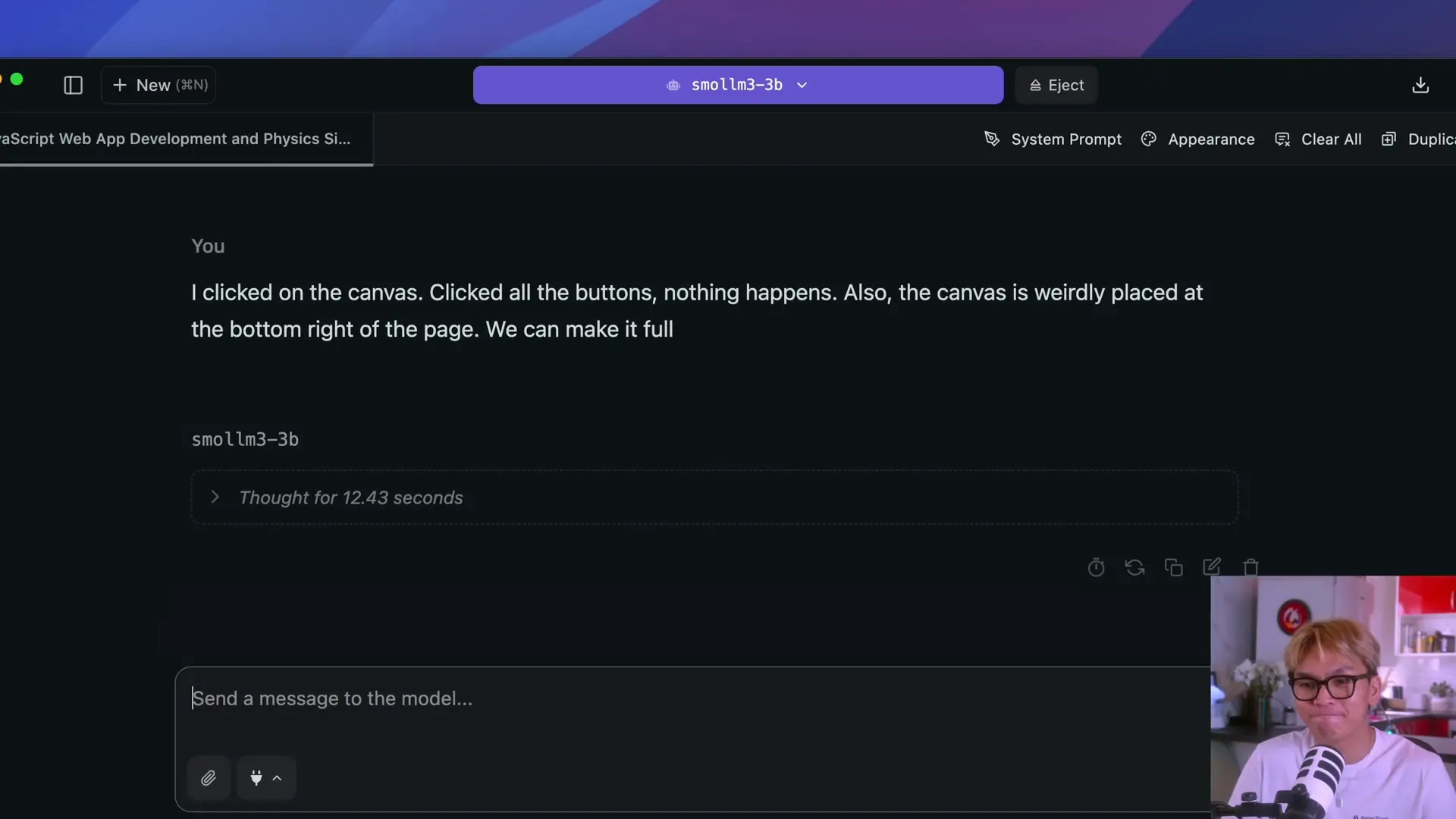

Performance Test Results: SmolLM3

Finally, we tested SmolLM3, the newest contender:

- First attempt: Generated code with syntax errors (mismatched HTML tags) and non-functional buttons

- Second attempt: Still produced non-working code despite feedback

- Final attempt: Failed to provide a working solution after multiple rounds of feedback

Despite its impressive training specifications, SmolLM3 struggled significantly with this practical development task. The model generated code with fundamental errors and was unable to correct these issues even with specific feedback.
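
Mismatched tags of the kind described above can be caught before the page is ever opened. The following is a minimal sketch of a stack-based tag check; `findTagMismatches` is a hypothetical helper, and it deliberately ignores comments, attribute values containing `>`, and script contents, so treat it as a quick smoke test rather than a validator.

```javascript
// Elements that never take a closing tag
const voidTags = new Set([
  'area', 'base', 'br', 'col', 'embed', 'hr', 'img',
  'input', 'link', 'meta', 'source', 'track', 'wbr',
]);

// Returns a list of problems; an empty list means the tags are balanced.
function findTagMismatches(html) {
  const problems = [];
  const stack = [];
  const tagPattern = /<(\/?)([a-zA-Z][a-zA-Z0-9]*)\b[^>]*?(\/?)>/g;

  for (const [, closing, rawName, selfClosing] of html.matchAll(tagPattern)) {
    const name = rawName.toLowerCase();
    if (voidTags.has(name) || selfClosing) continue;
    if (!closing) {
      stack.push(name); // opening tag: remember it until it is closed
      continue;
    }
    const open = stack.pop();
    if (open === undefined) {
      problems.push(`unexpected </${name}>`);
    } else if (open !== name) {
      problems.push(`expected </${open}> but found </${name}>`);
    }
  }

  // Anything still on the stack was opened but never closed.
  for (const name of stack) problems.push(`<${name}> is never closed`);
  return problems;
}

console.log(findTagMismatches('<div><button>Add</button><br></div>')); // []
console.log(findTagMismatches('<div><p>hi</div></p>')); // two problems reported
```

Running a check like this on each response makes it possible to give the model specific feedback, such as which tag was left open.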

Analysis: Why the Performance Test Results Matter

The results of our performance test were surprising. Despite SmolLM3's impressive training statistics and the claims of superior performance, it significantly underperformed both Gemma and Qwen 3 in this practical coding task.

This highlights an important consideration in model evaluation: training metrics and parameter counts don't always translate directly to real-world performance. While SmolLM3 may excel in benchmark evaluations, our hands-on testing revealed limitations in its ability to generate functional code for even moderately complex applications.

Code Sample: Successful Implementation

For reference, here's a simplified version of the type of code that Qwen 3 successfully generated for the bouncing balls application:

const canvas = document.getElementById('gameCanvas');
const ctx = canvas.getContext('2d');

// Set canvas to full window size
canvas.width = window.innerWidth;
canvas.height = window.innerHeight;

let balls = [];
const bricks = []; // stored so they can be redrawn after every clearRect

function Ball(x, y, radius, dx, dy, color) {
  this.x = x;
  this.y = y;
  this.radius = radius;
  this.dx = dx;
  this.dy = dy;
  this.color = color;
  this.consumed = false; // set when a larger ball absorbs this one

  this.draw = function () {
    ctx.beginPath();
    ctx.arc(this.x, this.y, this.radius, 0, Math.PI * 2);
    ctx.fillStyle = this.color;
    ctx.fill();
  };

  this.update = function () {
    // Bounce off walls; forcing the sign keeps a ball that has grown
    // into a wall from flipping direction on every frame
    if (this.x + this.radius > canvas.width) this.dx = -Math.abs(this.dx);
    if (this.x - this.radius < 0) this.dx = Math.abs(this.dx);
    if (this.y + this.radius > canvas.height) this.dy = -Math.abs(this.dy);
    if (this.y - this.radius < 0) this.dy = Math.abs(this.dy);

    this.x += this.dx;
    this.y += this.dy;

    // Check collision with other balls
    for (const other of balls) {
      if (other === this || other.consumed) continue;
      const dx = this.x - other.x;
      const dy = this.y - other.y;
      const distance = Math.sqrt(dx * dx + dy * dy);

      // Consume smaller ball; mark it instead of splicing the array
      // while it is being iterated
      if (distance < this.radius + other.radius && this.radius > other.radius) {
        this.radius += other.radius / 3;
        other.consumed = true;
      }
    }

    this.draw();
  };
}

function addBall() {
  const radius = Math.random() * 20 + 10;
  const x = Math.random() * (canvas.width - radius * 2) + radius;
  const y = Math.random() * (canvas.height - radius * 2) + radius;
  const dx = (Math.random() - 0.5) * 5;
  const dy = (Math.random() - 0.5) * 5;
  const colors = ['#FF5733', '#33FF57', '#3357FF', '#F3FF33', '#FF33F3'];
  const color = colors[Math.floor(Math.random() * colors.length)];
  balls.push(new Ball(x, y, radius, dx, dy, color));
}

// Add brick on click, centred on the pointer position within the canvas
canvas.addEventListener('click', function (event) {
  const brickWidth = 50;
  const brickHeight = 20;
  const rect = canvas.getBoundingClientRect();
  bricks.push({
    x: event.clientX - rect.left - brickWidth / 2,
    y: event.clientY - rect.top - brickHeight / 2,
    width: brickWidth,
    height: brickHeight,
  });
});

// Animation loop
function animate() {
  requestAnimationFrame(animate);
  ctx.clearRect(0, 0, canvas.width, canvas.height);

  // Redraw bricks every frame so clearRect does not erase them
  ctx.fillStyle = '#8B4513';
  for (const brick of bricks) {
    ctx.fillRect(brick.x, brick.y, brick.width, brick.height);
  }

  for (const ball of balls) {
    if (!ball.consumed) ball.update();
  }

  // Drop consumed balls once the frame has been processed
  balls = balls.filter((ball) => !ball.consumed);
}

// Initialize with a few balls
for (let i = 0; i < 5; i++) {
  addBall();
}

animate();

Conclusion: Implications for Model Selection

Our performance testing sample size is admittedly small - just one specific application scenario - but it provides valuable insights into how these models perform in real-world development tasks. The results suggest several important considerations:

- Parameter count doesn't always track practical performance: Gemma at 12B kept pace with Qwen 3 at 34B

- Training token count, while impressive for SmolLM3, doesn't guarantee functional code generation

- Established models like Qwen 3 and Gemma currently demonstrate superior capabilities for JavaScript application development

- Model selection should be based on specific use cases rather than general claims of superiority

While SmolLM3 may improve with further refinement, our current test results suggest that developers seeking AI assistance for practical coding tasks might be better served by more established models despite their larger size. The promise of a smaller, faster, and cheaper model remains appealing, but only when it can deliver comparable functional results.

As with all technology evaluations, it's worth conducting your own small test runs with these models on tasks specific to your development needs. The landscape of AI language models continues to evolve rapidly, and today's performance testing results may change as models improve and adapt.
