Google recently released Gemma 3N, an open model designed to run on low-powered devices like laptops and mobile phones. Google claims its Elo score approaches that of Claude 3.7, but there is good reason to be skeptical: how could a model built for consumer devices match models running on warehouse-scale computing infrastructure? This article tests Gemma 3N against other popular open models to see whether it lives up to the hype or is just another case of overhyped AI.

The Testing Framework: Three Coding Challenges

To evaluate these models fairly, we'll test them on three common coding challenges that require different skills:

- A bouncing ball in a hexagon (physics simulation)

- A snake game (interactive game development)

- A rotating ASCII Torus (3D rendering and animation)

The models being compared are Gemma 3N (Google's new offering), Llama 4 (Meta's latest open model), and DeepSeek R1. It's worth noting that the current Ollama version of Gemma 3N doesn't support tool use, which limits its capabilities somewhat.
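
For readers who want to repeat the comparison, one way to send the same prompt to each model is through Ollama's local HTTP API. The sketch below is an assumption about tooling, not a record of how these tests were run: the model tags in `MODELS` and the helper names `build_request` and `ask` are hypothetical, and the tags should be checked against `ollama list` on your own machine.

```python
import json
import urllib.request

# Ollama's default local endpoint for one-shot text generation.
OLLAMA_URL = "http://localhost:11434/api/generate"

# Assumed model tags; they may differ on your machine.
MODELS = ["gemma3n", "llama4", "deepseek-r1"]

def build_request(model, prompt):
    """Build a non-streaming generate request for a locally served model."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )

def ask(model, prompt, timeout=600):
    """Send the prompt and return the generated text (needs a running Ollama server)."""
    with urllib.request.urlopen(build_request(model, prompt), timeout=timeout) as resp:
        return json.loads(resp.read())["response"]
```

Calling `ask(model, prompt)` for each entry in `MODELS` with an identical prompt keeps the comparison fair, since every model sees exactly the same wording.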

Challenge 1: Bouncing Ball in a Hexagon

Starting with Llama 4, the model produced a hexagon with a ball on the first attempt, though the ball didn't move. On the second attempt, it created movement, but the physics were flawed—the ball occasionally clipped through the hexagon's walls instead of bouncing properly.

DeepSeek also produced a hexagon with a ball on the first attempt and even added a slider for adding more balls. However, there was minimal movement. The second attempt let the user add balls by clicking, but the collision physics remained problematic.

Gemma 3N, the supposedly revolutionary model, produced the most unexpected results. Instead of a hexagon with a bouncing ball, it generated an odd spinning shape that accelerated over time. Even after multiple attempts, it only managed to create a hexagon without a ball, and the hexagon's rotation was inconsistent.

Winner of Challenge 1: Llama 4, though its solution wasn't perfect.
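
The wall clipping seen in this challenge usually comes down to how the collision is resolved. A minimal sketch of one common approach, assuming a regular hexagon centred on the origin and a fixed time step: measure the ball's signed distance to each wall, push it back inside when it overlaps, and reflect the velocity about the wall's inward normal. The function names and parameters here are illustrative and are not taken from any of the models' outputs.

```python
import math

def hexagon_vertices(radius):
    """Vertices of a regular hexagon centred on the origin, counter-clockwise."""
    return [(radius * math.cos(math.pi / 3 * i), radius * math.sin(math.pi / 3 * i))
            for i in range(6)]

def step_ball(pos, vel, ball_r, verts, dt):
    """Advance the ball one time step and bounce it off any wall it overlaps."""
    x, y = pos[0] + vel[0] * dt, pos[1] + vel[1] * dt
    vx, vy = vel
    n = len(verts)
    for i in range(n):
        (ax, ay), (bx, by) = verts[i], verts[(i + 1) % n]
        ex, ey = bx - ax, by - ay
        length = math.hypot(ex, ey)
        # Inward unit normal: for a counter-clockwise polygon, rotate the edge by +90 degrees.
        nx, ny = -ey / length, ex / length
        # Signed distance from the ball centre to the wall (positive means inside).
        dist = (x - ax) * nx + (y - ay) * ny
        if dist < ball_r:
            # Push the ball back inside so it cannot end up beyond the wall.
            x += (ball_r - dist) * nx
            y += (ball_r - dist) * ny
            # Reflect the velocity only if the ball is moving towards the wall.
            vn = vx * nx + vy * ny
            if vn < 0:
                vx -= 2 * vn * nx
                vy -= 2 * vn * ny
    return (x, y), (vx, vy)
```

Correcting the position as well as the velocity is the important design choice: flipping the velocity alone can leave the ball overlapping the wall on the next frame, which is one way the clipping described above can arise.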

Challenge 2: Snake Game Development

For the snake game challenge, Llama 4 created a game on the first attempt, but it was completely unplayable. However, it easily fixed the issues on the second attempt, producing a functional game.

DeepSeek impressed by nailing the implementation on the first try, even adding a restart button for a better user experience. Of the three models, it showed the best grasp of game mechanics and user interface design in this challenge.

Gemma 3N did produce a snake game with score and high score features, but the game wasn't playable, and inspecting the code revealed errors. While Gemma fixed some of them on the second attempt, it still failed to produce a functional game.

Winner of Challenge 2: DeepSeek, with a clean, functional implementation on the first attempt.
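
For context on what a playable snake game has to get right, the core rules fit in a single update function. This is a rough sketch under assumed conventions (a grid of `width` by `height` cells, the snake stored head-first as a list of `(x, y)` tuples); it is not the code any of the models produced, and the name `step_snake` is hypothetical.

```python
import random

DIRECTIONS = {"up": (0, -1), "down": (0, 1), "left": (-1, 0), "right": (1, 0)}

def step_snake(snake, direction, food, width, height, rng=random):
    """Advance the game one tick.

    snake is a list of (x, y) cells with the head first.
    Returns (snake, food, alive, ate).
    """
    dx, dy = DIRECTIONS[direction]
    head = (snake[0][0] + dx, snake[0][1] + dy)
    ate = head == food
    # The tail cell is vacated this tick unless the snake grows.
    body = snake if ate else snake[:-1]
    hit_wall = not (0 <= head[0] < width and 0 <= head[1] < height)
    if hit_wall or head in body:
        return snake, food, False, False
    new_snake = [head] + body
    if ate:
        # Place new food on a free cell; None means the board is full.
        free = [(x, y) for x in range(width) for y in range(height)
                if (x, y) not in new_snake]
        food = rng.choice(free) if free else None
    return new_snake, food, True, ate
```

Keeping the rules in a pure function like this, separate from drawing and keyboard handling, makes it easy to check movement, growth, and collisions without running the game.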

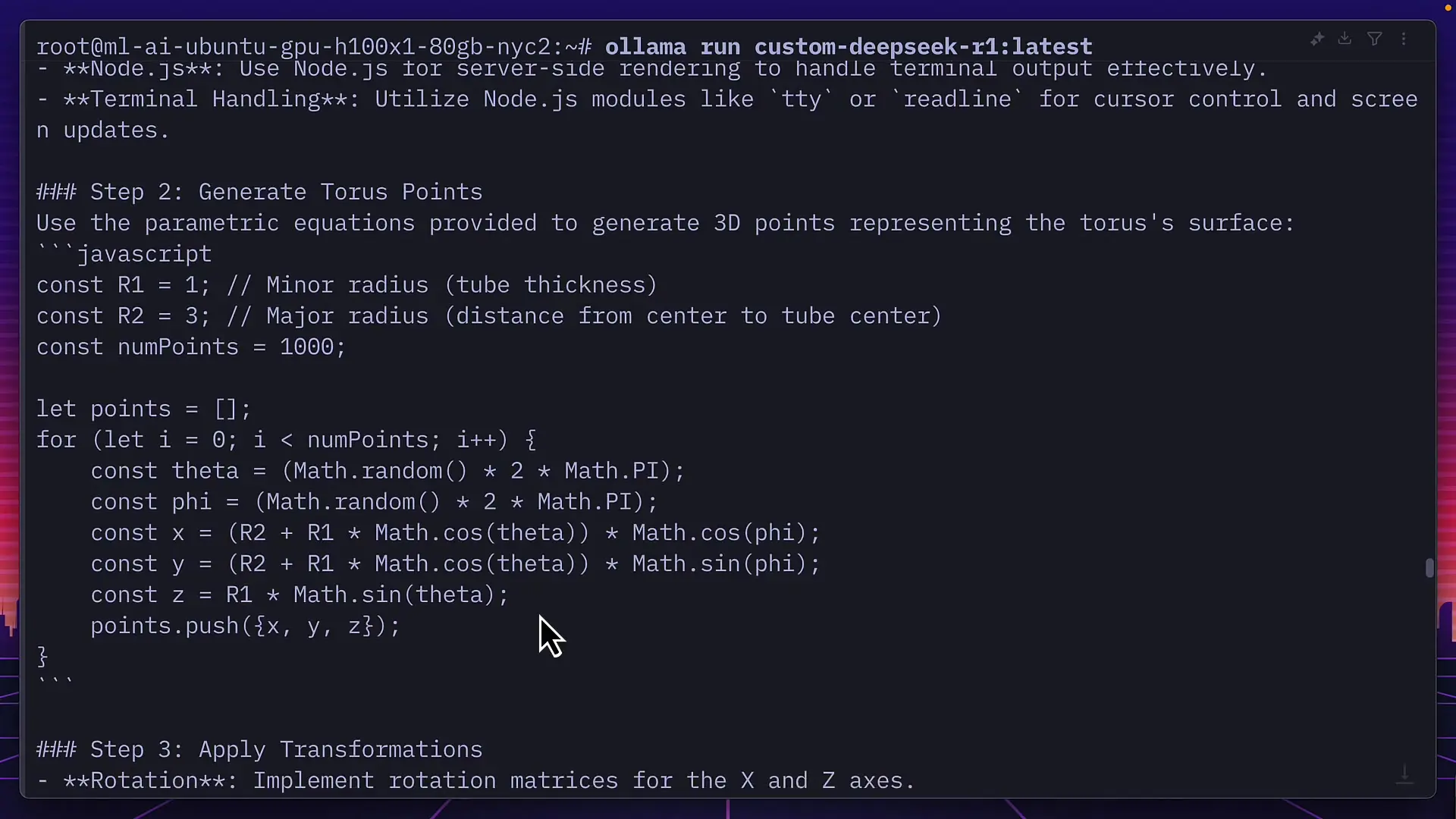

Challenge 3: Rotating ASCII Torus

The final challenge involved creating a rotating ASCII Torus, testing the models' abilities with 3D graphics and animation concepts.

Llama 4's first Python attempt produced an error, and the second attempt yielded a blank screen. When instructed to use JavaScript instead, it created a rotating shape—not exactly a torus, but at least it was animated.

DeepSeek's first attempt came with numerous errors. It attempted to use Three.js without providing CDN links or installation instructions. When informed of this issue, it offered steps to improve the code but still didn't provide the necessary CDN link that would have fixed the problem.

Gemma 3N's performance was particularly disappointing. Multiple Python attempts produced the same non-functional output. When asked to switch to JavaScript, it generated code that resulted in blank screens and console errors across five different attempts.

Winner of Challenge 3: Llama 4, though only by default, as it was the only model to produce something resembling the intended output.
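
To show what the task involves, here is a minimal Python sketch of one frame of a rotating ASCII torus. It follows the widely circulated donut.c approach: sample points on the torus surface, rotate them, project them with a perspective divide, keep the nearest point per character cell, and pick a shade from the surface lighting. The function name, default screen size, and step sizes are assumptions for illustration, not anything the tested models generated.

```python
import math

SHADES = ".,-~:;=!*#$@"  # dim to bright

def render_torus(a, b, width=80, height=24):
    """Return one ASCII frame of a torus rotated by angles a and b (radians)."""
    cells = [" "] * (width * height)
    depth = [0.0] * (width * height)   # stores 1/z, so larger means closer
    x_scale = 0.375 * width            # 30 columns at width 80
    y_scale = x_scale / 2              # terminal cells are roughly twice as tall as wide
    sin_a, cos_a = math.sin(a), math.cos(a)
    sin_b, cos_b = math.sin(b), math.cos(b)
    for i in range(90):                # theta sweeps the tube's cross-section
        theta = i * 0.07
        sin_t, cos_t = math.sin(theta), math.cos(theta)
        ring = cos_t + 2               # distance of this point from the torus axis
        for j in range(315):           # phi sweeps around the torus axis
            phi = j * 0.02
            sin_p, cos_p = math.sin(phi), math.cos(phi)
            # Viewer sits 5 units away; inv_z drives both projection and depth test.
            inv_z = 1 / (sin_p * ring * sin_a + sin_t * cos_a + 5)
            t = sin_p * ring * cos_a - sin_t * sin_a
            col = int(width / 2 + x_scale * inv_z * (cos_p * ring * cos_b - t * sin_b))
            row = int(height / 2 + y_scale * inv_z * (cos_p * ring * sin_b + t * cos_b))
            # Brightness from the rotated surface normal against a fixed light direction.
            lum = int(8 * ((sin_t * sin_a - sin_p * cos_t * cos_a) * cos_b
                           - sin_p * cos_t * sin_a - sin_t * cos_a
                           - cos_p * cos_t * sin_b))
            if 0 <= col < width and 0 <= row < height:
                idx = col + width * row
                if inv_z > depth[idx]:
                    depth[idx] = inv_z
                    cells[idx] = SHADES[max(lum, 0)]
    return "\n".join("".join(cells[r * width:(r + 1) * width]) for r in range(height))
```

To animate it, print `render_torus(a, b)` in a loop while increasing `a` and `b` slightly each frame and moving the cursor back to the top of the terminal between frames.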

The Reality Behind Overhyped AI Models for Coding

While Llama 4 won two of the three challenges, it's important to note that it requires significantly more computing resources than either Gemma 3N or DeepSeek R1. Even on a high-performance GPU server, Llama 4 demands substantial disk space and RAM to operate effectively.

This highlights a crucial reality about open models: judging by these tests, they're still far from ready to handle complex coding tasks efficiently. The current state of overhyped AI for coding presents developers with a difficult choice: either use models that can run on consumer hardware but produce subpar results, or invest in expensive computing infrastructure to run more capable models.

For those serious about using AI for coding assistance, finding a specialized open model trained specifically for coding tasks might be the best approach. However, running such models effectively can require multiple high-end machines, a solution that's impractical for most individual developers.

Conclusion: Managing Expectations for AI Coding Assistants

In these tests, Google's Gemma 3N fell far short of the capabilities suggested by its marketing. While it's designed to run on consumer devices, its performance on even basic coding tasks was inconsistent and often disappointing.

This pattern of overhyped AI capabilities isn't unique to Gemma 3N. The gap between marketing claims and actual performance remains a persistent issue in the AI space, particularly for models targeting developers. The reality is that truly capable AI coding assistants still require significant computing resources, making them inaccessible for many everyday developers.

For now, developers should approach claims about revolutionary AI coding assistants with healthy skepticism. The technology is advancing rapidly, but we're still some distance from having truly capable, resource-efficient AI coding partners that can reliably handle complex programming tasks on consumer hardware.

As the field evolves, the most practical approach may be to use cloud-based solutions or to carefully select specialized models for specific coding tasks rather than expecting a single, lightweight model to excel across all programming challenges.
