The cybersecurity community is facing a new and unexpected challenge: a flood of AI-generated bug reports that describe vulnerabilities that don't actually exist. This emerging problem threatens to overwhelm security teams, waste limited resources, and potentially allow real vulnerabilities to slip through undetected.

The HTTP/3 Vulnerability That Never Existed

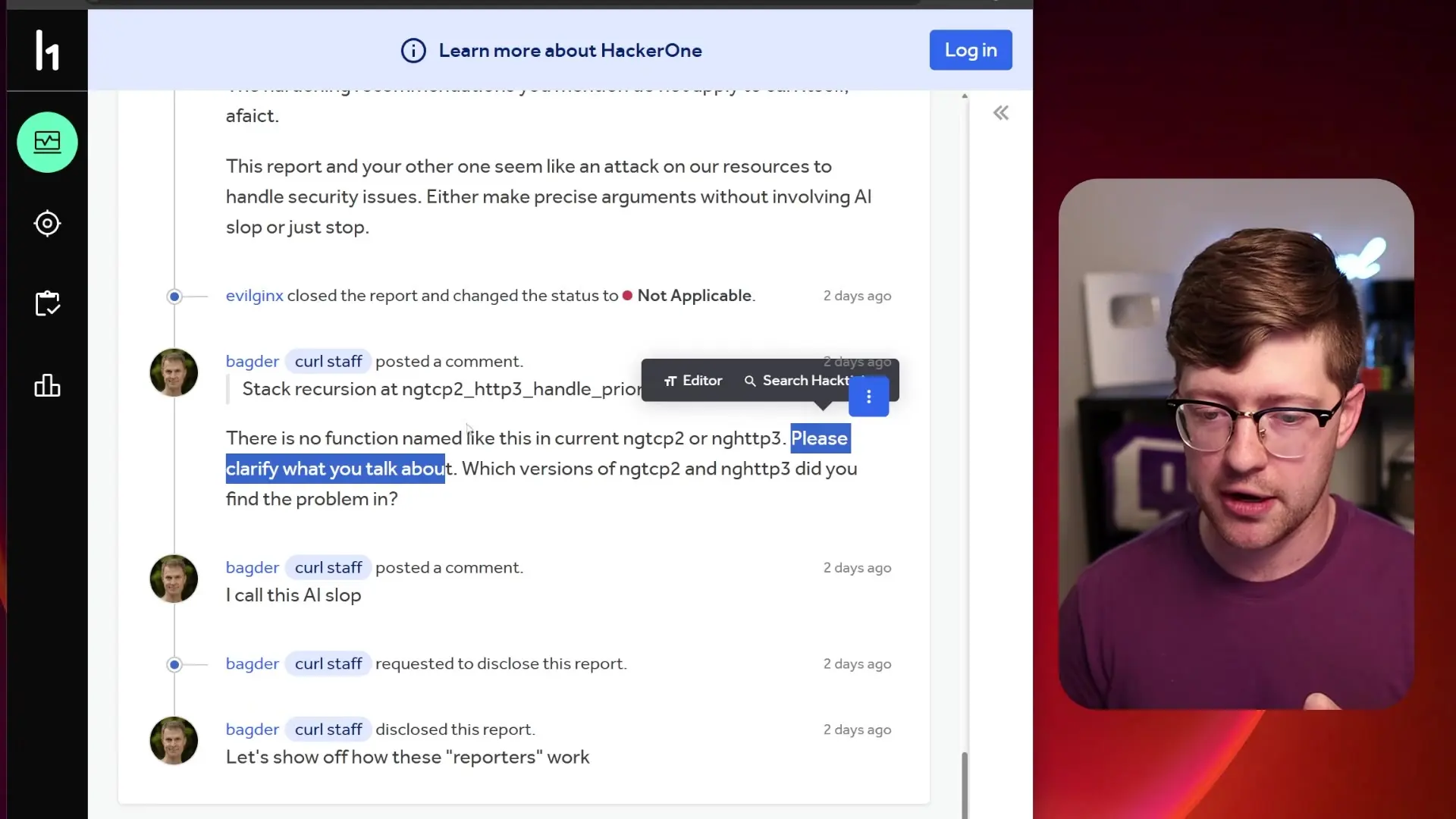

A recent incident on HackerOne, a popular bug bounty platform, perfectly illustrates this growing problem. A researcher using the handle "Evil Gen X" submitted what appeared to be a legitimate security report detailing a "novel exploit leveraging stream dependency cycles in HTTP/3 resulting in memory corruption and potential denial of service" in the widely used curl library.

At first glance, the report seemed credible. It included detailed steps to reproduce the environment, explanations of the exploit method, and even crash data showing that the return address register (R15) was compromised—suggesting potential code execution capabilities. For curl, such critical vulnerabilities can earn bounties of up to $9,200, creating significant financial incentives for researchers.

However, something wasn't right. When Daniel Stenberg, curl's maintainer and primary developer, reviewed the report, he quickly identified a fatal flaw: the supposedly vulnerable function "ngtcp2_http3_handle_priority_frame" simply doesn't exist in the codebase.

AI Hallucinations Creating Security Mirages

The report wasn't just wrong—it appeared to be completely fabricated by an AI system. The writing style shifted from natural human communication to robotic, generic text as the report progressed. When questioned about implementation details, the responses became increasingly vague and theoretical rather than specific to curl's actual codebase.

This phenomenon, which Stenberg termed "AI slop," represents a dangerous trend: AI systems hallucinating security vulnerabilities that don't exist, complete with fabricated function names, non-existent code paths, and imaginary crash scenarios.

The Denial of Service Against Security Teams

The implications of this trend extend far beyond wasted time on a single report. Security teams already face significant resource constraints—there simply aren't enough security engineers to properly review all legitimate vulnerability reports. When these teams must also filter through AI-generated false reports, real security issues risk being overlooked.

This creates what could be called a "meta denial-of-service attack" against the security community itself. By flooding security teams with convincing but false reports, bad actors could potentially:

- Exhaust limited security resources on wild goose chases

- Reduce confidence in the bug reporting ecosystem

- Create "boy who cried wolf" fatigue that causes real vulnerabilities to be dismissed

- Hide actual exploits among numerous false reports

- Test security teams' ability to distinguish real from fake reports before launching actual attacks

New Policies to Combat AI Slop

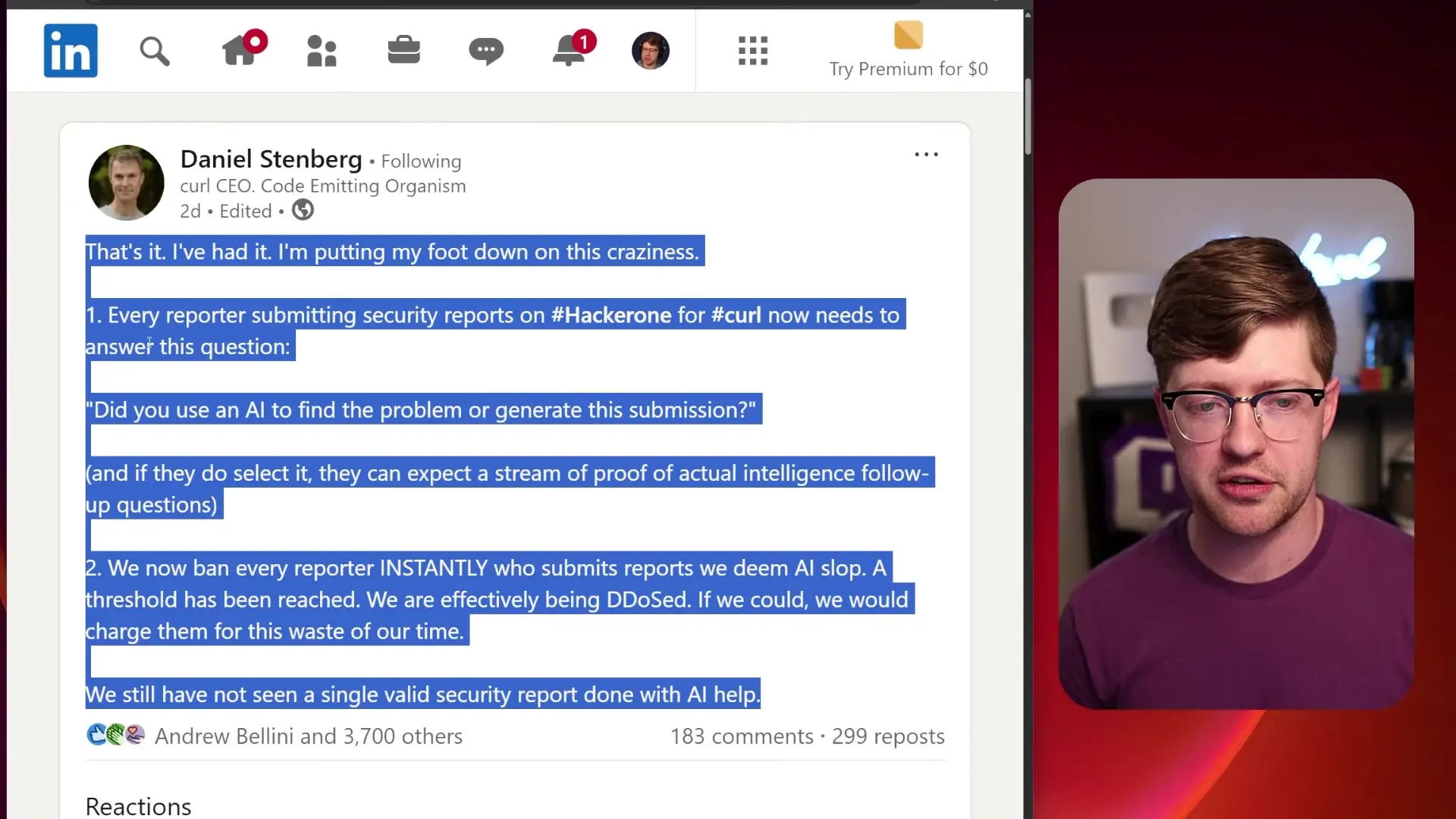

In response to this growing problem, Stenberg has implemented new policies for curl's bug bounty program on HackerOne. Researchers must now disclose whether they used AI to find vulnerabilities or generate their submissions. Those who did will face additional scrutiny and follow-up questions to prove the legitimacy of their findings.

Most notably, Stenberg announced that researchers submitting what his team deems to be "AI slop" will be immediately banned from the program. This zero-tolerance approach reflects the severity of the threat that AI-generated false reports pose to the security ecosystem.

The Perverse Incentives of Bug Bounty Programs

This situation highlights a fundamental tension in the bug bounty ecosystem. While these programs create positive incentives for researchers to find and responsibly disclose vulnerabilities, they also create financial motivation to submit as many reports as possible, hoping some will pay out.

With AI tools making it easier to generate convincing-looking reports at scale, the temptation to flood platforms with AI-generated submissions in hopes of occasional success becomes stronger. A single payout near curl's $9,200 maximum makes up for dozens of rejected reports, creating a lottery-like dynamic that could encourage quantity over quality.
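The lottery-like dynamic can be made concrete with a little expected-value arithmetic. The sketch below is illustrative only: the $9,200 figure is the top bounty mentioned earlier, while the acceptance probability and the cost of producing a report are hypothetical numbers chosen for the example, not measured data.

```python
def expected_profit_per_report(payout, success_probability, cost_per_report):
    """Average profit of one submission: chance-weighted payout minus the cost to produce it."""
    return payout * success_probability - cost_per_report


def break_even_probability(payout, cost_per_report):
    """Smallest acceptance probability at which submitting stops losing money."""
    return cost_per_report / payout


# Hypothetical inputs: only the payout comes from the article.
TOP_BOUNTY = 9200          # dollars, top curl bounty cited above
AI_REPORT_COST = 5         # dollars of effort per generated report (assumption)
ACCEPTANCE_RATE = 0.01     # 1 in 100 reports accepted (assumption)

profit = expected_profit_per_report(TOP_BOUNTY, ACCEPTANCE_RATE, AI_REPORT_COST)
threshold = break_even_probability(TOP_BOUNTY, AI_REPORT_COST)
print(f"expected profit per report: ${profit:.2f}")
print(f"break-even acceptance rate: {threshold:.4%}")
```

The point of the sketch is the shape of the incentive rather than the specific numbers: the cheaper a report is to produce, the lower the acceptance rate at which mass submission still pays, which is why program-level penalties such as bans change the calculation.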

Malicious Implications: Testing the Waters

There's also a more sinister possibility: that some AI-generated reports are tests by malicious actors to gauge how well security teams can distinguish real from fake vulnerabilities. By submitting fabricated reports, attackers could learn which types of submissions receive serious attention and which are quickly dismissed.

This information could then be used to craft real exploits that mimic patterns security teams are likely to overlook, or to time attacks during periods when teams are overwhelmed with false reports.

The Current State and Future of AI in Security Research

According to security professionals involved in this case, they have yet to see a single valid security report created with AI assistance. While AI excels at processing large amounts of data, it currently lacks the contextual understanding and technical precision needed to identify genuine security vulnerabilities.

However, this will likely change as AI systems improve. Eventually, AI may become valuable for security research by analyzing large codebases and identifying potential vulnerabilities that humans might miss. The challenge will be distinguishing between these legitimate AI-assisted discoveries and hallucinated vulnerabilities.

Best Practices for Security Report Validation

For security teams facing this new challenge, implementing robust validation processes is essential. Here are some recommended approaches for distinguishing legitimate reports from AI slop:

- Require specific technical details that can be verified against the actual codebase

- Ask follow-up questions that would be difficult for AI to answer without understanding the specific implementation

- Request proof-of-concept exploits that can be reproduced in controlled environments

- Implement progressive disclosure requirements where researchers must demonstrate increasing levels of understanding

- Verify that function names, code paths, and error messages match those in the actual software

- Look for stylistic inconsistencies that might indicate AI-generated content

- Maintain a database of known researchers with established credibility
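The check on function names in the list above lends itself to simple automation. Below is a minimal sketch, assuming the report is available as plain text and the project source is checked out locally. The helper names (`extract_symbols`, `find_missing_symbols`) and the underscore-based heuristic for spotting C-style identifiers are illustrative assumptions, not part of any existing triage tool.

```python
import re
from pathlib import Path

# Heuristic (assumption): treat any word containing an underscore as a
# C-style identifier worth checking, e.g. some_function_name.
IDENT = re.compile(r"\b[A-Za-z_][A-Za-z0-9_]*_[A-Za-z0-9_]+\b")


def extract_symbols(report_text):
    """Return the sorted, de-duplicated identifier-like tokens cited in a report."""
    return sorted(set(IDENT.findall(report_text)))


def find_missing_symbols(report_text, source_root, suffixes=(".c", ".h")):
    """Return cited identifiers that appear in no source file under source_root."""
    remaining = set(extract_symbols(report_text))
    for path in Path(source_root).rglob("*"):
        if not remaining:
            break  # every cited symbol has been located
        if path.is_file() and path.suffix in suffixes:
            text = path.read_text(encoding="utf-8", errors="ignore")
            found = {
                symbol
                for symbol in remaining
                if re.search(rf"\b{re.escape(symbol)}\b", text)
            }
            remaining -= found
    return sorted(remaining)
```

Calling `find_missing_symbols(report_text, "path/to/checkout")` returns the cited names that could not be found anywhere in the tree. A non-empty result is a prompt for a closer look rather than proof of fabrication: the symbol may live in a dependency, a different branch, or generated code, so it is worth scanning those trees as well before drawing conclusions.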

Conclusion: A New Challenge for Cybersecurity

The emergence of AI-generated false security reports represents a significant challenge for the cybersecurity community. As AI tools become more accessible and convincing, distinguishing between legitimate security concerns and hallucinated vulnerabilities will require new processes, tools, and skills.

This situation highlights the complex relationship between AI and cybersecurity—while AI has the potential to enhance security research in the future, its current limitations and misapplications are creating new vulnerabilities in our security ecosystems. For now, human expertise, critical thinking, and technical verification remain the best defenses against this growing problem of AI slop.
